Bioinformatics is an interdisciplinary field of science that develops methods and software tools for understanding biological data, especially when the data sets are large and complex. Bioinformatics uses biology, chemistry, physics, computer science, data science, computer programming, information engineering, mathematics and statistics to analyze and interpret biological data. The process of analyzing and interpreting data is sometimes referred to as computational biology; however, the distinction between the two terms is often disputed. To some, the term ''computational biology'' refers to building and using models of biological systems.

Computational, statistical, and computer programming techniques have been used for computer simulation analyses of biological queries. They include reusable, task-specific analysis "pipelines", particularly in the field of genomics, for example for the identification of genes and single nucleotide polymorphisms (SNPs). These pipelines are used to better understand the genetic basis of disease, unique adaptations, desirable properties (especially in agricultural species), or differences between populations. Bioinformatics also includes proteomics, which tries to understand the organizational principles within nucleic acid and protein sequences.

Image and signal processing allow extraction of useful results from large amounts of raw data. In the field of genetics, bioinformatics aids in sequencing and annotating genomes and their observed mutations. Bioinformatics includes text mining of biological literature and the development of biological and gene ontologies to organize and query biological data. It also plays a role in the analysis of gene and protein expression and regulation. Bioinformatics tools aid in comparing, analyzing and interpreting genetic and genomic data and more generally in the understanding of evolutionary aspects of molecular biology. At a more integrative level, it helps analyze and catalogue the biological pathways and networks that are an important part of systems biology. In structural biology, it aids in the simulation and modeling of DNA, RNA, and proteins, as well as biomolecular interactions.

History

The term ''bioinformatics'' was coined by Paulien Hogeweg and Ben Hesper in 1970 to refer to the study of information processes in biotic systems. This definition placed bioinformatics as a field parallel to biochemistry (the study of chemical processes in biological systems).

Bioinformatics and computational biology involved the analysis of biological data, particularly DNA, RNA, and protein sequences. The field of bioinformatics experienced explosive growth starting in the mid-1990s, driven largely by the Human Genome Project and by rapid advances in DNA sequencing technology.

Analyzing biological data to produce meaningful information involves writing and running software programs that use algorithms from graph theory, artificial intelligence, soft computing, data mining, image processing, and computer simulation. The algorithms in turn depend on theoretical foundations such as discrete mathematics, control theory, system theory, information theory, and statistics.

Sequences

Sequencing has become dramatically faster and cheaper since the completion of the Human Genome Project: some labs can sequence over 100,000 billion bases each year, and a full genome can be sequenced for $1,000 or less.

Computers became essential in molecular biology when protein sequences became available after Frederick Sanger determined the sequence of insulin in the early 1950s. Comparing multiple sequences manually turned out to be impractical. Margaret Oakley Dayhoff, a pioneer in the field, compiled one of the first protein sequence databases, initially published as books, and developed methods of sequence alignment and molecular evolution. Another early contributor to bioinformatics was Elvin A. Kabat, who pioneered biological sequence analysis in 1970 with his comprehensive volumes of antibody sequences released online with Tai Te Wu between 1980 and 1991.

In the 1970s, new sequencing techniques were applied to bacteriophages MS2 and Φ-X174, and the extended nucleotide sequences were then parsed with informational and statistical algorithms. These studies illustrated that well-known features, such as the coding segments and the triplet code, are revealed in straightforward statistical analyses, and they provided proof of concept that bioinformatics would be insightful.

Goals

In order to study how normal cellular activities are altered in different disease states, raw biological data must be combined to form a comprehensive picture of these activities. Therefore, the field of bioinformatics has evolved such that the most pressing task now involves the analysis and interpretation of various types of data, including nucleotide and amino acid sequences, protein domains, and protein structures.

Important sub-disciplines within bioinformatics and computational biology include:

* Development and implementation of computer programs to efficiently access, manage, and use various types of information.

* Development of new mathematical algorithms and statistical measures to assess relationships among members of large data sets. For example, there are methods to locate a gene within a sequence, to predict protein structure and/or function, and to cluster protein sequences into families of related sequences.

The primary goal of bioinformatics is to increase the understanding of biological processes. What sets it apart from other approaches is its focus on developing and applying computationally intensive techniques to achieve this goal. Examples include:

pattern recognition

Pattern recognition is the task of assigning a class to an observation based on patterns extracted from data. While similar, pattern recognition (PR) is not to be confused with pattern machines (PM) which may possess PR capabilities but their p ...

,

data mining,

machine learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of Computational statistics, statistical algorithms that can learn from data and generalise to unseen data, and thus perform Task ( ...

algorithms, and

visualization. Major research efforts in the field include

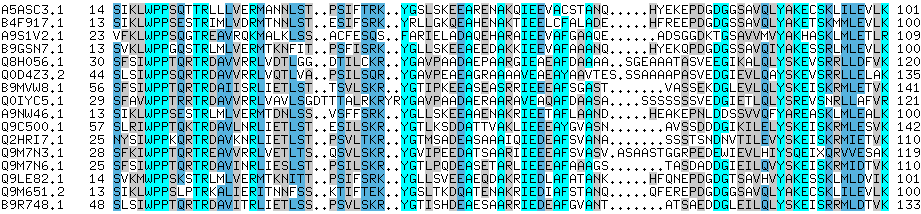

sequence alignment,

gene finding,

genome assembly,

drug design,

drug discovery

In the fields of medicine, biotechnology, and pharmacology, drug discovery is the process by which new candidate medications are discovered.

Historically, drugs were discovered by identifying the active ingredient from traditional remedies or ...

,

protein structure alignment,

protein structure prediction

Protein structure prediction is the inference of the three-dimensional structure of a protein from its amino acid sequence—that is, the prediction of its Protein secondary structure, secondary and Protein tertiary structure, tertiary structure ...

, prediction of

gene expression and

protein–protein interactions,

genome-wide association studies, the modeling of

evolution

Evolution is the change in the heritable Phenotypic trait, characteristics of biological populations over successive generations. It occurs when evolutionary processes such as natural selection and genetic drift act on genetic variation, re ...

and

cell division/mitosis.

Bioinformatics entails the creation and advancement of databases, algorithms, computational and statistical techniques, and theory to solve formal and practical problems arising from the management and analysis of biological data.

Over the past few decades, rapid developments in genomic and other molecular research technologies, combined with advances in information technologies, have produced a tremendous amount of information related to molecular biology. Bioinformatics is the name given to the mathematical and computing approaches used to glean understanding of biological processes from this information.

Common activities in bioinformatics include mapping and analyzing DNA and protein sequences, aligning DNA and protein sequences to compare them, and creating and viewing 3-D models of protein structures.
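Pairwise alignment is classically solved with dynamic programming. The sketch below is a minimal Needleman-Wunsch global alignment in Python; the scoring values (match +1, mismatch -1, linear gap -2) are illustrative assumptions rather than parameters of any particular tool, and practical aligners typically use substitution matrices and affine gap penalties.

```python
def needleman_wunsch(a: str, b: str, match: int = 1, mismatch: int = -1, gap: int = -2):
    """Global alignment of a and b with a linear gap penalty.

    Returns (score, aligned_a, aligned_b); gaps are shown as '-'.
    """
    n, m = len(a), len(b)

    def sub(x: str, y: str) -> int:
        return match if x == y else mismatch

    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(
                score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]),  # align two residues
                score[i - 1][j] + gap,  # gap in b
                score[i][j - 1] + gap,  # gap in a
            )

    # Trace back from the bottom-right cell to recover one optimal alignment
    out_a, out_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]):
            out_a.append(a[i - 1])
            out_b.append(b[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append("-")
            i -= 1
        else:
            out_a.append("-")
            out_b.append(b[j - 1])
            j -= 1
    return score[n][m], "".join(reversed(out_a)), "".join(reversed(out_b))


print(needleman_wunsch("ACGT", "AGT"))  # (1, 'ACGT', 'A-GT')
```

The quadratic table is what makes exhaustive alignment expensive for large databases, which is one motivation for heuristic search tools.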

Sequence analysis

Since the bacteriophage Φ-X174 was sequenced in 1977, the DNA sequences of thousands of organisms have been decoded and stored in databases. This sequence information is analyzed to determine genes that encode proteins, RNA genes, regulatory sequences, structural motifs, and repetitive sequences. A comparison of genes within a species or between different species can show similarities between protein functions, or relations between species (the use of molecular systematics to construct phylogenetic trees). With the growing amount of data, it long ago became impractical to analyze DNA sequences manually.

Computer programs such as BLAST are used routinely to search sequences; as of 2008, the sequences searched came from more than 260,000 organisms and contained over 190 billion nucleotides.
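BLAST is a heuristic: rather than aligning a query against every database sequence in full, it first looks for short word matches ("seeds") and only extends promising ones. The Python sketch below illustrates only that seeding idea with an exact k-mer index; it is not BLAST itself and omits seed extension, scoring matrices and E-value statistics. The function names and the toy database are hypothetical.

```python
from collections import defaultdict


def build_kmer_index(db: dict, k: int) -> dict:
    """Map each length-k word to the (sequence id, offset) pairs where it occurs."""
    index = defaultdict(list)
    for seq_id, seq in db.items():
        for pos in range(len(seq) - k + 1):
            index[seq[pos:pos + k]].append((seq_id, pos))
    return dict(index)


def seed_hits(query: str, index: dict, k: int) -> dict:
    """Count exact k-mer seed matches between the query and each database sequence."""
    counts = defaultdict(int)
    for qpos in range(len(query) - k + 1):
        for seq_id, _ in index.get(query[qpos:qpos + k], []):
            counts[seq_id] += 1
    return dict(counts)


db = {"seq1": "ACGTACGTGG", "seq2": "TTTTCCCCAA"}  # toy database
index = build_kmer_index(db, k=4)
print(seed_hits("ACGTAC", index, k=4))  # {'seq1': 4}
```

Sequences with many seed hits would be the candidates passed on to a full alignment step; the word length k trades sensitivity against speed.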

DNA sequencing

Before sequences can be analyzed, they are obtained from a data storage bank, such as GenBank.

DNA sequencing is still a non-trivial problem, as the raw data may be noisy or affected by weak signals. Algorithms have been developed for base calling for the various experimental approaches to DNA sequencing.
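As a toy illustration of base calling, the sketch below assumes each sequencing cycle yields four fluorescence intensities (one per base, in the order A, C, G, T), calls the brightest channel, and converts an error estimate p into a Phred-style quality score, Q = -10 log10(p). The error model (the share of signal outside the brightest channel) is an assumption made for illustration; production base callers model effects such as channel cross-talk and phasing.

```python
import math

BASES = "ACGT"


def call_bases(cycles: list) -> tuple:
    """Call one base per cycle from (A, C, G, T) intensities.

    Returns (sequence, qualities). Toy error model (an assumption): the
    probability that a call is wrong is the share of signal not in the
    brightest channel.
    """
    seq, quals = [], []
    for intensities in cycles:
        total = sum(intensities)
        if total <= 0:
            seq.append("N")  # no signal: ambiguous call
            quals.append(0)
            continue
        best = max(range(4), key=lambda i: intensities[i])
        p_err = max(1.0 - intensities[best] / total, 1e-4)  # cap quality at Q40
        seq.append(BASES[best])
        quals.append(round(-10 * math.log10(p_err)))
    return "".join(seq), quals


print(call_bases([(0.9, 0.05, 0.03, 0.02), (0.1, 0.1, 0.7, 0.1)]))  # ('AG', [10, 5])
```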

Sequence assembly

Most DNA sequencing techniques produce short fragments of sequence that need to be assembled to obtain complete gene or genome sequences. The shotgun sequencing technique (used by The Institute for Genomic Research (TIGR) to sequence the first bacterial genome, ''Haemophilus influenzae'') generates the sequences of many thousands of small DNA fragments (ranging from 35 to 900 nucleotides long, depending on the sequencing technology). The ends of these fragments overlap and, when aligned properly by a genome assembly program, can be used to reconstruct the complete genome. Shotgun sequencing yields sequence data quickly, but the task of assembling the fragments can be quite complicated for larger genomes. For a genome as large as the human genome, it may take many days of CPU time on large-memory, multiprocessor computers to assemble the fragments, and the resulting assembly usually contains numerous gaps that must be filled in later. Shotgun sequencing is the method of choice for virtually all genomes sequenced (rather than chain-termination or chemical degradation methods), and genome assembly algorithms are a critical area of bioinformatics research.
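The overlap idea can be illustrated with a greedy assembler that repeatedly merges the two fragments with the longest suffix-prefix overlap. This Python sketch assumes error-free reads from a single strand and ignores repeats, sequencing errors and reverse complements; practical assemblers typically rely on overlap graphs or de Bruijn graphs instead. The read set is a made-up example.

```python
def overlap(a: str, b: str, min_len: int) -> int:
    """Length of the longest suffix of a equal to a prefix of b (0 if shorter than min_len)."""
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0


def greedy_assemble(reads: list, min_len: int = 3) -> list:
    """Repeatedly merge the pair of fragments with the longest overlap."""
    contigs = list(dict.fromkeys(reads))  # drop exact duplicate reads, keep order
    while True:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            return contigs  # no remaining overlaps: these are the final contigs
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)] + [merged]


reads = ["ATTAGACCTG", "CCTGCCGGAA", "AGACCTGCCG", "GCCGGAATAC"]
print(greedy_assemble(reads))  # ['ATTAGACCTGCCGGAATAC']
```

Greedy merging can go wrong when repeats create misleading overlaps, which is one reason why large genomes leave gaps and ambiguities for later finishing.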

Genome annotation

In genomics, annotation refers to the process of marking the start and stop regions of genes and other biological features in a DNA sequence. Many genomes are too large to be annotated by hand. As the rate of sequencing exceeds the rate of genome annotation, genome annotation has become the new bottleneck in bioinformatics.

Genome annotation can be classified into three levels: the nucleotide, protein, and process levels.

Gene finding is a chief aspect of nucleotide-level annotation. For complex genomes, a combination of ab initio gene prediction and sequence comparison with expressed sequence databases and other organisms can be successful. Nucleotide-level annotation also allows the integration of genome sequence with other genetic and physical maps of the genome.
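The simplest form of ab initio gene finding is scanning for open reading frames (ORFs): stretches that begin with a start codon and run to an in-frame stop codon. The sketch below assumes the standard genetic code (start ATG; stops TAA, TAG, TGA), scans only the forward strand, and uses an arbitrary minimum length; dedicated gene finders such as GeneMark go much further by applying statistical models of coding sequence.

```python
STOP_CODONS = {"TAA", "TAG", "TGA"}


def find_orfs(seq: str, min_codons: int = 30) -> list:
    """Return (frame, start, end) for ATG...stop ORFs on the forward strand.

    `end` is exclusive and includes the stop codon.
    """
    seq = seq.upper()
    orfs = []
    for frame in range(3):
        start = None
        for pos in range(frame, len(seq) - 2, 3):
            codon = seq[pos:pos + 3]
            if start is None and codon == "ATG":
                start = pos  # first in-frame start codon opens an ORF
            elif start is not None and codon in STOP_CODONS:
                if (pos + 3 - start) // 3 >= min_codons:
                    orfs.append((frame, start, pos + 3))
                start = None  # look for the next start codon in this frame
    return orfs


print(find_orfs("CCATGAAATTTTAGCC", min_codons=2))  # [(2, 2, 14)]
```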

The principal aim of protein-level annotation is to assign function to the protein products of the genome. Databases of protein sequences and functional domains and motifs are used for this type of annotation. About half of the predicted proteins in a new genome sequence tend to have no obvious function.

Understanding the function of genes and their products in the context of cellular and organismal physiology is the goal of process-level annotation. An obstacle to process-level annotation has been the inconsistency of terms used by different model systems. The Gene Ontology Consortium is helping to solve this problem.

The first description of a comprehensive annotation system was published in 1995 by The Institute for Genomic Research, which performed the first complete sequencing and analysis of the genome of a free-living (non-symbiotic) organism, the bacterium ''Haemophilus influenzae''.

The system identifies the genes encoding all proteins, transfer RNAs, and ribosomal RNAs in order to make initial functional assignments. The GeneMark program trained to find protein-coding genes in ''Haemophilus influenzae'' is constantly changing and improving.

To pursue the goals that the Human Genome Project left unfinished after its completion in 2003, the ENCODE project was developed by the National Human Genome Research Institute. This project is a collaborative data collection of the functional elements of the human genome that uses next-generation DNA-sequencing technologies and genomic tiling arrays, technologies able to automatically generate large amounts of data at a dramatically reduced per-base cost but with the same accuracy (base call error) and fidelity (assembly error).

Gene function prediction

While genome annotation is primarily based on sequence similarity (and thus homology), other properties of sequences can be used to predict the function of genes. In fact, most ''gene'' function prediction methods focus on ''protein'' sequences as they are more informative and more feature-rich. For instance, the distribution of hydrophobic amino acids predicts transmembrane segments in proteins. However, protein function prediction can also use external information such as gene (or protein) expression data, protein structure, or protein-protein interactions.
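The hydrophobicity idea can be made concrete with a sliding-window hydropathy profile. This Python sketch uses the Kyte-Doolittle hydropathy scale; the 19-residue window and the 1.6 threshold follow values commonly quoted for spotting candidate transmembrane helices, but they should be treated as adjustable assumptions, and modern predictors use considerably more sophisticated models. The test sequence is hypothetical.

```python
# Kyte-Doolittle hydropathy values for the 20 standard amino acids
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5,
    "Q": -3.5, "E": -3.5, "G": -0.4, "H": -3.2, "I": 4.5,
    "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8, "P": -1.6,
    "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}


def hydropathy_profile(protein: str, window: int = 19) -> list:
    """Mean hydropathy of every run of `window` consecutive residues."""
    values = [KYTE_DOOLITTLE[aa] for aa in protein.upper()]  # KeyError on non-standard residues
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]


def candidate_tm_windows(protein: str, window: int = 19, threshold: float = 1.6) -> list:
    """Start positions of windows whose mean hydropathy exceeds the threshold."""
    return [i for i, h in enumerate(hydropathy_profile(protein, window)) if h > threshold]


toy = "DDDDD" + "L" * 19 + "DDDDD"  # hypothetical hydrophobic stretch flanked by charged residues
print(candidate_tm_windows(toy))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
```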

Computational evolutionary biology

Evolutionary biology is the study of the origin and descent of species, as well as their change over time.

Informatics has assisted evolutionary biologists by enabling researchers to:

* trace the evolution of a large number of organisms by measuring changes in their DNA, rather than through physical taxonomy or physiological observations alone,

* compare entire genomes, which permits the study of more complex evolutionary events, such as gene duplication, horizontal gene transfer, and the prediction of factors important in bacterial speciation,

* build complex computational population genetics models to predict the outcome of the system over time,

* track and share information on an increasingly large number of species and organisms.

Comparative genomics

The core of comparative genome analysis is the establishment of the correspondence between genes (orthology analysis) or other genomic features in different organisms. Intergenomic maps are made to trace the evolutionary processes responsible for the divergence of two genomes. A multitude of evolutionary events acting at various organizational levels shape genome evolution. At the lowest level, point mutations affect individual nucleotides. At a higher level, large chromosomal segments undergo duplication, lateral transfer, inversion, transposition, deletion and insertion. Entire genomes are involved in processes of hybridization, polyploidization and endosymbiosis that lead to rapid speciation. The complexity of genome evolution poses many exciting challenges to developers of mathematical models and algorithms, who have recourse to a spectrum of algorithmic, statistical and mathematical techniques, ranging from exact, heuristic, fixed-parameter and approximation algorithms for problems based on parsimony models to Markov chain Monte Carlo algorithms for Bayesian analysis of problems based on probabilistic models.
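A common first step in orthology analysis is the reciprocal best hit (RBH) heuristic: gene a in one genome and gene b in another are called putative orthologs if each is the other's highest-scoring match. The Python sketch below assumes pairwise similarity scores (for example, alignment bit scores) have already been computed in both directions; the gene names and scores are invented, and more careful orthology methods exist.

```python
def best_hits(scores: dict) -> dict:
    """For each query gene, the target gene with the highest score."""
    best = {}
    for (query, target), s in scores.items():
        if query not in best or s > best[query][1]:
            best[query] = (target, s)
    return {q: t for q, (t, _) in best.items()}


def reciprocal_best_hits(a_vs_b: dict, b_vs_a: dict) -> list:
    """Gene pairs (a, b) where b is a's best hit and a is b's best hit."""
    a_best = best_hits(a_vs_b)
    b_best = best_hits(b_vs_a)
    return sorted((a, b) for a, b in a_best.items() if b_best.get(b) == a)


# Hypothetical scores: keys are (query gene, target gene)
a_vs_b = {("a1", "b1"): 90, ("a1", "b2"): 40, ("a2", "b2"): 70, ("a2", "b1"): 60, ("a3", "b1"): 80}
b_vs_a = {("b1", "a1"): 88, ("b1", "a2"): 50, ("b2", "a2"): 65, ("b2", "a1"): 30}
print(reciprocal_best_hits(a_vs_b, b_vs_a))  # [('a1', 'b1'), ('a2', 'b2')]
```

Here a3's best hit is b1, but b1's best hit is a1, so a3 is not paired; RBH deliberately favors one-to-one relationships and can miss orthologs after gene duplication.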

Many of these studies are based on the detection of sequence homology to assign sequences to protein families.

Pan genomics

Pan genomics is a concept introduced in 2005 by Tettelin and Medini. The pan genome is the complete gene repertoire of a particular monophyletic taxonomic group. Although initially applied to closely related strains of a species, it can be applied in a larger context, such as a genus or phylum. It is divided into two parts: the core genome, a set of genes common to all the genomes under study (often housekeeping genes vital for survival), and the dispensable or flexible genome, a set of genes present in one or some, but not all, of the genomes under study. The bioinformatics tool BPGA can be used to characterize the pan genome of bacterial species.
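Once gene presence data are available, the core/dispensable split reduces to set operations. This Python sketch assumes genes have already been clustered into families shared across genomes (in practice the harder step); the strain names and presence data are hypothetical.

```python
def pan_genome(genomes: dict) -> tuple:
    """Return (pan, core, dispensable) gene-family sets for a group of genomes."""
    gene_sets = list(genomes.values())
    pan = set().union(*gene_sets)  # every family seen in any genome
    core = set.intersection(*gene_sets) if gene_sets else set()  # families in all genomes
    dispensable = pan - core  # families missing from at least one genome
    return pan, core, dispensable


strains = {
    "strain1": {"dnaA", "gyrB", "recA", "famX"},
    "strain2": {"dnaA", "gyrB", "recA", "famY"},
    "strain3": {"dnaA", "gyrB", "recA", "famX", "famZ"},
}
pan, core, dispensable = pan_genome(strains)
print(sorted(core), sorted(dispensable))
# ['dnaA', 'gyrB', 'recA'] ['famX', 'famY', 'famZ']
```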

Genetics of disease

As of 2013, efficient high-throughput next-generation sequencing technology allows the identification of the causes of many different human disorders. Simple Mendelian inheritance has been observed for over 3,000 disorders identified in the Online Mendelian Inheritance in Man database, but complex diseases are more difficult to dissect. Association studies have found many individual genetic regions that are each weakly associated with complex diseases (such as infertility and Alzheimer's disease), rather than a single cause.

Many challenges remain in using genes for diagnosis and treatment, such as not knowing which genes are important, or how stable the gene choices provided by an algorithm are.

Genome-wide association studies have successfully identified thousands of common genetic variants for complex diseases and traits; however, these common variants only explain a small fraction of heritability.
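At its simplest, a genome-wide association study tests each variant separately for a difference in allele frequency between cases and controls. The Python sketch below shows one such per-variant test, a Pearson chi-square test with one degree of freedom on a 2×2 table of allele counts; the counts are invented, and real GWAS pipelines typically use regression models with covariates.

```python
import math


def allelic_chi_square(case_alt: int, case_ref: int, ctrl_alt: int, ctrl_ref: int) -> tuple:
    """Pearson chi-square test (1 degree of freedom) on allele counts.

    Rows are cases and controls; columns are alternate and reference alleles.
    Returns (chi2, p_value). Assumes no row or column total is zero.
    """
    table = [[case_alt, case_ref], [ctrl_alt, ctrl_ref]]
    total = case_alt + case_ref + ctrl_alt + ctrl_ref
    row_totals = [case_alt + case_ref, ctrl_alt + ctrl_ref]
    col_totals = [case_alt + ctrl_alt, case_ref + ctrl_ref]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (table[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


chi2, p = allelic_chi_square(60, 40, 40, 60)
print(round(chi2, 3), f"{p:.2e}")  # 8.0 4.68e-03
```

Because such a test is repeated for millions of variants, a single nominally small p-value like this one is far from genome-wide significance; a threshold of 5 × 10^-8 is widely used.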

Rare variants may account for some of the missing heritability. Large-scale whole genome sequencing studies have rapidly sequenced millions of whole genomes, and such studies have identified hundreds of millions of rare variants.

Functional annotations predict the effect or function of a genetic variant and help to prioritize rare functional variants, and incorporating these annotations can effectively boost the power of rare-variant association analysis in whole genome sequencing studies. Some tools have been developed to provide all-in-one rare variant association analysis for whole-genome sequencing data, including integration of genotype data and their functional annotations, association analysis, result summary and visualization. Meta-analysis of whole genome sequencing studies provides an attractive solution to the problem of collecting large sample sizes for discovering rare variants associated with complex phenotypes.

Analysis of mutations in cancer

In cancer, the genomes of affected cells are rearranged in complex or unpredictable ways. In addition to single-nucleotide polymorphism arrays identifying point mutations that cause cancer, oligonucleotide microarrays can be used to identify chromosomal gains and losses (called comparative genomic hybridization). These detection methods generate terabytes of data per experiment. The data is often found to contain considerable variability, or noise, and thus hidden Markov model and change-point analysis methods are being developed to infer real copy number changes.
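To illustrate change-point analysis, the Python sketch below applies binary segmentation to a series of copy-number measurements (for example, log2 ratios along a chromosome): it finds the single split that most reduces the squared error around segment means, keeps it if the reduction exceeds a threshold, and recurses on each side. The threshold `min_gain` and the minimum segment size are hypothetical tuning parameters, and this is a simplified stand-in rather than a hidden Markov model.

```python
def best_split(values: list, min_size: int) -> tuple:
    """Index k and squared-error reduction of the best split values[:k] | values[k:]."""
    n = len(values)
    total = sum(values)
    best_k, best_gain = -1, 0.0
    left_sum = sum(values[:min_size - 1])
    for k in range(min_size, n - min_size + 1):
        left_sum += values[k - 1]
        right_sum = total - left_sum
        # Reduction in squared error from fitting two segment means instead of one
        gain = left_sum ** 2 / k + right_sum ** 2 / (n - k) - total ** 2 / n
        if gain > best_gain:
            best_k, best_gain = k, gain
    return best_k, best_gain


def segment(values: list, min_gain: float, min_size: int = 2, offset: int = 0) -> list:
    """Recursive binary segmentation; returns sorted change-point positions."""
    k, gain = best_split(values, min_size)
    if k < 0 or gain <= min_gain:
        return []
    left = segment(values[:k], min_gain, min_size, offset)
    right = segment(values[k:], min_gain, min_size, offset + k)
    return left + [offset + k] + right


# Toy profile: normal copy number, a gained region, then normal again
profile = [0.0] * 10 + [1.0] * 10 + [0.0] * 10
print(segment(profile, min_gain=1.0))  # [10, 20]
```

With noisy real data, the choice of `min_gain` controls the trade-off between missing true copy number changes and reporting spurious ones.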

Two important principles can be used to identify cancer by mutations in the exome. First, cancer is a disease of accumulated somatic mutations in genes. Second, cancer contains driver mutations, which need to be distinguished from passenger mutations.

Further improvements in bioinformatics could allow types of cancer to be classified by analyzing the cancer-driving mutations in their genomes. Furthermore, it may become possible to track patients as the disease progresses by sequencing cancer samples. Another type of data that requires novel informatics development is the analysis of lesions found to be recurrent among many tumors.

Gene and protein expression

Analysis of gene expression

The expression of many genes can be determined by measuring mRNA levels with multiple techniques including microarrays, expressed sequence tag (EST) sequencing, serial analysis of gene expression (SAGE) tag sequencing, massively parallel signature sequencing (MPSS), RNA-Seq, also known as "Whole Transcriptome Shotgun Sequencing" (WTSS), or various applications of multiplexed in-situ hybridization. All of these techniques are extremely noise-prone and/or subject to bias in the biological measurement, and a major research area in computational biology involves developing statistical tools to separate signal from noise in high-throughput gene expression studies. Such studies are often used to determine the genes implicated in a disorder: one might compare microarray data from cancerous epithelial cells to data from non-cancerous cells to determine the transcripts that are up-regulated and down-regulated in a particular population of cancer cells.
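Comparing tumor and normal samples gene by gene can be sketched as a log2 fold change plus a Welch t statistic. The thresholds in `call_direction` and the pseudocount are illustrative assumptions. A real analysis would go further:

* convert the statistics to p-values;
* correct for multiple testing across thousands of genes;
* usually rely on a dedicated method, such as count-based models for RNA-Seq or moderated statistics for microarrays.

```python
import numpy as np


def differential_expression(tumor, normal, pseudocount=1.0):
    """Per-gene log2 fold change (tumor vs normal) and Welch's t statistic.

    tumor, normal: (n_genes, n_samples) arrays of non-negative expression values.
    """
    t = np.log2(np.asarray(tumor, dtype=float) + pseudocount)
    n = np.log2(np.asarray(normal, dtype=float) + pseudocount)
    log2_fc = t.mean(axis=1) - n.mean(axis=1)
    # Welch's t uses unpooled variances, so the two groups may have different spreads
    se = np.sqrt(t.var(axis=1, ddof=1) / t.shape[1] + n.var(axis=1, ddof=1) / n.shape[1])
    with np.errstate(divide="ignore", invalid="ignore"):
        t_stat = log2_fc / se
    return log2_fc, t_stat


def call_direction(log2_fc, t_stat, fc_cutoff=1.0, t_cutoff=3.0):
    """Label each gene 'up', 'down' or 'unchanged' (NaN statistics count as unchanged)."""
    labels = np.full(log2_fc.shape, "unchanged", dtype=object)
    labels[(log2_fc >= fc_cutoff) & (t_stat >= t_cutoff)] = "up"
    labels[(log2_fc <= -fc_cutoff) & (t_stat <= -t_cutoff)] = "down"
    return labels


# Toy data: three genes, three samples per group
tumor = [[15, 17, 15], [1, 1, 3], [7, 8, 9]]
normal = [[1, 1, 3], [15, 17, 15], [7, 8, 9]]
fc, t = differential_expression(tumor, normal)
print(call_direction(fc, t))
```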

Analysis of protein expression

Protein microarrays and high-throughput (HT) mass spectrometry (MS) can provide a snapshot of the proteins present in a biological sample. The former approach faces problems similar to those of microarrays targeted at mRNA. The latter involves two problems: matching large amounts of mass data against predicted masses from protein sequence databases, and the complicated statistical analysis of samples when multiple incomplete peptides from each protein are detected. Cellular protein localization in a tissue context can be achieved through affinity proteomics displayed as spatial data based on immunohistochemistry and tissue microarrays.
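Matching mass data against sequence databases can be illustrated with a simplified form of peptide mass fingerprinting. Each database protein is digested in silico, the monoisotopic mass of every peptide is computed, and observed masses that agree within a tolerance are reported. This sketch assumes:

* neutral (uncharged) peptide masses;
* the simplified trypsin rule, which cleaves after K or R unless the next residue is P;
* no missed cleavages and no modifications.

The protein name, sequence and observed mass in the example are made up.

```python
# Monoisotopic residue masses in daltons for the 20 standard amino acids
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276, "V": 99.06841,
    "T": 101.04768, "C": 103.00919, "L": 113.08406, "I": 113.08406, "N": 114.04293,
    "D": 115.02694, "Q": 128.05858, "K": 128.09496, "E": 129.04259, "M": 131.04049,
    "H": 137.05891, "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.01056  # added once per peptide for the free N- and C-termini


def peptide_mass(peptide):
    return sum(RESIDUE_MASS[aa] for aa in peptide) + WATER


def tryptic_digest(protein):
    """Cleave after K or R unless followed by P (simplified rule, no missed cleavages)."""
    peptides, start = [], 0
    for i, aa in enumerate(protein):
        if aa in "KR" and (i + 1 == len(protein) or protein[i + 1] != "P"):
            peptides.append(protein[start:i + 1])
            start = i + 1
    if start < len(protein):
        peptides.append(protein[start:])
    return peptides


def match_masses(observed, database, tol_ppm=10.0):
    """Return (observed mass, protein name, peptide) for every match within tol_ppm."""
    hits = []
    for name, protein in database.items():
        for peptide in tryptic_digest(protein):
            theoretical = peptide_mass(peptide)
            for mass in observed:
                if abs(mass - theoretical) / theoretical * 1e6 <= tol_ppm:
                    hits.append((mass, name, peptide))
    return hits


database = {"protein_1": "AGKARMKPARGK"}  # hypothetical sequence
print(tryptic_digest(database["protein_1"]))
print(match_masses([245.1488], database))
```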

Analysis of regulation

Gene regulation is a complex process in which a signal, for example an extracellular signal such as a hormone, eventually leads to an increase or decrease in the activity of one or more proteins. Bioinformatics techniques have been applied to explore various steps in this process.

For example, gene expression can be regulated by nearby elements in the genome. Promoter analysis involves the identification and study of sequence motifs in the DNA surrounding the protein-coding region of a gene. These motifs influence the extent to which that region is transcribed into mRNA.
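Promoter motifs are often represented as position weight matrices (PWMs). The sketch below builds a log-odds PWM from a few aligned binding sites and then scores every window of a sequence. It assumes a uniform background of 0.25 per base and a pseudocount of 0.5. The example sites are illustrative TATA-box-like strings, not curated data. Only the given strand is scanned; a practical scan would also check the reverse complement.

```python
import math


def build_pwm(sites, pseudocount=0.5, background=0.25):
    """Log-odds position weight matrix from equal-length aligned binding sites."""
    pwm = []
    for pos in range(len(sites[0])):
        column = [site[pos] for site in sites]
        scores = {}
        for base in "ACGT":
            freq = (column.count(base) + pseudocount) / (len(sites) + 4 * pseudocount)
            scores[base] = math.log2(freq / background)
        pwm.append(scores)
    return pwm


def scan(sequence, pwm, threshold):
    """Return (start, score) for every window scoring >= threshold.

    The sequence is assumed to contain only uppercase A, C, G and T.
    """
    width = len(pwm)
    hits = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        score = sum(pwm[i][base] for i, base in enumerate(window))
        if score >= threshold:
            hits.append((start, round(score, 2)))
    return hits


pwm = build_pwm(["TATAAA", "TATAAA", "TATATA"])
print(scan("GGCGTATAAAGGC", pwm, threshold=5.0))
```

Log-odds scores are additive across positions, so a window's score measures how much better the motif model explains it than the background does.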

Enhancer elements far away from the promoter can also regulate gene expression through three-dimensional looping interactions. These interactions can be determined by bioinformatic analysis of chromosome conformation capture experiments.

Expression data can be used to infer gene regulation: one might compare microarray data from a wide variety of states of an organism to form hypotheses about the genes involved in each state. In a single-cell organism, one might compare stages of the cell cycle, along with various stress conditions (heat shock, starvation, etc.).

Clustering algorithms can then be applied to expression data to determine which genes are co-expressed. For example, the upstream regions (promoters) of co-expressed genes can be searched for over-represented regulatory elements. Examples of clustering algorithms applied in gene clustering are k-means clustering, self-organizing maps (SOMs), hierarchical clustering, and consensus clustering methods.
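Taking the first method listed as an example, Lloyd's k-means algorithm can be applied to genes after each expression profile is standardized. Standardizing makes genes with the same up-and-down pattern cluster together regardless of their overall expression level. This is a minimal sketch: the random initialization, iteration limit and toy profiles are assumptions. In practice the number of clusters k must be chosen, and results should be compared across several random starts.

```python
import numpy as np


def kmeans(profiles, k, n_iter=100, seed=0):
    """Lloyd's k-means on expression profiles (rows = genes, columns = conditions)."""
    x = np.asarray(profiles, dtype=float)
    # z-score each gene so clusters reflect the shape of the profile, not its magnitude
    x = (x - x.mean(axis=1, keepdims=True)) / (x.std(axis=1, keepdims=True) + 1e-12)
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]
    labels = np.zeros(len(x), dtype=int)
    for _ in range(n_iter):
        # Assign each gene to its nearest center (squared Euclidean distance)
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its genes; keep it in place if its cluster is empty
        new_centers = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


profiles = [
    [1, 2, 3, 4], [2, 4, 6, 8], [10, 20, 30, 40],   # rising across four conditions
    [4, 3, 2, 1], [8, 6, 4, 2], [40, 30, 20, 10],   # falling across four conditions
]
print(kmeans(profiles, k=2))
```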

Analysis of cellular organization

Several approaches have been developed to analyze the location of organelles, genes, proteins, and other components within cells. A Gene Ontology category, ''cellular component'', has been devised to capture subcellular localization in many biological databases.

Microscopy and image analysis

Microscopic images make it possible to locate organelles as well as molecules, which may be the source of abnormalities in diseases.

Protein localization

Finding the location of proteins allows us to predict what they do, so localization is one input to protein function prediction. For instance, if a protein is found in the nucleus, it may be involved in gene regulation or splicing. By contrast, if a protein is found in mitochondria, it may be involved in respiration or other metabolic processes. Well-developed resources exist for predicting protein subcellular localization, including protein subcellular location databases and prediction tools.

Nuclear organization of chromatin

Data from high-throughput chromosome conformation capture experiments, such as Hi-C and ChIA-PET, can provide information on the three-dimensional structure and nuclear organization of chromatin. Bioinformatic challenges in this field include partitioning the genome into domains, such as Topologically Associating Domains (TADs), that are organised together in three-dimensional space.
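One widely used idea for finding TAD boundaries is the insulation score. A square is slid along the diagonal of the Hi-C contact matrix and the contacts crossing each bin are averaged. Local minima are called as boundaries, because few contacts cross the border between two domains. The sketch below is a simplified version that assumes:

* a symmetric, already normalized contact matrix;
* a fixed window of 3 bins;
* strict local minima as boundaries.

The toy matrix contains two blocks of 10 bins each.

```python
import numpy as np


def insulation_profile(contacts, window=3):
    """Mean contact frequency between the `window` bins before bin i and bins i..i+window-1.

    contacts: symmetric (n_bins, n_bins) Hi-C contact matrix, assumed already normalized.
    Bins too close to the matrix edge get NaN.
    """
    m = np.asarray(contacts, dtype=float)
    n = m.shape[0]
    scores = np.full(n, np.nan)
    for i in range(window, n - window + 1):
        scores[i] = m[i - window:i, i:i + window].mean()
    return scores


def call_boundaries(scores):
    """Bins that are strict local minima of the insulation profile."""
    s = np.asarray(scores, dtype=float)
    return [i for i in range(1, len(s) - 1)
            if np.all(np.isfinite(s[i - 1:i + 2])) and s[i] < s[i - 1] and s[i] < s[i + 1]]


# Toy matrix: two domains of 10 bins, dense inside each domain, sparse between them
contacts = np.full((20, 20), 0.1)
contacts[:10, :10] = 1.0
contacts[10:, 10:] = 1.0
print(call_boundaries(insulation_profile(contacts)))
```

Here a boundary index marks the first bin of a new domain.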

Structural bioinformatics

Finding the structure of proteins is an important application of bioinformatics. The Critical Assessment of Protein Structure Prediction (CASP) is an open competition. Research groups worldwide submit models of proteins whose structures have been determined experimentally but not yet made public, and the models are then evaluated against the experimental structures.

Amino acid sequence

The linear amino acid sequence of a protein is called the primary structure. The primary structure can be easily determined from the sequence of codons on the DNA gene that codes for it. In most proteins, the primary structure uniquely determines the three-dimensional structure of a protein in its native environment. An exception is the misfolded prion protein involved in bovine spongiform encephalopathy. The three-dimensional structure is linked to the function of the protein. Additional structural information includes the ''secondary'', ''tertiary'' and ''quaternary'' structure. A viable general solution to the prediction of the function of a protein remains an open problem. Most efforts have so far been directed towards heuristics that work most of the time.
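Reading the primary structure off the codons amounts to translation with the genetic code. The sketch below uses the standard genetic code (NCBI translation table 1) and stops at the first stop codon by default. It assumes an in-frame, intron-free coding sequence containing only A, C, G and T (or U). The example sequence is arbitrary, not a real gene.

```python
BASES = "TCAG"
# Standard genetic code (NCBI table 1), ordered by first, second, then third base in TCAG order
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    first + second + third: AMINO_ACIDS[16 * i + 4 * j + k]
    for i, first in enumerate(BASES)
    for j, second in enumerate(BASES)
    for k, third in enumerate(BASES)
}


def translate(cds, to_stop=True):
    """Translate a coding sequence codon by codon ('*' marks a stop codon)."""
    seq = cds.upper().replace("U", "T")
    protein = []
    for pos in range(0, len(seq) - len(seq) % 3, 3):
        aa = CODON_TABLE[seq[pos:pos + 3]]
        if aa == "*" and to_stop:
            break
        protein.append(aa)
    return "".join(protein)


print(translate("ATGGCCATTGTAATGGGCCGCTGAAAGGGTGCCCGATAG"))
print(translate("ATGGCCATTGTAATGGGCCGCTGAAAGGGTGCCCGATAG", to_stop=False))
```

Real genes add complications this sketch ignores, such as introns, alternative start codons and non-standard genetic codes.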

Homology

In the genomic branch of bioinformatics, homology is used to predict the function of a gene: if the sequence of gene ''A'', whose function is known, is homologous to the sequence of gene ''B'', whose function is unknown, one could infer that B may share A's function. In structural bioinformatics, homology is used to determine which parts of a protein are important in structure formation and interaction with other proteins.
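Homology is usually inferred from significant sequence similarity, which is measured by aligning the sequences. A minimal global alignment, the Needleman–Wunsch dynamic programming algorithm, is sketched below. The match, mismatch and gap scores are toy values assumed for illustration. Protein comparisons normally use a substitution matrix such as BLOSUM62 with affine gap penalties. A high score is evidence for homology, not proof of it.

```python
def needleman_wunsch(a, b, match=1, mismatch=-1, gap=-2):
    """Global alignment with a linear gap penalty; returns (score, aligned_a, aligned_b)."""
    def sub(x, y):
        return match if x == y else mismatch

    n, m = len(a), len(b)
    # score[i][j] holds the best score for aligning a[:i] with b[:j]
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            score[i][j] = max(score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]),
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)

    # Trace back one optimal path from the bottom-right cell
    out_a, out_b = [], []
    i, j = n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and score[i][j] == score[i - 1][j - 1] + sub(a[i - 1], b[j - 1]):
            out_a.append(a[i - 1])
            out_b.append(b[j - 1])
            i, j = i - 1, j - 1
        elif i > 0 and score[i][j] == score[i - 1][j] + gap:
            out_a.append(a[i - 1])
            out_b.append("-")
            i -= 1
        else:
            out_a.append("-")
            out_b.append(b[j - 1])
            j -= 1
    return score[n][m], "".join(reversed(out_a)), "".join(reversed(out_b))


print(needleman_wunsch("GATTACA", "GATACA"))
```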

Homology modeling is used to predict the structure of an unknown protein from existing homologous proteins.

An example of distantly related homologs with conserved structures is human hemoglobin and the hemoglobin of legumes (leghemoglobin), which are distant relatives from the same protein superfamily. Both serve the same purpose of transporting oxygen in the organism. Although these two proteins have very different amino acid sequences, their protein structures are very similar, reflecting their shared function and shared ancestor.

Other techniques for predicting protein structure include protein threading and ''de novo'' (from scratch) physics-based modeling.

Another aspect of structural bioinformatics is the use of protein structures for virtual screening models such as quantitative structure–activity relationship models and proteochemometric models (PCM). Furthermore, a protein's crystal structure can be used in simulations such as ligand-binding studies and ''in silico'' mutagenesis studies.

AlphaFold, deep-learning-based software developed by Google's DeepMind and published in 2021, greatly outperformed other protein structure prediction methods. DeepMind has since released predicted structures for hundreds of millions of proteins in the AlphaFold Protein Structure Database.

Network and systems biology

''Network analysis'' seeks to understand the relationships within biological networks such as metabolic or protein–protein interaction networks. Although biological networks can be constructed from a single type of molecule or entity (such as genes), network biology often attempts to integrate many different data types, such as proteins, small molecules, gene expression data, and others, which are all connected physically, functionally, or both.
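Two elementary network-analysis operations are finding highly connected "hub" proteins and finding the shortest chain of interactions linking two proteins. The sketch below treats a protein–protein interaction network as an undirected, unweighted graph. The protein names and edges are hypothetical.

```python
from collections import deque


def build_graph(edges):
    """Undirected adjacency sets from (protein, protein) interaction pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def hubs(graph, top=3):
    """Proteins with the most interaction partners (highest degree), ties broken by name."""
    return sorted(graph, key=lambda p: (-len(graph[p]), p))[:top]


def shortest_path(graph, source, target):
    """Breadth-first search for a fewest-interactions path, or None if unconnected."""
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in sorted(graph.get(node, ())):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical interaction pairs
edges = [("P1", "P2"), ("P1", "P3"), ("P1", "P4"), ("P4", "P5"), ("P2", "P3")]
graph = build_graph(edges)
print(hubs(graph, top=1), shortest_path(graph, "P2", "P5"))
```

Breadth-first search is enough here because every interaction counts as one step. Weighted networks, such as those with confidence scores on edges, would call for Dijkstra's algorithm instead.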

''Systems biology'' involves the use of computer simulations of cellular subsystems (such as the networks of metabolites and enzymes that comprise metabolism, signal transduction pathways and gene regulatory networks) to both analyze and visualize the complex connections of these cellular processes.
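A very small example of such a simulation is a two-step metabolic pathway S → I → P. Each step is catalyzed by an enzyme following Michaelis–Menten kinetics, v = Vmax·[X] / (Km + [X]). The sketch below integrates the resulting ordinary differential equations with the forward Euler method for simplicity. The rate constants are arbitrary assumptions, and real systems-biology tools typically use adaptive or stiff ODE solvers.

```python
def simulate_pathway(s0, vmax1, km1, vmax2, km2, dt=0.01, t_end=50.0):
    """Forward-Euler integration of S -> I -> P with two Michaelis-Menten enzymes.

    Returns a list of (time, [S], [I], [P]) tuples.
    """
    s, i, p = float(s0), 0.0, 0.0
    trajectory = [(0.0, s, i, p)]
    for step in range(1, int(round(t_end / dt)) + 1):
        v1 = vmax1 * s / (km1 + s)   # rate of S -> I
        v2 = vmax2 * i / (km2 + i)   # rate of I -> P
        s -= v1 * dt
        i += (v1 - v2) * dt
        p += v2 * dt
        trajectory.append((step * dt, s, i, p))
    return trajectory


# Arbitrary illustrative parameters (concentrations and time in consistent, unspecified units)
trajectory = simulate_pathway(s0=10.0, vmax1=1.0, km1=2.0, vmax2=1.0, km2=2.0)
for t, s, i, p in trajectory[::1000]:
    print(f"t={t:5.1f}  S={s:6.3f}  I={i:6.3f}  P={p:6.3f}")
```

Because every unit of flux leaving one species enters the next, S + I + P stays constant. This is a useful sanity check on the integration.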

Artificial life or virtual evolution attempts to understand evolutionary processes via the computer simulation of simple (artificial) life forms.

Molecular interaction networks

Tens of thousands of three-dimensional protein structures have been determined by X-ray crystallography and protein nuclear magnetic resonance spectroscopy (protein NMR). A central question in structural bioinformatics is whether it is practical to predict possible protein–protein interactions based only on these 3D shapes, without performing protein–protein interaction experiments. A variety of methods have been developed to tackle the protein–protein docking problem, though much work remains to be done in this field.

Other interactions encountered in the field include protein–ligand (including drug) and protein–peptide interactions. Molecular dynamics simulation of the movement of atoms about rotatable bonds is the fundamental principle behind computational algorithms, termed docking algorithms, for studying molecular interactions.
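The search-and-score idea behind docking can be sketched as a toy rigid-body search. A ligand is translated to a set of candidate positions, and each pose is scored with a 12-6 Lennard-Jones potential. This is a deliberately reduced illustration, not a molecular dynamics simulation:

* it samples translations only (no rotations or flexible bonds);
* it uses a single epsilon and sigma for every atom pair;
* it omits electrostatics and solvation, which real docking programs and force fields include.

```python
import numpy as np


def lennard_jones_energy(receptor, ligand, epsilon=0.2, sigma=3.5):
    """Total 12-6 Lennard-Jones energy between two sets of atom coordinates (angstroms).

    epsilon (well depth) and sigma are single illustrative values for every atom pair;
    real force fields use per-atom-type parameters plus electrostatics and other terms.
    """
    diff = receptor[:, None, :] - ligand[None, :, :]
    r = np.linalg.norm(diff, axis=2)
    sr6 = (sigma / r) ** 6
    return float(np.sum(4.0 * epsilon * (sr6 ** 2 - sr6)))


def rigid_translation_search(receptor, ligand, offsets):
    """Score the ligand at each candidate offset and return (best_offset, best_energy)."""
    best_offset, best_energy = None, np.inf
    for offset in offsets:
        energy = lennard_jones_energy(receptor, ligand + offset)
        if energy < best_energy:
            best_offset, best_energy = offset, energy
    return best_offset, best_energy


receptor = np.zeros((1, 3))   # a single "receptor" atom at the origin
ligand = np.zeros((1, 3))     # a single "ligand" atom, translated along x below
offsets = [np.array([x, 0.0, 0.0]) for x in np.arange(3.0, 6.0, 0.1)]
offset, energy = rigid_translation_search(receptor, ligand, offsets)
print(offset, energy)
```

For two atoms the optimum should fall near the analytical minimum of the potential, at r = 2^(1/6)·sigma with energy -epsilon. This gives a quick check on the scoring function.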

Biodiversity informatics

Biodiversity informatics deals with the collection and analysis of biodiversity data, such as taxonomic databases or microbiome data. Examples of such analyses include phylogenetics, niche modelling, species richness mapping, DNA barcoding, and species identification tools. A growing area is also macro-ecology, i.e. the study of how biodiversity is connected to ecology and human impact, such as climate change.
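Richness and diversity summaries of this kind are simple to compute from a table of counts per species, or per taxon in microbiome data. The sketch below computes richness (the number of species observed) and the Shannon diversity index H' = -Σ p_i ln p_i. The Shannon index is one common choice assumed here for illustration, not a method named above. The site counts are made up.

```python
import math


def richness(counts):
    """Number of species observed at least once."""
    return sum(1 for c in counts.values() if c > 0)


def shannon_index(counts):
    """Shannon diversity H' = -sum(p_i * ln(p_i)) over observed species."""
    total = sum(counts.values())
    h = 0.0
    for c in counts.values():
        if c > 0:
            p = c / total
            h -= p * math.log(p)
    return h


even_site = {"species_a": 25, "species_b": 25, "species_c": 25, "species_d": 25}
skewed_site = {"species_a": 97, "species_b": 1, "species_c": 1, "species_d": 1}
for name, site in [("even", even_site), ("skewed", skewed_site)]:
    print(name, richness(site), round(shannon_index(site), 3))
```

Both sites have the same richness, but the Shannon index is lower for the skewed site because it also reflects how evenly individuals are spread across species.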

Others

Literature analysis

The enormous volume of published literature makes it virtually impossible for individuals to read every paper, resulting in disjointed sub-fields of research. Literature analysis aims to employ computational and statistical linguistics to mine this growing library of text resources. For example:

* Abbreviation recognition – identify the long form and abbreviation of biological terms

* Named-entity recognition – recognize biological terms such as gene names

* Protein–protein interaction – identify which proteins interact with which proteins from text

The area of research draws from statistics and computational linguistics.
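Abbreviation recognition can be approached with simple heuristics. The sketch below follows the spirit of the Schwartz–Hearst algorithm. For each parenthesized short form, it looks back over a limited number of preceding words. It then accepts the shortest candidate long form in which every letter of the short form appears in order, with the first letter at the start of a word. This is a simplified illustration: it handles only the "long form (short form)" pattern, and the example sentence is invented.

```python
import re


def match_long_form(short, candidate):
    """Scan right to left so the short-form characters appear in order in the candidate,
    with the first character at the start of a word; return the long form or None."""
    s_idx, l_idx = len(short) - 1, len(candidate) - 1
    while s_idx >= 0:
        ch = short[s_idx].lower()
        if not ch.isalnum():
            s_idx -= 1
            continue
        while l_idx >= 0 and (candidate[l_idx].lower() != ch or
                              (s_idx == 0 and l_idx > 0 and candidate[l_idx - 1].isalnum())):
            l_idx -= 1
        if l_idx < 0:
            return None
        s_idx -= 1
        l_idx -= 1
    return candidate[candidate.rfind(" ", 0, l_idx + 1) + 1:]


def find_abbreviations(text):
    """Return {short form: long form} for 'long form (SF)' patterns in text."""
    pairs = {}
    for found in re.finditer(r"\(([A-Za-z][A-Za-z0-9-]{1,9})\)", text):
        short = found.group(1)
        words = text[:found.start()].split()
        # Look back at most min(|SF| + 5, 2 * |SF|) words, as in Schwartz-Hearst
        max_words = min(len(short) + 5, 2 * len(short))
        long_form = match_long_form(short, " ".join(words[-max_words:]))
        if long_form:
            pairs[short] = long_form
    return pairs


sentence = ("Tumors were profiled by serial analysis of gene expression (SAGE), "
            "and copy number was segmented with a hidden Markov model (HMM).")
print(find_abbreviations(sentence))
```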

High-throughput image analysis

Computational technologies are used to automate the processing, quantification and analysis of large amounts of high-information-content biomedical imagery. Modern image analysis systems can improve an observer's accuracy, objectivity, or speed. Image analysis is important for both diagnostics and research. Some examples are:

* high-throughput and high-fidelity quantification and sub-cellular localization (high-content screening, cytohistopathology, bioimage informatics)

* morphometrics

* clinical image analysis and visualization

* determining the real-time air-flow patterns in breathing lungs of living animals

* quantifying occlusion size in real-time imagery from the development of and recovery during arterial injury

* making behavioral observations from extended video recordings of laboratory animals

* infrared measurements for metabolic activity determination

* inferring clone overlaps in DNA mapping, e.g. the Sulston score

High-throughput single cell data analysis

Computational techniques are used to analyse high-throughput, low-measurement single-cell data, such as that obtained from flow cytometry. These methods typically involve finding populations of cells that are relevant to a particular disease state or experimental condition.

Ontologies and data integration

Biological ontologies are directed acyclic graphs of controlled vocabularies. They create categories for biological concepts and descriptions so they can be easily analyzed with computers. When categorised in this way, it is possible to gain added value from holistic and integrated analysis.

The OBO Foundry was an effort to standardise certain ontologies. One of the most widespread is the Gene Ontology, which describes gene function. There are also ontologies which describe phenotypes.
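Because an ontology is a directed acyclic graph, a common operation is propagating annotations upward. A gene annotated to a specific term is implicitly annotated to every ancestor of that term; this is the "true path rule" used with the Gene Ontology. The sketch below stores each term's parents in a dictionary. The small hierarchy is a simplified illustration rather than the actual Gene Ontology graph, and the gene name is hypothetical.

```python
def ancestors(term, parents):
    """All terms reachable from `term` by following parent (is_a / part_of) edges upward."""
    seen = set()
    stack = [term]
    while stack:
        current = stack.pop()
        for parent in parents.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def propagate_annotations(annotations, parents):
    """True path rule: a gene annotated to a term is implicitly annotated to all its ancestors."""
    return {gene: set(terms).union(*(ancestors(t, parents) for t in terms))
            for gene, terms in annotations.items()}


# A simplified, illustrative fragment of a cellular-component hierarchy (not the real GO graph)
parents = {
    "mitochondrial inner membrane": ["mitochondrial membrane"],
    "mitochondrial membrane": ["mitochondrion", "membrane"],
    "mitochondrion": ["organelle"],
    "membrane": ["cellular component"],
    "organelle": ["cellular component"],
}
annotations = {"gene_x": ["mitochondrial inner membrane"]}  # hypothetical gene
print(sorted(propagate_annotations(annotations, parents)["gene_x"]))
```

A term can have several parents, so the traversal tracks visited terms to avoid reporting shared ancestors twice.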

Databases

Databases are essential for bioinformatics research and applications. Databases exist for many different information types, including DNA and protein sequences, molecular structures, phenotypes and biodiversity. Databases can contain both empirical data (obtained directly from experiments) and predicted data (obtained from analysis of existing data). They may be specific to a particular organism, pathway or molecule of interest. Alternatively, they can incorporate data compiled from multiple other databases. Databases can differ in format and access mechanism, and can be public or private.

Some of the most commonly used databases are listed below:

* Used in biological sequence analysis: GenBank, UniProt

* Used in structure analysis: Protein Data Bank (PDB)

* Used in protein family and motif finding: InterPro, Pfam

* Used for next-generation sequencing: Sequence Read Archive

* Used in network analysis: metabolic pathway databases (KEGG, BioCyc), interaction analysis databases, functional networks

* Used in design of synthetic genetic circuits: GenoCAD

Software and tools

Software tools for bioinformatics include simple command-line tools, more complex graphical programs, and standalone web services. They are made by bioinformatics companies or by public institutions.

Open-source bioinformatics software

Many free and open-source software tools have existed since the 1980s, and their number continues to grow.

Several factors have created opportunities for research groups to contribute to bioinformatics regardless of funding. These are the continued need for new algorithms to analyze emerging types of biological readouts, the potential for innovative ''in silico'' experiments, and freely available open code bases. The open-source tools often act as incubators of ideas, or as community-supported plug-ins in commercial applications. They may also provide ''de facto'' standards and shared object models for assisting with the challenge of bioinformation integration.

Open-source bioinformatics software includes Bioconductor, BioPerl, Biopython, BioJava, BioJS, BioRuby, Bioclipse, EMBOSS, .NET Bio, Orange with its bioinformatics add-on, Apache Taverna, UGENE and GenoCAD.

The non-profit Open Bioinformatics Foundation and the annual Bioinformatics Open Source Conference promote open-source bioinformatics software.

Web services in bioinformatics

SOAP- and REST-based interfaces have been developed to allow client computers to use algorithms, data and computing resources from servers in other parts of the world. The main advantage is that end users do not have to deal with software and database maintenance overheads.
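As an illustration of a REST-based service, the sketch below downloads a protein sequence in FASTA format over HTTPS using only the Python standard library. The URL pattern is assumed to be UniProt's public REST endpoint for FASTA output and should be checked against UniProt's current API documentation. P69905 is the UniProtKB accession for human hemoglobin subunit alpha. The small FASTA parser is self-contained and works on any FASTA text.

```python
from urllib.request import urlopen

# Assumed URL pattern for UniProt's REST API (FASTA output for one UniProtKB entry)
UNIPROT_FASTA_URL = "https://rest.uniprot.org/uniprotkb/{accession}.fasta"


def parse_fasta(text):
    """Parse FASTA text into {header line without '>': sequence}."""
    records, header, chunks = {}, None, []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if header is not None:
                records[header] = "".join(chunks)
            header, chunks = line[1:], []
        elif header is not None:
            chunks.append(line)
    if header is not None:
        records[header] = "".join(chunks)
    return records


def fetch_uniprot_fasta(accession, timeout=30):
    """Download one entry over HTTPS; the server does the lookup, the client only parses."""
    with urlopen(UNIPROT_FASTA_URL.format(accession=accession), timeout=timeout) as response:
        return parse_fasta(response.read().decode("utf-8"))
```

With network access, `fetch_uniprot_fasta("P69905")` would return a one-entry dictionary mapping the FASTA header to the sequence. The client needs no local copy of the database, which is the maintenance advantage described above.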

Basic bioinformatics services are classified by the EBI into three categories: SSS (Sequence Search Services), MSA (Multiple Sequence Alignment), and BSA (Biological Sequence Analysis). The availability of these service-oriented bioinformatics resources demonstrates the applicability of web-based bioinformatics solutions. Such resources range from a collection of standalone tools with a common data format under a single web-based interface, to integrative, distributed and extensible bioinformatics workflow management systems.

Bioinformatics workflow management systems

A bioinformatics workflow management system is a specialized form of a workflow management system designed specifically to compose and execute a series of computational or data manipulation steps, or a workflow, in a bioinformatics application. Such systems are designed to

* provide an easy-to-use environment for individual application scientists themselves to create their own workflows,

* provide interactive tools for the scientists enabling them to execute their workflows and view their results in real-time,

* simplify the process of sharing and reusing workflows between the scientists, and

* enable scientists to track the provenance of the workflow execution results and the workflow creation steps.

Some of the platforms providing this service are Galaxy, Kepler, Taverna, UGENE, Anduril and HIVE.
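The core of such a system can be sketched in a few dozen lines. Each step declares which inputs or earlier steps it consumes. The steps are run in dependency order, and a provenance record is kept for each output. This is a hypothetical minimal runner, not how any of the platforms above are implemented. The step names and toy functions are illustrative, and outputs are assumed to be JSON-serializable so that they can be fingerprinted with SHA-256.

```python
import hashlib
import json
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_workflow(steps, inputs):
    """Run steps in dependency order and record a provenance entry for each one.

    steps:  {step name: (function, [names of inputs or earlier steps it consumes])}
    inputs: {input name: value}
    Step outputs are assumed to be JSON-serializable so they can be fingerprinted.
    """
    results = dict(inputs)
    provenance = []
    graph = {name: set(deps) for name, (_, deps) in steps.items()}
    for name in TopologicalSorter(graph).static_order():
        if name not in steps:
            continue  # raw inputs appear in the ordering but need no computation
        func, deps = steps[name]
        results[name] = func(*(results[d] for d in deps))
        digest = hashlib.sha256(json.dumps(results[name], sort_keys=True).encode()).hexdigest()
        provenance.append({"step": name, "function": func.__name__,
                           "inputs": list(deps), "output_sha256": digest})
    return results, provenance


def gc_content(sequence):
    return round(sum(base in "GC" for base in sequence) / len(sequence), 3)


def classify(gc):
    return "GC-rich" if gc > 0.5 else "AT-rich"


steps = {
    "gc": (gc_content, ["reads"]),   # hypothetical step names
    "label": (classify, ["gc"]),
}
results, provenance = run_workflow(steps, {"reads": "ACGGGCTA"})
print(results["label"])
for record in provenance:
    print(record["step"], record["function"], record["output_sha256"][:12])
```

Because the workflow is declared as data rather than hard-coded calls, it can be shared, re-run and audited. The provenance list records which function produced each result from which inputs.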

BioCompute and BioCompute Objects

In 2014, the US Food and Drug Administration sponsored a conference held at the National Institutes of Health Bethesda Campus to discuss reproducibility in bioinformatics. Over the next three years, a consortium of stakeholders met regularly to discuss what would become the BioCompute paradigm. These stakeholders included representatives from government, industry, and academic entities. Session leaders represented numerous branches of the FDA and NIH Institutes and Centers, non-profit entities including the Human Variome Project and the European Federation for Medical Informatics, and research institutions including Stanford, the New York Genome Center, and the George Washington University.

It was decided that the BioCompute paradigm would be in the form of digital 'lab notebooks' which allow for the reproducibility, replication, review, and reuse of bioinformatics protocols. This was proposed to enable greater continuity within a research group over the course of normal personnel flux while furthering the exchange of ideas between groups. The US FDA funded this work so that information on pipelines would be more transparent and accessible to their regulatory staff.

In 2016, the group reconvened at the NIH in Bethesda and discussed the potential for a BioCompute Object, an instance of the BioCompute paradigm. This work was released both as a "standard trial use" document and as a preprint paper uploaded to bioRxiv. The BioCompute Object allows the JSON-formatted record to be shared among employees, collaborators, and regulators.

Education platforms

While bioinformatics is taught as an in-person master's degree at many universities, there are many other methods and technologies available to learn and obtain certification in the subject. The computational nature of bioinformatics lends it to computer-aided and online learning. Software platforms designed to teach bioinformatics concepts and methods include Rosalind and online courses offered through the Swiss Institute of Bioinformatics Training Portal. The Canadian Bioinformatics Workshops provide videos and slides from training workshops on their website under a Creative Commons license. The 4273π project (or 4273pi project) also offers open-source educational materials for free. The course runs on low-cost Raspberry Pi computers and has been used to teach adults and school pupils. 4273π is actively developed by a consortium of academics and research staff who have run research-level bioinformatics using Raspberry Pi computers and the 4273π operating system.

Massive open online course (MOOC) platforms also provide online certifications in bioinformatics and related disciplines. Examples include Coursera's Bioinformatics Specialization at the University of California, San Diego, the Genomic Data Science Specialization at Johns Hopkins University, and edX's Data Analysis for Life Sciences XSeries at Harvard University.

Conferences

There are several large conferences that are concerned with bioinformatics. Some of the most notable examples are Intelligent Systems for Molecular Biology (ISMB), European Conference on Computational Biology (ECCB), and Research in Computational Molecular Biology (RECOMB).


External links

Bioinformatics Resource Portal (SIB)
