
Wolff Algorithm

The Wolff algorithm, named after Ulli Wolff, is an algorithm for Monte Carlo simulation of the Ising model and Potts model in which the unit to be flipped is not a single spin (as in the heat bath or Metropolis algorithms) but a cluster of them. This cluster is defined as a set of connected spins sharing the same spin state, based on the Fortuin–Kasteleyn representation. The Wolff algorithm is similar to the Swendsen–Wang algorithm, but differs in that the former flips only one randomly chosen cluster with probability 1, while the latter flips every cluster independently with probability 1/2. Numerical studies show that flipping only one cluster decreases the autocorrelation time of the spin statistics. The advantage ...
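
The single-cluster update described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes a two-dimensional periodic square lattice, coupling J = 1, and the bond probability 1 - e^{-2\beta} that the Fortuin–Kasteleyn representation gives for the Ising model. The function name `wolff_step` and the flat-list layout of the lattice are choices made here for illustration.

```python
import math
import random


def wolff_step(spins, L, beta, rng):
    """Grow and flip one Wolff cluster on an L x L periodic Ising lattice.

    spins is a flat list of +1/-1 values; site (x, y) lives at index x * L + y.
    Returns the size of the flipped cluster.
    """
    # Assumed bond probability for coupling J = 1 (Fortuin-Kasteleyn weight).
    p_add = 1.0 - math.exp(-2.0 * beta)

    seed = rng.randrange(L * L)      # randomly chosen starting spin
    old = spins[seed]
    spins[seed] = -old               # flip immediately; a flipped spin marks "in cluster"
    stack = [seed]
    size = 1

    while stack:
        site = stack.pop()
        x, y = divmod(site, L)
        neighbours = (
            ((x + 1) % L) * L + y,
            ((x - 1) % L) * L + y,
            x * L + (y + 1) % L,
            x * L + (y - 1) % L,
        )
        for n in neighbours:
            # Only neighbours still in the old state can join, each with probability p_add.
            if spins[n] == old and rng.random() < p_add:
                spins[n] = -old
                stack.append(n)
                size += 1
    return size
```

Flipping each spin as it joins the cluster avoids a separate "visited" array, because a flipped spin no longer matches the old state and is never added twice.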

Ulli Wolff

Ulli may refer to:
* Pulkovo Airport (ICAO airport code ULLI)
* Cyclone Ulli, an intense and deadly European windstorm, forming on December 31, 2011, off the coast of New Jersey and dissipating January 7, 2012

See also
* Uli (disambiguation)

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm can be expressed within a finite amount of spac ...

Monte Carlo Simulation

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of ris ...
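
As a small illustration of using randomness for a problem that is deterministic in principle, the sketch below estimates \pi by numerical integration: it samples points uniformly in the unit square and counts the fraction that fall inside the quarter circle. The function name `estimate_pi` is chosen here for illustration only.

```python
import random


def estimate_pi(n_samples, rng):
    """Estimate pi from the fraction of random points inside the unit quarter circle."""
    inside = 0
    for _ in range(n_samples):
        x, y = rng.random(), rng.random()   # uniform point in the unit square
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle has area pi / 4, so scale the hit fraction by 4.
    return 4.0 * inside / n_samples


if __name__ == "__main__":
    print(estimate_pi(100_000, random.Random(1)))
```

The statistical error of such an estimate shrinks roughly as the inverse square root of the number of samples.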

Ising Model

The Ising model (or Lenz–Ising model or Ising–Lenz model), named after the physicists Ernst Ising and Wilhelm Lenz, is a mathematical model of ferromagnetism in statistical mechanics. The model consists of discrete variables that represent magnetic dipole moments of atomic "spins" that can be in one of two states (+1 or −1). The spins are arranged in a graph, usually a lattice (where the local structure repeats periodically in all directions), allowing each spin to interact with its neighbors. Neighboring spins that agree have a lower energy than those that disagree; the system tends to the lowest energy but heat disturbs this tendency, thus creating the possibility of different structural phases. The model allows the identification of phase transitions as a simplified model of reality. The two-dimensional square-lattice Ising model is one of the simplest statistical models to show a phase transition. The Ising model was invented by the physicist Wilhelm Lenz, who gave it as a pro ...
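
The statement that agreeing neighbors have lower energy can be made concrete with a short sketch. It assumes the common nearest-neighbor form of the energy, -J times the sum of \sigma_i \sigma_j over neighboring pairs, on a periodic square lattice with no external field; the function name `ising_energy` and the coupling parameter `J` are illustrative choices.

```python
def ising_energy(spins, L, J=1.0):
    """Energy of an L x L periodic Ising configuration: -J * sum over neighbor pairs.

    spins is a list of L rows, each a list of L values in {+1, -1}.
    """
    energy = 0.0
    for x in range(L):
        for y in range(L):
            s = spins[x][y]
            # Count each bond once by looking only "down" and "right".
            energy -= J * s * (spins[(x + 1) % L][y] + spins[x][(y + 1) % L])
    return energy


if __name__ == "__main__":
    aligned = [[1] * 4 for _ in range(4)]
    checkerboard = [[1 if (x + y) % 2 == 0 else -1 for y in range(4)] for x in range(4)]
    print(ising_energy(aligned, 4), ising_energy(checkerboard, 4))
```

For positive J the fully aligned lattice gives the lowest energy and the checkerboard pattern the highest, since every bond is satisfied in the first case and violated in the second.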

Potts Model

In statistical mechanics, the Potts model, a generalization of the Ising model, is a model of interacting spins on a crystalline lattice. By studying the Potts model, one may gain insight into the behaviour of ferromagnets and certain other phenomena of solid-state physics. The strength of the Potts model is not so much that it models these physical systems well; it is rather that the one-dimensional case is exactly solvable, and that it has a rich mathematical formulation that has been studied extensively. The model is named after Renfrey Potts, who described the model near the end of his 1951 Ph.D. thesis. The model was related to the "planar Potts" or "clock model", which was suggested to him by his advisor, Cyril Domb. The four-state Potts model is sometimes known as the Ashkin–Teller model, after Julius Ashkin and Edward Teller, who considered an equivalent model in 1943. The Potts model is related to, and generalized by, several other models, including the XY mo ...

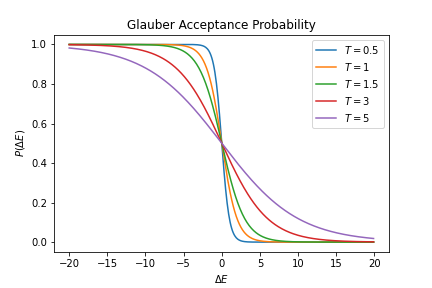

Glauber Dynamics

In statistical physics, Glauber dynamics is a way to simulate the Ising model (a model of magnetism) on a computer. It is a type of Markov chain Monte Carlo algorithm.

The algorithm: In the Ising model, we have, say, N particles that can spin up (+1) or down (-1). Say the particles are on a 2D grid, and we label each with an x and y coordinate. Glauber's algorithm becomes:
1. Choose a particle \sigma_{x,y} at random.
2. Sum its four neighboring spins: S = \sigma_{x+1,y} + \sigma_{x-1,y} + \sigma_{x,y+1} + \sigma_{x,y-1}.
3. Compute the change in energy if the spin at x, y were to flip. This is \Delta E = 2\sigma_{x,y} S (see the Hamiltonian for the Ising model).
4. Flip the spin with probability e^{-\Delta E/T}/(1 + e^{-\Delta E/T}), where T is the temperature.
5. Display the new grid. Repeat the above N times.

In the Glauber algorithm, if the energy change in flipping a spin is zero, \Delta E = 0, then the spin is flipped with probability p(0, T) = 0.5.

Glauber vs. Metropolis–Hastings algorithm: Metropolis–Hastings algorithm gives id ...
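
The Glauber steps can be sketched as follows. This is an illustration under stated assumptions: a periodic L x L grid, coupling J = 1, and temperature measured in units where the Boltzmann constant is 1. The names `flip_probability` and `glauber_sweep` are hypothetical, and the display step is omitted.

```python
import math
import random


def flip_probability(delta_e, T):
    """Glauber flip probability e^{-dE/T} / (1 + e^{-dE/T}), written to avoid overflow."""
    z = delta_e / T
    if z > 0:
        e = math.exp(-z)
        return e / (1.0 + e)
    return 1.0 / (1.0 + math.exp(z))


def glauber_sweep(spins, L, T, rng):
    """Perform N = L * L single-spin Glauber updates in place on a periodic grid."""
    for _ in range(L * L):
        x, y = rng.randrange(L), rng.randrange(L)        # choose a particle at random
        S = (spins[(x + 1) % L][y] + spins[(x - 1) % L][y]
             + spins[x][(y + 1) % L] + spins[x][(y - 1) % L])  # sum of four neighbors
        delta_e = 2 * spins[x][y] * S                    # energy change if the spin flips
        if rng.random() < flip_probability(delta_e, T):
            spins[x][y] = -spins[x][y]
```

Both branches of `flip_probability` evaluate the same expression; splitting on the sign of \Delta E / T only keeps the exponential from overflowing at very low temperature.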

Metropolis–Hastings Algorithm

In statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples from a probability distribution from which direct sampling is difficult. This sequence can be used to approximate the distribution (e.g. to generate a histogram) or to compute an integral (e.g. an expected value). Metropolis–Hastings and other MCMC algorithms are generally used for sampling from multi-dimensional distributions, especially when the number of dimensions is high. For single-dimensional distributions, there are usually other methods (e.g. adaptive rejection sampling) that can directly return independent samples from the distribution, and these are free from the problem of autocorrelated samples that is inherent in MCMC methods. History: The algorithm was named after Nicholas Metropolis and W.K. Hastings. Metropolis was the first author to appear on the list of authors of the 1953 article Equatio ...
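
A minimal sketch of the method, assuming a symmetric Gaussian random-walk proposal (so the acceptance ratio reduces to the ratio of target densities) and a target specified by its unnormalized log-density. The function name `metropolis_hastings` and its parameters are illustrative, and the one-dimensional normal target in the usage lines is chosen only because its mean and variance are easy to check.

```python
import math
import random


def metropolis_hastings(log_density, x0, n_samples, step, rng):
    """Random-walk Metropolis-Hastings sampler for a 1D unnormalized log-density."""
    samples = []
    x = x0
    log_p = log_density(x)
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)          # symmetric proposal
        log_p_new = log_density(proposal)
        # Accept with probability min(1, p(proposal) / p(x)), compared in log space.
        if math.log(1.0 - rng.random()) < log_p_new - log_p:
            x, log_p = proposal, log_p_new
        samples.append(x)                            # a rejected move repeats the current state
    return samples


if __name__ == "__main__":
    draws = metropolis_hastings(lambda v: -0.5 * v * v, 0.0, 50_000, 2.4, random.Random(0))
    mean = sum(draws) / len(draws)
    print(mean, sum((d - mean) ** 2 for d in draws) / len(draws))
```

Successive draws are correlated, which is the autocorrelation problem mentioned above; the sample mean and variance still converge to those of the target.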

Random Cluster Model

In statistical mechanics, probability theory, graph theory, etc., the random cluster model is a random graph that generalizes and unifies the Ising model, Potts model, and percolation model. It is used to study random combinatorial structures, electrical networks, etc. It is also referred to as the RC model or sometimes the FK representation after its founders Cees Fortuin and Piet Kasteleyn. Definition: Let G = (V,E) be a graph, and \omega: E \to \{0,1\} be a bond configuration on the graph that maps each edge to a value of either 0 or 1. We say that a bond is closed on edge e\in E if \omega(e)=0, and open if \omega(e)=1. If we let A(\omega) = \{e \in E : \omega(e) = 1\} be the set of open bonds, then an open cluster is any connected component in A(\omega). Note that an open cluster can be a single vertex (if that vertex is not incident to any open bonds). If each edge is open independently with probability p and closed otherwise, this is just the standard Bernoulli percolation process. The probabi ...
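
The definition of open clusters translates directly into a connected-components computation. The sketch below uses a small union-find structure; the function name `open_clusters` and the representation of the bond configuration as a list of 0/1 values aligned with the edge list are assumptions made for this example.

```python
def open_clusters(n_vertices, edges, omega):
    """Return the open clusters of a bond configuration as sorted lists of vertices.

    edges is a list of (u, v) pairs; omega[i] is 1 if edges[i] is open, 0 if closed.
    """
    parent = list(range(n_vertices))

    def find(v):
        # Follow parent links to the root, halving the path along the way.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for (u, v), state in zip(edges, omega):
        if state == 1:                       # only open bonds connect vertices
            ru, rv = find(u), find(v)
            if ru != rv:
                parent[ru] = rv

    clusters = {}
    for v in range(n_vertices):
        clusters.setdefault(find(v), []).append(v)
    return sorted(clusters.values())


if __name__ == "__main__":
    path = [(0, 1), (1, 2), (2, 3)]
    print(open_clusters(4, path, [1, 0, 1]))
```

A vertex with no open incident bond ends up as a cluster of size one, matching the note in the definition.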

Swendsen–Wang Algorithm

The Swendsen–Wang algorithm is the first non-local or cluster algorithm for Monte Carlo simulation of large systems near criticality. It was introduced by Robert Swendsen and Jian-Sheng Wang in 1987 at Carnegie Mellon. The original algorithm was designed for the Ising and Potts models, and it was later generalized to other systems as well, such as the XY model (by the Wolff algorithm) and particles of fluids. The key ingredient was the random cluster model, a representation of the Ising or Potts model through percolation models of connecting bonds, due to Fortuin and Kasteleyn. It has been generalized by Barbu and Zhu to arbitrary sampling probabilities by viewing it as a Metropolis–Hastings algorithm and computing the acceptance probability of the proposed Monte Carlo move. Motivation: The problem of the critical slowing-down affecting local processes is of fundamental importance in the ...
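
One Swendsen–Wang update for the Ising case can be sketched as below. The assumptions are a periodic L x L lattice stored as a flat list, coupling J = 1, and bonds between equal neighboring spins opened with probability 1 - e^{-2\beta}; every resulting cluster is then flipped independently with probability 1/2. The function name `swendsen_wang_step` is illustrative.

```python
import math
import random


def swendsen_wang_step(spins, L, beta, rng):
    """One Swendsen-Wang update in place; returns the number of clusters formed."""
    n = L * L
    p_open = 1.0 - math.exp(-2.0 * beta)     # assumed bond probability for J = 1
    parent = list(range(n))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    # Open bonds between equal neighboring spins and merge their clusters.
    for site in range(n):
        x, y = divmod(site, L)
        for nb in (((x + 1) % L) * L + y, x * L + (y + 1) % L):
            if spins[site] == spins[nb] and rng.random() < p_open:
                ra, rb = find(site), find(nb)
                if ra != rb:
                    parent[ra] = rb

    # Decide once per cluster whether to flip it, then apply to every member.
    flip = {}
    for site in range(n):
        root = find(site)
        if root not in flip:
            flip[root] = rng.random() < 0.5
        if flip[root]:
            spins[site] = -spins[site]
    return len(flip)
```

At high temperature (small \beta) almost no bonds open and the update behaves like independent single-spin flips; at low temperature large clusters form and flip together.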

Autocorrelation

Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Unit root processes, trend-stationary processes, autoregressive processes, and moving average processes are specific forms of processes with autocorrelatio ...
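
Because definitions differ between fields, the sketch below fixes one convention: the sample autocorrelation at a given lag, computed as the lagged autocovariance divided by the lag-zero autocovariance, with the mean taken over the whole series. The function name `autocorrelation` is chosen here for illustration.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of a sequence at a non-negative integer lag."""
    n = len(series)
    mean = sum(series) / n
    # Lag-zero sum of squared deviations (proportional to the variance).
    var = sum((v - mean) ** 2 for v in series)
    # Sum of products of deviations separated by the lag.
    cov = sum((series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag))
    return cov / var


if __name__ == "__main__":
    alternating = [1, -1] * 50
    print(autocorrelation(alternating, 1), autocorrelation(alternating, 2))
```

For the alternating series in the usage lines, odd lags give values near -1 and even lags values near +1, which is the repeating pattern the text refers to.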

Netlib

Netlib is a repository of software for scientific computing maintained by AT&T, Bell Laboratories, the University of Tennessee and Oak Ridge National Laboratory. Netlib comprises many separate programs and libraries. Most of the code is written in C and Fortran, with some programs in other languages. History: The project began with email distribution on UUCP, ARPANET and CSNET in the 1980s. The code base of Netlib was written at a time when computer software was not yet considered merchandise. Therefore, no license terms or terms of use are stated for many programs. Before the Berne Convention Implementation Act of 1988 (and the earlier Copyright Act of 1976), works without an explicit copyright notice were public-domain software. Also, most of the Netlib code is the work of US government employees and therefore in the public domain.
