
Additionally, through vector chaining, vector processors can be more resource-efficient: they can use slower hardware and save power while still achieving good throughput and lower latency than SIMD.

Consider both a SIMD processor and a vector processor working on 4 64-bit elements, doing a LOAD, ADD, MULTIPLY and STORE sequence. If the SIMD width is 4, then the SIMD processor must LOAD four elements entirely before it can move on to the ADDs, must complete all the ADDs before it can move on to the MULTIPLYs, and likewise must complete all of the MULTIPLYs before it can start the STOREs. This is by definition and by design.

Having to perform 4-wide simultaneous 64-bit LOADs and 64-bit STOREs is very costly in hardware (256 bit data paths to memory). Having 4x 64-bit ALUs, especially MULTIPLY, likewise. To avoid these high costs, a SIMD processor would have to have 1-wide 64-bit LOAD, 1-wide 64-bit STORE, and only 2-wide 64-bit ALUs. As shown in the diagram, which assumes a multi-issue execution model, the consequences are that the operations now take longer to complete. If multi-issue is not possible, then the operations take even longer because the LD may not be issued (started) at the same time as the first ADDs, and so on. If there are only 4-wide 64-bit SIMD ALUs, the completion time is even worse: only when all four LOADs have completed may the SIMD operations start, and only when all ALU operations have completed may the STOREs begin.

A vector processor, by contrast, even if it is ''single-issue'' and uses no SIMD ALUs, only having 1-wide 64-bit LOAD, 1-wide 64-bit STORE (and, as in the Cray-1, the ability to run MULTIPLY simultaneously with ADD), may complete the four operations faster than a SIMD processor with 1-wide LOAD, 1-wide STORE, and 2-wide SIMD. This more efficient resource utilization, due to vector chaining, is a key advantage and difference compared to SIMD. SIMD, by design and definition, cannot perform chaining except to the entire group of results.
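The comparison above can be made concrete with a deliberately crude cycle-count model, sketched here in C. It assumes every operation has a latency of one cycle, that the SIMD machine finishes each phase for the whole group before starting the next, and that the chained vector machine accepts one element per cycle into a LOAD, ADD, MULTIPLY, STORE chain. The function names and the unit-latency assumption are illustrative only and do not describe any real processor.

```c
#include <stddef.h>

/* Cycles needed to push n elements through a unit that accepts
   `width` elements per cycle (ceiling division). */
static size_t cycles_for(size_t n, size_t width) {
    return (n + width - 1) / width;
}

/* Block-wise SIMD model: each phase must complete for the whole group
   before the next phase may start, so the phase times simply add up. */
size_t simd_blockwise_cycles(size_t n, size_t load_w, size_t alu_w,
                             size_t store_w) {
    return cycles_for(n, load_w)    /* LOAD     */
         + cycles_for(n, alu_w)     /* ADD      */
         + cycles_for(n, alu_w)     /* MULTIPLY */
         + cycles_for(n, store_w);  /* STORE    */
}

/* Chained vector model: one element enters the chain per cycle and each
   result is forwarded to the next unit as soon as it is produced, so the
   total is n cycles plus the time to drain the remaining stages. */
size_t vector_chained_cycles(size_t n, size_t stages) {
    return n == 0 ? 0 : n + (stages - 1);
}
```

Under these assumptions, four elements on a SIMD machine with 1-wide LOAD, 1-wide STORE and 2-wide ALUs take 4 + 2 + 2 + 4 = 12 cycles, while the chained single-issue vector machine takes 4 + 3 = 7. Real latencies differ, but the shape of the result is what the text describes.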


; Hypothetical RISC machine

; assume a, b, and c are memory locations in their respective registers

; add 10 numbers in a to 10 numbers in b, store results in c

move $10, count ; count := 10

loop:

load r1, a

load r2, b

add r3, r1, r2 ; r3 := r1 + r2

store r3, c

add a, a, $4 ; move on

add b, b, $4

add c, c, $4

dec count ; decrement

jnez count, loop ; loop back if count is not yet 0

ret

But to a vector processor, this task looks considerably different:

; assume we have vector registers v1-v3

; with size equal or larger than 10

move $10, count ; count = 10

vload v1, a, count

vload v2, b, count

vadd v3, v1, v2

vstore v3, c, count

ret

Note the complete lack of looping in the instructions, because it is the ''hardware'' which has performed 10 sequential operations: effectively the loop count is on an explicit ''per-instruction'' basis.
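One way to picture this is a small C model in which each vector instruction is a function that loops internally, so the calling program contains no loop at all. This is only a sketch: the vreg type, the MAX_VL capacity and the helper names are hypothetical, and the count is passed to vadd as well (which the pseudo-assembly above does not do) so that the model stays self-contained.

```c
#include <stddef.h>

#define MAX_VL 64  /* hypothetical vector register capacity, in elements */

typedef struct { int e[MAX_VL]; } vreg;  /* models one vector register */

/* Each "instruction" below performs `count` element operations itself;
   this inner loop stands in for what the hardware does. */
static void vload(vreg *v, const int *addr, size_t count) {
    for (size_t i = 0; i < count; i++) v->e[i] = addr[i];
}

static void vadd(vreg *d, const vreg *a, const vreg *b, size_t count) {
    for (size_t i = 0; i < count; i++) d->e[i] = a->e[i] + b->e[i];
}

static void vstore(const vreg *v, int *addr, size_t count) {
    for (size_t i = 0; i < count; i++) addr[i] = v->e[i];
}

/* The "program": four instructions and no loop. count must not exceed
   MAX_VL, mirroring the assumption that the registers are large enough. */
void add_arrays(const int *a, const int *b, int *c, size_t count) {
    vreg v1, v2, v3;
    vload(&v1, a, count);
    vload(&v2, b, count);
    vadd(&v3, &v1, &v2, count);
    vstore(&v3, c, count);
}
```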

Cray-style vector ISAs take this a step further and provide a global "count" register, called vector length (VL):

; again assume we have vector registers v1-v3

; with size larger than or equal to 10

setvli $10 # Set vector length VL=10

vload v1, a # 10 loads from a

vload v2, b # 10 loads from b

vadd v3, v1, v2 # 10 adds

vstore v3, c # 10 stores into c

ret

There are several savings inherent in this approach.

# Only three address translations are needed. Depending on the architecture, this can represent a significant saving by itself.

# Another saving is fetching and decoding the instruction itself, which has to be done only once instead of ten times.

# The code itself is also smaller, which can lead to more efficient memory use, reduction in L1 instruction cache size, and reduction in power consumption.

# With the program size being reduced, branch prediction has an easier job.

# With the length (equivalent to SIMD width) not being hard-coded into the instruction, not only is the encoding more compact, it's also "future-proof" and allows even

void iaxpy(size_t n, int a, const int x[], int y[])

In each iteration, every element of y has an element of x multiplied by a and added to it. The program is expressed in scalar linear form for readability.
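Only the signature is shown here, so the following is a minimal sketch of a body that matches the description (each element of y has the corresponding element of x multiplied by a and added to it). It is written as a plain scalar loop and is an assumption about the intended function rather than a quotation.

```c
#include <stddef.h>

/* Integer a*x plus y: y[i] = a * x[i] + y[i] for every i in [0, n). */
void iaxpy(size_t n, int a, const int x[], int y[]) {
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```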

loop:

load32 r1, x ; load one 32bit data

load32 r2, y

mul32 r1, a, r1 ; r1 := r1 * a

add32 r3, r1, r2 ; r3 := r1 + r2

store32 r3, y

addl x, x, $4 ; x := x + 4

addl y, y, $4

subl n, n, $1 ; n := n - 1

jgz n, loop ; loop back if n > 0

out:

ret

The STAR-like code remains concise, but because the STAR-100's vectorisation was by design based around memory accesses, an extra slot of memory is now required to process the information. Two times the latency is also needed due to the extra requirement of memory access.

; Assume tmp is pre-allocated

vmul tmp, a, x, n ; tmp = a * x

vadd y, y, tmp, n ; y = y + tmp

ret
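The cost of the memory-to-memory style can be seen in a small C model, given here as a sketch. Each helper stands in for one STAR-like instruction that streams whole vectors through memory; the helper names are hypothetical. The point is that the intermediate product has to be written out to tmp and read back again, which is the extra memory slot and the extra memory traffic mentioned above.

```c
#include <stddef.h>

/* Models "vmul dst, a, x, n": dst = a * x, memory to memory. */
static void vmul_scalar(int *dst, int a, const int *x, size_t n) {
    for (size_t i = 0; i < n; i++) dst[i] = a * x[i];
}

/* Models "vadd dst, p, q, n": dst = p + q, memory to memory. */
static void vadd_mem(int *dst, const int *p, const int *q, size_t n) {
    for (size_t i = 0; i < n; i++) dst[i] = p[i] + q[i];
}

/* tmp must point to caller-allocated storage for n ints. */
void iaxpy_mem2mem(size_t n, int a, const int *x, int *y, int *tmp) {
    vmul_scalar(tmp, a, x, n);  /* first pass: tmp written to memory      */
    vadd_mem(y, y, tmp, n);     /* second pass: tmp read back from memory */
}
```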

splatx4 v4, a ; v4 = a,a,a,a

The time taken would be basically the same as for the vector implementation described above.

vloop:

load32x4 v1, x

load32x4 v2, y

mul32x4 v1, a, v1 ; v1 := v1 * a

add32x4 v3, v1, v2 ; v3 := v1 + v2

store32x4 v3, y

addl x, x, $16 ; x := x + 16

addl y, y, $16

subl n, n, $4 ; n := n - 4

jgz n, vloop ; go back if n > 0

out:

ret

Note that both x and y pointers are incremented by 16, because that is how long (in bytes) four 32-bit integers are. The decision was made that the algorithm ''shall'' only cope with 4-wide SIMD, therefore the constant is hard-coded into the program.
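In C terms, the fixed-width loop behaves roughly like the sketch below, where the inner four-lane loop stands in for one group of load32x4, mul32x4, add32x4 and store32x4 instructions. The function name is hypothetical, alignment is not modelled, and the precondition is made explicit with an assertion because the code has no way to handle any other length.

```c
#include <assert.h>
#include <stddef.h>

void iaxpy_simd4(size_t n, int a, const int *x, int *y) {
    assert(n % 4 == 0);  /* the algorithm only copes with multiples of 4 */
    for (; n > 0; n -= 4) {
        /* one 4-wide SIMD group: all four lanes, unconditionally */
        for (int lane = 0; lane < 4; lane++)
            y[lane] = a * x[lane] + y[lane];
        x += 4;  /* four 32-bit ints: 16 bytes on typical platforms */
        y += 4;
    }
}
```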

Unfortunately for SIMD, the clue was in the assumption above, "that n is a multiple of 4" as well as "aligned access", which, clearly, is a limited specialist use-case.

Realistically, for general-purpose loops such as in portable libraries, where n cannot be limited in this way, the overhead of setup and cleanup for SIMD in order to cope with non-multiples of the SIMD width, can far exceed the instruction count inside the loop itself. Assuming worst-case that the hardware cannot do misaligned SIMD memory accesses, a real-world algorithm will:

* first have to have a preparatory section which works on the beginning unaligned data, up to the first point where SIMD memory-aligned operations can take over. This will either involve (slower) scalar-only operations or smaller-sized packed SIMD operations. Each copy implements the full algorithm inner loop.

* perform the aligned SIMD loop at the maximum SIMD width up until the last few elements (those remaining that do not fit the fixed SIMD width)

* have a cleanup phase which, like the preparatory section, is just as large and just as complex.

Eight-wide SIMD requires repeating the inner loop algorithm first with four-wide SIMD elements, then two-wide SIMD, then one (scalar), with a test and branch in between each one, in order to cover the first and last remaining SIMD elements (0 <= n <= 7).
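The cleanup cascade can be sketched in C as follows. Only the tail handling is shown (the unaligned preparatory section would be a mirror image of it), and iaxpy_block is a hypothetical stand-in for one packed-SIMD instruction group of the given width. Note that the inner computation appears four times, once per width, with a test in between each.

```c
#include <stddef.h>

/* Stands in for one fixed-width group of packed-SIMD instructions. */
static void iaxpy_block(int width, int a, const int *x, int *y) {
    for (int lane = 0; lane < width; lane++)
        y[lane] = a * x[lane] + y[lane];
}

void iaxpy_simd8_cascade(size_t n, int a, const int *x, int *y) {
    /* main loop at the full SIMD width */
    while (n >= 8) { iaxpy_block(8, a, x, y); x += 8; y += 8; n -= 8; }
    /* cleanup: the same algorithm again at 4-wide, 2-wide and scalar */
    if (n >= 4) { iaxpy_block(4, a, x, y); x += 4; y += 4; n -= 4; }
    if (n >= 2) { iaxpy_block(2, a, x, y); x += 2; y += 2; n -= 2; }
    if (n >= 1) { iaxpy_block(1, a, x, y); }
}
```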

This more than ''triples'' the size of the code, in fact in extreme cases it results in an ''order of magnitude'' increase in instruction count! This can easily be demonstrated by compiling the iaxpy example for AVX-512, using the options to gcc.

Over time as the ISA evolves to keep increasing performance, it results in ISA Architects adding 2-wide SIMD, then 4-wide SIMD, then 8-wide and upwards. It can therefore be seen why AVX-512 exists in x86.

Without predication, the wider the SIMD width the worse the problems get, leading to massive opcode proliferation, degraded performance, extra power consumption and unnecessary software complexity.

Vector processors on the other hand are designed to issue computations of variable length for an arbitrary count, n, and thus require very little setup, and no cleanup. Even compared to those SIMD ISAs which have masks (but no instruction), Vector processors produce much more compact code because they do not need to perform explicit mask calculation to cover the last few elements (illustrated below).

vloop:

# prepare mask. few ISAs have min though

min t0, n, $4 ; t0 = min(n, 4)

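shift m, $1, t0 ; m = 1<<t0

sub m, m, $1 ; m = (1<<t0)-1

# now do the operation, masked by m bits

load32x4 v1, x, m

load32x4 v2, y, m

mul32x4 v1, a, v1, m ; v1 := v1 * a

add32x4 v3, v1, v2, m ; v3 := v1 + v2

store32x4 v3, y, m

# update x, y and n for the next loop

addl x, t0*4 ; x := x + t0*4

addl y, t0*4

subl n, n, t0 ; n := n - t0

# loop?

jgz n, vloop ; go back if n > 0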

out:

ret

Here it can be seen that the code is much cleaner but a little complex: at least, however, there is no setup or cleanup: on the last iteration of the loop, the predicate mask will be set to either 0b0000, 0b0001, 0b0011, 0b0111 or 0b1111, resulting in between 0 and 4 SIMD element operations being performed, respectively.

One additional potential complication: some RISC ISAs do not have a "min" instruction, needing instead to use a branch or scalar predicated compare.
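The predicated loop can be modelled in C as in the sketch below. The mask is built from min(n, 4) exactly as the comments in the assembly describe, and every lane operation is guarded by its mask bit, so lanes beyond the end of the data are never touched. The function names are hypothetical and the SIMD width of 4 is still hard-coded, as it is in the assembly.

```c
#include <stddef.h>

/* Mask with the t0 lowest bits set, for 0 <= t0 <= 4:
   0b0000, 0b0001, 0b0011, 0b0111 or 0b1111. */
static unsigned lane_mask(size_t t0) {
    return (1u << t0) - 1u;
}

void iaxpy_predicated(size_t n, int a, const int *x, int *y) {
    while (n > 0) {
        size_t t0 = n < 4 ? n : 4;   /* t0 = min(n, 4) */
        unsigned m = lane_mask(t0);
        for (size_t lane = 0; lane < 4; lane++)
            if (m & (1u << lane))    /* masked-off lanes do nothing */
                y[lane] = a * x[lane] + y[lane];
        x += t0;                     /* advance by t0 elements (t0*4 bytes) */
        y += t0;
        n -= t0;
    }
}
```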

It is clear how predicated SIMD at least merits the term "vector capable", because it can cope with variable-length vectors by using predicate masks. The final evolving step to a "true" vector ISA, however, is to not have any evidence in the ISA ''at all'' of a SIMD width, leaving that entirely up to the hardware.

vloop:

setvl t0, n # VL=t0=min(MVL, n)

vld32 v0, x # load vector x

vld32 v1, y # load vector y

vmadd32 v1, v0, a # v1 += v0 * a

vst32 v1, y # store Y

add y, t0*4 # advance y by VL*4

add x, t0*4 # advance x by VL*4

sub n, t0 # n -= VL (t0)

bnez n, vloop # repeat if n != 0

This is essentially not very different from the SIMD version (processes 4 data elements per loop), or from the initial Scalar version (processes just the one). n still contains the number of data elements remaining to be processed, but t0 contains the copy of VL – the number that is ''going'' to be processed in each iteration. t0 is subtracted from n after each iteration, and if n is zero then all elements have been processed.
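The same loop structure can be sketched in C. Here MVL is a hypothetical maximum vector length chosen by the "hardware", and the setvl helper is an illustrative model of the instruction's VL = min(MVL, n) behaviour. Nothing in iaxpy_vl itself depends on the value of MVL, which is the property the text is describing.

```c
#include <stddef.h>

#define MVL 8  /* hypothetical maximum vector length of this implementation */

/* Models setvl: VL = min(MVL, n). */
static size_t setvl(size_t n) {
    return n < MVL ? n : MVL;
}

void iaxpy_vl(size_t n, int a, const int *x, int *y) {
    while (n != 0) {
        size_t vl = setvl(n);            /* elements handled this iteration */
        for (size_t i = 0; i < vl; i++)  /* vld32, vld32, vmadd32, vst32 */
            y[i] += x[i] * a;
        x += vl;                         /* advance by VL elements */
        y += vl;
        n -= vl;                         /* n -= VL */
    }
}
```

Changing MVL to 1, 4 or 64 changes how many iterations run but not the source code or the result, and a call with n equal to zero simply does nothing.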

A number of things to note, when comparing against the Predicated SIMD assembly variant:

# The instruction has embedded within it a instruction

# Where the SIMD variant hard-coded both the width (4) into the creation of the mask ''and'' in the SIMD width (load32x4 etc.) the vector ISA equivalents have no such limit. This makes vector programs both portable, Vendor Independent, and future-proof.

# Setting VL effectively ''creates a hidden predicate mask'' that is automatically applied to the vectors

# Where with predicated SIMD the mask bitlength is limited to that which may be held in a scalar (or special mask) register, vector ISA's mask registers have no such limitation. Cray-1 vectors could be just over 1,000 elements (in 1977).

Thus it can be seen, very clearly, how vector ISAs reduce the number of instructions.

Also note that, just like the predicated SIMD variant, the pointers to x and y are advanced by t0 times four because they both point to 32 bit data, but that n is decremented by straight t0. Compared to the fixed-size SIMD assembler there is very little apparent difference: x and y are advanced by hard-coded constant 16, n is decremented by a hard-coded 4, so initially it is hard to appreciate the significance. The difference comes in the realisation that the vector hardware could be capable of doing 4 simultaneous operations, or 64, or 10,000; it would be the exact same vector assembler for all of them ''and there would still be no SIMD cleanup code''. Even compared to the predicate-capable SIMD, it is still more compact, clearer, more elegant and uses fewer resources.

Not only is it a much more compact program (saving on L1 Cache size), but as previously mentioned, the vector version can issue far more data processing to the ALUs, again saving power because Instruction Decode and Issue can sit idle.

Additionally, the number of elements going in to the function can start at zero. This sets the vector length to zero, which effectively disables all vector instructions, turning them into no-ops, at runtime. Thus, unlike non-predicated SIMD, even when there are no elements to process there is still no wasted cleanup code.

void (size_t n, int a, const int x[])

Here, an accumulator (y) is used to sum up all the values in the array, x.

set y, 0 ; y initialised to zero

loop:

load32 r1, x ; load one 32bit data

add32 y, y, r1 ; y := y + r1

addl x, x, $4 ; x := x + 4

subl n, n, $1 ; n := n - 1

jgz n, loop ; loop back if n > 0

out:

ret y ; returns result, y

This is very straightforward. "y" starts at zero, 32 bit integers are loaded one at a time into r1, added to y, and the address of the array "x" moved on to the next element in the array.
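In C, the scalar reduction described above is a one-line loop. The sketch below uses a hypothetical function name (the signature shown earlier has none) and omits the unused a parameter; both choices are assumptions made for illustration.

```c
#include <stddef.h>

/* Sum all n elements of x into an accumulator, one element at a time. */
int sum_array(size_t n, const int x[]) {
    int y = 0;               /* y initialised to zero */
    for (size_t i = 0; i < n; i++)
        y += x[i];           /* y := y + x[i] */
    return y;
}
```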

addl r3, x, $16 ; for 2nd 4 of x

load32x4 v1, x ; first 4 of x

load32x4 v2, r3 ; 2nd 4 of x

add32x4 v1, v2, v1 ; add 2 groups

At this point four adds have been performed:

* First SIMD ADD: element 0 of first group added to element 0 of second group

* Second SIMD ADD: element 1 of first group added to element 1 of second group

* Third SIMD ADD: element 2 of first group added to element 2 of second group

* Fourth SIMD ADD: element 3 of first group added to element 3 of second group

but with 4-wide SIMD being incapable by design of adding for example, things go rapidly downhill just as they did with the general case of using SIMD for general-purpose IAXPY loops. To sum the four partial results, two-wide SIMD can be used, followed by a single scalar add, to finally produce the answer, but, frequently, the data must be transferred out of dedicated SIMD registers before the last scalar computation can be performed.

Even with a general loop (n not fixed), the only way to use 4-wide SIMD is to assume four separate "streams", each offset by four elements. Finally, the four partial results have to be summed. Other techniques involve shuffle: examples online can be found for AVX-512 of how to do "Horizontal Sum".

Aside from the size of the program and the complexity, an additional potential problem arises if floating-point computation is involved: the fact that the values are not being summed in strict order (four partial results) could result in rounding errors.
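The ordering effect is easy to reproduce. The sketch below, with hypothetical function names, sums the same float array once in strict element order and once as four interleaved streams whose partial results are combined at the end. It assumes n is a multiple of 4 for the four-stream version and that float arithmetic is evaluated in IEEE 754 single precision with no reassociating compiler options.

```c
#include <stddef.h>

/* Strict in-order summation, as a scalar loop would perform it. */
float sum_in_order(size_t n, const float *x) {
    float y = 0.0f;
    for (size_t i = 0; i < n; i++)
        y += x[i];
    return y;
}

/* Four independent streams (lanes), then a final horizontal sum.
   Assumes n is a multiple of 4; any remainder is ignored here. */
float sum_four_streams(size_t n, const float *x) {
    float lane[4] = {0.0f, 0.0f, 0.0f, 0.0f};
    for (size_t i = 0; i + 4 <= n; i += 4)
        for (int l = 0; l < 4; l++)
            lane[l] += x[i + l];
    return (lane[0] + lane[1]) + (lane[2] + lane[3]);
}
```

For the input {1e8, 1, 1, 1, -1e8, 1, 1, 1}, the in-order sum loses the first three 1s when they are added to 1e8 and yields 3, whereas the four-stream sum cancels the two large values within one lane and yields the exact answer 6. Neither order is better in general; the point is only that the results differ.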

setvl t0, n # VL=t0=min(MVL, n)

vld32 v0, x # load vector x

vredadd32 y, v0 # reduce-add into y

The code when n is larger than the maximum vector length is not that much more complex, and is a similar pattern to the first example ("IAXPY").

set y, 0

vloop:

setvl t0, n # VL=t0=min(MVL, n)

vld32 v0, x # load vector x

vredadd32 y, y, v0 # add all x into y

add x, t0*4 # advance x by VL*4

sub n, t0 # n -= VL (t0)

bnez n, vloop # repeat if n != 0

ret y

The simplicity of the algorithm is stark in comparison to SIMD. Again, just as with the IAXPY example, the algorithm is length-agnostic (even on Embedded implementations where maximum vector length could be only one).
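A C model of the strip-mined reduction, given as a sketch, looks almost identical to the IAXPY model. MVL is again a hypothetical hardware limit, and the helper names mirror the pseudo-assembly rather than any real ISA. The reduce-add helper here sums strictly in element order, which is the conservative behaviour.

```c
#include <stddef.h>

#define MVL 8  /* hypothetical maximum vector length */

/* Models setvl: VL = min(MVL, n). */
static size_t setvl(size_t n) {
    return n < MVL ? n : MVL;
}

/* Models "vredadd32 y, y, v0": add vl elements into y, in order. */
static int vredadd32(int y, const int *v, size_t vl) {
    for (size_t i = 0; i < vl; i++)
        y += v[i];
    return y;
}

int sum_vl(size_t n, const int *x) {
    int y = 0;
    while (n != 0) {
        size_t vl = setvl(n);
        y = vredadd32(y, x, vl);  /* add all loaded elements into y */
        x += vl;                  /* advance x by VL elements */
        n -= vl;                  /* n -= VL */
    }
    return y;
}
```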

Implementations in hardware may, if they are certain that the right answer will be produced, perform the reduction in parallel. Some vector ISAs offer a parallel reduction mode as an explicit option, for when the programmer knows that any potential rounding errors do not matter, and low latency is critical.

This example again highlights a key critical fundamental difference between true vector processors and those SIMD processors, including most commercial GPUs, which are inspired by features of vector processors.

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators.

Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector supercomputers during the 1990s.

History

Early research and development

Vector processing development began in the early 1960s at the Westinghouse Electric Corporation in their ''Solomon'' project. Solomon's goal was to dramatically increase math performance by using a large number of simple coprocessors under the control of a single master central processing unit (CPU). The CPU fed a single common instruction to all of the arithmetic logic units (ALUs), one per cycle, but with a different data point for each one to work on. This allowed the Solomon machine to apply a single algorithm to a large data set, fed in the form of an array.

In 1962, Westinghouse cancelled the project, but the effort was restarted by the University of Illinois at Urbana–Champaign as the ILLIAC IV. Their version of the design originally called for a 1 GFLOPS machine with 256 ALUs, but, when it was finally delivered in 1972, it had only 64 ALUs and could reach only 100 to 150 MFLOPS. Nevertheless, it showed that the basic concept was sound, and, when used on data-intensive applications, such as computational fluid dynamics, the ILLIAC was the fastest machine in the world. The ILLIAC approach of using separate ALUs for each data element is not common to later designs, and is often referred to under a separate category, massively parallel computing. Around this time Flynn categorized this type of processing as an early form of single instruction, multiple threads (SIMT).

International Computers Limited sought to avoid many of the difficulties with the ILLIAC concept with its own Distributed Array Processor (DAP) design, categorising the ILLIAC and DAP as cellular array processors that potentially offered substantial performance benefits over conventional vector processor designs such as the CDC STAR-100 and Cray 1.

Computer for operations with functions

A computer for operations with functions was presented and developed by Kartsev in 1967.

Supercomputers

The first vector supercomputers are the Control Data Corporation STAR-100 and Texas Instruments Advanced Scientific Computer (ASC), which were introduced in 1974 and 1972, respectively.

The basic ASC (i.e., "one pipe") ALU used a pipeline architecture that supported both scalar and vector computations, with peak performance reaching approximately 20 MFLOPS, readily achieved when processing long vectors. Expanded ALU configurations supported "two pipes" or "four pipes" with a corresponding 2X or 4X performance gain. Memory bandwidth was sufficient to support these expanded modes.

The STAR-100 was otherwise slower than CDC's own supercomputers like the CDC 7600, but at data-related tasks it could keep up while being much smaller and less expensive. However, the machine also took considerable time decoding the vector instructions and getting ready to run the process, so it required very specific data sets to work on before it actually sped anything up.

The vector technique was first fully exploited in 1976 by the famous Cray-1. Instead of leaving the data in memory like the STAR-100 and ASC, the Cray design had eight vector registers, which held sixty-four 64-bit words each. The vector instructions were applied between registers, which is much faster than talking to main memory. Whereas the STAR-100 would apply a single operation across a long vector in memory and then move on to the next operation, the Cray design would load a smaller section of the vector into registers and then apply as many operations as it could to that data, thereby avoiding many of the much slower memory access operations.

The Cray design used pipeline parallelism to implement vector instructions rather than multiple ALUs. In addition, the design had completely separate pipelines for different instructions, for example, addition/subtraction was implemented in different hardware than multiplication. This allowed a batch of vector instructions to be pipelined into each of the ALU subunits, a technique they called ''vector chaining''. The Cray-1 normally had a performance of about 80 MFLOPS, but with up to three chains running it could peak at 240 MFLOPS and averaged around 150 – far faster than any machine of the era.

Other examples followed. Control Data Corporation tried to re-enter the high-end market again with its ETA-10 machine, but it sold poorly and they took that as an opportunity to leave the supercomputing field entirely. In the early and mid-1980s Japanese companies (Fujitsu, Hitachi and Nippon Electric Corporation (NEC)) introduced register-based vector machines similar to the Cray-1, typically being slightly faster and much smaller. Oregon-based Floating Point Systems (FPS) built add-on array processors for minicomputers, later building their own minisupercomputers.

Throughout, Cray continued to be the performance leader, continually beating the competition with a series of machines that led to the Cray-2, Cray X-MP and Cray Y-MP. Since then, the supercomputer market has focused much more on massively parallel processing rather than better implementations of vector processors. However, recognising the benefits of vector processing, IBM developed Virtual Vector Architecture for use in supercomputers coupling several scalar processors to act as a vector processor.

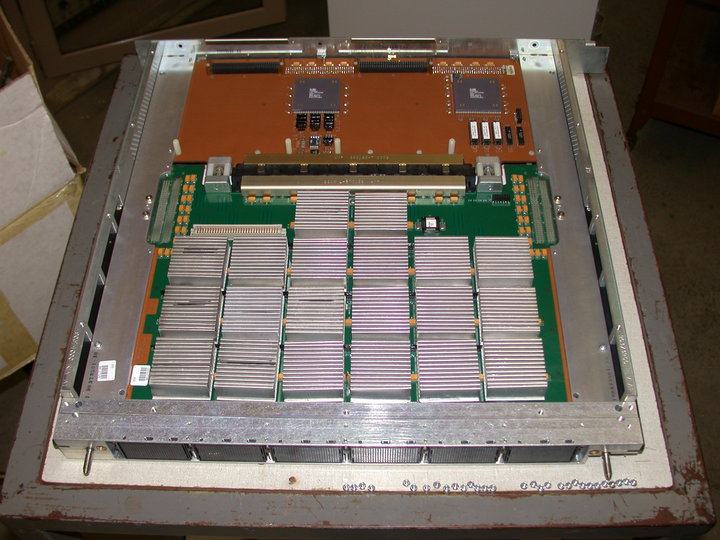

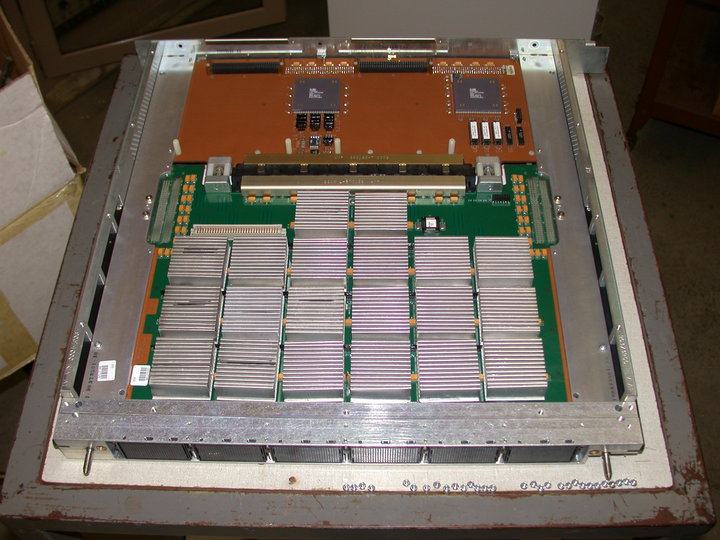

Although vector supercomputers resembling the Cray-1 are less popular these days, NEC has continued to make this type of computer up to the present day with their SX series of computers. Most recently, the SX-Aurora TSUBASA places the processor and either 24 or 48 gigabytes of memory on an HBM 2 module within a card that physically resembles a graphics coprocessor, but instead of serving as a co-processor, it is the main computer with the PC-compatible computer into which it is plugged serving support functions.

GPU

Modern graphics processing units (GPUs) include an array of shader pipelines which may be driven by compute kernels, and can be considered vector processors (using a similar strategy for hiding memory latencies). As shown in Flynn's 1972 paper, the key distinguishing factor of SIMT-based GPUs is that they have a single instruction decoder-broadcaster but that the cores receiving and executing that same instruction are otherwise reasonably normal: their own ALUs, their own register files, their own Load/Store units and their own independent L1 data caches. Thus although all cores simultaneously execute the exact same instruction in lock-step with each other, they do so with completely different data from completely different memory locations. This is ''significantly'' more complex and involved than "Packed SIMD", which is strictly limited to execution of parallel pipelined arithmetic operations only. Although the exact internal details of today's commercial GPUs are proprietary secrets, the MIAOW team was able to piece together anecdotal information sufficient to implement a subset of the AMDGPU architecture.

Recent development

Several modern CPU architectures are being designed as vector processors. The RISC-V vector extension follows similar principles as the early vector processors, and is being implemented in commercial products such as the Andes Technology AX45MPV. There are also several open source vector processor architectures being developed, including ForwardCom and Libre-SOC.

Comparison with modern architectures

Most commodity CPUs implement architectures that feature fixed-length SIMD instructions. On first inspection these can be considered a form of vector processing because they operate on multiple (vectorized, explicit length) data sets, and borrow features from vector processors. However, by definition, the addition of SIMD cannot, by itself, qualify a processor as an actual ''vector processor'', because SIMD is fixed-length, and vectors are variable-length. The difference is illustrated below with examples, showing and comparing the three categories: Pure SIMD, Predicated SIMD, and Pure Vector Processing.

* Pure (fixed) SIMD - also known as "Packed SIMD", SIMD within a register (SWAR), and Pipelined Processor in Flynn's Taxonomy. Common examples using SIMD with features inspired by vector processors include: Intel x86's MMX, SSE and AVX instructions, AMD's 3DNow! extensions, ARM NEON, Sparc's VIS extension, PowerPC's AltiVec and MIPS' MSA. In 2000, IBM, Toshiba and Sony collaborated to create the Cell processor, which is also SIMD.

* Predicated SIMD - also known as associative processing. Two notable examples which have per-element (lane-based) predication are ARM SVE2 and AVX-512

* Pure Vectors - as categorised in Duncan's taxonomy - these include the original Cray-1, Convex C-Series, NEC SX, and RISC-V RVV. Although memory-based, the CDC STAR-100 was also a vector processor.

Other CPU designs include some multiple instructions for vector processing on multiple (vectorized) data sets, typically known as MIMD (Multiple Instruction, Multiple Data) and realized with VLIW (Very Long Instruction Word) and EPIC (Explicitly Parallel Instruction Computing). The Fujitsu FR-V VLIW/vector processor combines both technologies.

Difference between SIMD and vector processors

SIMD instruction sets lack crucial features when compared to vector instruction sets. The most important of these is that vector processors, inherently by definition and design, have always been variable-length since their inception. Whereas pure (fixed-width, no predication) SIMD is often mistakenly claimed to be "vector" (because SIMD processes data which happens to be vectors), through close analysis and comparison of historic and modern ISAs, actual vector ISAs may be observed to have the following features that no SIMD ISA has:

* a way to set the vector length, such as the vsetvl instruction in RISC-V RVV, or the lvl instruction in NEC SX, without restricting the length to a power of two or to a multiple of a fixed data width.

* Iteration and reduction over elements within vectors.

Predicated SIMD (part of Flynn's taxonomy), which provides comprehensive individual element-level predicate masks on every vector instruction, as is now available in ARM SVE2 and AVX-512, almost qualifies as a vector processor. Predicated SIMD uses fixed-width SIMD ALUs but allows locally controlled (predicated) activation of units to provide the appearance of variable length vectors. Examples below help explain these categorical distinctions.

SIMD, because it uses fixed-width batch processing, is unable by design to cope with iteration and reduction. This is illustrated further with examples, below.

Additionally, vector processors can be more resource-efficient by using slower hardware and saving power, but still achieving throughput and having less latency than SIMD, through vector chaining.

Consider both a SIMD processor and a vector processor working on 4 64-bit elements, doing a LOAD, ADD, MULTIPLY and STORE sequence. If the SIMD width is 4, then the SIMD processor must LOAD four elements entirely before it can move on to the ADDs, must complete all the ADDs before it can move on to the MULTIPLYs, and likewise must complete all of the MULTIPLYs before it can start the STOREs. This is by definition and by design.

Having to perform 4-wide simultaneous 64-bit LOADs and 64-bit STOREs is very costly in hardware (256 bit data paths to memory). Having 4x 64-bit ALUs, especially MULTIPLY, likewise. To avoid these high costs, a SIMD processor would have to have 1-wide 64-bit LOAD, 1-wide 64-bit STORE, and only 2-wide 64-bit ALUs. As shown in the diagram, which assumes a multi-issue execution model, the consequences are that the operations now take longer to complete. If multi-issue is not possible, then the operations take even longer because the LD may not be issued (started) at the same time as the first ADDs, and so on. If there are only 4-wide 64-bit SIMD ALUs, the completion time is even worse: only when all four LOADs have completed may the SIMD operations start, and only when all ALU operations have completed may the STOREs begin.

A vector processor, by contrast, even if it is ''single-issue'' and uses no SIMD ALUs, only having 1-wide 64-bit LOAD, 1-wide 64-bit STORE (and, as in the Cray-1, the ability to run MULTIPLY simultaneously with ADD), may complete the four operations faster than a SIMD processor with 1-wide LOAD, 1-wide STORE, and 2-wide SIMD. This more efficient resource utilization, due to vector chaining, is a key advantage and difference compared to SIMD. SIMD, by design and definition, cannot perform chaining except to the entire group of results.

Description

In general terms, CPUs are able to manipulate one or two pieces of data at a time. For instance, most CPUs have an instruction that essentially says "add A to B and put the result in C". The data for A, B and C could be, in theory at least, encoded directly into the instruction. However, in efficient implementations things are rarely that simple. The data is rarely sent in raw form, and is instead "pointed to" by passing in an address to a memory location that holds the data. Decoding this address and getting the data out of the memory takes some time, during which the CPU traditionally would sit idle waiting for the requested data to show up. As CPU speeds have increased, this memory latency has historically become a large impediment to performance. In order to reduce the amount of time consumed by these steps, most modern CPUs use a technique known as instruction pipelining in which the instructions pass through several sub-units in turn. The first sub-unit reads the address and decodes it, the next "fetches" the values at those addresses, and the next does the math itself. With pipelining the "trick" is to start decoding the next instruction even before the first has left the CPU, in the fashion of an assembly line, so the address decoder is constantly in use. Any particular instruction takes the same amount of time to complete, a time known as the ''latency'', but the CPU can process an entire batch of operations, in an overlapping fashion, much faster and more efficiently than if it did so one at a time.

Vector processors take this concept one step further. Instead of pipelining just the instructions, they also pipeline the data itself. The processor is fed instructions that say not just to add A to B, but to add all of the numbers "from here to here" to all of the numbers "from there to there". Instead of constantly having to decode instructions and then fetch the data needed to complete them, the processor reads a single instruction from memory, and it is simply implied in the definition of the instruction ''itself'' that the instruction will operate again on another item of data, at an address one increment larger than the last. This allows for significant savings in decoding time.

To illustrate what a difference this can make, consider the simple task of adding two groups of 10 numbers together. In a normal programming language one would write a "loop" that picked up each of the pairs of numbers in turn, and then added them. To the CPU, this would look something like this:
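As a minimal sketch in C (the function name add10 and the array names a, b and c are illustrative assumptions, not taken from any particular ISA or codebase), the loop that a scalar CPU works through looks roughly like this. Each of the ten iterations causes the same few instructions to be fetched and decoded again:

```c
#include <stddef.h>

/* Scalar sketch: add two groups of 10 numbers, one pair per iteration.
   The names add10, a, b and c are illustrative only. */
void add10(const int *a, const int *b, int *c)
{
    for (size_t i = 0; i < 10; i++) {
        /* Per iteration: load a[i], load b[i], add, store c[i],
           then increment the index, compare and branch. */
        c[i] = a[i] + b[i];
    }
}
```

A vector processor would instead express the whole loop body as a single instruction covering all ten elements, so the decode cost is paid once rather than ten times.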

embedded processor designs to consider using vectors purely to gain all the other advantages, rather than go for high performance.

Additionally, in more modern vector processor ISAs, "Fail on First" or "Fault First" has been introduced (see below) which brings even more advantages.

But more than that, a high performance vector processor may have multiple functional units adding those numbers in parallel. The checking of dependencies between those numbers is not required as a vector instruction specifies multiple independent operations. This simplifies the control logic required, and can further improve performance by avoiding stalls. The math operations are thus completed far faster overall, the limiting factor being the time required to fetch the data from memory.

Not all problems can be attacked with this sort of solution. Including these types of instructions necessarily adds complexity to the core CPU. That complexity typically makes ''other'' instructions run slower, i.e., whenever it is not adding up many numbers in a row. The more complex instructions also add to the complexity of the decoders, which might slow down the decoding of the more common instructions such as normal adding. (''This can be somewhat mitigated by keeping the entire ISA to RISC principles: RVV only adds around 190 vector instructions even with the advanced features.'')

Vector processors were traditionally designed to work best only when there are large amounts of data to be worked on. For this reason, these sorts of CPUs were found primarily in supercomputers, as the supercomputers themselves were, in general, found in places such as weather prediction centers and physics labs, where huge amounts of data are "crunched". However, as shown above and demonstrated by RISC-V RVV, the ''efficiency'' of vector ISAs brings other benefits which are compelling even for embedded use-cases.

Vector instructions

The vector pseudocode example above comes with a big assumption that the vector computer can process more than ten numbers in one batch. For a greater quantity of numbers in the vector register, it becomes unfeasible for the computer to have a register that large. As a result, the vector processor either gains the ability to perform loops itself, or exposes some sort of vector control (status) register to the programmer, usually known as a vector length. The self-repeating instructions are found in early vector computers like the STAR-100, where the above action would be described in a single instruction. They are also found in the x86 architecture as the REP prefix. However, only very simple calculations can be done effectively in hardware this way without a very large cost increase. Since all operands have to be in memory for the STAR-100 architecture, the latency caused by access became huge too.

Broadcom included space in all vector operations of the Videocore IV ISA for a repeat field, but unlike the STAR-100 which uses memory for its repeats, the Videocore IV repeats are on all operations including arithmetic vector operations. The repeat length can be one of a small range of powers of two, or sourced from one of the scalar registers.

The Cray-1 introduced the idea of using processor registers to hold vector data in batches. The batch lengths (vector length, VL) could be dynamically set with a special instruction, the significance compared to Videocore IV (and, crucially as will be shown below, SIMD as well) being that the repeat length does not have to be part of the instruction encoding. This way, significantly more work can be done in each batch; the instruction encoding is much more elegant and compact as well. The only drawback is that in order to take full advantage of this extra batch processing capacity, the memory load and store speed correspondingly had to increase as well. This is sometimes claimed to be a disadvantage of Cray-style vector processors: in reality it is part of achieving high performance throughput, as seen in GPUs, which face exactly the same issue.

Modern SIMD computers claim to improve on early Cray by directly using multiple ALUs, for a higher degree of parallelism compared to only using the normal scalar pipeline. Modern vector processors (such as the SX-Aurora TSUBASA) combine both, by issuing multiple data to multiple internal pipelined SIMD ALUs, the number issued being dynamically chosen by the vector program at runtime. Masks can be used to selectively load and store data in memory locations, and those same masks can be used to selectively disable processing elements of SIMD ALUs. Some processors with SIMD (AVX-512, ARM SVE2) are capable of this kind of selective, per-element ("predicated") processing, and it is these which somewhat deserve the nomenclature "vector processor" or at least deserve the claim of being capable of "vector processing". SIMD processors without per-element predication (MMX, SSE, AltiVec) categorically do not.

Modern GPUs, which have many small compute units each with their own independent SIMD ALUs, use Single Instruction Multiple Threads (SIMT). SIMT units run from a shared single broadcast synchronised Instruction Unit. The "vector registers" are very wide and the pipelines tend to be long. The "threading" part of SIMT involves the way data is handled independently on each of the compute units.

In addition, GPUs such as the Broadcom Videocore IV and other external vector processors like the NEC SX-Aurora TSUBASA may use fewer vector units than the width implies: instead of having 64 units for a 64-number-wide register, the hardware might instead do a pipelined loop over 16 units for a hybrid approach. The Broadcom Videocore IV is also capable of this hybrid approach: nominally stating that its SIMD QPU Engine supports 16-long FP array operations in its instructions, it actually does them 4 at a time, as (another) form of "threads".

Vector instruction example

This example starts with an algorithm ("IAXPY"), first shown in scalar instructions, then SIMD, then predicated SIMD, and finally vector instructions. This incrementally helps illustrate the difference between a traditional vector processor and a modern SIMD one. The example starts with a 32-bit integer variant of the "DAXPY" function, in C:

Scalar assembler
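A minimal C sketch of IAXPY, computing y = a*x + y over 32-bit integers, is given here for reference. The function and parameter names are illustrative assumptions. Compiled without any vectorization, this loop is essentially the scalar form: one element of x and one of y per iteration.

```c
#include <stddef.h>
#include <stdint.h>

/* IAXPY sketch: y[i] = a * x[i] + y[i] for n 32-bit integers.
   Names are illustrative; overflow behaviour is not considered here. */
void iaxpy(size_t n, int32_t a, const int32_t *x, int32_t *y)
{
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];   /* load x, load y, multiply-add, store */
}
```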

The scalar version of this would load one of each of x and y, process one calculation, store one result, and loop:

Pure (non-predicated, packed) SIMD

A modern packed SIMD architecture, known by many names (listed in Flynn's taxonomy), can do most of the operation in batches. The code is mostly similar to the scalar version. It is assumed that both x and y are properly aligned here (only start on a multiple of 16) and that n is a multiple of 4, as otherwise some setup code would be needed to calculate a mask or to run a scalar version. It can also be assumed, for simplicity, that the SIMD instructions have an option to automatically repeat scalar operands, like ARM NEON can. If it does not, a "splat" (broadcast) must be used, to copy the scalar argument across a SIMD register:
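The batch structure can be modelled in plain C, under the same assumptions as above (n a multiple of 4, scalar operand repeated automatically). This is a sketch, not real SIMD code: the helper simd_madd4 and the constant SIMD_WIDTH are hypothetical stand-ins for a single 4-wide packed multiply-add instruction.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SIMD_WIDTH 4  /* assumed fixed lane count (4 x 32-bit = 128 bits) */

/* Hypothetical model of one packed multiply-add: all 4 lanes, no mask. */
static void simd_madd4(int32_t a, const int32_t *x, int32_t *y)
{
    for (int lane = 0; lane < SIMD_WIDTH; lane++)
        y[lane] = a * x[lane] + y[lane];
}

/* Packed-SIMD style IAXPY: only valid when n is a multiple of SIMD_WIDTH. */
void iaxpy_simd(size_t n, int32_t a, const int32_t *x, int32_t *y)
{
    assert(n % SIMD_WIDTH == 0);   /* otherwise a scalar tail loop is needed */
    for (size_t i = 0; i < n; i += SIMD_WIDTH)
        simd_madd4(a, x + i, y + i);   /* step is a hard-coded constant */
}
```

The hard-coded step of 4 is the point of the sketch: the batch width is baked into the program rather than chosen by the hardware.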

Predicated SIMD
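A predicated variant of the IAXPY loop can be modelled in C as below. This is a sketch under stated assumptions: simd_madd4_masked is a hypothetical stand-in for one masked 4-wide instruction, and the mask is a simple bit-per-lane integer rather than any real ISA's predicate register.

```c
#include <stddef.h>
#include <stdint.h>

#define SIMD_WIDTH 4  /* assumed fixed lane count */

/* Hypothetical model of one predicated multiply-add:
   lane k is processed only if bit k of mask is set. */
static void simd_madd4_masked(unsigned mask, int32_t a,
                              const int32_t *x, int32_t *y)
{
    for (int lane = 0; lane < SIMD_WIDTH; lane++)
        if (mask & (1u << lane))
            y[lane] = a * x[lane] + y[lane];
}

void iaxpy_predicated(size_t n, int32_t a, const int32_t *x, int32_t *y)
{
    for (size_t i = 0; i < n; i += SIMD_WIDTH) {
        size_t left = n - i;
        /* Full mask for whole batches, partial mask for the final one. */
        unsigned mask = left >= SIMD_WIDTH ? (1u << SIMD_WIDTH) - 1
                                           : (1u << left) - 1;
        simd_madd4_masked(mask, a, x + i, y + i);
    }
}
```

The loop still advances by a fixed width; only the mask changes on the last pass, which removes the need for a separate scalar tail.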

Assuming a hypothetical predicated (mask capable) SIMD ISA, and again assuming that the SIMD instructions can cope with misaligned data, the instruction loop would look like this:

Pure (true) vector ISA
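The Cray-style loop can be modelled in C as follows. The helper setvl() and the constant MVL are hypothetical stand-ins: setvl() plays the role of a set-vector-length instruction that clamps the request to the hardware maximum, and the value 16 is an arbitrary assumption that the calling code never relies on.

```c
#include <stddef.h>
#include <stdint.h>

#define MVL 16  /* hypothetical Maximum Vector Length; programs must not assume it */

/* Hypothetical stand-in for a set-vector-length instruction:
   returns min(requested, MVL), the element count for the next pass. */
static size_t setvl(size_t requested)
{
    return requested < MVL ? requested : MVL;
}

void iaxpy_vector(size_t n, int32_t a, const int32_t *x, int32_t *y)
{
    while (n > 0) {
        size_t vl = setvl(n);             /* VL chosen by the "hardware" */
        for (size_t i = 0; i < vl; i++)   /* models one vector multiply-add */
            y[i] = a * x[i] + y[i];
        x += vl;                          /* advance by VL, not by a constant */
        y += vl;
        n -= vl;
    }
}
```

No tail handling, mask computation or alignment assumption appears in the loop; the last pass simply receives a smaller VL.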

For Cray-style vector ISAs such as RVV, an instruction called "setvl" (set vector length) is used. The hardware first defines how many data values it can process in one "vector": this could be either actual registers or it could be an internal loop (the hybrid approach, mentioned above). This maximum amount (the number of hardware "lanes") is termed "MVL" (Maximum Vector Length). Note that, as seen in SX-Aurora and Videocore IV, MVL may be an actual hardware lane quantity ''or a virtual one''. ''(Note: As mentioned in the ARM SVE2 Tutorial, programmers must not make the mistake of assuming a fixed vector width: consequently MVL is not a quantity that the programmer needs to know. This can be a little disconcerting after years of SIMD mindset).'' On calling "setvl" with the number of outstanding data elements to be processed, "setvl" is permitted (essentially required) to limit that to the Maximum Vector Length (MVL) and thus returns the ''actual'' number that can be processed by the hardware in subsequent vector instructions, and sets the internal special register, "VL", to that same amount. ARM refers to this technique as "vector length agnostic" programming in its tutorials on SVE2. Below is the Cray-style vector assembler for the same SIMD style loop, above. Note that t0 (which, containing a convenient copy of VL, can vary) is used instead of hard-coded constants:

Vector reduction example

This example starts with an algorithm which involves reduction. Just as with the previous example, it will be first shown in scalar instructions, then SIMD, and finally vector instructions, starting in C:

Scalar assembler
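The reduction in question, summing the n elements of x into a single result y, can be sketched in C as below. The function name is an illustrative assumption. As with IAXPY, the unvectorized loop is also the scalar form of the algorithm.

```c
#include <stddef.h>
#include <stdint.h>

/* Sum-reduction sketch: returns x[0] + x[1] + ... + x[n-1]. */
int32_t sum_scalar(size_t n, const int32_t *x)
{
    int32_t y = 0;
    for (size_t i = 0; i < n; i++)
        y += x[i];   /* each iteration depends on the previous value of y */
    return y;
}
```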

The scalar version of this would load each of x, add it to y, and loop:

SIMD reduction
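With packed SIMD and n fixed at 8, a sum has to be assembled from element-wise adds and explicit shuffles. The C model below is a sketch only: simd_add4 and simd_shuffle4 are hypothetical stand-ins for a 4-wide add and a permute instruction, and the shuffle patterns are one possible choice.

```c
#include <stdint.h>

#define W 4  /* assumed SIMD width in 32-bit lanes */

/* Element-wise add: lane k of dst += lane k of src.
   This is the only kind of arithmetic packed SIMD offers. */
static void simd_add4(int32_t *dst, const int32_t *src)
{
    for (int k = 0; k < W; k++)
        dst[k] += src[k];
}

/* Models a shuffle/permute: dst[k] = src[idx[k]]. */
static void simd_shuffle4(int32_t *dst, const int32_t *src, const int idx[W])
{
    for (int k = 0; k < W; k++)
        dst[k] = src[idx[k]];
}

/* Sum of exactly 8 elements using only lane-wise adds and shuffles. */
int32_t sum8_simd(const int32_t *x)
{
    static const int swap_halves[W] = {2, 3, 0, 1};
    static const int swap_pairs[W]  = {1, 0, 3, 2};
    int32_t v0[W], v1[W], tmp[W];

    for (int k = 0; k < W; k++) {      /* two 4-wide loads */
        v0[k] = x[k];
        v1[k] = x[k + W];
    }
    simd_add4(v0, v1);                 /* 8 values -> 4 partial sums */
    simd_shuffle4(tmp, v0, swap_halves);
    simd_add4(v0, tmp);                /* 4 partial sums -> 2 distinct sums */
    simd_shuffle4(tmp, v0, swap_pairs);
    simd_add4(v0, tmp);                /* every lane now holds the total */
    return v0[0];
}
```

Every extra doubling of n adds another load and add, and any n that is not a multiple of the width needs further special-case code.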

This is where the problems start. SIMD by design is incapable of doing arithmetic operations "inter-element". Element 0 of one SIMD register may be added to Element 0 of another register, but Element 0 may not be added to anything other than another Element 0. This places some severe limitations on potential implementations. For simplicity it can be assumed that n is exactly 8:

Vector ISA reduction
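A vector ISA with a built-in reduction can be modelled in C as below. The names setvl(), vredsum() and MVL are hypothetical: setvl() stands in for a set-vector-length instruction and vredsum() for a single sum-reduction instruction over VL elements. The outer loop is an addition for the general case where n exceeds the maximum vector length.

```c
#include <stddef.h>
#include <stdint.h>

#define MVL 16  /* hypothetical Maximum Vector Length */

/* Hypothetical stand-in for a set-vector-length instruction. */
static size_t setvl(size_t requested)
{
    return requested < MVL ? requested : MVL;
}

/* Hypothetical model of one vector sum-reduction instruction:
   adds the first vl elements of v and returns a scalar. */
static int32_t vredsum(size_t vl, const int32_t *v)
{
    int32_t acc = 0;
    for (size_t i = 0; i < vl; i++)
        acc += v[i];
    return acc;
}

int32_t sum_vector(size_t n, const int32_t *x)
{
    int32_t y = 0;
    while (n > 0) {
        size_t vl = setvl(n);    /* set VL */
        y += vredsum(vl, x);     /* load + reduce, modelled as one call */
        x += vl;
        n -= vl;
    }
    return y;
}
```

When n fits within the maximum vector length the loop body runs once, which corresponds to the short set-length, load, reduce sequence.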

Vector instruction sets have arithmetic reduction operations ''built-in'' to the ISA. If it is assumed that n is less than or equal to the maximum vector length, only three instructions are required:

Insights from examples

Compared to any SIMD processor claiming to be a vector processor, the order of magnitude reduction in program size is almost shocking. However, this level of elegance at the ISA level has quite a high price tag at the hardware level:

# From the IAXPY example, it can be seen that unlike SIMD processors, which can simplify their internal hardware by avoiding dealing with misaligned memory access, a vector processor cannot get away with such simplification: algorithms are written which inherently rely on Vector Load and Store being successful, regardless of alignment of the start of the vector.
# Whilst from the reduction example it can be seen that, aside from permute instructions, SIMD by definition avoids inter-lane operations entirely (element 0 can only be added to another element 0), vector processors tackle this head-on. What programmers are forced to do in software (using shuffle and other tricks, to swap data into the right "lane") vector processors must do in hardware, automatically.

Overall then there is a choice to either have

# complex software and simplified hardware (SIMD)
# simplified software and complex hardware (vector processors)

These stark differences are what distinguishes a vector processor from one that has SIMD.

Vector processor features

Where many SIMD ISAs borrow or are inspired by the list below, typical features that a vector processor will have are:

* Vector Load and Store – Vector architectures with a register-to-register design (analogous to load–store architectures for scalar processors) have instructions for transferring multiple elements between the memory and the vector registers. Typically, multiple addressing modes are supported. The unit-stride addressing mode is essential; modern vector architectures typically also support arbitrary constant strides, as well as the scatter/gather (also called ''indexed'') addressing mode. Advanced architectures may also include support for ''segment'' load and stores, and ''fail-first'' variants of the standard vector load and stores. Segment loads read a vector from memory, where each element is a data structure containing multiple members. The members are extracted from each data structure (element), and each extracted member is placed into a different vector register.

* Masked Operations – predicate masks allow parallel if/then/else constructs without resorting to branches. This allows code with conditional statements to be vectorized.

* Compress and Expand – usually using a bit-mask, data is linearly compressed or expanded (redistributed) based on whether bits in the mask are set or clear, whilst always preserving the sequential order and never duplicating values (unlike Gather-Scatter aka permute). These instructions feature in AVX-512.

* Register Gather, Scatter (aka permute) – a less restrictive more generic variation of the compress/expand theme which instead takes one vector to specify the indices to use to "reorder" another vector. Gather/scatter is more complex to implement than compress/expand, and, being inherently non-sequential, can interfere with vector chaining. Not to be confused with Gather-scatter Memory Load/Store modes, Gather/scatter vector operations act on the vector registers, and are often termed a permute instruction instead.

* Splat and Extract – useful for interaction between scalar and vector, these broadcast a single value across a vector, or extract one item from a vector, respectively.

* Iota – a very simple and strategically useful instruction which drops sequentially-incrementing immediates into successive elements. Usually starts from zero.

* Reduction and Iteration – operations that perform mapreduce on a vector (for example, find the one maximum value of an entire vector, or sum all elements). Iteration is of the form x[n] = y[n] + x[n-1] where Reduction is of the form x = y[0] + y[1] + … + y[n-1].

* Matrix Multiply support – either by way of algorithmically loading data from memory, or reordering (remapping) the normally linear access to vector elements, or providing "Accumulators", arbitrary-sized matrices may be efficiently processed. IBM POWER10 provides MMA instructions, although for arbitrary matrix widths that do not fit the exact SIMD size, data repetition techniques are needed, which is wasteful of register file resources. NVidia provides a high-level Matrix CUDA API although the internal details are not available. The most resource-efficient technique is in-place reordering of access to otherwise linear vector data.

* Advanced Math formats – often includes Galois field arithmetic, but can include binary-coded decimal or decimal fixed-point, and support for much larger (arbitrary precision) arithmetic operations by supporting parallel carry-in and carry-out

* Bit manipulation – including vectorised versions of bit-level permutation operations, bitfield insert and extract, centrifuge operations, population count, and many others.
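The Compress and Expand entries in the list above can be illustrated with a small C model. This is a sketch under stated assumptions: the function names are hypothetical, and the mask is held as one byte per element rather than as a packed bit-mask.

```c
#include <stddef.h>
#include <stdint.h>

/* Compress: pack the elements of src whose mask entry is nonzero into
   the front of dst, preserving order. Returns the number packed. */
size_t vcompress(size_t vl, const uint8_t *mask,
                 const int32_t *src, int32_t *dst)
{
    size_t out = 0;
    for (size_t i = 0; i < vl; i++)
        if (mask[i])
            dst[out++] = src[i];
    return out;
}

/* Expand: the inverse. Consecutive elements of src are placed at the
   positions of dst where the mask is nonzero; other positions are left
   untouched. Returns the number of source elements consumed. */
size_t vexpand(size_t vl, const uint8_t *mask,
               const int32_t *src, int32_t *dst)
{
    size_t in = 0;
    for (size_t i = 0; i < vl; i++)
        if (mask[i])
            dst[i] = src[in++];
    return in;
}
```

Neither operation duplicates or reorders values, which is what distinguishes them from the more general register gather/scatter (permute).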

GPU vector processing features

With many 3D shader applications needing trigonometric operations as well as short vectors for common operations (RGB, ARGB, XYZ, XYZW), support for the following is typically present in modern GPUs, in addition to those found in vector processors:

* Sub-vectors – elements may typically contain two, three or four sub-elements (vec2, vec3, vec4) where any given bit of a predicate mask applies to the whole vec2/3/4, not the elements in the sub-vector. Sub-vectors are also introduced in RISC-V RVV (termed "LMUL"). Subvectors are a critical integral part of the Vulkan SPIR-V spec.

* Sub-vector Swizzle – aka "Lane Shuffling" which allows sub-vector inter-element computations without needing extra (costly, wasteful) instructions to move the sub-elements into the correct SIMD "lanes" and also saves predicate mask bits. Effectively an in-flight mini-permute of the sub-vector, this heavily features in 3D Shader binaries and is sufficiently important as to be part of the Vulkan SPIR-V spec. The Broadcom Videocore IV uses the terminology "Lane rotate" where the rest of the industry uses the term "swizzle".

* Transcendentals – trigonometric operations such as sine, cosine and logarithm obviously feature much more predominantly in 3D than in many demanding HPC workloads. Of interest, however, is that speed is far more important than accuracy in 3D for GPUs, where computation of pixel coordinates simply does not require high precision. The Vulkan specification recognises this and sets surprisingly low accuracy requirements, so that GPU hardware can reduce power usage. The concept of reducing accuracy where it is simply not needed is explored in the MIPS-3D extension.

Fault (or Fail) First

Introduced in ARM SVE2 and RISC-V RVV is the concept of speculative sequential Vector Loads. ARM SVE2 has a special register named "First Fault Register", where RVV modifies (truncates) the Vector Length (VL).

The basic principle of fault-first is to attempt a large sequential Vector Load, but to allow the hardware to arbitrarily truncate the ''actual'' amount loaded to either the amount that would succeed without raising a memory fault or simply to an amount (greater than zero) that is most convenient. The important factor is that ''subsequent'' instructions are notified or may determine exactly how many Loads actually succeeded, using that quantity to only carry out work on the data that has actually been loaded.
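The idea can be modelled in C with a string-length scan. Everything here is a hypothetical sketch rather than any real ISA's behaviour: vload_ff stands in for a fault-first vector load, MVL is an arbitrary maximum vector length, and the parameter readable is a stand-in for the distance to the first inaccessible address (for example an unmapped page).

```c
#include <stddef.h>
#include <stdint.h>

#define MVL 8  /* hypothetical Maximum Vector Length */

/* Hypothetical model of a fault-first load: tries to load `requested`
   elements but truncates to what is readable instead of faulting.
   The return value is the VL that later "instructions" must use. */
static size_t vload_ff(size_t requested, size_t readable,
                       const uint8_t *src, uint8_t *vreg)
{
    size_t vl = requested < MVL ? requested : MVL;
    if (vl > readable)
        vl = readable;              /* truncate rather than fault */
    for (size_t i = 0; i < vl; i++)
        vreg[i] = src[i];
    return vl;
}

/* strnlen-style scan that never touches bytes beyond `readable`.
   In this model the scan simply stops at the readable limit; real
   hardware would raise the fault if the very first element failed. */
size_t strnlen_ff(const uint8_t *s, size_t maxlen, size_t readable)
{
    uint8_t vreg[MVL];
    size_t done = 0;
    while (done < maxlen && done < readable) {
        size_t vl = vload_ff(maxlen - done, readable - done, s + done, vreg);
        for (size_t i = 0; i < vl; i++)   /* work only on loaded data */
            if (vreg[i] == 0)
                return done + i;
        done += vl;
    }
    return done;
}
```

The loop never asks in advance how much it may safely read; it requests as much as it wants and adapts to the length the load reports back.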

Contrast this with SIMD, which has a fixed (inflexible) load width and a fixed data processing width. SIMD loads cannot cope with crossing page boundaries, and even if they could, they cannot adapt to what actually succeeded. Paradoxically, if a SIMD program were to attempt to find out in advance (in each inner loop, every time) what might optimally succeed, those extra instructions would only hinder performance, because they would necessarily be part of the critical inner loop.

This begins to hint at the reason why fault-first is so innovative, and is best illustrated by memcpy or strcpy when implemented with standard 128-bit non-predicated SIMD. For IBM POWER9, the number of hand-optimised instructions needed to implement strncpy is in excess of 240.

By contrast, the same strncpy routine in hand-optimised RVV assembler is a mere 22 instructions.

The above SIMD example could potentially fault and fail at the end of memory, due to attempts to read too many values. It could also cause significant numbers of page faults or misaligned-access faults by similarly crossing over boundaries. In contrast, by allowing the vector architecture the freedom to decide how many elements to load, the first part of a strncpy, if it begins on a sub-optimal memory boundary, may return just enough loads that on ''subsequent'' iterations of the loop the batches of vectorised memory reads are optimally aligned with the underlying caches and virtual memory arrangements. Additionally, the hardware may choose to use the opportunity to end any given loop iteration's memory reads ''exactly'' on a page boundary (avoiding a costly second TLB lookup), with speculative execution preparing the next virtual memory page whilst data is still being processed in the current loop. All of this is determined by the hardware, not the program itself (see the ARM SVE2 paper by N. Stevens).
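The self-aligning effect can be illustrated with a toy model. This is a sketch under stated assumptions rather than the behaviour of any particular implementation: `PAGE_SIZE`, `MAX_VL`, `hw_chosen_vl` and `batch_sizes` are hypothetical, the page size is made artificially small, and the "hardware" is assumed always to stop a load at the next page boundary.

```python
PAGE_SIZE = 16  # hypothetical, deliberately tiny page size
MAX_VL = 8      # hypothetical maximum vector length, in elements


def hw_chosen_vl(addr, requested_vl):
    """Model hardware that never lets a single load cross a page boundary."""
    to_page_end = PAGE_SIZE - (addr % PAGE_SIZE)
    return min(requested_vl, MAX_VL, to_page_end)


def batch_sizes(start, n):
    """Return the per-iteration load sizes for n elements beginning at start."""
    sizes = []
    addr, remaining = start, n
    while remaining > 0:
        vl = hw_chosen_vl(addr, remaining)  # the hardware decides, not the loop
        sizes.append(vl)
        addr += vl
        remaining -= vl
    return sizes


# Start 3 elements before a page boundary and process 30 elements.
sizes = batch_sizes(start=13, n=30)  # [3, 8, 8, 8, 3]
```

Starting at the misaligned address 13, the first iteration loads only 3 elements, which brings the address to a page boundary. Every later full-width load then starts on a multiple of `MAX_VL` and none straddles a page. The loop itself contains no alignment logic; it simply advances by whatever length the model returns.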


Performance and speed up

Let ''r'' be the vector speed ratio and ''f'' be the vectorization ratio. If the vector unit adds an array of 64 numbers 10 times faster than its equivalent scalar counterpart, then r = 10. Also, if the total number of operations in a program is 100, of which only 10 are scalar (after vectorization), then f = 0.9, i.e., 90% of the work is done by the vector unit. The achievable speed-up is then: