|

Massively Parallel

Massively parallel is the term for using a large number of computer processors (or separate computers) to simultaneously perform a set of coordinated computations in parallel. GPUs are massively parallel architecture with tens of thousands of threads. One approach is grid computing, where the processing power of many computers in distributed, diverse administrative domains is opportunistically used whenever a computer is available.''Grid computing: experiment management, tool integration, and scientific workflows'' by Radu Prodan, Thomas Fahringer 2007 pages 1–4 An example is BOINC, a volunteer-based, opportunistic grid system, whereby the grid provides power only on a best effort basis.''Parallel and Distributed Computational Intelligence'' by Francisco Fernández de Vega 2010 pages 65–68 Another approach is grouping many processors in close proximity to each other, as in a computer cluster. In such a centralized system the speed and flexibility of the interconnect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Processor (computing)

In computing and computer science, a processor or processing unit is an electrical component (digital circuit) that performs operations on an external data source, usually memory or some other data stream. It typically takes the form of a microprocessor, which can be implemented on a single or a few tightly integrated metal–oxide–semiconductor integrated circuit chips. In the past, processors were constructed using multiple individual vacuum tubes, multiple individual transistors, or multiple integrated circuits. The term is frequently used to refer to the central processing unit (CPU), the main processor in a system. However, it can also refer to other coprocessors, such as a graphics processing unit (GPU). Traditional processors are typically based on silicon; however, researchers have developed experimental processors based on alternative materials such as carbon nanotubes, graphene, diamond, and alloys made of elements from groups three and five of the periodic tabl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Warehouse Appliance

In computing, the term data warehouse appliance (DWA) was coined by Foster Hinshaw for a database machine architecture for data warehouses (DW) specifically marketed for big data analysis and discovery that is simple to use (not a pre-configuration) and has a high performance for the workload. A DWA includes an integrated set of servers, storage, operating systems, and databases. In marketing, the term evolved to include pre-installed and pre-optimized hardware and software as well as similar software-only systems promoted as easy to install on specific recommended hardware configurations or preconfigured as a complete system. These are marketing uses of the term and do not reflect the technical definition. A DWA is designed specifically for high performance big data analytics and is delivered as an easy-to-use packaged system. DW appliances are marketed for data volumes in the terabyte to petabyte range. Technology The data warehouse appliance (DWA) has several characteristics w ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Manycore Processor

Manycore processors are special kinds of multi-core processors designed for a high degree of parallel processing, containing numerous simpler, independent processor cores (from a few tens of cores to thousands or more). Manycore processors are used extensively in embedded computers and high-performance computing. Contrast with multicore architecture Manycore processors are distinct from multi-core processors in being optimized from the outset for a higher degree of explicit parallelism, and for higher throughput (or lower power consumption) at the expense of latency and lower single-thread performance. The broader category of multi-core processors, by contrast, are usually designed to efficiently run ''both'' parallel ''and'' serial code, and therefore place more emphasis on high single-thread performance (e.g. devoting more silicon to out-of-order execution, deeper pipelines, more superscalar execution units, and larger, more general caches), and shared memory. These techn ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

CUDA

In computing, CUDA (Compute Unified Device Architecture) is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs. CUDA was created by Nvidia in 2006. When it was first introduced, the name was an acronym for ''Compute Unified Device Architecture'', but Nvidia later dropped the common use of the acronym and now rarely expands it. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications. CUDA is designed to work with programming languages such as C, C++, Fortran, Python and Julia. This accessibility makes ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cellular Automaton

A cellular automaton (pl. cellular automata, abbrev. CA) is a discrete model of computation studied in automata theory. Cellular automata are also called cellular spaces, tessellation automata, homogeneous structures, cellular structures, tessellation structures, and iterative arrays. Cellular automata have found application in various areas, including physics, theoretical biology and microstructure modeling. A cellular automaton consists of a regular grid of ''cells'', each in one of a finite number of ''State (computer science), states'', such as ''on'' and ''off'' (in contrast to a coupled map lattice). The grid can be in any finite number of dimensions. For each cell, a set of cells called its ''neighborhood'' is defined relative to the specified cell. An initial state (time ''t'' = 0) is selected by assigning a state for each cell. A new ''generation'' is created (advancing ''t'' by 1), according to some fixed ''rule'' (generally, a mathematical function) that dete ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

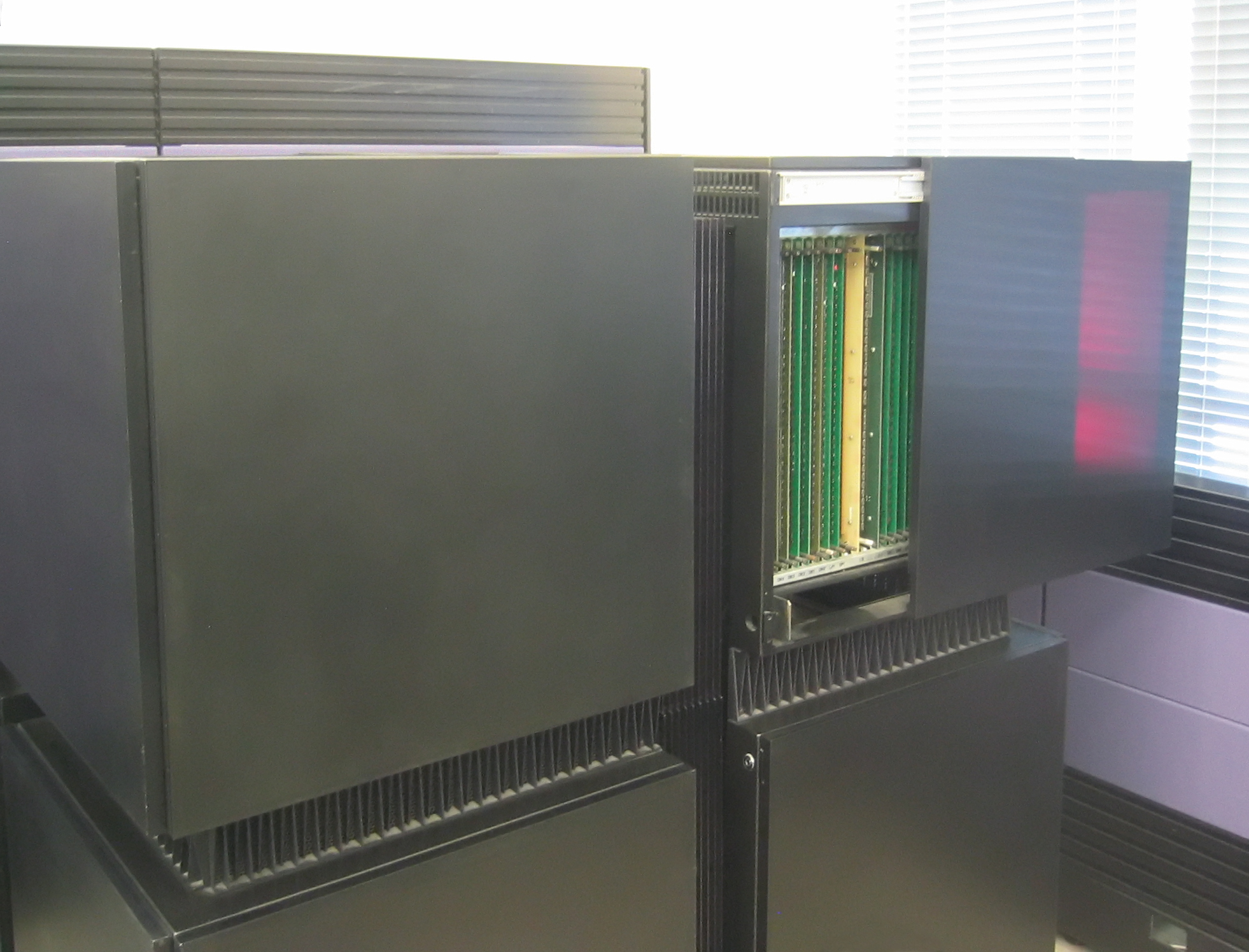

Connection Machine

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel Hypercube internetwork topology, hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Symmetric Multiprocessing

Symmetric multiprocessing or shared-memory multiprocessing (SMP) involves a multiprocessor computer hardware and software architecture where two or more identical processors are connected to a single, shared main memory, have full access to all input and output devices, and are controlled by a single operating system instance that treats all processors equally, reserving none for special purposes. Most multiprocessor systems today use an SMP architecture. In the case of multi-core processors, the SMP architecture applies to the cores, treating them as separate processors. Professor John D. Kubiatowicz considers traditionally SMP systems to contain processors without caches. Culler and Pal-Singh in their 1998 book "Parallel Computer Architecture: A Hardware/Software Approach" mention: "The term SMP is widely used but causes a bit of confusion. ..The more precise description of what is intended by SMP is a shared memory multiprocessor where the cost of accessing a memory location ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Shared-nothing Architecture

A shared-nothing architecture (SN) is a distributed computing architecture in which each update request is satisfied by a single node (processor/memory/storage unit) in a computer cluster. The intent is to eliminate contention among nodes. Nodes do not share (independently access) the same memory or storage. One alternative architecture is shared everything, in which requests are satisfied by arbitrary combinations of nodes. This may introduce contention, as multiple nodes may seek to update the same data at the same time. It also contrasts with shared-disk and shared-memory architectures. SN eliminates single points of failure, allowing the overall system to continue operating despite failures in individual nodes and allowing individual nodes to upgrade hardware or software without a system-wide shutdown. A SN system can scale simply by adding nodes, since no central resource bottlenecks the system. In databases, a term for the part of a database on a single node is a '' sha ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Process-oriented Programming

Process-oriented programming is a programming paradigm that separates the concerns of data structures and the concurrent processes that act upon them. The data structures in this case are typically persistent, complex, and large scale - the subject of general purpose applications, as opposed to specialized processing of specialized data sets seen in high productivity applications (HPC). The model allows the creation of large scale applications that partially share common data sets. Programs are functionally decomposed into parallel processes that create and act upon logically shared data. The paradigm was originally invented for parallel computers in the 1980s, especially computers built with transputer microprocessors by INMOS, or similar architectures. Occam was an early process-oriented language developed for the Transputer. Some derivations have evolved from the message passing paradigm of Occam to enable uniform efficiency when porting applications between distributed memory ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Embarrassingly Parallel

In parallel computing, an embarrassingly parallel workload or problem (also called embarrassingly parallelizable, perfectly parallel, delightfully parallel or pleasingly parallel) is one where little or no effort is needed to split the problem into a number of parallel tasks. This is due to minimal or no dependency upon communication between the parallel tasks, or for results between them.Section 1.4.4 of: These differ from distributed computing problems, which need communication between tasks, especially communication of intermediate results. They are easier to perform on server farms which lack the special infrastructure used in a true supercomputer cluster. They are well-suited to large, Internet-based volunteer computing platforms such as BOINC, and suffer less from parallel slowdown. The opposite of embarrassingly parallel problems are inherently serial problems, which cannot be parallelized at all. A common example of an embarrassingly parallel problem is 3D video renderi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multiprocessing

Multiprocessing (MP) is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined ( multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). A multiprocessor is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. A 2009 textbook defined multiprocessor system similarly, but noted that the processors may share "some or all of the system’s memory and I/O facilities"; it also gave tightly coupled system as a synonymous term. At the operating system level, ''multiprocessing'' is sometimes used to refer to the executi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |