
Single Instruction, Multiple Threads

Single instruction, multiple threads (SIMT) is an execution model used in parallel computing in which single instruction, multiple data (SIMD) is combined with zero-overhead multithreading, i.e. multithreading where the hardware can switch between threads on a cycle-by-cycle basis. Two models of multithreading are involved. In addition to the zero-overhead multithreading just mentioned, the SIMD execution hardware is virtualized to represent a multiprocessor. It is more restricted than an SPMD processor, however: instructions in all "threads" are executed in lock-step in the lanes of the SIMD processor, which can only execute the same instruction in a given cycle across all lanes. The SIMT execution model has been implemented on several GPUs and is relevant for general-purpose computing on graphics processing units (GPGPU), e.g. in some supercomputers. A supercomputer is a type of computer with a high level of performance compared to a general-purpose computer. The performance ...
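
The lock-step restriction described above can be illustrated with a small simulation. This is a minimal sketch in plain Python, not GPU code: the function name `simt_branch` and the idea of counting "passes" are assumptions made for illustration. It shows how lanes that disagree on a branch force the hardware to issue both sides of the branch one after the other, with some lanes masked off each time.

```python
from typing import Callable, List, Tuple


def simt_branch(values: List[int],
                predicate: Callable[[int], bool],
                then_op: Callable[[int], int],
                else_op: Callable[[int], int]) -> Tuple[List[int], int]:
    """Simulate an if/else executed in lock-step across all lanes.

    Returns the per-lane results and the number of instruction passes
    that had to be issued (hypothetical cost measure for this sketch).
    """
    mask = [predicate(v) for v in values]  # per-lane active mask
    result = list(values)
    passes = 0

    # Pass 1: the "then" instruction is issued once for the whole group;
    # only lanes whose mask bit is set commit a result, the rest idle.
    if any(mask):
        passes += 1
        for lane, active in enumerate(mask):
            if active:
                result[lane] = then_op(values[lane])

    # Pass 2: the "else" instruction is issued for the remaining lanes.
    if not all(mask):
        passes += 1
        for lane, active in enumerate(mask):
            if not active:
                result[lane] = else_op(values[lane])

    return result, passes


def is_even(v: int) -> bool:
    return v % 2 == 0


# Lanes disagree on the branch: both sides are issued (2 passes).
divergent = simt_branch([1, 2, 3, 4], is_even, lambda v: v * 10, lambda v: v + 1)
# All lanes agree: only one side is issued (1 pass).
uniform = simt_branch([2, 4, 6, 8], is_even, lambda v: v * 10, lambda v: v + 1)
```

In this sketch the divergent call needs two passes while the uniform call needs one, which is the cost that an SPMD processor with independent instruction streams would not pay.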

Parallel Computing

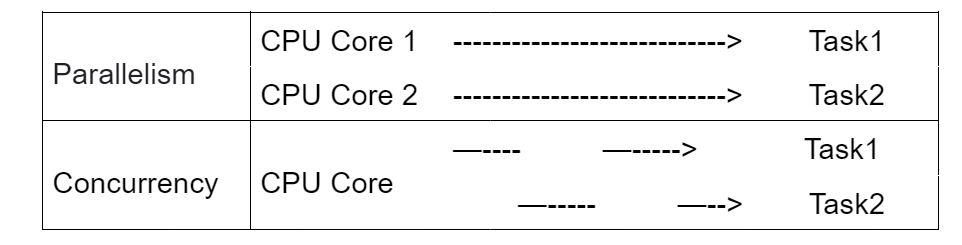

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. Reference: S.V. Adve et al. (November 2008), "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ..."
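
The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched as a chunked sum. This is only an illustration of the data-parallel pattern: the helper names `split` and `parallel_sum` are hypothetical, and because CPython threads share one interpreter lock, this particular version demonstrates the structure rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split(data: Sequence[int], parts: int) -> List[Sequence[int]]:
    """Divide data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum(data: Sequence[int], workers: int = 4) -> int:
    """Sum each chunk in a separate worker, then combine the partial sums."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum, chunks))  # one sub-problem per chunk
    return sum(partials)  # final, sequential reduction step


total = parallel_sum(range(1, 101))
```

The same split, solve, and combine structure carries over to worker processes or GPU kernels, where the chunks really do execute simultaneously.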

GDDR SDRAM

Graphics DDR SDRAM (GDDR SDRAM) is a type of synchronous dynamic random-access memory (SDRAM) specifically designed for applications requiring high bandwidth, e.g. graphics processing units (GPUs). GDDR SDRAM is distinct from the more widely known types of DDR SDRAM, such as DDR4 and DDR5, although they share some of the same features, including double data rate (DDR) data transfers. GDDR SDRAM has been succeeded by GDDR2, GDDR3, GDDR4, GDDR5, GDDR5X, GDDR6, GDDR6X, GDDR6W and GDDR7. Generations (images: Hynix GDDR SDRAM; a Samsung GDDR3 256 Mbit package; a 512 Mbit Qimonda GDDR3 SDRAM package; inside a Samsung GDDR3 256 Mbit package). DDR SGRAM: GDDR was init ...

GPGPU

General-purpose computing on graphics processing units (GPGPU, or less often GPGP) is the use of a graphics processing unit (GPU), which typically handles computation only for computer graphics, to perform computation in applications traditionally handled by the central processing unit (CPU). The use of multiple video cards in one computer, or large numbers of graphics chips, further parallelizes the already parallel nature of graphics processing. Essentially, a GPGPU pipeline is a kind of parallel processing between one or more GPUs and CPUs that analyzes data as if it were in image or other graphic form. While GPUs operate at lower frequencies, they typically have many times the number of cores. Thus, GPUs can process far more pictures and graphical data per second than a traditional CPU. Migrating data into graphical form and then using the GPU to scan and analyze it can create a large speedup. GPGPU pipelines were developed at the beginning of the 21st century for grap ...

Classes Of Computers

Computers can be classified, or typed, in many ways. Some common classifications of computers are given below. Classes by purpose: Microcomputers (personal computers). Microcomputers became the most common type of computer in the late 20th century. The term "microcomputer" was introduced with the advent of systems based on single-chip microprocessors. The best-known early system was the Altair 8800, introduced in 1975. The term "microcomputer" has practically become an anachronism as it has fallen into disuse. These computers include:
* Desktop computers: A case put under or on a desk. The display may be optional, depending on use. The case size may vary, depending on the required expansion slots. Very small computers of this kind may be integrated into the monitor.
* Rackmount computers: The cases of these computers fit into 19-inch racks, and may be space-optimized and very flat. A dedicated display, keyboard, and ...

Thread Block (CUDA Programming)

A thread block is a programming abstraction that represents a group of threads that can be executed serially or in parallel. For better process and data mapping, threads are grouped into thread blocks. The number of threads in a thread block was formerly limited by the architecture to a total of 512 threads per block, but as of March 2010, with compute capability 2.x and higher, blocks may contain up to 1024 threads. The threads in the same thread block run on the same streaming multiprocessor. Threads in the same block can communicate with each other via shared memory, barrier synchronization or other synchronization primitives such as atomic operations. Multiple blocks are combined to form a grid. All the blocks in the same grid contain the same number of threads. The number of threads in a block is limited, but grids can be used for computations that require a large number of thread blocks to operate in parallel and to use al ...
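
The relationship between threads, blocks, and the grid can be sketched with a plain Python simulation. This is not the CUDA API: `blocks_needed` and `launch_scale` are hypothetical names, and the nested loops run serially where a GPU would run the threads in parallel. The sketch shows how a global element index is commonly derived from a block index and a thread index, and why the last block needs a bounds check.

```python
from typing import List

MAX_THREADS_PER_BLOCK = 1024  # limit quoted above for compute capability 2.x and higher


def blocks_needed(n_items: int, threads_per_block: int) -> int:
    """Number of equally sized blocks needed to cover n_items (ceiling division)."""
    if not 0 < threads_per_block <= MAX_THREADS_PER_BLOCK:
        raise ValueError("threads_per_block must be between 1 and 1024")
    return (n_items + threads_per_block - 1) // threads_per_block


def launch_scale(data: List[float], factor: float,
                 threads_per_block: int) -> List[float]:
    """Simulate a grid in which each thread scales one element of data."""
    out = list(data)
    grid_size = blocks_needed(len(data), threads_per_block)
    for block_idx in range(grid_size):                # every block in the grid
        for thread_idx in range(threads_per_block):   # every thread in the block
            i = block_idx * threads_per_block + thread_idx  # global index
            if i < len(data):  # guard: the last block may have idle threads
                out[i] = data[i] * factor
    return out


scaled = launch_scale([1.0, 2.0, 3.0, 4.0, 5.0], 2.0, threads_per_block=2)
```

With five elements and two threads per block, the simulated grid has three blocks, and the second thread of the last block does no work.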

Graphics Core Next

Graphics Core Next (GCN) is the codename for a series of microarchitectures and an instruction set architecture that were developed by AMD for its GPUs as the successor to its TeraScale microarchitecture. The first product featuring GCN was launched on January 9, 2012. GCN is a reduced instruction set SIMD microarchitecture, in contrast to the very long instruction word SIMD architecture of TeraScale. GCN requires considerably more transistors than TeraScale, but offers advantages for general-purpose GPU (GPGPU) computation due to a simpler compiler. GCN graphics chips were fabricated with CMOS at 28 nm, and with FinFET at 14 nm (by Samsung Electronics and GlobalFoundries) and 7 nm (by TSMC), available on selected models in AMD's Radeon HD 7000, HD 8000, 200, 300, 400, 500 and Vega series of graphics cards, including the separately released Radeon VII. GCN was also used in the graphics portion of Accelerated Processing Units (APUs), including those in the PlayStation ...

OpenCL

OpenCL (Open Computing Language) is a framework for writing programs that execute across heterogeneous platforms consisting of central processing units (CPUs), graphics processing units (GPUs), digital signal processors (DSPs), field-programmable gate arrays (FPGAs) and other processors or hardware accelerators. OpenCL specifies a programming language (based on C99) for programming these devices and application programming interfaces (APIs) to control the platform and execute programs on the compute devices. OpenCL provides a standard interface for parallel computing using task- and data-based parallelism. OpenCL is an open standard maintained by the Khronos Group, a non-profit, open standards organisation. Conformant implementations (those that have passed the Conformance Test Suite) are available from a range of companies including AMD, Arm, Caden ...

CUDA

In computing, CUDA (Compute Unified Device Architecture) is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs. CUDA was created by Nvidia in 2006. When it was first introduced, the name was an acronym for Compute Unified Device Architecture, but Nvidia later dropped the common use of the acronym and now rarely expands it. CUDA is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements for the execution of compute kernels. In addition to drivers and runtime kernels, the CUDA platform includes compilers, libraries and developer tools to help programmers accelerate their applications. CUDA is designed to work with programming languages such as C, C++, Fortran, Python and Julia. This accessibility makes ...