MapReduce

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel and distributed algorithm on a cluster. A MapReduce program is composed of a map procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a reduce method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The "MapReduce System" (also called "infrastructure" or "framework") orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing for redundancy and fault tolerance. The model is a specialization of the split-apply-combine strategy for data analysis. It is inspired by the map and reduce functions commonly used in functional programming, "Our abstracti ...
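The student example above can be sketched on a single machine. This is only an illustration of the map, group, and reduce steps, not how any particular MapReduce framework is implemented; the function names (`map_phase`, `shuffle`, `reduce_phase`, `name_frequencies`) and the sample records are assumptions made for the sketch.

```python
from collections import defaultdict


def map_phase(students):
    """Emit one (first_name, 1) pair per student record."""
    for first_name, _last_name in students:
        yield first_name, 1


def shuffle(pairs):
    """Group emitted values by key: one 'queue' per first name."""
    queues = defaultdict(list)
    for key, value in pairs:
        queues[key].append(value)
    return queues


def reduce_phase(queues):
    """Summarize each queue by counting its entries."""
    return {name: sum(values) for name, values in queues.items()}


def name_frequencies(students):
    """Run the three steps in sequence (hypothetical helper)."""
    return reduce_phase(shuffle(map_phase(students)))


# Hypothetical sample data: (first name, last name) pairs.
students = [("Ana", "Lee"), ("Bo", "Kim"), ("Ana", "Diaz")]
print(name_frequencies(students))  # {'Ana': 2, 'Bo': 1}
```

In a real deployment the framework would run the map and reduce steps on many servers and handle the grouping and data transfer between them; here everything runs in one process.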

Apache Hadoop

Apache Hadoop is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with the fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework.

Overview: The core of Apache Hadoop consists of a storage part, known as the Hadoop Distributed File System (HDFS), and a processing part, which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This approach takes advantage of data locality, where nodes manipulate ...

Big Data

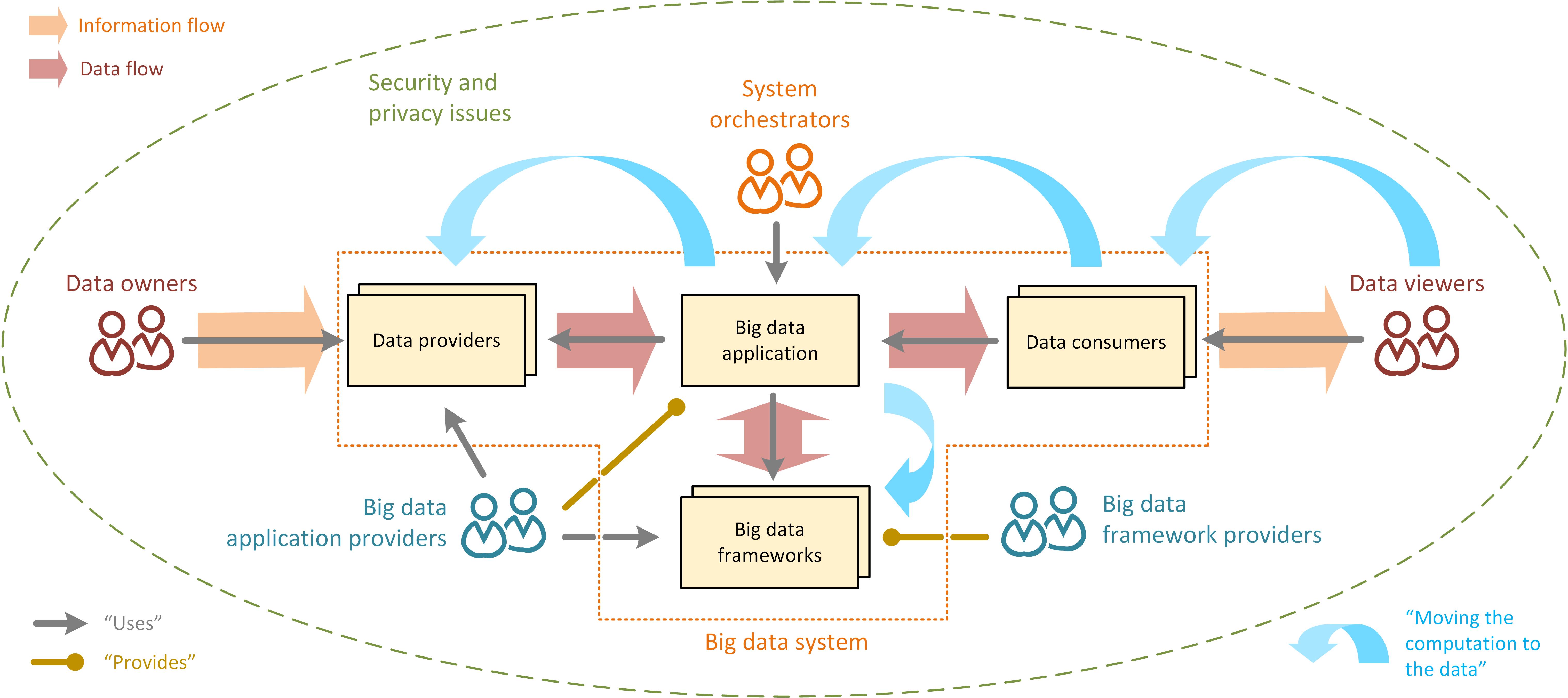

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: volume, variety, and velocity. The analysis of big data presents challenges in sampling, where previously only observations and sampling were possible. Thus a fourth concept, veracity, refers to the quality or insightfulness of the data. Without sufficient investm ...

Reduce (parallel Pattern)

In computer science, the reduction operator is a type of operator that is commonly used in parallel programming to reduce the elements of an array into a single result. Reduction operators are associative and often (but not necessarily) commutative. The reduction of sets of elements is an integral part of programming models such as MapReduce, where a reduction operator is applied (mapped) to all elements before they are reduced. Other parallel algorithms use reduction operators as primary operations to solve more complex problems. Many reduction operators can be used for broadcasting to distribute data to all processors.

Theory: A reduction operator can help break down a task into various partial tasks by calculating partial results which can be used to obtain a final result. It allows certain serial operations to be performed in parallel and the ...
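The idea of computing partial results and then combining them can be illustrated with a short sketch. The helper `chunked_reduce` is a hypothetical name, the chunks are reduced sequentially here rather than on separate processors, and the input is assumed to be non-empty. Because the operator is associative, reducing each chunk first and then reducing the partial results gives the same answer as one left-to-right pass.

```python
from functools import reduce
import operator


def chunked_reduce(op, items, chunks):
    """Reduce each chunk independently, then reduce the partial results.

    Assumes `op` is associative and `items` is a non-empty list.
    Each chunk could be handed to a different worker; this sketch
    processes them one after another.
    """
    size = max(1, -(-len(items) // chunks))  # ceiling division
    partials = [
        reduce(op, items[i:i + size]) for i in range(0, len(items), size)
    ]
    return reduce(op, partials)


data = [3, 1, 4, 1, 5, 9, 2, 6]
print(chunked_reduce(operator.add, data, chunks=3))  # 31
print(chunked_reduce(max, data, chunks=3))           # 9

# String concatenation is associative but not commutative; the result
# stays correct because the chunk order is preserved.
print(chunked_reduce(operator.add, ["a", "b", "c", "d"], chunks=2))  # abcd
```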

Apache Mahout

Apache Mahout is a project of the Apache Software Foundation to produce free implementations of distributed or otherwise scalable machine learning algorithms focused primarily on linear algebra. In the past, many of the implementations used the Apache Hadoop platform; today, however, the project is primarily focused on Apache Spark. Mahout also provides Java/Scala libraries for common math operations (focused on linear algebra and statistics) and primitive Java collections. Mahout is a work in progress; a number of algorithms have been implemented.

Features (Samsara): Apache Mahout-Samsara refers to a Scala domain-specific language (DSL) that allows users to use R-like syntax as opposed to traditional Scala-like syntax. This allows users to express algorithms concisely and clearly:

`val G = B %*% B.t - C - C.t + (ksi dot ksi) * (s_q cross s_q)`

Features (backend agnostic): Apache Mahout's code abstracts the domain-specific language from the engine where the code is run. While active development ...

Programming Model

A programming model is an execution model coupled to an API or a particular pattern of code. In this style, there are actually two execution models in play: the execution model of the base programming language and the execution model of the programming model. An example is Spark, where Java is the base language and Spark is the programming model. Execution may be based on what appear to be library calls. Other examples include the POSIX Threads library and Hadoop's MapReduce. In both cases, the execution model of the programming model is different from that of the base language in which the code is written. For example, the C programming language has no behavior in its execution model for input/output or thread behavior. But such behavior can be invoked from C syntax, by making what appears to be a call to a normal C library. What distinguishes a programming model from a normal library is that the behavior of the call cannot be understood in terms of the language the ...

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ...

Shard (database Architecture)

A database shard, or simply a shard, is a horizontal partition of data in a database or search engine. Each shard may be held on a separate database server instance, to spread load. Some data in a database remains present in all shards, but some appears only in a single shard. Each shard acts as the single source for this subset of data.

Database architecture: Horizontal partitioning is a database design principle whereby rows of a database table are held separately, rather than being split into columns (which is what normalization and vertical partitioning do, to differing extents). Each partition forms part of a shard, which may in turn be located on a separate database server or physical location. There are numerous advantages to the horizontal partitioning of data. Since tables are divided and distributed across multiple servers, the total number of rows in each table in each database is reduced. This reduces index size, which generally improves search performance. A ...
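As a rough illustration of horizontal partitioning, the sketch below assigns whole rows to shards by hashing a key column. The text above does not say how rows are routed to shards, so the hash-based routing, the helper names (`shard_for`, `partition_rows`), and the sample table are all assumptions; real systems may instead use key ranges or lookup tables.

```python
import zlib


def shard_for(key, num_shards):
    """Pick a shard index from a row key using a stable hash (assumed scheme)."""
    return zlib.crc32(str(key).encode("utf-8")) % num_shards


def partition_rows(rows, key_field, num_shards):
    """Split a table (list of row dicts) into per-shard lists of whole rows."""
    shards = [[] for _ in range(num_shards)]
    for row in rows:
        shards[shard_for(row[key_field], num_shards)].append(row)
    return shards


# Hypothetical table with ten rows, partitioned across three shards.
rows = [{"id": i, "name": f"user{i}"} for i in range(10)]
shards = partition_rows(rows, "id", 3)
for index, shard in enumerate(shards):
    print(index, [row["id"] for row in shard])
```

Each row lands in exactly one shard, so every shard holds fewer rows than the original table, which is the property the paragraph above ties to smaller indexes.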

Map (parallel Pattern)

Map is an idiom in parallel computing where a simple operation is applied to all elements of a sequence, potentially in parallel. It is used to solve embarrassingly parallel problems: those problems that can be decomposed into independent subtasks, requiring no communication/synchronization between the subtasks except a join or barrier at the end. When applying the map pattern, one formulates an elemental function that captures the operation to be performed on a data item that represents a part of the problem, then applies this elemental function in one or more threads of execution, hyperthreads, SIMD lanes or on multiple computers. Some parallel programming systems, such as OpenMP and Cilk, have language support for the map pattern in the form of a parallel for loop; languages such as OpenCL and CUDA support elemental functions (as "kernels") at the language level. The map pattern is typically combined with other parallel design patterns. For example, map combined with ...
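A minimal sketch of the map pattern, using Python's standard `concurrent.futures` thread pool as one possible way to apply an elemental function to every item. The names `elemental` and `parallel_map` and the squaring operation are illustrative assumptions; the only synchronization is the implicit join when the pool shuts down.

```python
from concurrent.futures import ThreadPoolExecutor


def elemental(x):
    """Elemental function: the independent operation applied to one item."""
    return x * x


def parallel_map(func, items, workers=4):
    """Apply `func` to every item using a pool of worker threads.

    Executor.map returns results in input order; leaving the `with`
    block waits for all workers, acting as the join at the end.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(func, items))


squares = parallel_map(elemental, range(6))
print(squares)  # [0, 1, 4, 9, 16, 25]
```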

Generic Trademark

A generic trademark, also known as a genericized trademark or proprietary eponym, is a trademark or brand name that, because of its popularity or significance, has become the generic term for, or synonymous with, a general class of products or services, usually against the intentions of the trademark's owner. A trademark is prone to genericization, or "genericide", when a brand name acquires substantial market dominance or mind share, becoming so widely used for similar products or services that it is no longer associated with the trademark owner, e.g., linoleum, bubble wrap, thermos, and aspirin. A trademark thus popularized is at risk of being challenged or revoked, unless the trademark owner works sufficiently to correct and prevent such broad use. Trademark owners can inadvertently contribute to genericization by failing to provide an alternative generic name for their product or service or using the trademark in similar fashion to generic terms. In one example, the Oti ...

Petabyte

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol refer to an 8-bit byte as an octet. The bits in an octet are usually numbered from 0 to 7 or from 7 to 0, depending on the bit endianness. The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used. The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding to ...

Server Farm

A server farm or server cluster is a collection of computer servers, usually maintained by an organization to supply server functionality far beyond the capability of a single machine. They often consist of thousands of computers which require a large amount of power to run and to keep cool. At the optimum performance level, a server farm has enormous financial and environmental costs. They often include backup servers that can take over the functions of primary servers that may fail. Server farms are typically collocated with the network switches and/or routers that enable communication between different parts of the cluster and the cluster's users. Server "farmers" typically mount computers, routers, power supplies and related electronics on 19-inch racks in a server room or data center.

Applications: Server farms are commonly used for cluster computing. Many modern supercomputers comprise giant server farms of high-speed Processor (comput ...