In

linear algebra

Linear algebra is the branch of mathematics concerning linear equations such as:

:a_1x_1+\cdots +a_nx_n=b,

linear maps such as:

:(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots +a_nx_n,

and their representations in vector spaces and through matrices.

...

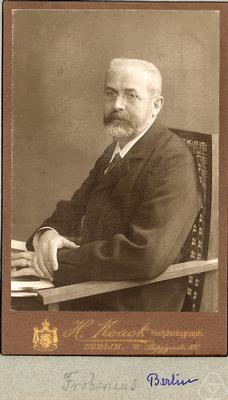

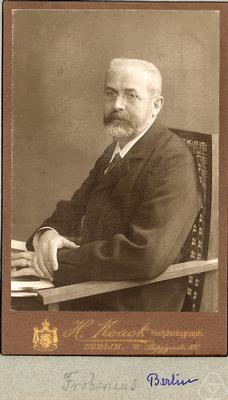

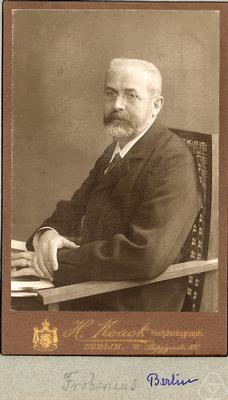

, the Cayley–Hamilton theorem (named after the mathematicians

Arthur Cayley

Arthur Cayley (; 16 August 1821 – 26 January 1895) was a prolific United Kingdom of Great Britain and Ireland, British mathematician who worked mostly on algebra. He helped found the modern British school of pure mathematics.

As a child, C ...

and

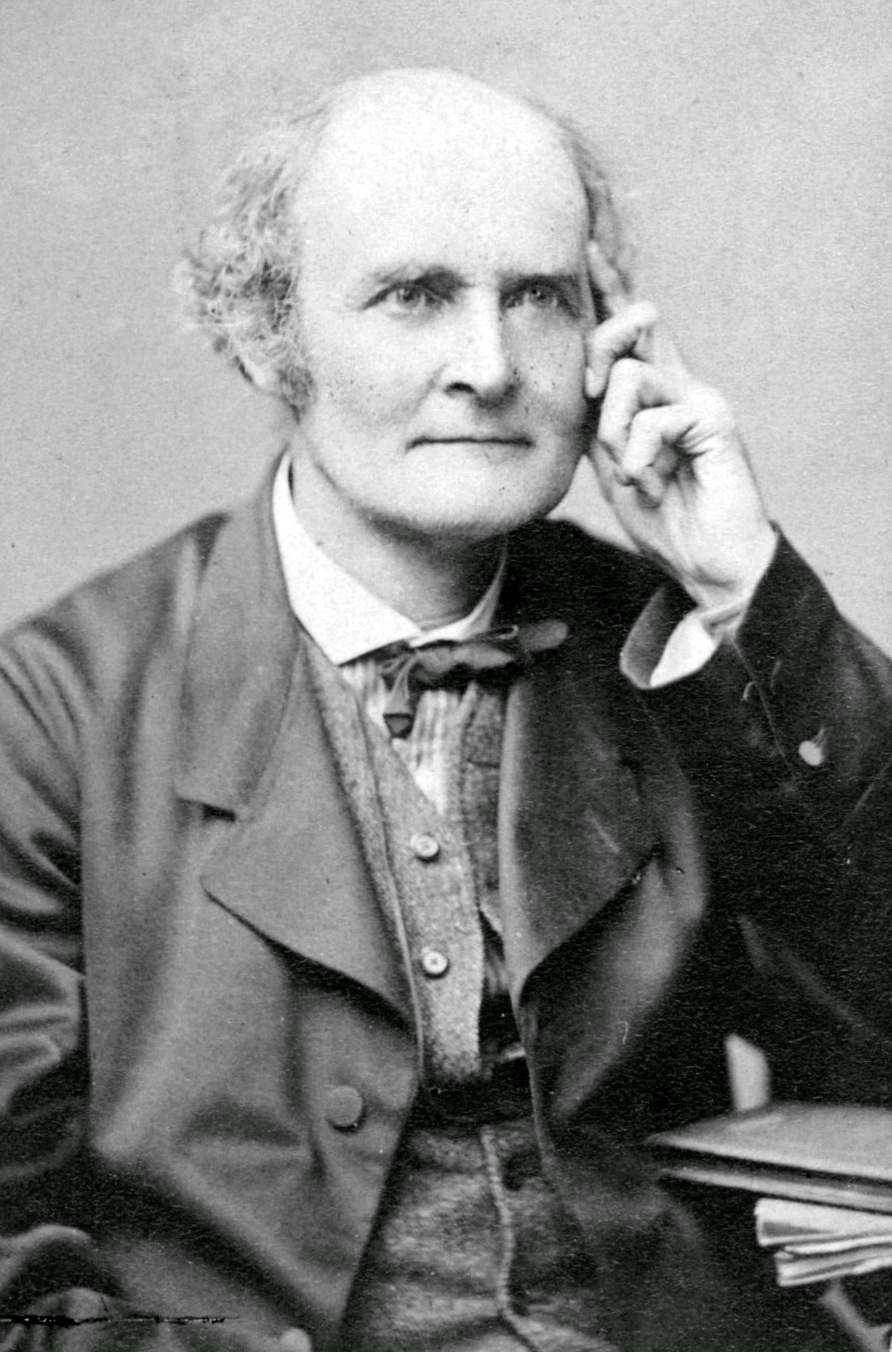

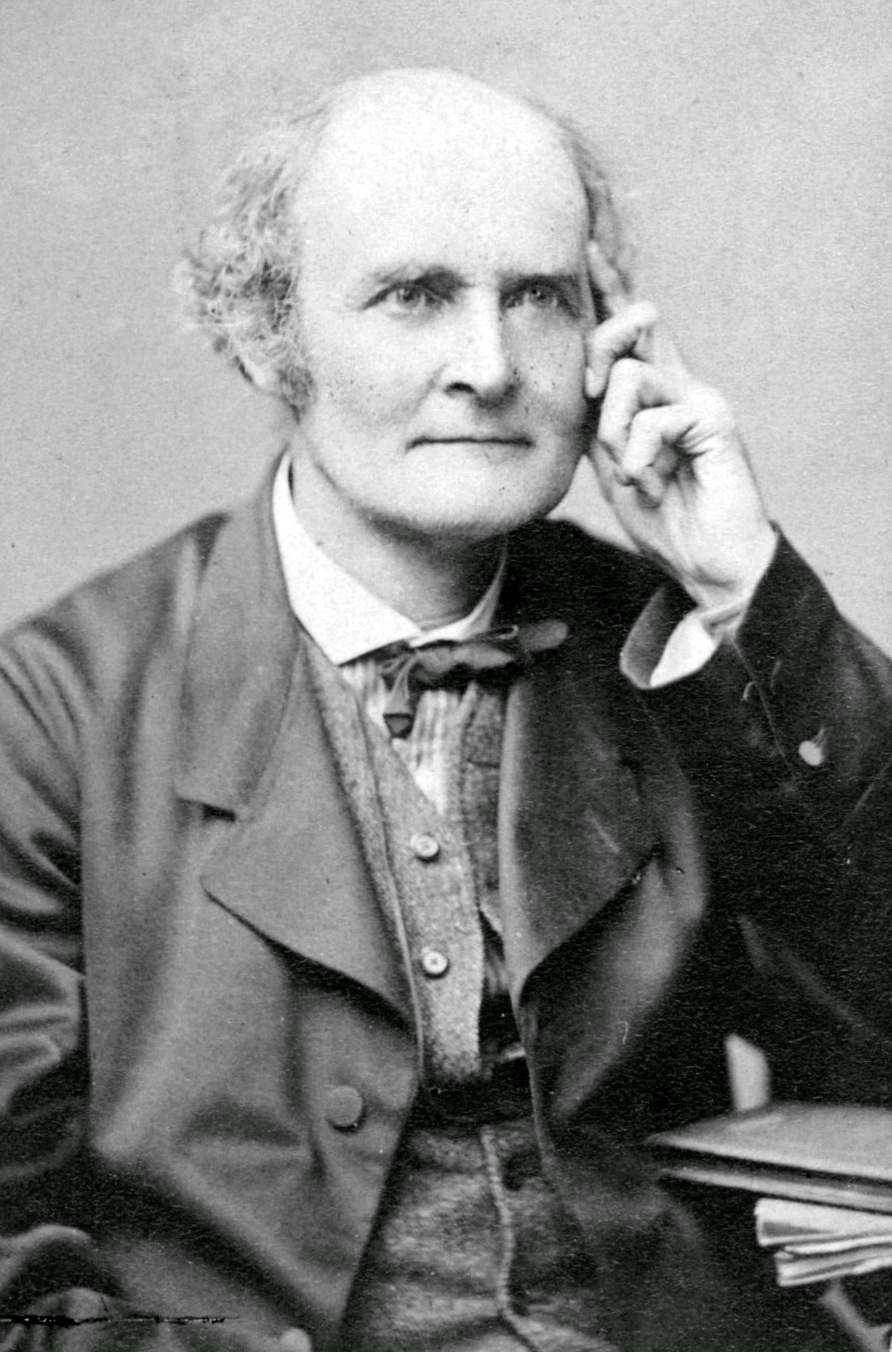

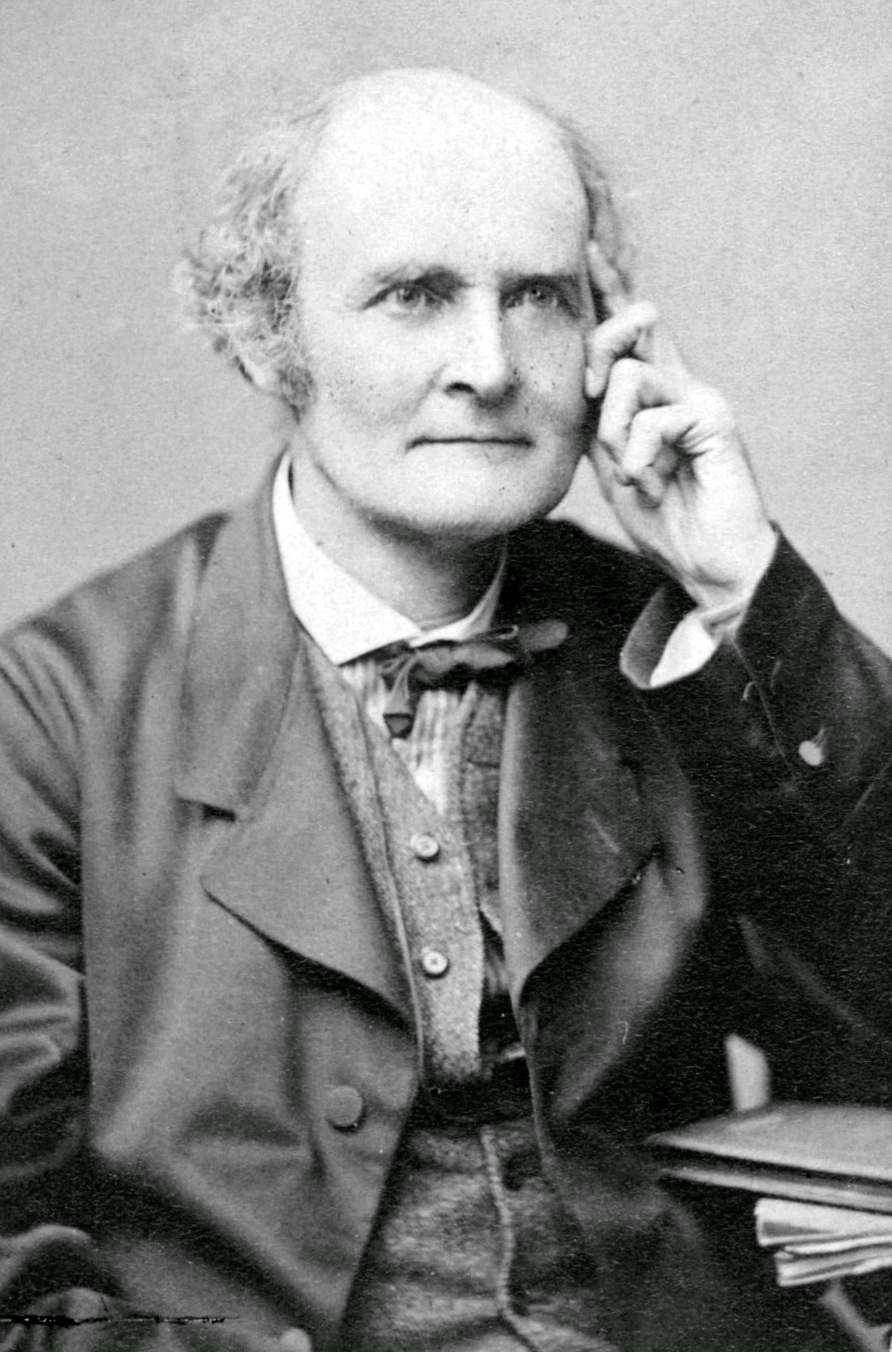

William Rowan Hamilton

Sir William Rowan Hamilton Doctor of Law, LL.D, Doctor of Civil Law, DCL, Royal Irish Academy, MRIA, Royal Astronomical Society#Fellow, FRAS (3/4 August 1805 – 2 September 1865) was an Irish mathematician, astronomer, and physicist. He was the ...

) states that every

square matrix

In mathematics, a square matrix is a matrix with the same number of rows and columns. An ''n''-by-''n'' matrix is known as a square matrix of order Any two square matrices of the same order can be added and multiplied.

Square matrices are often ...

over a

commutative ring

In mathematics, a commutative ring is a ring in which the multiplication operation is commutative. The study of commutative rings is called commutative algebra. Complementarily, noncommutative algebra is the study of ring properties that are not sp ...

(such as the

real

Real may refer to:

Currencies

* Brazilian real (R$)

* Central American Republic real

* Mexican real

* Portuguese real

* Spanish real

* Spanish colonial real

Music Albums

* ''Real'' (L'Arc-en-Ciel album) (2000)

* ''Real'' (Bright album) (2010) ...

or

complex number

In mathematics, a complex number is an element of a number system that extends the real numbers with a specific element denoted , called the imaginary unit and satisfying the equation i^= -1; every complex number can be expressed in the form ...

s or the

integers

An integer is the number zero (), a positive natural number (, , , etc.) or a negative integer with a minus sign (−1, −2, −3, etc.). The negative numbers are the additive inverses of the corresponding positive numbers. In the language o ...

) satisfies its own

characteristic equation.

If is a given matrix and is the

identity matrix

In linear algebra, the identity matrix of size n is the n\times n square matrix with ones on the main diagonal and zeros elsewhere.

Terminology and notation

The identity matrix is often denoted by I_n, or simply by I if the size is immaterial o ...

, then the

characteristic polynomial

In linear algebra, the characteristic polynomial of a square matrix is a polynomial which is invariant under matrix similarity and has the eigenvalues as roots. It has the determinant and the trace of the matrix among its coefficients. The chara ...

of is defined as

, where is the

determinant

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and ...

operation and is a

variable

Variable may refer to:

* Variable (computer science), a symbolic name associated with a value and whose associated value may be changed

* Variable (mathematics), a symbol that represents a quantity in a mathematical expression, as used in many ...

for a

scalar

Scalar may refer to:

*Scalar (mathematics), an element of a field, which is used to define a vector space, usually the field of real numbers

* Scalar (physics), a physical quantity that can be described by a single element of a number field such ...

element of the base

ring

Ring may refer to:

* Ring (jewellery), a round band, usually made of metal, worn as ornamental jewelry

* To make a sound with a bell, and the sound made by a bell

:(hence) to initiate a telephone connection

Arts, entertainment and media Film and ...

. Since the entries of the matrix

are (linear or constant)

polynomial

In mathematics, a polynomial is an expression consisting of indeterminates (also called variables) and coefficients, that involves only the operations of addition, subtraction, multiplication, and positive-integer powers of variables. An exa ...

s in , the determinant is also a

degree

Degree may refer to:

As a unit of measurement

* Degree (angle), a unit of angle measurement

** Degree of geographical latitude

** Degree of geographical longitude

* Degree symbol (°), a notation used in science, engineering, and mathematics

...

-

monic polynomial

In algebra, a monic polynomial is a single-variable polynomial (that is, a univariate polynomial) in which the leading coefficient (the nonzero coefficient of highest degree) is equal to 1. Therefore, a monic polynomial has the form:

:x^n+c_x^+\cd ...

in ,

One can create an analogous polynomial

in the matrix instead of the scalar variable , defined as

The Cayley–Hamilton theorem states that this polynomial expression is equal to the

zero matrix In mathematics, particularly linear algebra, a zero matrix or null matrix is a matrix all of whose entries are zero. It also serves as the additive identity of the additive group of m \times n matrices, and is denoted by the symbol O or 0 followed ...

, which is to say that

. The

theorem

In mathematics, a theorem is a statement that has been proved, or can be proved. The ''proof'' of a theorem is a logical argument that uses the inference rules of a deductive system to establish that the theorem is a logical consequence of th ...

allows

to be expressed as a

linear combination of the lower matrix powers of . When the ring is a

field

Field may refer to:

Expanses of open ground

* Field (agriculture), an area of land used for agricultural purposes

* Airfield, an aerodrome that lacks the infrastructure of an airport

* Battlefield

* Lawn, an area of mowed grass

* Meadow, a grass ...

, the Cayley–Hamilton theorem is equivalent to the statement that the

minimal polynomial of a square matrix

divides

In mathematics, a divisor of an integer n, also called a factor of n, is an integer m that may be multiplied by some integer to produce n. In this case, one also says that n is a multiple of m. An integer n is divisible or evenly divisible by ...

its characteristic polynomial.

The theorem was first

proven

Proven is a rural village in the Belgian province of West Flanders, and a "deelgemeente" of the municipality Poperinge. The village has about 1400 inhabitants.

The church and parish of Proven are named after Saint Victor. The Saint Victor Chur ...

in 1853

in terms of inverses of linear functions of

quaternion

In mathematics, the quaternion number system extends the complex numbers. Quaternions were first described by the Irish mathematician William Rowan Hamilton in 1843 and applied to mechanics in three-dimensional space. Hamilton defined a quatern ...

s, a

''non-commutative'' ring, by Hamilton.

This corresponds to the special case of certain real or complex matrices. The theorem holds for general quaternionic matrices.

[Due to the non-commutative nature of the multiplication operation for quaternions and related constructions, care needs to be taken with definitions, most notably in this context, for the determinant. The theorem holds as well for the slightly less well-behaved ]split-quaternion

In abstract algebra, the split-quaternions or coquaternions form an algebraic structure introduced by James Cockle in 1849 under the latter name. They form an associative algebra of dimension four over the real numbers.

After introduction in th ...

s, see . The rings of quaternions and split-quaternions can both be represented by certain complex matrices. (When restricted to unit norm, these are the groups

A group is a number of persons or things that are located, gathered, or classed together.

Groups of people

* Cultural group, a group whose members share the same cultural identity

* Ethnic group, a group whose members share the same ethnic ide ...

and respectively.) Therefore it is not surprising that the theorem holds.

There is no such matrix representation for the octonion

In mathematics, the octonions are a normed division algebra over the real numbers, a kind of hypercomplex number system. The octonions are usually represented by the capital letter O, using boldface or blackboard bold \mathbb O. Octonions have e ...

s, since the multiplication operation is not associative in this case. However, a modified Cayley–Hamilton theorem still holds for the octonions, see . Cayley in 1858 stated it for and smaller matrices, but only published a proof for the case.

[ The general case was first proved by Ferdinand Frobenius in 1878.]

Examples

matrices

For a matrix , the characteristic polynomial is given by , and so is trivial.

matrices

As a concrete example, let

:

Its characteristic polynomial is given by

:

The Cayley–Hamilton theorem claims that, if we ''define''

:

then

:

We can verify by computation that indeed,

:

For a generic matrix,

:

the characteristic polynomial is given by , so the Cayley–Hamilton theorem states that

:

which is indeed always the case, evident by working out the entries of 2.

Applications

Determinant and inverse matrix

For a general invertible matrix

In linear algebra, an -by- square matrix is called invertible (also nonsingular or nondegenerate), if there exists an -by- square matrix such that

:\mathbf = \mathbf = \mathbf_n \

where denotes the -by- identity matrix and the multiplicati ...

, i.e., one with nonzero determinant, −1 can thus be written as an order polynomial expression in : As indicated, the Cayley–Hamilton theorem amounts to the identity

The coefficients are given by the elementary symmetric polynomial

In mathematics, specifically in commutative algebra, the elementary symmetric polynomials are one type of basic building block for symmetric polynomials, in the sense that any symmetric polynomial can be expressed as a polynomial in elementary sy ...

s of the eigenvalue

In linear algebra, an eigenvector () or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted b ...

s of . Using Newton identities

In mathematics, Newton's identities, also known as the Girard–Newton formulae, give relations between two types of symmetric polynomials, namely between power sums and elementary symmetric polynomials. Evaluated at the roots of a monic polynomi ...

, the elementary symmetric polynomials can in turn be expressed in terms of power sum symmetric polynomial In mathematics, specifically in commutative algebra, the power sum symmetric polynomials are a type of basic building block for symmetric polynomials, in the sense that every symmetric polynomial with rational coefficients can be expressed as a su ...

s of the eigenvalues:

:

where is the trace

Trace may refer to:

Arts and entertainment Music

* ''Trace'' (Son Volt album), 1995

* ''Trace'' (Died Pretty album), 1993

* Trace (band), a Dutch progressive rock band

* ''The Trace'' (album)

Other uses in arts and entertainment

* ''Trace'' ...

of the matrix . Thus, we can express in terms of the trace of powers of .

In general, the formula for the coefficients is given in terms of complete exponential Bell polynomials

In combinatorial mathematics, the Bell polynomials, named in honor of Eric Temple Bell, are used in the study of set partitions. They are related to Stirling and Bell numbers. They also occur in many applications, such as in the Faà di Bruno's fo ...

as[An explicit expression for these coefficients is

:

where the sum is taken over the sets of all integer partitions satisfying the equation

:]

:

In particular, the determinant of equals . Thus, the determinant can be written as the trace identity In mathematics, a trace identity is any equation involving the trace of a matrix.

Properties

Trace identities are invariant under simultaneous conjugation.

Uses

They are frequently used in the invariant theory of n \times n matrices to find th ...

:

:

Likewise, the characteristic polynomial can be written as

:

and, by multiplying both sides by (note ), one is led to an expression for the inverse of as a trace identity,

:

Another method for obtaining these coefficients for a general matrix, provided no root be zero, relies on the following alternative expression for the determinant,

:

Hence, by virtue of the Mercator series

In mathematics, the Mercator series or Newton–Mercator series is the Taylor series for the natural logarithm:

:\ln(1+x)=x-\frac+\frac-\frac+\cdots

In summation notation,

:\ln(1+x)=\sum_^\infty \frac x^n.

The series converges to the natural ...

,

:

where the exponential ''only'' needs be expanded to order , since is of order , the net negative powers of automatically vanishing by the C–H theorem. (Again, this requires a ring containing the rational number

In mathematics, a rational number is a number that can be expressed as the quotient or fraction of two integers, a numerator and a non-zero denominator . For example, is a rational number, as is every integer (e.g. ). The set of all ration ...

s.) Differentiation of this expression with respect to allows one to express the coefficients of the characteristic polynomial for general as determinants of matrices,[See, e.g., p. 54 of , which solves ]Jacobi's formula

In matrix calculus, Jacobi's formula expresses the derivative of the determinant of a matrix ''A'' in terms of the adjugate of ''A'' and the derivative of ''A''., Part Three, Section 8.3

If is a differentiable map from the real numbers to mat ...

,

:

where is the adjugate matrix of the next section.

There also exists an equivalent, related recursive algorithm introduced by Urbain Le Verrier

Urbain Jean Joseph Le Verrier FRS (FOR) HFRSE (; 11 March 1811 – 23 September 1877) was a French astronomer and mathematician who specialized in celestial mechanics and is best known for predicting the existence and position of Neptune using ...

and Dmitry Konstantinovich Faddeev

Dmitry Konstantinovich Faddeev ( rus, Дми́трий Константи́нович Фадде́ев, , ˈdmʲitrʲɪj kənstɐnʲˈtʲinəvʲɪtɕ fɐˈdʲe(j)ɪf; 30 June 1907 – 20 October 1989) was a Soviet mathematician.

Biography

Dmitry w ...

—the Faddeev–LeVerrier algorithm

In mathematics (linear algebra), the Faddeev–LeVerrier algorithm is a recursive method to calculate the coefficients of the characteristic polynomial p_A(\lambda)=\det (\lambda I_n - A) of a square matrix, , named after Dmitry Konstantinovic ...

, which reads

:

(see, e.g., p 88 of .) Observe as the recursion terminates.

See the algebraic proof in the following section, which relies on the modes of the adjugate, .

Specifically, and the above derivative of when one traces it yields

: (), and the above recursions, in turn.

:

; Examples

For instance, the first few Bell polynomials are = 1, , , and .

Using these to specify the coefficients of the characteristic polynomial of a matrix yields

:

The coefficient gives the determinant of the matrix, minus its trace, while its inverse is given by

:

It is apparent from the general formula for ''c''''n''−''k'', expressed in terms of Bell polynomials, that the expressions

:

always give the coefficients of and of in the characteristic polynomial of any matrix, respectively. So, for a matrix , the statement of the Cayley–Hamilton theorem can also be written as

:

where the right-hand side designates a matrix with all entries reduced to zero. Likewise, this determinant in the case, is now

:

This expression gives the negative of coefficient of in the general case, as seen below.

Similarly, one can write for a matrix ,

:

where, now, the determinant is ,

:

and so on for larger matrices. The increasingly complex expressions for the coefficients is deducible from Newton's identities

In mathematics, Newton's identities, also known as the Girard–Newton formulae, give relations between two types of symmetric polynomials, namely between power sums and elementary symmetric polynomials. Evaluated at the roots of a monic polynomia ...

or the Faddeev–LeVerrier algorithm

In mathematics (linear algebra), the Faddeev–LeVerrier algorithm is a recursive method to calculate the coefficients of the characteristic polynomial p_A(\lambda)=\det (\lambda I_n - A) of a square matrix, , named after Dmitry Konstantinovic ...

.

''n''-th power of matrix

The Cayley–Hamilton theorem always provides a relationship between the powers of (though not always the simplest one), which allows one to simplify expressions involving such powers, and evaluate them without having to compute the power ''n'' or any higher powers of .

As an example, for the theorem gives

:

Then, to calculate , observe

:

:

Likewise,

:

:

Notice that we have been able to write the matrix power as the sum of two terms. In fact, matrix power of any order can be written as a matrix polynomial of degree at most , where is the size of a square matrix. This is an instance where Cayley–Hamilton theorem can be used to express a matrix function, which we will discuss below systematically.

Matrix functions

Given an analytic function

In mathematics, an analytic function is a function that is locally given by a convergent power series. There exist both real analytic functions and complex analytic functions. Functions of each type are infinitely differentiable, but complex an ...

:

and the characteristic polynomial of degree of an matrix , the function can be expressed using long division as

:

where is some quotient polynomial and is a remainder polynomial such that .

By the Cayley–Hamilton theorem, replacing by the matrix gives , so one has

:

Thus, the analytic function of the matrix can be expressed as a matrix polynomial of degree less than .

Let the remainder polynomial be

:

Since , evaluating the function at the eigenvalues of yields

:

This amounts to a system of linear equation

In mathematics, a linear equation is an equation that may be put in the form

a_1x_1+\ldots+a_nx_n+b=0, where x_1,\ldots,x_n are the variables (or unknowns), and b,a_1,\ldots,a_n are the coefficients, which are often real numbers. The coefficien ...

s, which can be solved to determine the coefficients . Thus, one has

:

When the eigenvalues are repeated, that is for some , two or more equations are identical; and hence the linear equations cannot be solved uniquely. For such cases, for an eigenvalue with multiplicity , the first derivatives of vanish at the eigenvalue. This

leads to the extra linearly independent solutions

:

which, combined with others, yield the required equations to solve for .

Finding a polynomial that passes through the points is essentially an interpolation problem, and can be solved using Lagrange or Newton interpolation techniques, leading to Sylvester's formula In matrix theory, Sylvester's formula or Sylvester's matrix theorem (named after J. J. Sylvester) or Lagrange−Sylvester interpolation expresses an analytic function of a matrix as a polynomial in , in terms of the eigenvalues and eigenvectors ...

.

For example, suppose the task is to find the polynomial representation of

:

The characteristic polynomial is , and the eigenvalues are . Let . Evaluating at the eigenvalues, one obtains two linear equations, and .

Solving the equations yields and . Thus, it follows that

:

If, instead, the function were , then the coefficients would have been and ; hence

:

As a further example, when considering

:

then the characteristic polynomial is , and the eigenvalues are .

As before, evaluating the function at the eigenvalues gives us the linear equations and ; the solution of which gives, and . Thus, for this case,

:

which is a rotation matrix In linear algebra, a rotation matrix is a transformation matrix that is used to perform a rotation in Euclidean space. For example, using the convention below, the matrix

:R = \begin

\cos \theta & -\sin \theta \\

\sin \theta & \cos \theta

\end ...

.

Standard examples of such usage is the exponential map from the Lie algebra

In mathematics, a Lie algebra (pronounced ) is a vector space \mathfrak g together with an Binary operation, operation called the Lie bracket, an Alternating multilinear map, alternating bilinear map \mathfrak g \times \mathfrak g \rightarrow ...

of a matrix Lie group

In mathematics, a Lie group (pronounced ) is a group (mathematics), group that is also a differentiable manifold. A manifold is a space that locally resembles Euclidean space, whereas groups define the abstract concept of a binary operation a ...

into the group. It is given by a matrix exponential

In mathematics, the matrix exponential is a matrix function on square matrices analogous to the ordinary exponential function. It is used to solve systems of linear differential equations. In the theory of Lie groups, the matrix exponential gives ...

,

:

Such expressions have long been known for ,

:

where the are the Pauli matrices

In mathematical physics and mathematics, the Pauli matrices are a set of three complex matrices which are Hermitian, involutory and unitary. Usually indicated by the Greek letter sigma (), they are occasionally denoted by tau () when used in ...

and for ,

:

which is Rodrigues' rotation formula

In the theory of three-dimensional rotation, Rodrigues' rotation formula, named after Olinde Rodrigues, is an efficient algorithm for rotating a vector in space, given an axis and angle of rotation. By extension, this can be used to transform al ...

. For the notation, see 3D rotation group#A note on Lie algebras.

More recently, expressions have appeared for other groups, like the Lorentz group

In physics and mathematics, the Lorentz group is the group of all Lorentz transformations of Minkowski spacetime, the classical and quantum setting for all (non-gravitational) physical phenomena. The Lorentz group is named for the Dutch physicis ...

, and , as well as . The group is the conformal group

In mathematics, the conformal group of an inner product space is the group of transformations from the space to itself that preserve angles. More formally, it is the group of transformations that preserve the conformal geometry of the space.

Se ...

of spacetime

In physics, spacetime is a mathematical model that combines the three dimensions of space and one dimension of time into a single four-dimensional manifold. Spacetime diagrams can be used to visualize relativistic effects, such as why differen ...

, its simply connected

In topology, a topological space is called simply connected (or 1-connected, or 1-simply connected) if it is path-connected and every path between two points can be continuously transformed (intuitively for embedded spaces, staying within the spac ...

cover (to be precise, the simply connected cover of the connected component of ). The expressions obtained apply to the standard representation of these groups. They require knowledge of (some of) the eigenvalue

In linear algebra, an eigenvector () or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted b ...

s of the matrix to exponentiate. For (and hence for ), closed expressions have been obtained for ''all'' irreducible representations, i.e. of any spin.

Algebraic number theory

The Cayley–Hamilton theorem is an effective tool for computing the minimal polynomial of algebraic integer

In algebraic number theory, an algebraic integer is a complex number which is integral over the integers. That is, an algebraic integer is a complex root of some monic polynomial (a polynomial whose leading coefficient is 1) whose coefficients ...

s. For example, given a finite extension

In

In

In

In