Eigenvalue

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled. Geometrically, an eigenvector corresponding to a real nonzero eigenvalue points in a direction in which it is stretched by the transformation, and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed. Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.

Formal definition: If $T$ is a linear transformation from a vector space $V$ over a field $F$ into itself and $\mathbf{v}$ is a nonzero vector in $V$, then $\mathbf{v}$ is an eigenvector of $T$ if $T(\mathbf{v})$ is a scalar multiple of $\mathbf{v}$. This can be written as $T(\mathbf{v}) = \lambda \mathbf{v}$, where $\lambda$ is a scalar in $F$, known as the eigenvalue, characteristic value, or characteristic root ass ...
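The defining relation $T(\mathbf{v}) = \lambda \mathbf{v}$ can be checked numerically on a small case. The following is a minimal sketch, assuming a real 2×2 matrix with real eigenvalues; the helper names `eigenvalues_2x2` and `apply` and the example matrices are illustrative choices, not part of the source text.

```python
import math


def eigenvalues_2x2(a, b, c, d):
    """Real eigenvalues of [[a, b], [c, d]] from the characteristic
    polynomial lambda^2 - (a + d) * lambda + (a*d - b*c) = 0."""
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues; this sketch handles real ones only")
    root = math.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


def apply(matrix, v):
    """Multiply a 2x2 matrix (given as a list of rows) by a 2-vector."""
    return [matrix[0][0] * v[0] + matrix[0][1] * v[1],
            matrix[1][0] * v[0] + matrix[1][1] * v[1]]


# Hypothetical example matrix with eigenvalues 3 and 1.
A = [[2.0, 1.0], [1.0, 2.0]]
lam_big, lam_small = eigenvalues_2x2(2.0, 1.0, 1.0, 2.0)

v = [1.0, 1.0]    # eigenvector for the larger eigenvalue
w = [1.0, -1.0]   # eigenvector for the smaller eigenvalue
Av = apply(A, v)  # equals lam_big * v: stretched, not rotated
Aw = apply(A, w)  # equals lam_small * w

# A negative eigenvalue reverses the direction of its eigenvector.
B = [[0.0, 1.0], [1.0, 0.0]]  # swaps coordinates; eigenvalues 1 and -1
Bw = apply(B, w)              # equals -1 * w
```

In each case the output is the input multiplied by a single scalar, which is exactly what distinguishes an eigenvector from a generic vector.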

Eigenvectors Of A Linear Operator

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a scalar factor when that linear transformation is applied to it. The corresponding eigenvalue, often denoted by $\lambda$, is the factor by which the eigenvector is scaled. Geometrically, an eigenvector corresponding to a real nonzero eigenvalue points in a direction in which it is stretched by the transformation, and the eigenvalue is the factor by which it is stretched. If the eigenvalue is negative, the direction is reversed. Loosely speaking, in a multidimensional vector space, the eigenvector is not rotated.

Formal definition: If $T$ is a linear transformation from a vector space $V$ over a field $F$ into itself and $\mathbf{v}$ is a nonzero vector in $V$, then $\mathbf{v}$ is an eigenvector of $T$ if $T(\mathbf{v})$ is a scalar multiple of $\mathbf{v}$. This can be written as $T(\mathbf{v}) = \lambda \mathbf{v}$, where $\lambda$ is a scalar in $F$, known as the eigenvalue, characteristic value, or characteristic root as ...

Eigendecomposition Of A Matrix

In linear algebra, eigendecomposition is the factorization of a matrix into a canonical form, whereby the matrix is represented in terms of its eigenvalues and eigenvectors. Only diagonalizable matrices can be factorized in this way. When the matrix being factorized is a normal or real symmetric matrix, the decomposition is called "spectral decomposition", derived from the spectral theorem.

Fundamental theory of matrix eigenvectors and eigenvalues: A (nonzero) vector $\mathbf{v}$ of dimension $N$ is an eigenvector of a square $N \times N$ matrix $\mathbf{A}$ if it satisfies a linear equation of the form $\mathbf{A}\mathbf{v} = \lambda \mathbf{v}$ for some scalar $\lambda$. Then $\lambda$ is called the eigenvalue corresponding to $\mathbf{v}$. Geometrically speaking, the eigenvectors of $\mathbf{A}$ are the vectors that $\mathbf{A}$ merely elongates or shrinks, and the amount that they elongate/shrink by is the eigenvalue. The above equation is called the eigenvalue equation or the eigenvalue probl ...
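To make "represented in terms of its eigenvalues and eigenvectors" concrete, the sketch below rebuilds a matrix from the standard factorization $\mathbf{A} = \mathbf{Q}\boldsymbol{\Lambda}\mathbf{Q}^{-1}$, where the columns of $\mathbf{Q}$ are eigenvectors and $\boldsymbol{\Lambda}$ is the diagonal matrix of eigenvalues. The example matrix, the hand-picked eigenpairs, and the helper names `matmul` and `inverse_2x2` are assumptions made for illustration.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


def inverse_2x2(M):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


# Hypothetical diagonalizable (real symmetric) matrix.
A = [[2.0, 1.0], [1.0, 2.0]]

# Columns of Q are eigenvectors (1, 1) and (1, -1); Lambda holds the
# matching eigenvalues 3 and 1 on its diagonal.
Q = [[1.0, 1.0], [1.0, -1.0]]
Lambda = [[3.0, 0.0], [0.0, 1.0]]

# Reassemble A from its eigenvalues and eigenvectors.
reconstructed = matmul(matmul(Q, Lambda), inverse_2x2(Q))
```

A matrix that is not diagonalizable has too few independent eigenvectors to form an invertible $\mathbf{Q}$, which is why the factorization is restricted to diagonalizable matrices.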

Stability Theory

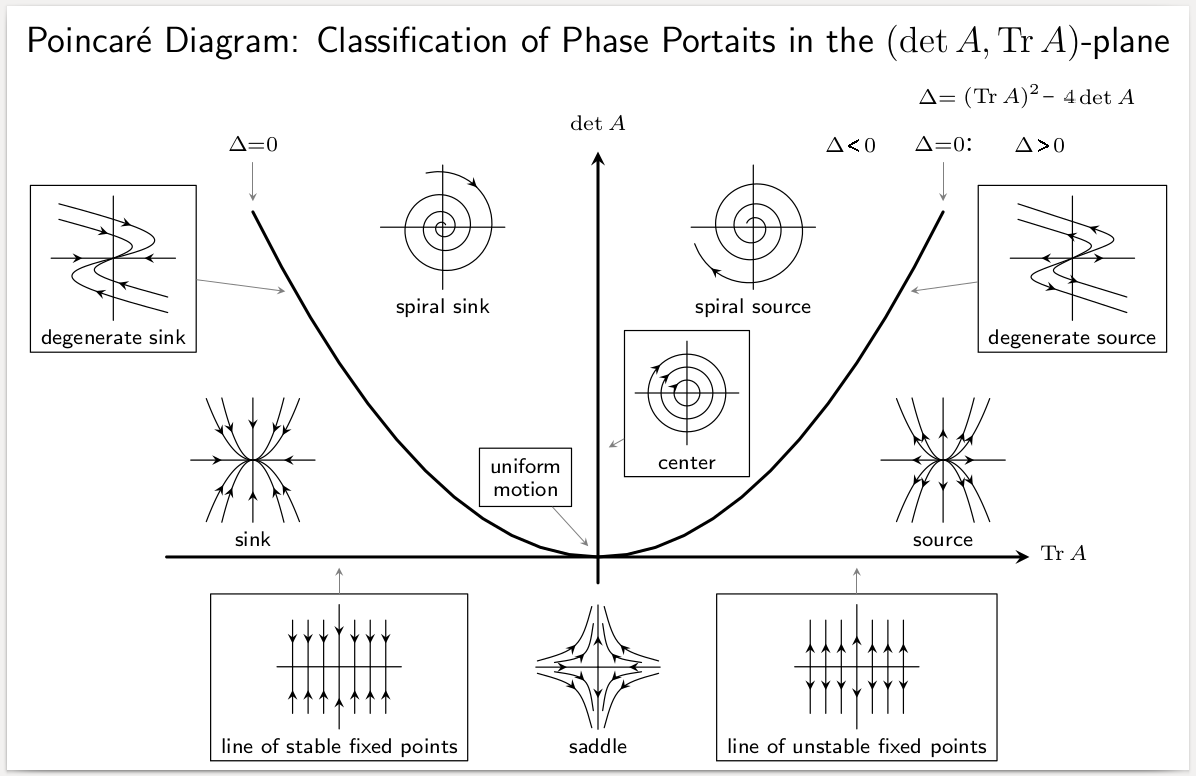

In mathematics, stability theory addresses the stability of solutions of differential equations and of trajectories of dynamical systems under small perturbations of initial conditions. The heat equation, for example, is a stable partial differential equation because small perturbations of initial data lead to small variations in temperature at a later time as a result of the maximum principle. In partial differential equations one may measure the distances between functions using $L^p$ norms or the sup norm, while in differential geometry one may measure the distance between spaces using the Gromov–Hausdorff distance. In dynamical systems, an orbit is called *Lyapunov stable* if the forward orbit of any point is in a small enough neighborhood or it stays in a small (but perhaps, larger) neighborhood. Various criteria have been developed to prove stability or instability of an orbit. Under favorable circumstances, the question may be reduced to a well-studied problem involvi ...

Vibration Analysis

Vibration is a mechanical phenomenon whereby oscillations occur about an equilibrium point. The word comes from Latin *vibrationem* ("shaking, brandishing"). The oscillations may be periodic, such as the motion of a pendulum, or random, such as the movement of a tire on a gravel road. Vibration can be desirable: for example, the motion of a tuning fork, the reed in a woodwind instrument or harmonica, a mobile phone, or the cone of a loudspeaker. In many cases, however, vibration is undesirable, wasting energy and creating unwanted sound. For example, the vibrational motions of engines, electric motors, or any mechanical device in operation are typically unwanted. Such vibrations could be caused by imbalances in the rotating parts, uneven friction, or the meshing of gear teeth. Careful designs usually minimize unwanted vibrations. The studies of sound and vibration are closely related. Sound, or pressure waves, are generated by vibrating structures (e.g. vocal cords); thes ...

Eigenface

An eigenface is the name given to a set of eigenvectors when used in the computer vision problem of human face recognition. The approach of using eigenfaces for recognition was developed by Sirovich and Kirby and used by Matthew Turk and Alex Pentland in face classification. The eigenvectors are derived from the covariance matrix of the probability distribution over the high-dimensional vector space of face images. The eigenfaces themselves form a basis set of all images used to construct the covariance matrix. This produces dimension reduction by allowing the smaller set of basis images to represent the original training images. Classification can be achieved by comparing how faces are represented by the basis set.

History: The eigenface approach began with a search for a low-dimensional representation of face images. Sirovich and Kirby showed that principal component analysis could be used on a collection of face images to form a set of basis features. These basis images, k ...
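The pipeline described above (covariance matrix, eigenvectors, reduced representation) can be sketched on toy data. This is an illustration only: the three-pixel "images" are invented, power iteration is swapped in as a simple way to find the leading eigenvector, and none of it should be read as the procedure used by Sirovich and Kirby or by Turk and Pentland. All function names are hypothetical.

```python
import math


def mean_vector(rows):
    """Per-pixel mean over all images."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def covariance(rows):
    """Sample covariance matrix of the images (each row is one image)."""
    mu = mean_vector(rows)
    centered = [[x - m for x, m in zip(row, mu)] for row in rows]
    dim, n = len(mu), len(rows)
    return [[sum(r[i] * r[j] for r in centered) / (n - 1)
             for j in range(dim)]
            for i in range(dim)]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def leading_eigenvector(M, iterations=50):
    """Power iteration: repeatedly apply M and renormalize."""
    v = [1.0] * len(M)
    for _ in range(iterations):
        w = matvec(M, v)
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            raise ValueError("start vector was mapped to zero")
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, matvec(M, v)))
    return eigenvalue, v


# Invented data: four "images" of three pixels each, varying along one direction.
faces = [[1.0, 2.0, 1.0], [2.0, 4.0, 1.0], [3.0, 6.0, 1.0], [4.0, 8.0, 1.0]]

mu = mean_vector(faces)
C = covariance(faces)
eigenvalue, eigenface = leading_eigenvector(C)

# Dimension reduction: each image becomes one weight along the eigenface.
weights = [sum((x - m) * e for x, m, e in zip(face, mu, eigenface))
           for face in faces]
```

Here every three-pixel image is summarized by a single number in `weights`, and comparing such weights is the kind of comparison the classification step relies on.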

Matrix (mathematics)

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object. For example, $\begin{bmatrix}1 & 9 & -13 \\ 20 & 5 & -6\end{bmatrix}$ is a matrix with two rows and three columns. This is often referred to as a "two by three matrix", a "$2 \times 3$-matrix", or a matrix of dimension $2 \times 3$. Without further specifications, matrices represent linear maps, and allow explicit computations in linear algebra. Therefore, the study of matrices is a large part of linear algebra, and most properties and operations of abstract linear algebra can be expressed in terms of matrices. For example, matrix multiplication represents composition of linear maps. Not all matrices are related to linear algebra. This is, in particular, the case in graph theory, of incidence matrices, and adjacency matrices. This article focuses on matrices related to linear algebra, and, unle ...
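The claim that matrix multiplication represents composition of linear maps can be checked directly. The sketch below reuses the two by three matrix from the text; the second matrix `B`, the test vector `x`, and the helper names are assumptions chosen for illustration.

```python
def matvec(M, v):
    """Apply the linear map represented by M to the vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


# The 2x3 matrix from the text: a linear map from 3-vectors to 2-vectors.
A = [[1, 9, -13], [20, 5, -6]]

# Hypothetical second map on 2-vectors: swap the two coordinates.
B = [[0, 1], [1, 0]]

x = [1, 1, 1]

step_by_step = matvec(B, matvec(A, x))  # apply A, then B
composed = matvec(matmul(B, A), x)      # apply the single matrix BA
```

Both routes give the same vector, so the product `BA` is the matrix of the map "first `A`, then `B`".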

Linear Algebra

Linear algebra is the branch of mathematics concerning linear equations such as $a_1x_1+\cdots+a_nx_n=b$, linear maps such as $(x_1, \ldots, x_n) \mapsto a_1x_1+\cdots+a_nx_n$, and their representations in vector spaces and through matrices. Linear algebra is central to almost all areas of mathematics. For instance, linear algebra is fundamental in modern presentations of geometry, including for defining basic objects such as lines, planes and rotations. Also, functional analysis, a branch of mathematical analysis, may be viewed as the application of linear algebra to spaces of functions. Linear algebra is also used in most sciences and fields of engineering, because it allows modeling many natural phenomena, and computing efficiently with such models. For nonlinear systems, which cannot be modeled with linear algebra, it is often used for dealing with first-order approximations, using the fact that the differential of a multivariate function at a point is the linear ma ...

Vector Space

In mathematics and physics, a vector space (also called a linear space) is a set whose elements, often called *vectors*, may be added together and multiplied ("scaled") by numbers called *scalars*. Scalars are often real numbers, but can be complex numbers or, more generally, elements of any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called *vector axioms*. The terms real vector space and complex vector space are often used to specify the nature of the scalars: real coordinate space or complex coordinate space. Vector spaces generalize Euclidean vectors, which allow modeling of physical quantities, such as forces and velocity, that have not only a magnitude, but also a direction. The concept of vector spaces is fundamental for linear algebra, together with the concept of matrix, which allows computing in vector spaces. This provides a concise and synthetic way for manipulating and studying systems of linear eq ...

Square Matrix

In mathematics, a square matrix is a matrix with the same number of rows and columns. An $n$-by-$n$ matrix is known as a square matrix of order $n$. Any two square matrices of the same order can be added and multiplied. Square matrices are often used to represent simple linear transformations, such as shearing or rotation. For example, if $R$ is a square matrix representing a rotation (rotation matrix) and $\mathbf{v}$ is a column vector describing the position of a point in space, the product $R\mathbf{v}$ yields another column vector describing the position of that point after that rotation. If $\mathbf{v}$ is a row vector, the same transformation can be obtained using $\mathbf{v}R^{\mathsf{T}}$, where $R^{\mathsf{T}}$ is the transpose of $R$.

Main diagonal: The entries $a_{ii}$ ($i = 1, \ldots, n$) form the main diagonal of a square matrix. They lie on the imaginary line which runs from the top left corner to the bottom right corner of the matrix. For instance, the main diagonal of a 4×4 matrix contains the elements $a_{11}$, $a_{22}$, $a_{33}$, $a_{44}$. The d ...
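The column-vector and row-vector forms of a rotation, and the main diagonal, can be illustrated with a short sketch. It assumes a quarter-turn (90 degree counterclockwise) rotation so that all entries are exact integers; the function names are hypothetical.

```python
def transpose(M):
    """Swap rows and columns."""
    return [list(col) for col in zip(*M)]


def matvec(M, v):
    """Column-vector convention: compute M v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def vecmat(v, M):
    """Row-vector convention: compute v M."""
    return [sum(x * M[k][j] for k, x in enumerate(v))
            for j in range(len(M[0]))]


def main_diagonal(M):
    """Entries a_ii of a square matrix."""
    return [M[i][i] for i in range(len(M))]


# Rotation by 90 degrees counterclockwise (an illustrative choice).
R = [[0, -1], [1, 0]]
v = [1, 0]

as_column = matvec(R, v)          # R v
as_row = vecmat(v, transpose(R))  # v R^T gives the same coordinates

diagonal = main_diagonal([[9, 13], [20, 5]])  # invented 2x2 example
```

The point on the positive x-axis moves to the positive y-axis under either convention, as long as the row-vector form uses the transpose.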

Scaling (geometry)

In affine geometry, uniform scaling (or isotropic scaling) is a linear transformation that enlarges (increases) or shrinks (diminishes) objects by a *scale factor* that is the same in all directions. The result of uniform scaling is similar (in the geometric sense) to the original. A scale factor of 1 is normally allowed, so that congruent shapes are also classed as similar. Uniform scaling happens, for example, when enlarging or reducing a photograph, or when creating a scale model of a building, car, airplane, etc. More general is scaling with a separate scale factor for each axis direction. Non-uniform scaling (anisotropic scaling) is obtained when at least one of the scaling factors is different from the others; a special case is directional scaling or stretching (in one direction). Non-uniform scaling changes the shape of the object; e.g. a square may change into a rectangle, or into a parallelogram if the sides of the squar ...
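The difference between uniform and non-uniform scaling can be seen on the corners of a unit square. This is a minimal sketch in two dimensions with axis-aligned scale factors; the function `scale` and the chosen factors are illustrative assumptions.

```python
def scale(points, sx, sy):
    """Scale 2D points by sx along the x-axis and sy along the y-axis.
    Equivalent to multiplying each point by the diagonal matrix diag(sx, sy)."""
    return [(sx * x, sy * y) for x, y in points]


def side_lengths(quad):
    """Lengths of the bottom and left sides of an axis-aligned quadrilateral
    whose corners are listed counterclockwise from the origin."""
    width = quad[1][0] - quad[0][0]
    height = quad[3][1] - quad[0][1]
    return width, height


unit_square = [(0, 0), (1, 0), (1, 1), (0, 1)]

uniform = scale(unit_square, 2, 2)      # same factor in all directions
non_uniform = scale(unit_square, 2, 1)  # stretch along x only
```

With equal factors the result is still a square (similar to the original); with unequal factors the square becomes a rectangle.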

Complex Number

In mathematics, a complex number is an element of a number system that extends the real numbers with a specific element denoted $i$, called the imaginary unit and satisfying the equation $i^2 = -1$; every complex number can be expressed in the form $a + bi$, where $a$ and $b$ are real numbers. Because no real number satisfies the above equation, $i$ was called an imaginary number by René Descartes. For the complex number $a + bi$, $a$ is called the real part, and $b$ is called the imaginary part. The set of complex numbers is denoted by either of the symbols $\mathbb{C}$ or $\mathbf{C}$. Despite the historical nomenclature "imaginary", complex numbers are regarded in the mathematical sciences as just as "real" as the real numbers and are fundamental in many aspects of the scientific description of the natural world. Complex numbers allow solutions to all polynomial equations, even those that have no solutions in real numbers. More precisely, the fundamental theorem of algebra asserts that every non-constant polynomial equation with real or ...
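These properties can be tried out with Python's built-in `complex` type, which writes the imaginary unit as `1j`. The sketch below is illustrative; the function `quadratic_roots` and the sample values are assumptions, and it uses the standard quadratic formula rather than any method named in the text.

```python
import cmath

# The imaginary unit satisfies i^2 = -1.
i_squared = 1j * 1j

# A complex number a + bi with real part a and imaginary part b.
z = complex(3, 4)
real_part = z.real
imaginary_part = z.imag


def quadratic_roots(a, b, c):
    """Roots of a*x^2 + b*x + c = 0 over the complex numbers."""
    if a == 0:
        raise ValueError("not a quadratic equation")
    root = cmath.sqrt(b * b - 4 * a * c)
    return (-b + root) / (2 * a), (-b - root) / (2 * a)


# x^2 + 1 = 0 has no real solution, but it has two complex ones.
roots = quadratic_roots(1, 0, 1)
```

The equation $x^2 + 1 = 0$ is the simplest case of a polynomial equation that gains solutions once complex numbers are allowed.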