Computational biology refers to the use of data analysis, mathematical modeling and computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and big data, the field also has foundations in applied mathematics, chemistry, and genetics. It differs from biological computing, a subfield of computer engineering which uses bioengineering to build computers.

History

Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field.

By 1982, researchers shared information via punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting relevant information.

Perhaps the best-known example of computational biology, the Human Genome Project, officially began in 1990. By 2003, the project had mapped around 85% of the human genome, satisfying its initial goals. Work continued, however, and by 2021 a "complete genome" level was reached, with only 0.3% of the bases still covered by potential issues. The missing Y chromosome was added in January 2022.

Since the late 1990s, computational biology has become an important part of biology, leading to numerous subfields. Today, the International Society for Computational Biology recognizes 21 different 'Communities of Special Interest', each representing a slice of the larger field. In addition to helping sequence the human genome, computational biology has helped create accurate models of the human brain, map the 3D structure of genomes, and model biological systems.

Applications

Anatomy

Computational anatomy is the study of anatomical shape and form at the visible or gross anatomical scale of morphology. It involves the development of computational mathematical and data-analytical methods for modeling and simulating biological structures. It focuses on the anatomical structures being imaged, rather than the medical imaging devices. Due to the availability of dense 3D measurements via technologies such as magnetic resonance imaging, computational anatomy has emerged as a subfield of medical imaging and bioengineering for extracting anatomical coordinate systems at the morphome scale in 3D.

The original formulation of computational anatomy is as a generative model of shape and form from exemplars acted upon via transformations. The diffeomorphism group is used to study different coordinate systems via coordinate transformations generated by the Lagrangian and Eulerian velocities of flow from one anatomical configuration into another. It is related to shape statistics and morphometrics, with the distinction that diffeomorphisms are used to map coordinate systems, whose study is known as diffeomorphometry.

Data and modeling

Mathematical biology is the use of mathematical models of living organisms to examine the systems that govern structure, development, and behavior in biological systems. This entails a more theoretical approach to problems, rather than its more empirically-minded counterpart of experimental biology. Mathematical biology draws on discrete mathematics, topology (also useful for computational modeling), Bayesian statistics, linear algebra and Boolean algebra.

These mathematical approaches have enabled the creation of databases and other methods for storing, retrieving, and analyzing biological data, a field known as bioinformatics. Usually, this process involves genetics and analyzing genes.

Gathering and analyzing large datasets have made way for growing research fields such as data mining and computational biomodeling, which refers to building computer models and visual simulations of biological systems. This allows researchers to predict how such systems will react to different environments, which is useful for determining whether a system can "maintain their state and functions against external and internal perturbations". While current techniques focus on small biological systems, researchers are working on approaches that will allow larger networks to be analyzed and modeled. A majority of researchers believe that this will be essential in developing modern medical approaches to creating new drugs and gene therapy. A useful modeling approach is to use Petri nets via tools such as esyN.
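To make the Petri-net idea concrete, the following is a minimal sketch in Python. It does not use esyN or any of its interfaces; the toy enzyme reaction, the place names (`E`, `S`, `ES`, `P`) and the transition names are hypothetical and chosen only for illustration. Places hold token counts, and a transition may fire only when every place it consumes from holds enough tokens.

```python
# Hypothetical toy net: enzyme E binds substrate S to form complex ES,
# which then releases product P and frees the enzyme again.
TRANSITIONS = {
    "bind": {"consume": {"E": 1, "S": 1}, "produce": {"ES": 1}},
    "convert": {"consume": {"ES": 1}, "produce": {"E": 1, "P": 1}},
}


def enabled(marking, name):
    """A transition is enabled when each input place holds enough tokens."""
    needed = TRANSITIONS[name]["consume"]
    return all(marking.get(place, 0) >= n for place, n in needed.items())


def fire(marking, name):
    """Return the new marking after firing one enabled transition."""
    if not enabled(marking, name):
        raise ValueError(f"transition {name!r} is not enabled")
    new_marking = dict(marking)
    for place, n in TRANSITIONS[name]["consume"].items():
        new_marking[place] -= n
    for place, n in TRANSITIONS[name]["produce"].items():
        new_marking[place] = new_marking.get(place, 0) + n
    return new_marking


def run(marking, max_steps=100):
    """Fire the first enabled transition repeatedly until none is enabled."""
    for _ in range(max_steps):
        ready = [name for name in TRANSITIONS if enabled(marking, name)]
        if not ready:
            break
        marking = fire(marking, ready[0])
    return marking


if __name__ == "__main__":
    start = {"E": 1, "S": 3, "ES": 0, "P": 0}
    print(run(start))
```

Always firing the first enabled transition keeps the sketch deterministic; a simulator used for real biological models would more likely pick among enabled transitions stochastically or attach reaction rates to them.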

Along similar lines, until recent decades theoretical ecology has largely dealt with analytic models that were detached from the statistical models used by empirical ecologists. However, computational methods have aided in developing ecological theory via simulation of ecological systems, in addition to increasing application of methods from computational statistics in ecological analyses.

Systems Biology

Systems biology consists of computing the interactions between various biological systems ranging from the cellular level to entire populations with the goal of discovering emergent properties. This process usually involves networking cell signaling and metabolic pathways. Systems biology often uses computational techniques from biological modeling and graph theory to study these complex interactions at cellular levels.

Evolutionary biology

Computational biology has assisted evolutionary biology by:

* Using DNA data to reconstruct the tree of life with computational phylogenetics

* Fitting population genetics models (either forward time or backward time) to DNA data to make inferences about demographic or selective history

* Building population genetics models of evolutionary systems from first principles in order to predict what is likely to evolve

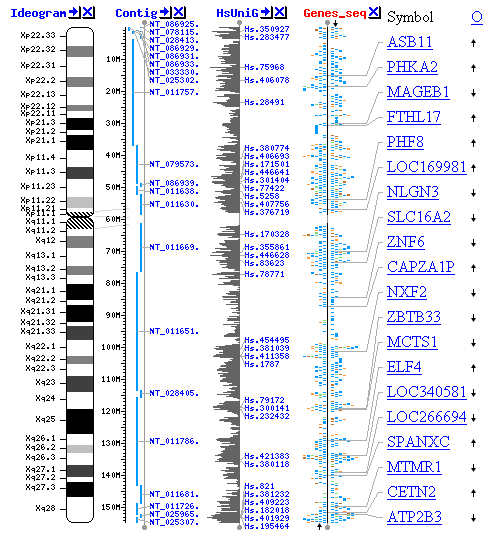

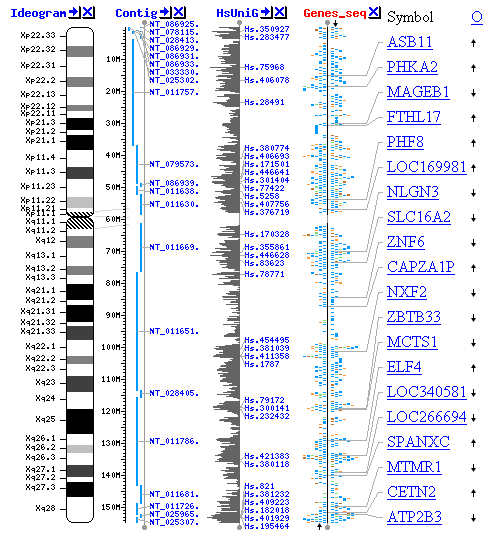

Genomics

Computational genomics is the study of the genomes of cells and organisms. The Human Genome Project is one example of computational genomics. This project set out to sequence the entire human genome into a set of data. Once fully implemented, this could allow doctors to analyze the genome of an individual patient. This opens the possibility of personalized medicine, prescribing treatments based on an individual's pre-existing genetic patterns. Researchers are looking to sequence the genomes of animals, plants, bacteria, and all other types of life.

One of the main ways that genomes are compared is by sequence homology. Homology refers to biological structures and nucleotide sequences in different organisms that come from a common ancestor. Research suggests that between 80 and 90% of genes in newly sequenced prokaryotic genomes can be identified this way.

Sequence alignment is another process for comparing and detecting similarities between biological sequences or genes. Sequence alignment is useful in a number of bioinformatics applications, such as computing the longest common subsequence of two genes or comparing variants of certain diseases.
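The longest common subsequence mentioned above can be computed with a standard dynamic-programming table. The sketch below is illustrative only: the function name and the short example strings are assumptions, and practical alignment tools use scoring schemes with gaps and substitution costs rather than plain subsequence matching.

```python
def longest_common_subsequence(a: str, b: str) -> str:
    """Return one longest common subsequence of the sequences a and b."""
    m, n = len(a), len(b)
    # dp[i][j] holds the LCS length of the prefixes a[:i] and b[:j].
    dp = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])

    # Walk back from the bottom-right corner to recover the subsequence.
    result = []
    i, j = m, n
    while i > 0 and j > 0:
        if a[i - 1] == b[j - 1]:
            result.append(a[i - 1])
            i -= 1
            j -= 1
        elif dp[i - 1][j] >= dp[i][j - 1]:
            i -= 1
        else:
            j -= 1
    return "".join(reversed(result))


if __name__ == "__main__":
    print(longest_common_subsequence("AGGTAB", "GXTXAYB"))
```

The table takes time and memory proportional to the product of the two sequence lengths, which is workable for individual genes but not for whole genomes.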

A largely unexplored area in computational genomics is the analysis of intergenic regions, which comprise roughly 97% of the human genome. Researchers are working to understand the functions of non-coding regions of the human genome through the development of computational and statistical methods and via large consortia projects such as ENCODE and the Roadmap Epigenomics Project.

Understanding how individual genes contribute to the biology of an organism at the molecular, cellular, and organism levels is known as gene ontology. The Gene Ontology Consortium's mission is to develop an up-to-date, comprehensive, computational model of biological systems, from the molecular level to larger pathways, cellular, and organism-level systems. The Gene Ontology resource provides a computational representation of current scientific knowledge about the functions of genes (or, more properly, the protein and non-coding RNA molecules produced by genes) from many different organisms, from humans to bacteria.

3D genomics is a subsection in computational biology that focuses on the organization and interaction of genes within a eukaryotic cell. One method used to gather 3D genomic data is through Genome Architecture Mapping (GAM). GAM measures 3D distances of chromatin and DNA in the genome by combining cryosectioning, the process of cutting a strip from the nucleus to examine the DNA, with laser microdissection. A nuclear profile is simply this strip or slice that is taken from the nucleus. Each nuclear profile contains genomic windows, which are certain sequences of nucleotides, the base unit of DNA. GAM captures a genome network of complex, multi-enhancer chromatin contacts throughout a cell.

Neuroscience

Computational neuroscience is the study of brain function in terms of the information processing properties of the nervous system. A subset of neuroscience, it looks to model the brain to examine specific aspects of the neurological system. Models of the brain include:

* Realistic Brain Models: These models look to represent every aspect of the brain, including as much detail at the cellular level as possible. Realistic models provide the most information about the brain, but also have the largest margin for error. More variables in a brain model create the possibility for more error to occur. These models do not account for parts of the cellular structure that scientists do not know about. Realistic brain models are the most computationally heavy and the most expensive to implement.

* Simplifying Brain Models: These models look to limit the scope of a model in order to assess a specific physical property of the neurological system. This allows for the intensive computational problems to be solved, and reduces the amount of potential error from a realistic brain model.

It is the work of computational neuroscientists to improve the algorithms and data structures currently used to increase the speed of such calculations.

Computational neuropsychiatry is an emerging field that uses mathematical and computer-assisted modeling of brain mechanisms involved in mental disorders. Several initiatives have demonstrated that computational modeling contributes significantly to understanding the neuronal circuits that could generate mental functions and dysfunctions.

Pharmacology

Computational pharmacology is "the study of the effects of genomic data to find links between specific genotypes and diseases and then screening drug data". The pharmaceutical industry requires a shift in methods to analyze drug data. Pharmacologists were able to use Microsoft Excel to compare chemical and genomic data related to the effectiveness of drugs. However, the industry has reached what is referred to as the Excel barricade. This arises from the limited number of cells accessible on a spreadsheet. This development led to the need for computational pharmacology. Scientists and researchers develop computational methods to analyze these massive data sets. This allows for an efficient comparison between the notable data points and allows for more accurate drugs to be developed.

Analysts project that if major medications fail due to patents, computational biology will be necessary to replace current drugs on the market. Doctoral students in computational biology are being encouraged to pursue careers in industry rather than take post-doctoral positions. This is a direct result of major pharmaceutical companies needing more qualified analysts of the large data sets required for producing new drugs.

Similarly, computational oncology aims to determine the future mutations in cancer through algorithmic approaches. Research in this field has led to the use of high-throughput measurement that collects millions of data points using robotics and other sensing devices. This data is collected from DNA, RNA, and other biological structures. Areas of focus include determining the characteristics of tumors, analyzing molecules that are deterministic in causing cancer, and understanding how the human genome relates to the causation of tumors and cancer.

Techniques

Computational biologists use a wide range of software and algorithms to carry out their research.

Unsupervised Learning

Unsupervised learning is a type of algorithm that finds patterns in unlabeled data. One example is k-means clustering, which aims to partition ''n'' data points into ''k'' clusters, in which each data point belongs to the cluster with the nearest mean. Another version is the k-medoids algorithm, which, when selecting a cluster center, picks one of the data points in the cluster rather than the average of the cluster.

The k-medoids algorithm follows these steps:

# Randomly select ''k'' distinct data points. These are the initial cluster centers (medoids).

# Measure the distance between each data point and each of the ''k'' medoids.

# Assign each point to the cluster of its nearest medoid.

# Find the new center of each cluster: the member point (medoid) with the smallest total distance to the other points in that cluster.

# Repeat steps 2 to 4 until the clusters no longer change.

# Assess the quality of the clustering by adding up the variation within each cluster.

# Repeat the process with different values of ''k''.

# Pick the best value for ''k'' by finding the "elbow" in the plot of within-cluster variation against ''k'', the point beyond which increasing ''k'' no longer reduces the variation substantially.
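The steps above can be sketched in a few lines of Python. This is a simplified illustration, not a reference implementation: the function names, the Euclidean distance, the fixed random seed and the iteration cap are all assumptions made for the example, and the input points are assumed to be distinct.

```python
import math
import random


def k_medoids(points, k, seed=0, max_iter=100):
    """Cluster points (tuples of numbers) around k medoids.

    Returns the final medoids and the total within-cluster variation,
    measured here as the summed distance of each point to its medoid.
    """
    rng = random.Random(seed)
    medoids = rng.sample(points, k)  # step 1: random initial medoids
    for _ in range(max_iter):
        # Steps 2 and 3: assign every point to its nearest medoid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, medoids[i]))
            clusters[nearest].append(p)
        # Step 4: the new medoid is the member with the smallest total
        # distance to the other members of its cluster.
        new_medoids = [
            min(members, key=lambda c: sum(math.dist(c, q) for q in members))
            if members else medoids[i]
            for i, members in enumerate(clusters)
        ]
        if new_medoids == medoids:  # step 5: stop once nothing changes
            break
        medoids = new_medoids
    # Step 6: total within-cluster variation.
    cost = sum(min(math.dist(p, m) for m in medoids) for p in points)
    return medoids, cost


def variation_by_k(points, ks, seed=0):
    """Step 7: repeat the clustering for several values of k."""
    return {k: k_medoids(points, k, seed)[1] for k in ks}


if __name__ == "__main__":
    data = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
            (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
    for k, cost in variation_by_k(data, [1, 2, 3, 4]).items():
        print(k, round(cost, 2))
```

Plotting the values returned by `variation_by_k` against ''k'' gives the curve in which the "elbow" of the last step is read off. Because the starting medoids are random, the result can be a local optimum, so in practice the procedure is often rerun with several seeds.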


One example of this in biology is the 3D mapping of a genome. Information on a mouse's HIST1 region of chromosome 13 is gathered from Gene Expression Omnibus. This information contains data on which nuclear profiles show up in certain genomic regions. With this information, the Jaccard distance can be used to find a normalized distance between all the loci.
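As a rough illustration of the Jaccard distance, each locus can be represented by the set of nuclear profiles in which it was detected. The locus names and profile identifiers below are invented for the example and are not taken from the HIST1 data; the distance is one minus the size of the intersection divided by the size of the union.

```python
from itertools import combinations


def jaccard_distance(a: set, b: set) -> float:
    """Return 1 - |a & b| / |a | b|; two empty sets count as identical."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def pairwise_distances(loci: dict) -> dict:
    """Jaccard distance for every unordered pair of loci."""
    return {
        (x, y): jaccard_distance(loci[x], loci[y])
        for x, y in combinations(sorted(loci), 2)
    }


if __name__ == "__main__":
    # Hypothetical data: which nuclear profiles (NP ids) contain each locus.
    loci = {
        "locus_1": {"NP1", "NP2", "NP3"},
        "locus_2": {"NP2", "NP3", "NP4"},
        "locus_3": {"NP7"},
    }
    for pair, d in pairwise_distances(loci).items():
        print(pair, d)
```

A distance of 0 means two loci always appear in the same nuclear profiles and a distance of 1 means they never do, so the resulting values can be passed directly to a clustering method such as k-medoids.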

Graph Analytics

Graph analytics, or network analysis, is the study of graphs that represent connections between different objects. Graphs can represent many kinds of networks in biology, such as protein-protein interaction networks, regulatory networks, and metabolic and biochemical networks. There are many ways to analyze these networks, one of which is centrality. A centrality measure ranks the nodes of a graph by their popularity or centrality, which helps identify the most important nodes. For example, given data on the activity of genes over a time period, degree centrality can show which genes are most active, or which genes interact with others the most, throughout the network. This can help clarify the roles certain genes play in the network. There are many ways to calculate centrality, each of which gives a different kind of information. Centrality measures can be applied to gene regulatory, protein interaction, and metabolic networks, among others.

Supervised Learning

Supervised learning is a type of algorithm that learns from labeled data and learns how to assign labels to future data that is unlabeled. In biology, supervised learning can be helpful when we have data that we know how to categorize and we would like to categorize more data into those categories. A common supervised learning algorithm is the random forest, which uses numerous decision trees to train a model to classify a dataset. Forming the basis of the random forest, a decision tree is a structure which aims to classify, or label, some set of data using certain known features of that data. A practical biological example of this would be taking an individual's genetic data and predicting whether or not that individual is predisposed to develop a certain disease or cancer. At each internal node the algorithm checks the dataset for exactly one feature, a specific gene in the previous example, and then branches left or right based on the result. Then at each leaf node, the decision tree assigns a class label to the dataset. So in practice, the algorithm walks a specific root-to-leaf path through the decision tree based on the input dataset, which results in the classification of that dataset. Commonly, decision trees have target variables that take on discrete values, like yes/no, in which case the tree is referred to as a classification tree, but if the target variable is continuous then it is called a regression tree. A decision tree is constructed by training it on a training set to identify which features are the best predictors of the target variable.
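The following sketch shows a single classification tree, not a full random forest, trained on binary features. It is a simplified illustration under stated assumptions: the feature names (`variant_A`, `variant_B`), the labels and the tiny training set are hypothetical, and Gini impurity is used as the splitting criterion, which is one common choice among several.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_feature(rows, labels, features):
    """Pick the feature whose split lowers the weighted impurity the most."""
    best, best_score = None, gini(labels)
    for f in features:
        absent = [y for x, y in zip(rows, labels) if not x[f]]
        present = [y for x, y in zip(rows, labels) if x[f]]
        if not absent or not present:
            continue  # this feature does not separate the data
        score = (len(absent) * gini(absent)
                 + len(present) * gini(present)) / len(labels)
        if score < best_score:
            best, best_score = f, score
    return best


def build_tree(rows, labels, features):
    """Return a leaf label, or a (feature, absent_subtree, present_subtree) node."""
    f = best_feature(rows, labels, features)
    if f is None:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    remaining = [g for g in features if g != f]
    absent = [(x, y) for x, y in zip(rows, labels) if not x[f]]
    present = [(x, y) for x, y in zip(rows, labels) if x[f]]
    return (
        f,
        build_tree([x for x, _ in absent], [y for _, y in absent], remaining),
        build_tree([x for x, _ in present], [y for _, y in present], remaining),
    )


def predict(tree, row):
    """Walk one root-to-leaf path, checking a single feature at each node."""
    while isinstance(tree, tuple):
        feature, absent_branch, present_branch = tree
        tree = present_branch if row[feature] else absent_branch
    return tree


if __name__ == "__main__":
    # Hypothetical training set: 1 means the variant is present.
    rows = [
        {"variant_A": 1, "variant_B": 0},
        {"variant_A": 1, "variant_B": 1},
        {"variant_A": 0, "variant_B": 1},
        {"variant_A": 0, "variant_B": 0},
    ]
    labels = ["predisposed", "predisposed", "not predisposed", "not predisposed"]
    tree = build_tree(rows, labels, ["variant_A", "variant_B"])
    print(tree)
    print(predict(tree, {"variant_A": 1, "variant_B": 0}))
```

A random forest would train many such trees on random subsets of the rows and features and combine their votes; that step is left out here to keep the example short.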

Open source software

Open source software provides a platform for computational biology where everyone can access and benefit from software developed in research. PLOS cites four main reasons for the use of open source software:

* Reproducibility: This allows researchers to use the exact methods used to calculate the relations between biological data.

* Faster development: Developers and researchers do not have to reinvent existing code for minor tasks. Instead they can use pre-existing programs to save time on the development and implementation of larger projects.

* Increased quality: Having input from multiple researchers studying the same topic makes it less likely that errors remain in the code.

* Long-term availability: Open source programs are not tied to any businesses or patents. This allows them to be posted to multiple web pages, which helps ensure that they remain available in the future.

Research

There are several large conferences that are concerned with computational biology. Some notable examples are Intelligent Systems for Molecular Biology, European Conference on Computational Biology and Research in Computational Molecular Biology. There are also numerous journals dedicated to computational biology. Some notable examples include Journal of Computational Biology and PLOS Computational Biology, a peer-reviewed open access journal that has many notable research projects in the field of computational biology. They provide reviews on software and tutorials for open source software, and display information on upcoming computational biology conferences.

Related fields

Computational biology, bioinformatics and mathematical biology are all interdisciplinary approaches to the life sciences that draw from quantitative disciplines such as mathematics and information science. The NIH describes computational/mathematical biology as the use of computational/mathematical approaches to address theoretical and experimental questions in biology and, by contrast, bioinformatics as the application of information science to understand complex life-sciences data.

Specifically, the NIH defines

While each field is distinct, there may be significant overlap at their interface, so much so that to many, bioinformatics and computational biology are terms that are used interchangeably.

The terms computational biology and evolutionary computation have similar names, but should not be confused. Unlike computational biology, evolutionary computation is not concerned with modeling and analyzing biological data. It instead creates algorithms based on the ideas of evolution across species. Sometimes referred to as genetic algorithms, the research of this field can be applied to computational biology. While evolutionary computation is not inherently a part of computational biology, computational evolutionary biology is a subfield of it.

External links

bioinformatics.org
