Bayesian Statistics

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability, where probability expresses a *degree of belief* in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation, which views probability as the limit of the relative frequency of an event after many trials. More concretely, analysis in Bayesian methods codifies prior knowledge in the form of a prior distribution. Bayesian statistical methods use Bayes' theorem to compute and update probabilities after obtaining new data. Bayes' theorem describes the conditional probability of an event based on data as well as prior information or beliefs about the event or conditions related to the event. For example, in Bayesian inference, Bayes' theorem can ...

Bayesian Inference

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Introduction to Bayes' rule, formal explanation: Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ...
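
The update described above can be sketched for a finite set of hypotheses. The following Python example is a minimal illustration under assumed numbers: the hypothesis names (`fair`, `biased`), the prior, and the likelihood values are hypothetical and chosen only to show the arithmetic of Bayes' theorem.

```python
def bayes_update(prior, likelihood):
    """Return posterior probabilities for each hypothesis.

    prior: dict mapping hypothesis -> P(H)
    likelihood: dict mapping hypothesis -> P(data | H)
    """
    # Numerator of Bayes' theorem for each hypothesis.
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    # Marginal probability of the data (the normalizing constant).
    evidence = sum(unnormalized.values())
    return {h: p / evidence for h, p in unnormalized.items()}


# Hypothetical example: is a coin fair, or biased toward heads?
prior = {"fair": 0.5, "biased": 0.5}
likelihood_of_heads = {"fair": 0.5, "biased": 0.8}

# Posterior after observing a single "heads".
posterior = bayes_update(prior, likelihood_of_heads)
print(posterior)
```

Observing heads shifts belief toward the `biased` hypothesis, because that hypothesis assigns the observation a higher likelihood.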

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Markov Chain Monte Carlo

In statistics, Markov chain Monte Carlo (MCMC) is a class of algorithms used to draw samples from a probability distribution. Given a probability distribution, one can construct a Markov chain whose elements' distribution approximates it – that is, the Markov chain's equilibrium distribution matches the target distribution. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Markov chain Monte Carlo methods are used to study probability distributions that are too complex or too highly dimensional to study with analytic techniques alone. Various algorithms exist for constructing such Markov chains, including the Metropolis–Hastings algorithm. General explanation: Markov chain Monte Carlo methods create samples from a continuous random variable, with probability density proportional to a known function. These samples can be used to e ...
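
As an illustration of sampling from a density known only up to a constant, the following Python sketch implements a random-walk sampler with a symmetric Gaussian proposal, which is the Metropolis special case of Metropolis–Hastings. The target (an unnormalized standard normal), the step size, the burn-in length, and all function names are assumptions made for this example.

```python
import math
import random


def metropolis_hastings(log_density, n_samples, x0=0.0, step=1.0, seed=0):
    """Draw correlated samples from a 1-D density given its unnormalized log."""
    rng = random.Random(seed)
    x = x0
    log_p = log_density(x)
    samples = []
    for _ in range(n_samples):
        # Symmetric random-walk proposal centred on the current state.
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_density(proposal)
        # Accept with probability min(1, p(proposal) / p(current)).
        accept_prob = math.exp(min(0.0, log_p_new - log_p))
        if rng.random() < accept_prob:
            x, log_p = proposal, log_p_new
        # On rejection the current state is recorded again.
        samples.append(x)
    return samples


def log_unnormalized_normal(x):
    """Log of exp(-x^2 / 2): a standard normal without its constant."""
    return -0.5 * x * x


samples = metropolis_hastings(log_unnormalized_normal, n_samples=20000)
kept = samples[1000:]  # discard an assumed burn-in period
mean = sum(kept) / len(kept)
variance = sum((s - mean) ** 2 for s in kept) / len(kept)
print(mean, variance)
```

The sample mean and variance should lie near 0 and 1, the moments of the target, even though the normalizing constant is never computed.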

Mathematical Optimization

Mathematical optimization (alternatively spelled *optimisation*) or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems: Opti ...

Mode (statistics)

In statistics, the mode is the value that appears most often in a set of data values. If $X$ is a discrete random variable, the mode is the value $x$ at which the probability mass function takes its maximum value (i.e., $x = \operatorname{argmax}_{x_i} P(X = x_i)$). In other words, it is the value that is most likely to be sampled. Like the statistical mean and median, the mode is a way of expressing, in a (usually) single number, important information about a random variable or a population. The numerical value of the mode is the same as that of the mean and median in a normal distribution, and it may be very different in highly skewed distributions. The mode is not necessarily unique in a given discrete distribution since the probability mass function may take the same maximum value at several points $x_1$, $x_2$, etc. The most extreme case occurs in uniform distributions, where all values occur equally frequently. A mode of a continuous probability distribution is often conside ...
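
Because the mode need not be unique, a function that computes it is often written to return every most frequent value. The Python sketch below is one way to do this for a non-empty data set; the function name `modes` is chosen for this example.

```python
from collections import Counter


def modes(values):
    """Return all most frequent values of a non-empty data set, sorted."""
    counts = Counter(values)
    highest = max(counts.values())
    # Every value that attains the maximum count is a mode.
    return sorted(v for v, c in counts.items() if c == highest)


print(modes([1, 2, 2, 3, 3]))  # two values tie for most frequent
print(modes([4, 5, 6]))        # uniform data: every value is a mode
```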

Maximum A Posteriori

An estimation procedure that is often claimed to be part of Bayesian statistics is the maximum a posteriori (MAP) estimate of an unknown quantity, which equals the mode of the posterior density with respect to some reference measure, typically the Lebesgue measure. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior density over the quantity one wants to estimate. MAP estimation is therefore a regularization of maximum likelihood estimation, and so is not a well-defined statistic of the Bayesian posterior distribution. Description: Assume that we want to estimate an unobserved population parameter $\theta$ on the basis of observations $x$. Let $f$ be the sampling distribution of $x$, so that $f(x\mid\theta)$ is the probability of $x$ when the underlying population parameter is $\theta$. T ...
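
To make the relationship between ML and MAP estimation concrete, the following Python sketch estimates a coin's heads probability $\theta$ from `k` heads in `n` flips. The Beta(`a`, `b`) prior, the grid search, and the numbers used are assumptions for illustration only. With a uniform prior (`a = b = 1`) the MAP estimate coincides with the ML estimate, while a more informative prior pulls it toward the prior mode.

```python
import math


def log_posterior(theta, k, n, a, b):
    """Unnormalized log posterior: binomial log likelihood + Beta log prior."""
    return (k + a - 1) * math.log(theta) + (n - k + b - 1) * math.log(1 - theta)


def map_estimate(k, n, a, b, grid_size=10001):
    """Approximate the posterior mode by searching a grid inside (0, 1)."""
    grid = [(i + 0.5) / grid_size for i in range(grid_size)]
    return max(grid, key=lambda t: log_posterior(t, k, n, a, b))


k, n = 7, 10                                   # hypothetical data
ml = map_estimate(k, n, a=1, b=1)              # uniform prior: equals ML
regularized = map_estimate(k, n, a=2, b=2)     # prior favours theta near 0.5

print(ml, regularized)
```

For this conjugate model the mode also has the closed form $(k + a - 1)/(n + a + b - 2)$, which the grid search approximates.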

Integral

In mathematics, an integral is the continuous analog of a sum, which is used to calculate areas, volumes, and their generalizations. Integration, the process of computing an integral, is one of the two fundamental operations of calculus, the other being differentiation. Integration was initially used to solve problems in mathematics and physics, such as finding the area under a curve, or determining displacement from velocity. Usage of integration expanded to a wide variety of scientific fields thereafter. A definite integral computes the signed area of the region in the plane that is bounded by the graph of a given function between two points in the real line. Conventionally, areas above the horizontal axis of the plane are positive while areas below are n ...

Outcome (probability)

In probability theory, an outcome is a possible result of an experiment or trial. Each possible outcome of a particular experiment is unique, and different outcomes are mutually exclusive (only one outcome will occur on each trial of the experiment). All of the possible outcomes of an experiment form the elements of a sample space. For the experiment where we flip a coin twice, the four possible *outcomes* that make up our *sample space* are (H, T), (T, H), (T, T) and (H, H), where "H" represents a "heads", and "T" represents a "tails". Outcomes should not be confused with events, which are sets (or informally, "groups") of outcomes. For comparison, we could define an event to occur when "at least one 'heads'" is flipped in the experiment, that is, when the outcome contains at least one 'heads'. This event would contain all outcomes in the sample space except the element (T, T). Sets of outco ...
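
The two-flip experiment can be enumerated directly. The Python sketch below builds the sample space as a set of ordered pairs and the "at least one heads" event as a subset of it; the variable names are chosen for this example.

```python
from itertools import product

# Sample space: every ordered pair of results from two coin flips.
sample_space = set(product("HT", repeat=2))

# Event: the subset of outcomes that contain at least one heads.
at_least_one_heads = {outcome for outcome in sample_space if "H" in outcome}

print(sorted(sample_space))
print(sorted(sample_space - at_least_one_heads))  # outcomes not in the event
```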

Sample Space

In probability theory, the sample space (also called sample description space, possibility space, or outcome space) of an experiment or random trial is the set of all possible outcomes or results of that experiment. A sample space is usually denoted using set notation, and the possible ordered outcomes, or sample points, are listed as elements in the set. It is common to refer to a sample space by the labels *S*, Ω, or *U* (for "universal set"). The elements of a sample space may be numbers, words, letters, or symbols. They can also be finite, countably infinite, or uncountably infinite. A subset of the sample space is an event, denoted by $E$. If the outcome of an experiment is included in $E$, then event $E$ has occurred. For example, if the experiment is tossing a single coin, the sample space is the set $\{H, T\}$, where the outcome $H$ means that the coin is heads and the outcome $T$ means that the coin is tails. The possible events are $E = \{\}$, $E = \{H\}$, $E = \{T\}$, and $E = \{H, T\}$. For tossing two ...

Partition Of A Set

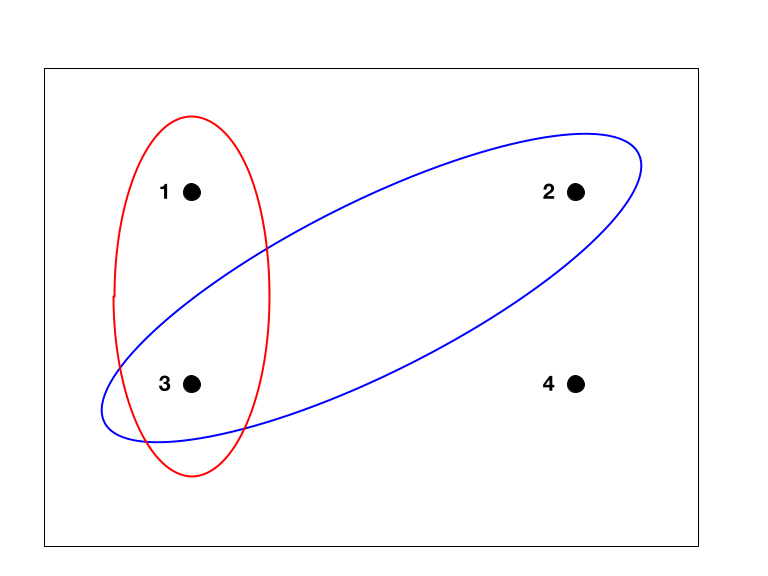

In mathematics, a partition of a set is a grouping of its elements into non-empty subsets, in such a way that every element is included in exactly one subset. Every equivalence relation on a set defines a partition of this set, and every partition defines an equivalence relation. A set equipped with an equivalence relation or a partition is sometimes called a setoid, typically in type theory and proof theory. Definition and notation: A partition of a set *X* is a set of non-empty subsets of *X* such that every element *x* in *X* is in exactly one of these subsets (i.e., the subsets are nonempty mutually disjoint sets). Equivalently, a family of sets *P* is a partition of *X* if and only if all of the following conditions hold:

* The family *P* does not contain the empty set (that is, $\emptyset \notin P$).
* The union of the sets in *P* is equal to *X* (that is, $\textstyle\bigcup_{A \in P} A = X$). The sets in *P* are said ...
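
The definition, that every element of *X* lies in exactly one non-empty subset, can be checked mechanically. The Python sketch below is one possible check; the function name `is_partition` is hypothetical, and the blocks are assumed to be listed without repetition.

```python
def is_partition(blocks, universe):
    """Check whether `blocks` is a partition of `universe`."""
    blocks = [set(b) for b in blocks]
    universe = set(universe)
    # No block may be empty.
    if any(len(b) == 0 for b in blocks):
        return False
    # The blocks must cover the universe and nothing else.
    if set().union(*blocks) != universe:
        return False
    # Given the cover, the blocks are pairwise disjoint exactly when
    # their sizes add up to the size of the universe.
    return sum(len(b) for b in blocks) == len(universe)


print(is_partition([{1, 2}, {3}], {1, 2, 3}))      # each element in one block
print(is_partition([{1, 2}, {2, 3}], {1, 2, 3}))   # 2 appears in two blocks
```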

Law Of Total Probability

In probability theory, the law (or formula) of total probability is a fundamental rule relating marginal probabilities to conditional probabilities. It expresses the total probability of an outcome which can be realized via several distinct events, hence the name. Statement: The law of total probability is a theorem (Zwillinger, D., Kokoska, S. (2000), *CRC Standard Probability and Statistics Tables and Formulae*, CRC Press, p. 31) that states, in its discrete case, if $\{B_n : n = 1, 2, 3, \ldots\}$ is a finite or countably infinite set of mutually exclusive and collectively exhaustive events, then for any event $A$

$$P(A)=\sum_n P(A\cap B_n)$$

or, alternatively,

$$P(A)=\sum_n P(A\mid B_n)P(B_n),$$

where, for any $n$, if $P(B_n) = 0$, then these terms are simply omitted from the summation since $P(A\mid B_n)$ is finite. The summation can be interpreted as a weighted average, and consequently the marginal probability, $P(A)$, is sometimes called "average probability"; "overall probability" is sometimes used i ...
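
The weighted-average reading of the formula can be sketched numerically. In the Python example below, the function name and the numbers (two events $B_1$, $B_2$ with probabilities 0.6 and 0.4, and conditional probabilities 0.1 and 0.5) are hypothetical.

```python
def total_probability(priors, conditionals):
    """Compute P(A) = sum_n P(A | B_n) * P(B_n).

    priors: P(B_n) for mutually exclusive, collectively exhaustive events
    conditionals: P(A | B_n), in the same order
    """
    if abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("the events B_n must be collectively exhaustive")
    # Terms with P(B_n) = 0 are omitted from the summation.
    return sum(c * p for p, c in zip(priors, conditionals) if p > 0)


p_a = total_probability(priors=[0.6, 0.4], conditionals=[0.1, 0.5])
print(p_a)  # approximately 0.26
```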

Posterior Probability

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition (such as a scientific hypothesis, or parameter values), given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating. In the context of Bayesian statistics, the posterior probability distribution usually describes the epistemic uncertainty about statistical parameters conditional on a collection of observed data. From a given posterior distribution, various point and interval estimates can be derived, such as the maximum a posteriori (MAP) or the highest posterior density int ...
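
The statement that a posterior may serve as the prior in another round of updating can be illustrated with a short Python sketch. The two hypotheses, their heads probabilities, and the observation sequence are assumptions for this example, and the observations are assumed conditionally independent given the hypothesis.

```python
def update(prior, likelihood):
    """One round of Bayesian updating over a finite set of hypotheses."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Hypothetical probability of heads under each hypothesis.
p_heads = {"fair": 0.5, "biased": 0.8}


def likelihood(observation):
    """P(observation | hypothesis) for a single flip, "H" or "T"."""
    return {h: (p if observation == "H" else 1.0 - p) for h, p in p_heads.items()}


# Sequential updating: each posterior becomes the next prior.
belief = {"fair": 0.5, "biased": 0.5}
for obs in ["H", "H", "T"]:
    belief = update(belief, likelihood(obs))

# Batch updating: all three flips enter through one joint likelihood.
batch_likelihood = {h: p * p * (1.0 - p) for h, p in p_heads.items()}
batch = update({"fair": 0.5, "biased": 0.5}, batch_likelihood)

print(belief, batch)
```

Under the independence assumption, updating one observation at a time yields the same posterior as processing all observations at once.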