Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently on large one-dimensional arrays of data called vectors. This is in contrast to scalar processors, whose instructions operate on single data items only, and to some of those same scalar processors that have additional single instruction, multiple data (SIMD) or SWAR arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector processing techniques are also used in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design from the 1970s into the 1990s, notably in the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to a decline in vector supercomput ...
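
The contrast between scalar and vector instructions can be illustrated with a toy model. The sketch below is only a conceptual illustration, not a description of any real instruction set: the `ToyMachine` class and its `add_scalar` and `add_vector` methods are hypothetical names introduced here, and the counter simply records how many instructions the program would issue in each style.

```python
class ToyMachine:
    """Hypothetical machine that counts issued instructions (illustration only)."""

    def __init__(self):
        self.instructions_issued = 0

    def add_scalar(self, x, y):
        # A scalar instruction operates on a single pair of data items.
        self.instructions_issued += 1
        return x + y

    def add_vector(self, xs, ys):
        # A vector instruction operates on whole one-dimensional arrays at once.
        if len(xs) != len(ys):
            raise ValueError("vectors must have the same length")
        self.instructions_issued += 1
        return [x + y for x, y in zip(xs, ys)]


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]

# Scalar style: one add instruction per element.
scalar_machine = ToyMachine()
scalar_result = [scalar_machine.add_scalar(x, y) for x, y in zip(a, b)]

# Vector style: a single add instruction for the whole array.
vector_machine = ToyMachine()
vector_result = vector_machine.add_vector(a, b)

print(scalar_result == vector_result)
print(scalar_machine.instructions_issued, vector_machine.instructions_issued)
```

Both styles compute the same result. In this model the scalar version issues one instruction per element, while the vector version issues one instruction for the whole array.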

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computing machinery. It includes the study and experimentation of algorithmic processes, and development of both hardware and software. Computing has scientific, engineering, mathematical, technological and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology and software engineering. The term "computing" is also synonymous with counting and calculating. In earlier times, it was used in reference to the action performed by mechanical computing machines, and before that, to human computers.

History: The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. Computing is intimately tied to the representation of numbers, though mathematical conc ...

Central Processing Unit

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes instructions comprising a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU) that performs arithmetic and logic operations, processor registers that supply operands to the ALU and store the results of ALU operations, and a control unit that orchestrates the fetching (from memory), decoding and execution (of instructions) by directing the coordinated operations of the ALU, registers and other co ...

Texas Instruments

Texas Instruments Incorporated (TI) is an American technology company headquartered in Dallas, Texas, that designs and manufactures semiconductors and various integrated circuits, which it sells to electronics designers and manufacturers globally. It is one of the top 10 semiconductor companies worldwide based on sales volume. The company's focus is on developing analog chips and embedded processors, which account for more than 80% of its revenue. TI also produces digital light processing technology and education technology products including calculators, microcontrollers, and multi-core processors. The company held 45,000 patents worldwide as of 2016. Texas Instruments emerged in 1951 after a reorganization of Geophysical Service Incorporated, a company founded in 1930 that manufactured equipment for use in the seismic industry, as well as defense electronics. TI produced the world's first commercial silicon transistor in 1954, and the same year designed and manufactured t ...

Control Data Corporation

Control Data Corporation (CDC) was a mainframe and supercomputer firm. CDC was one of the nine major United States computer companies through most of the 1960s; the others were IBM, Burroughs Corporation, DEC, NCR, General Electric, Honeywell, RCA, and UNIVAC. CDC was well known and highly regarded throughout the industry at the time. For most of the 1960s, Seymour Cray worked at CDC and developed a series of machines that were the fastest computers in the world by far, until Cray left the company to found Cray Research (CRI) in the 1970s. After several years of losses in the early 1980s, in 1988 CDC started to leave the computer manufacturing business and sell the related parts of the company, a process that was completed in 1992 with the creation of Control Data Systems, Inc. The remaining businesses of CDC currently operate as Ceridian.

Background and origins: World War II–1957. During World War II the U.S. Navy had built up a classified team of engineers to build codeb ...

Computer For Operations With Functions

Within computer engineering and computer science, a computer for operations with (mathematical) functions, unlike the usual computer, operates with functions at the hardware level (i.e. without programming these operations); see also http://www.sigcis.org/files/SIGCISMC2010_001.pdf.

History: A computing machine for operations with functions was presented and developed by Mikhail Kartsev in 1967. Among the operations of this computing machine were addition, subtraction and multiplication of functions, comparison of functions, the same operations between a function and a number, finding the maximum of a function, computing the indefinite integral, computing the definite integral of the derivative of two functions, the derivative of two functions, shift of a function along the X-axis, etc. By its architecture this computing machine was (using modern terminology) a vector processor or array processor, a central processin ...

Flynn's Taxonomy

Flynn's taxonomy is a classification of computer architectures, proposed by Michael J. Flynn in 1966 and extended in 1972. The classification system has stuck, and it has been used as a tool in design of modern processors and their functionalities. Since the rise of multiprocessing central processing units (CPUs), a multiprogramming context has evolved as an extension of the classification system. Vector processing, covered by Duncan's taxonomy, is missing from Flynn's work because the Cray-1 was released in 1977: Flynn's second paper was published in 1972.

Classifications: The four initial classifications defined by Flynn are based upon the number of concurrent instruction (or control) streams and data streams available in the architecture. Flynn later defined three additional sub-categories of SIMD in 1972.

Single instruction stream, single data stream (SISD): A sequential computer which exploits no parallelism in either the instruction or data streams. Single control unit (C ...

Massively Parallel

Massively parallel is the term for using a large number of computer processors (or separate computers) to simultaneously perform a set of coordinated computations in parallel. GPUs are massively parallel architectures with tens of thousands of threads. One approach is grid computing, where the processing power of many computers in distributed, diverse administrative domains is opportunistically used whenever a computer is available (Radu Prodan and Thomas Fahringer, Grid computing: experiment management, tool integration, and scientific workflows, 2007, pages 1–4). An example is BOINC, a volunteer-based, opportunistic grid system, whereby the grid provides power only on a best-effort basis (Francisco Fernández de Vega, Parallel and Distributed Computational Intelligence, 2010, pages 65–68). Another approach is grouping many processors in close proximity to each other, as in a computer cluster. In such a centralized system the speed and flexibility of the interconnect beco ...

Computational Fluid Dynamics

Computational fluid dynamics (CFD) is a branch of fluid mechanics that uses numerical analysis and data structures to analyze and solve problems that involve fluid flows. Computers are used to perform the calculations required to simulate the free-stream flow of the fluid, and the interaction of the fluid (liquids and gases) with surfaces defined by boundary conditions. With high-speed supercomputers, better solutions can be achieved, and are often required to solve the largest and most complex problems. Ongoing research yields software that improves the accuracy and speed of complex simulation scenarios such as transonic or turbulent flows. Initial validation of such software is typically performed using experimental apparatus such as wind tunnels. In addition, previously performed analytical or empirical analysis of a particular problem can be used for comparison. A final validation is often performed using full-scale testing, such as flight tests. CFD is applied to ...

GFLOPS

In computing, floating point operations per second (FLOPS, flops or flop/s) is a measure of computer performance, useful in fields of scientific computations that require floating-point calculations. For such cases, it is a more accurate measure than measuring instructions per second.

Floating-point arithmetic: Floating-point arithmetic is needed for very large or very small real numbers, or computations that require a large dynamic range. Floating-point representation is similar to scientific notation, except everything is carried out in base two, rather than base ten. The encoding scheme stores the sign, the exponent (in base two for Cray and VAX, base two or ten for IEEE floating point formats, and base 16 for IBM Floating Point Architecture) and the significand (number after the radix point). While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32-bit numbers called single precision, as well as 64-b ...
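
The sign, exponent and significand fields described above can be inspected directly. The following is a minimal sketch for the IEEE 754 32-bit single precision format only, assuming its layout of 1 sign bit, 8 exponent bits (biased by 127) and 23 fraction bits. The function names `decode_float32` and `value_from_fields` are hypothetical helpers introduced for this illustration, and the reconstruction handles normal numbers only (not zeros, subnormals, infinities or NaNs).

```python
import struct


def decode_float32(x):
    """Split a number, stored as IEEE 754 single precision, into its three fields."""
    # Pack as a big-endian 32-bit float, then reread the same bytes as an unsigned int.
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF        # 23 bits after the radix point
    return sign, exponent, fraction


def value_from_fields(sign, exponent, fraction):
    """Rebuild the value of a normal number from its fields (assumes 1 <= exponent <= 254)."""
    significand = 1 + fraction / 2**23   # implicit leading 1 for normal numbers
    return (-1) ** sign * significand * 2.0 ** (exponent - 127)


print(decode_float32(1.0))    # (0, 127, 0)
print(decode_float32(-2.5))   # (1, 128, 2097152)
print(value_from_fields(*decode_float32(0.15625)))  # 0.15625
```

As in scientific notation, the value is the significand scaled by a power of the base, here base two: -2.5 is stored as -1.25 × 2^1, so the biased exponent is 128 and the fraction field encodes 0.25.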

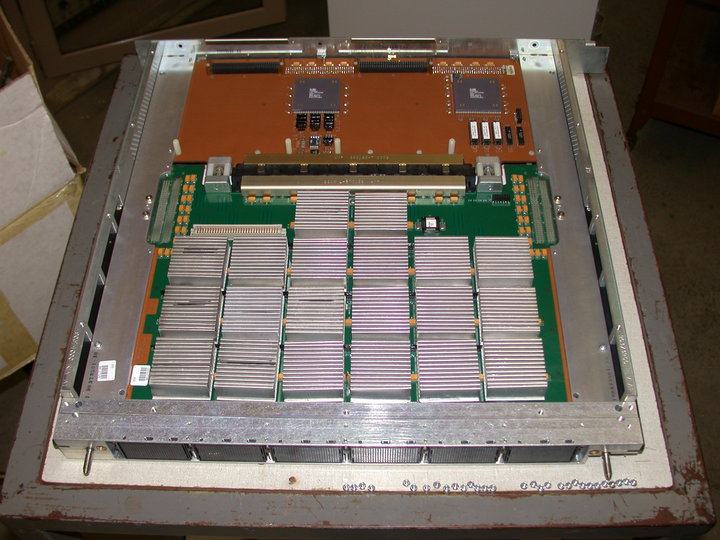

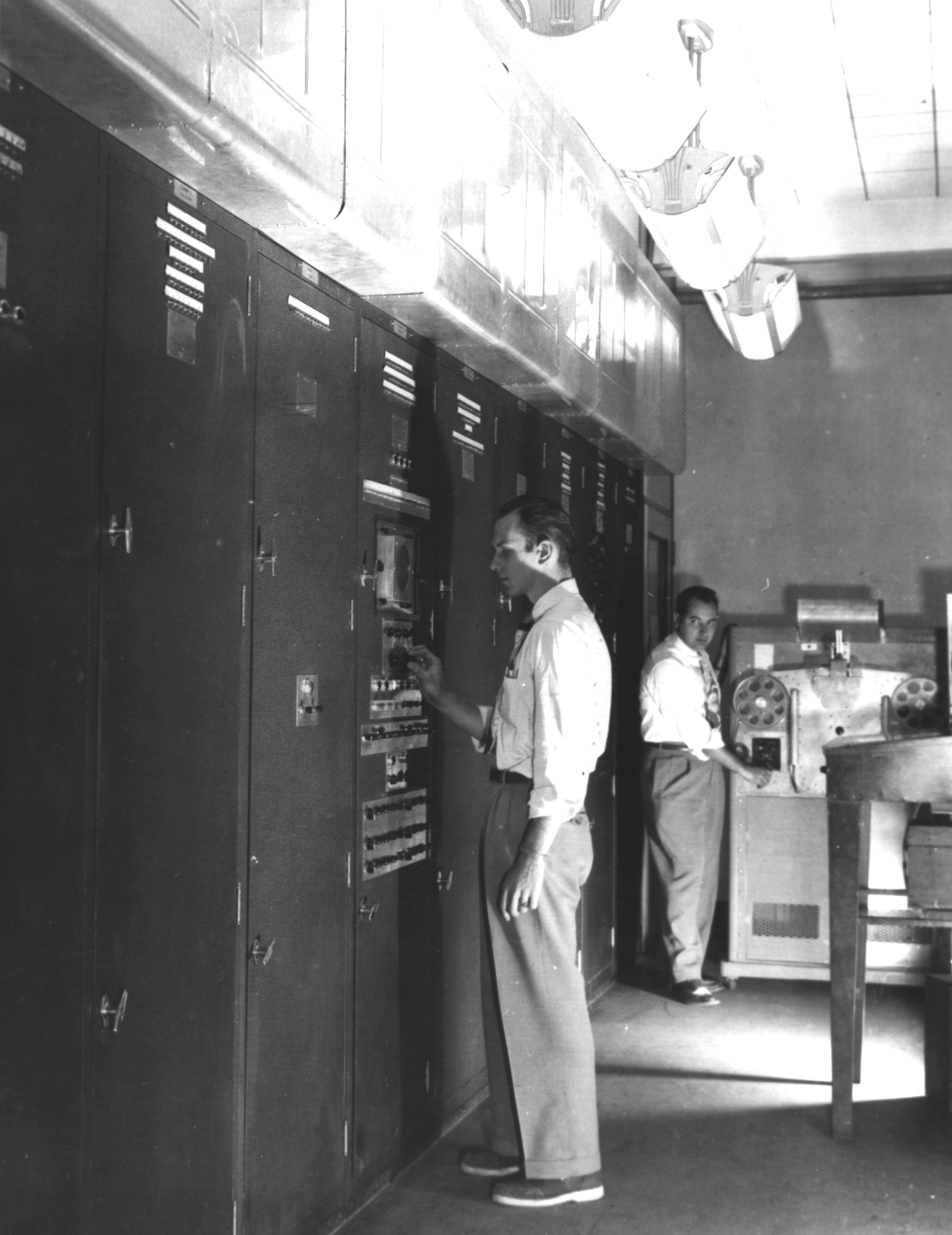

ILLIAC IV

The ILLIAC IV was the first massively parallel computer. The system was originally designed to have 256 64-bit floating-point units (FPUs) and four central processing units (CPUs) able to process 1 billion operations per second. Due to budget constraints, only a single "quadrant" with 64 FPUs and a single CPU was built. Since the FPUs all had to process the same instruction – ADD, SUB etc. – in modern terminology the design would be considered to be single instruction, multiple data, or SIMD. The concept of building a computer using an array of processors came to Daniel Slotnick while working as a programmer on the IAS machine in 1952. A formal design did not start until 1960, when Slotnick was working at Westinghouse Electric and arranged development funding under a US Air Force contract. When that funding ended in 1964, Slotnick moved to the University of Illinois and joined the Illinois Automatic Computer (ILLIAC) team. With funding from Advanced Research Projects Agency ( ...

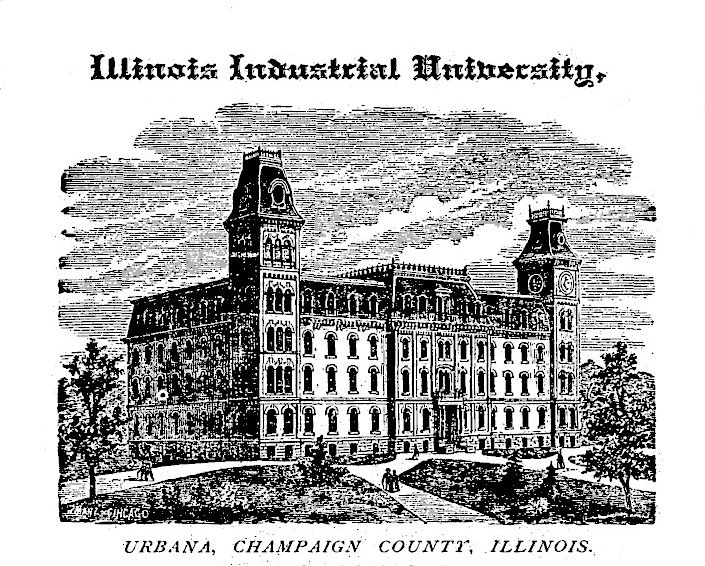

University Of Illinois At Urbana–Champaign

The University of Illinois Urbana-Champaign (U of I, Illinois, University of Illinois, or UIUC) is a public land-grant research university in Illinois in the twin cities of Champaign and Urbana. It is the flagship institution of the University of Illinois system and was founded in 1867. Enrolling over 56,000 undergraduate and graduate students, the University of Illinois is one of the largest public universities by enrollment in the country. The University of Illinois Urbana-Champaign is a member of the Association of American Universities and is classified among "R1: Doctoral Universities – Very high research activity". In fiscal year 2019, research expenditures at Illinois totaled $652 million. The campus library system possesses the second-largest university library in the United States by holdings after Harvard University. The university also hosts the National Center for Supercomputing Applications and is home to the fastest supercomputer on a university campus. The ...