Logistic-beta Distribution

The term generalized logistic distribution is used as the name for several different families of probability distributions. For example, Johnson et al. (Johnson, N.L., Kotz, S., Balakrishnan, N. (1995) ''Continuous Univariate Distributions, Volume 2'', Wiley, pages 140–142) list four forms. Type I has also been called the skew-logistic distribution. Type IV subsumes the other types and is obtained by applying the logit transform to beta random variates. Following the same convention as for the log-normal distribution, type IV may be referred to as the logistic-beta distribution, with reference to the standard logistic function, which is the inverse of the logit transform. For other families of distributions that have also been called generalized logistic distributions, see the shifted log-logistic distribution, which is a generalization of the log-logistic distribution; and the metalog ("meta-logistic") distribution, which is highly shape-and-bounds f ...
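
The construction described above (logit applied to beta variates) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `logit`, `logistic`, and `sample_logistic_beta`, the shape parameters, and the seed are assumptions chosen for the example, not part of the source.

```python
import math
import random


def logit(p):
    """Logit transform: maps a probability in (0, 1) to the real line."""
    return math.log(p / (1.0 - p))


def logistic(x):
    """Standard logistic function, the inverse of the logit transform."""
    return 1.0 / (1.0 + math.exp(-x))


def sample_logistic_beta(alpha, beta, n, seed=0):
    """Draw n type IV (logistic-beta) variates as logit(Beta(alpha, beta)).

    The name and signature are hypothetical, chosen for this sketch.
    """
    rng = random.Random(seed)
    return [logit(rng.betavariate(alpha, beta)) for _ in range(n)]


# With alpha == beta the beta variate is symmetric about 1/2, so the
# transformed variate is symmetric about 0 and the sample mean should be small.
samples = sample_logistic_beta(2.0, 2.0, 20000)
sample_mean = sum(samples) / len(samples)
print(f"sample mean for alpha = beta = 2: {sample_mean:.3f}")
```

Mapping the samples back through `logistic` recovers values in (0, 1), which is one way to sanity-check that the two transforms are inverses of each other.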

Probability Distributions

In probability theory and statistics, a probability distribution is a function that gives the probabilities of occurrence of possible events for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names. Introduction A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sa ...

Generalization With Location And Scale Parameters

A generalization is a form of abstraction whereby common properties of specific instances are formulated as general concepts or claims. Generalizations posit the existence of a domain or set of elements, as well as one or more common characteristics shared by those elements (thus creating a conceptual model). As such, they are the essential basis of all valid deductive inferences (particularly in logic, mathematics and science), where the process of verification is necessary to determine whether a generalization holds true for any given situation. Generalization can also be used to refer to the process of identifying the parts of a whole, as belonging to the whole. The parts, which might be unrelated when left on their own, may be brought together as a group, hence belonging to the whole by establishing a common relation between them. However, the parts cannot be generalized into a whole until a common relation is established among ''all'' parts. This does not mean that the ...

Normal Distribution

In probability theory and statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is f(x) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,. The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma^2 is the variance. The standard deviation of the distribution is \sigma (sigma). A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution c ...
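
As a minimal sketch, the normal density with mean mu and standard deviation sigma can be evaluated directly from its closed form. The helper names `normal_pdf` and `riemann_total`, and the integration window of ten standard deviations, are assumptions made for this illustration.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Normal density: exp(-(x - mu)^2 / (2 sigma^2)) / sqrt(2 pi sigma^2)."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def riemann_total(mu, sigma, half_width=10.0, steps=20000):
    """Midpoint-rule integral of the density over mu +/- half_width * sigma."""
    a = mu - half_width * sigma
    h = 2.0 * half_width * sigma / steps
    return h * sum(normal_pdf(a + (i + 0.5) * h, mu, sigma) for i in range(steps))


# The density peaks at the mean and integrates to (approximately) one.
print(normal_pdf(0.0))
print(riemann_total(1.0, 2.0))
```

The numerical integral is only a sanity check that the normalizing constant is right; it is not how probabilities would normally be computed in practice.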

Heavy-tailed Distributions

In probability theory, heavy-tailed distributions are probability distributions whose tails are not exponentially bounded: that is, they have heavier tails than the exponential distribution. Roughly speaking, “heavy-tailed” means the distribution decreases more slowly than an exponential distribution, so extreme values are more likely. In many applications it is the right tail of the distribution that is of interest, but a distribution may have a heavy left tail, or both tails may be heavy. There are three important subclasses of heavy-tailed distributions: the fat-tailed distributions, the long-tailed distributions, and the subexponential distributions. In practice, all commonly used heavy-tailed distributions belong to the subexponential class, introduced by Jozef Teugels. There is still some discrepancy over the use of the term heavy-tailed. There are two other definitions in use. Some authors use the term to refer to those distributions which do not have all their po ...

Comparison Of Log-pdf's- Sigmoid-beta Vs Normal Vs Cauchy

Comparison or comparing is the act of evaluating two or more things by determining the relevant, comparable characteristics of each thing, and then determining which characteristics of each are similar to the other, which are different, and to what degree. Where characteristics are different, the differences may then be evaluated to determine which thing is best suited for a particular purpose. The description of similarities and differences found between the two things is also called a comparison. Comparison can take many distinct forms, varying by field. To compare things, they must have characteristics that are similar enough in relevant ways to merit comparison. If two things are too different to compare in a useful way, an attempt to compare them is colloquially referred to in English as "comparing apples and oranges." Comparison is widely used in society, in science and the arts. General usage Comparison is a natural activity, which even animals engage in when decidin ...

Chirality (mathematics)

In geometry, a figure is chiral (and said to have chirality) if it is not identical to its mirror image, or, more precisely, if it cannot be mapped to its mirror image by rotations and translations alone. An object that is not chiral is said to be ''achiral''. A chiral object and its mirror image are said to be enantiomorphs. The word ''chirality'' is derived from the Greek χείρ (cheir), the hand, the most familiar chiral object; the word ''enantiomorph'' stems from the Greek ἐναντίος (enantios) 'opposite' + μορφή (morphe) 'form'. Examples Some chiral three-dimensional objects, such as the helix, can be assigned a right or left handedness, according to the right-hand rule. Many other familiar objects exhibit the same chiral symmetry of the human body, such as gloves and shoes. Right shoes differ from left shoes only by being mirror images of each other. In contrast, thin gloves may not be considered chiral if you can wear them inside ou ...

Skewness

In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. The skewness value can be positive, zero, negative, or undefined. For a unimodal distribution (a distribution with a single peak), negative skew commonly indicates that the ''tail'' is on the left side of the distribution, and positive skew indicates that the tail is on the right. In cases where one tail is long but the other tail is fat, skewness does not obey a simple rule. For example, a zero value in skewness means that the tails on both sides of the mean balance out overall; this is the case for a symmetric distribution but can also be true for an asymmetric distribution where one tail is long and thin, and the other is short but fat. Thus, the judgement on the symmetry of a given distribution by using only its skewness is risky; the distribution shape must be taken into account. Introduction Consider the two d ...
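
The remark that zero skewness does not imply symmetry can be checked on a small discrete example. The sketch below assumes the usual moment-based definition (third central moment divided by the 1.5th power of the variance); the three-point distribution and the helper names are constructed purely for illustration.

```python
def central_moment(pmf, order):
    """Central moment of a discrete distribution given as {value: probability}."""
    mean = sum(x * p for x, p in pmf.items())
    return sum(p * (x - mean) ** order for x, p in pmf.items())


def skewness(pmf):
    """Moment-based skewness: third central moment over variance ** 1.5."""
    return central_moment(pmf, 3) / central_moment(pmf, 2) ** 1.5


# Illustrative three-point distribution: clearly asymmetric (a short, heavy
# left side and a long, thin right side), yet its third central moment vanishes.
asymmetric = {-2: 0.4, 1: 0.5, 3: 0.1}

# A plainly right-skewed distribution for contrast.
right_skewed = {0: 0.9, 10: 0.1}

print(skewness(asymmetric))    # zero up to floating-point rounding
print(skewness(right_skewed))  # positive
```

Here the mean is 0 and the contributions to the third moment cancel (0.4 times -8, 0.5 times 1, and 0.1 times 27), even though the distribution is not a mirror image of itself about the mean.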

Generalized Logistic Distribution

The term generalized logistic distribution is used as the name for several different families of probability distributions. For example, Johnson et al. (Johnson, N.L., Kotz, S., Balakrishnan, N. (1995) ''Continuous Univariate Distributions, Volume 2'', Wiley, pages 140–142) list four forms. Type I has also been called the skew-logistic distribution. Type IV subsumes the other types and is obtained by applying the logit transform to beta random variates. Following the same convention as for the log-normal distribution, type IV may be referred to as the logistic-beta distribution, with reference to the standard logistic function, which is the inverse of the logit transform. For other families of distributions that have also been called generalized logistic distributions, see the shifted log-logistic distribution, which is a generalization of the log-logistic distribution; and the metalog ("meta-lo ...

Cumulant Generating Function

In probability theory and statistics, the cumulants of a probability distribution are a set of quantities that provide an alternative to the ''moments'' of the distribution. Any two probability distributions whose moments are identical will have identical cumulants as well, and vice versa. The first cumulant is the mean, the second cumulant is the variance, and the third cumulant is the same as the third central moment. But fourth and higher-order cumulants are not equal to central moments. In some cases theoretical treatments of problems in terms of cumulants are simpler than those using moments. In particular, when two or more random variables are statistically independent, the nth-order cumulant of their sum is equal to the sum of their nth-order cumulants. As well, the third and higher-order cumulants of a normal distribution are zero, and it is the only distribution with this property. Just as for moments, where ''joint moments'' are used for collections of random variables ...
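
The additivity property can be verified exactly on small discrete distributions. The following sketch uses only the first three cumulants (mean, variance, third central moment, as stated above); the fair die, the biased coin, and the helper names are assumptions chosen for the example.

```python
from collections import defaultdict


def convolve(pmf_x, pmf_y):
    """Distribution of X + Y for independent discrete X and Y."""
    out = defaultdict(float)
    for x, px in pmf_x.items():
        for y, py in pmf_y.items():
            out[x + y] += px * py
    return dict(out)


def first_three_cumulants(pmf):
    """Return (mean, variance, third central moment) of {value: probability}."""
    mean = sum(x * p for x, p in pmf.items())
    var = sum(p * (x - mean) ** 2 for x, p in pmf.items())
    third = sum(p * (x - mean) ** 3 for x, p in pmf.items())
    return mean, var, third


die = {face: 1.0 / 6.0 for face in range(1, 7)}  # fair six-sided die
coin = {0: 0.7, 1: 0.3}                          # biased coin, illustrative

k_die = first_three_cumulants(die)
k_coin = first_three_cumulants(coin)
k_sum = first_three_cumulants(convolve(die, coin))

# Each cumulant of the sum matches the sum of the individual cumulants.
for order, (a, b, s) in enumerate(zip(k_die, k_coin, k_sum), start=1):
    print(f"kappa_{order}: {a:.6f} + {b:.6f} vs {s:.6f}")
```

The same check would fail for raw or central moments of order four and above, which is one reason cumulants are convenient when working with sums of independent variables.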

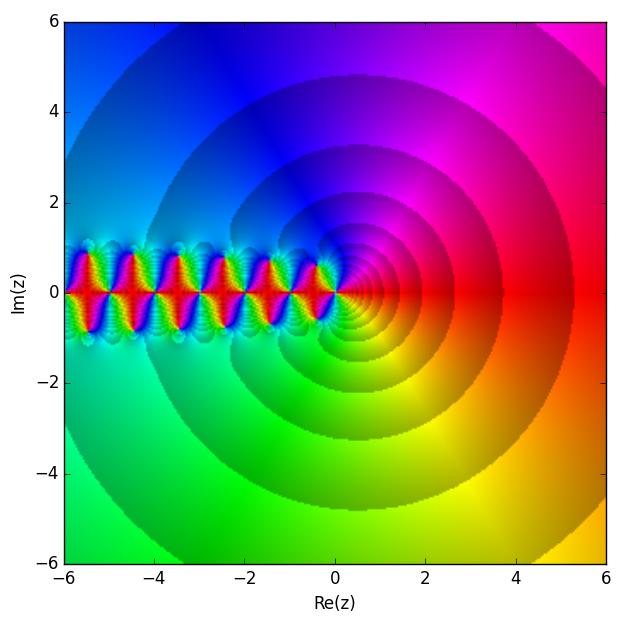

Polygamma Function

In mathematics, the polygamma function of order m is a meromorphic function on the complex numbers \mathbb{C} defined as the (m+1)th derivative of the logarithm of the gamma function:
:\psi^{(m)}(z) := \frac{d^m}{dz^m} \psi(z) = \frac{d^{m+1}}{dz^{m+1}} \ln\Gamma(z).
Thus
:\psi^{(0)}(z) = \psi(z) = \frac{\Gamma'(z)}{\Gamma(z)}
holds where \psi(z) is the digamma function and \Gamma(z) is the gamma function. They are holomorphic on \mathbb{C} \backslash \mathbb{Z}_{\le 0}. At all the nonpositive integers these polygamma functions have a pole of order m+1. The function \psi^{(1)}(z) is sometimes called the trigamma function. Integral representation When m > 0 and \operatorname{Re} z > 0, the polygamma function equals
:\begin{aligned} \psi^{(m)}(z) &= (-1)^{m+1}\int_0^\infty \frac{t^m e^{-zt}}{1-e^{-t}}\,\mathrm{d}t \\ &= -\int_0^1 \frac{t^{z-1}}{1-t}(\ln t)^m\,\mathrm{d}t\\ &= (-1)^{m+1}m!\,\zeta(m+1,z) \end{aligned}
where \zeta(s,q) is the Hurwitz zeta function. This expresses the polygamma function as the Laplace transform of (-1)^{m+1} \frac{t^m}{1-e^{-t}}. It follows from Bernstein's theorem on monotone functions that, for m > 0 and x real and non-negative, (-1)^{m+1} \psi^{(m)}(x) is a completely monotone function. Setting m = 0 in the above ...
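
The relation between the polygamma function and the Hurwitz zeta function suggests a simple way to approximate it for real positive arguments. The sketch below is not a production algorithm: it assumes m >= 1 and x > 0, sums the defining series of the Hurwitz zeta function directly, and estimates the remainder with the first two Euler-Maclaurin terms. The function names and the default of 1000 terms are choices made for this example.

```python
import math


def hurwitz_zeta(s, q, terms=1000):
    """Approximate zeta(s, q) = sum_{k >= 0} (q + k)^(-s) for s > 1, q > 0."""
    if s <= 1 or q <= 0:
        raise ValueError("this sketch needs s > 1 and q > 0")
    head = sum((q + k) ** (-s) for k in range(terms))
    a = q + terms
    # Remainder estimate: integral of the summand from `terms` to infinity,
    # plus half of the first omitted term (leading Euler-Maclaurin terms).
    tail = a ** (1 - s) / (s - 1) + 0.5 * a ** (-s)
    return head + tail


def polygamma(m, x):
    """psi^(m)(x) = (-1)^(m+1) * m! * zeta(m + 1, x) for integer m >= 1, x > 0."""
    if m < 1:
        raise ValueError("the zeta-series form used here needs m >= 1")
    return (-1) ** (m + 1) * math.factorial(m) * hurwitz_zeta(m + 1, x)


print(polygamma(1, 1.0))  # trigamma at 1, to compare with pi^2 / 6
print(polygamma(2, 1.0))  # to compare with -2 * zeta(3)
```

The case m = 0 (the digamma function) is excluded because the corresponding series diverges; it needs a different representation.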

Trigamma Function

In mathematics, the trigamma function, denoted \psi_1(z) or \psi^{(1)}(z), is the second of the polygamma functions, and is defined by
: \psi_1(z) = \frac{d^2}{dz^2} \ln\Gamma(z).
It follows from this definition that
: \psi_1(z) = \frac{d}{dz} \psi(z)
where \psi(z) is the digamma function. It may also be defined as the sum of the series
: \psi_1(z) = \sum_{n = 0}^{\infty}\frac{1}{(z + n)^2},
making it a special case of the Hurwitz zeta function
: \psi_1(z) = \zeta(2,z).
Note that the last two formulas are valid when 1 - z is not a natural number. Calculation A double integral representation, as an alternative to the ones given above, may be derived from the series representation:
: \psi_1(z) = \int_0^1\!\!\int_x^1\frac{x^{z-1}}{y(1 - x)}\,dy\,dx
using the formula for the sum of a geometric series. Integration over y yields:
: \psi_1(z) = -\int_0^1\frac{x^{z-1}\ln x}{1-x}\,dx
An asymptotic expansion as a Laurent series can be obtained via the derivative of the asymptotic expansion of the digamma function:
:\begin{aligned} \psi_1(z) &\sim \frac{d}{dz}\left(\ln z - \sum_{n=1}^\infty \frac{B_n}{n z^n}\right) \\ &= \fra ...
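
The single-integral representation of the trigamma function, minus the integral over (0, 1) of x^(z-1) ln(x) / (1 - x), can be checked numerically. The sketch below uses a plain midpoint rule and, as an assumption to keep the integrand bounded near 0, restricts itself to real z > 1; the function name and step count are illustrative.

```python
import math


def trigamma_integral(z, steps=20000):
    """Midpoint-rule estimate of -int_0^1 x^(z-1) * ln(x) / (1 - x) dx, z > 1."""
    if z <= 1:
        raise ValueError("this sketch assumes real z > 1")
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h  # midpoints avoid the endpoints x = 0 and x = 1
        total += x ** (z - 1) * math.log(x) / (1.0 - x)
    return -h * total


# Reference values follow from the series: psi_1(1) = pi^2 / 6 and
# psi_1(z + 1) = psi_1(z) - 1 / z^2.
print(trigamma_integral(2.0), math.pi ** 2 / 6 - 1)
print(trigamma_integral(3.0), math.pi ** 2 / 6 - 1 - 0.25)
```

A midpoint rule is used because it never evaluates the integrand at the endpoints, where the expression as written is undefined even though its limits are finite for z > 1.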