Rate–distortion theory on:

[Wikipedia]

[Google]

[Amazon]

Rate–distortion theory is a major branch of

Rate–distortion theory gives an analytical expression for how much compression can be achieved using lossy compression methods. Many of the existing audio, speech, image, and video compression techniques have transforms, quantization, and bit-rate allocation procedures that capitalize on the general shape of rate–distortion functions.

Rate–distortion theory was created by

Rate–distortion theory gives an analytical expression for how much compression can be achieved using lossy compression methods. Many of the existing audio, speech, image, and video compression techniques have transforms, quantization, and bit-rate allocation procedures that capitalize on the general shape of rate–distortion functions.

Rate–distortion theory was created by

"Rethinking Lossy Compression: The Rate-Distortion-Perception Tradeoff"

Proceedings of the International Conference on Machine Learning, 2019. In the most simple case (which is actually used in most cases), the distortion is defined as the expected value of the square of the difference between input and output signal (i.e., the

Rate–distortion theory tell us that 'no compression system exists that performs outside the gray area'. The closer a practical compression system is to the red (lower) bound, the better it performs. As a general rule, this bound can only be attained by increasing the coding block length parameter. Nevertheless, even at unit blocklengths one can often find good (scalar) quantizers that operate at distances from the rate–distortion function that are practically relevant.

This rate–distortion function holds only for Gaussian memoryless sources. It is known that the Gaussian source is the most "difficult" source to encode: for a given mean square error, it requires the greatest number of bits. The performance of a practical compression system working on—say—images, may well be below the lower bound shown.

Rate–distortion theory tell us that 'no compression system exists that performs outside the gray area'. The closer a practical compression system is to the red (lower) bound, the better it performs. As a general rule, this bound can only be attained by increasing the coding block length parameter. Nevertheless, even at unit blocklengths one can often find good (scalar) quantizers that operate at distances from the rate–distortion function that are practically relevant.

This rate–distortion function holds only for Gaussian memoryless sources. It is known that the Gaussian source is the most "difficult" source to encode: for a given mean square error, it requires the greatest number of bits. The performance of a practical compression system working on—say—images, may well be below the lower bound shown.

PyRated

Python code for basic calculations in rate-distortion theory.

VcDemo Image and Video Compression Learning Tool

{{DEFAULTSORT:Rate-distortion theory Data compression Information theory

information theory

Information theory is the scientific study of the quantification (science), quantification, computer data storage, storage, and telecommunication, communication of information. The field was originally established by the works of Harry Nyquist a ...

which provides the theoretical foundations for lossy data compression

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size ...

; it addresses the problem of determining the minimal number of bits per symbol, as measured by the rate ''R'', that should be communicated over a channel, so that the source (input signal) can be approximately reconstructed at the receiver (output signal) without exceeding an expected distortion ''D''.

Introduction

Claude Shannon

Claude Elwood Shannon (April 30, 1916 – February 24, 2001) was an American people, American mathematician, electrical engineering, electrical engineer, and cryptography, cryptographer known as a "father of information theory".

As a 21-year-o ...

in his foundational work on information theory.

In rate–distortion theory, the ''rate'' is usually understood as the number of bit

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represente ...

s per data sample to be stored or transmitted. The notion of ''distortion'' is a subject of on-going discussion.Blau, Y. & Michaeli, T"Rethinking Lossy Compression: The Rate-Distortion-Perception Tradeoff"

Proceedings of the International Conference on Machine Learning, 2019. In the most simple case (which is actually used in most cases), the distortion is defined as the expected value of the square of the difference between input and output signal (i.e., the

mean squared error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors—that is, the average squared difference between ...

). However, since we know that most lossy compression

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size ...

techniques operate on data that will be perceived by human consumers (listening to music

Music is generally defined as the art of arranging sound to create some combination of form, harmony, melody, rhythm or otherwise expressive content. Exact definitions of music vary considerably around the world, though it is an aspect ...

, watching pictures and video) the distortion measure should preferably be modeled on human perception

Perception () is the organization, identification, and interpretation of sensory information in order to represent and understand the presented information or environment. All perception involves signals that go through the nervous system ...

and perhaps aesthetics

Aesthetics, or esthetics, is a branch of philosophy that deals with the nature of beauty and taste, as well as the philosophy of art (its own area of philosophy that comes out of aesthetics). It examines aesthetic values, often expressed thr ...

: much like the use of probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and ...

in lossless compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistic ...

, distortion measures can ultimately be identified with loss function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost ...

s as used in Bayesian estimation

Estimation (or estimating) is the process of finding an estimate or approximation, which is a value that is usable for some purpose even if input data may be incomplete, uncertain, or unstable. The value is nonetheless usable because it is der ...

and decision theory

Decision theory (or the theory of choice; not to be confused with choice theory) is a branch of applied probability theory concerned with the theory of making decisions based on assigning probabilities to various factors and assigning numerical ...

. In audio compression, perceptual models (and therefore perceptual distortion measures) are relatively well developed and routinely used in compression techniques such as MP3

MP3 (formally MPEG-1 Audio Layer III or MPEG-2 Audio Layer III) is a coding format for digital audio developed largely by the Fraunhofer Society in Germany, with support from other digital scientists in the United States and elsewhere. Origin ...

or Vorbis

Vorbis is a free and open-source software project headed by the Xiph.Org Foundation. The project produces an audio coding format and software reference encoder/decoder (codec) for lossy audio compression. Vorbis is most commonly used in conjun ...

, but are often not easy to include in rate–distortion theory. In image and video compression, the human perception models are less well developed and inclusion is mostly limited to the JPEG

JPEG ( ) is a commonly used method of lossy compression for digital images, particularly for those images produced by digital photography. The degree of compression can be adjusted, allowing a selectable tradeoff between storage size and imag ...

and MPEG

The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by International Organization for Standardization, ISO and International Electrotechnical Commission, IEC that sets standards for media coding, includ ...

weighting ( quantization, normalization) matrix.

Distortion functions

Distortion functions measure the cost of representing a symbol by an approximated symbol . Typical distortion functions are the Hamming distortion and the Squared-error distortion.Hamming distortion

:Squared-error distortion

:Rate–distortion functions

The functions that relate the rate and distortion are found as the solution of the following minimization problem: : Here , sometimes called a test channel, is the conditionalprobability density function

In probability theory, a probability density function (PDF), or density of a continuous random variable, is a function whose value at any given sample (or point) in the sample space (the set of possible values taken by the random variable) can ...

(PDF) of the communication channel output (compressed signal) for a given input (original signal) , and is the mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the " amount of information" (in units such ...

between and defined as

:

where and are the entropy of the output signal ''Y'' and the conditional entropy

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y given that the value of another random variable X is known. Here, information is measured in shannons, na ...

of the output signal given the input signal, respectively:

:

:

The problem can also be formulated as a distortion–rate function, where we find the infimum

In mathematics, the infimum (abbreviated inf; plural infima) of a subset S of a partially ordered set P is a greatest element in P that is less than or equal to each element of S, if such an element exists. Consequently, the term ''greatest low ...

over achievable distortions for given rate constraint. The relevant expression is:

:

The two formulations lead to functions which are inverses of each other.

The mutual information can be understood as a measure for 'prior' uncertainty the receiver has about the sender's signal (''H''(''Y'')), diminished by the uncertainty that is left after receiving information about the sender's signal (). Of course the decrease in uncertainty is due to the communicated amount of information, which is .

As an example, in case there is ''no'' communication at all, then and . Alternatively, if the communication channel is perfect and the received signal is identical to the signal at the sender, then and .

In the definition of the rate–distortion function, and are the distortion between and for a given and the prescribed maximum distortion, respectively. When we use the mean squared error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors—that is, the average squared difference between ...

as distortion measure, we have (for amplitude-continuous signals):

:

As the above equations show, calculating a rate–distortion function requires the stochastic description of the input in terms of the PDF , and then aims at finding the conditional PDF that minimize rate for a given distortion . These definitions can be formulated measure-theoretically to account for discrete and mixed random variables as well.

An analytical solution to this minimization problem is often difficult to obtain except in some instances for which we next offer two of the best known examples. The rate–distortion function of any source is known to obey several fundamental properties, the most important ones being that it is a continuous

Continuity or continuous may refer to:

Mathematics

* Continuity (mathematics), the opposing concept to discreteness; common examples include

** Continuous probability distribution or random variable in probability and statistics

** Continuous ...

, monotonically decreasing

In mathematics, a monotonic function (or monotone function) is a function between ordered sets that preserves or reverses the given order. This concept first arose in calculus, and was later generalized to the more abstract setting of order ...

convex

Convex or convexity may refer to:

Science and technology

* Convex lens, in optics

Mathematics

* Convex set, containing the whole line segment that joins points

** Convex polygon, a polygon which encloses a convex set of points

** Convex polytope ...

(U) function

Function or functionality may refer to:

Computing

* Function key, a type of key on computer keyboards

* Function model, a structured representation of processes in a system

* Function object or functor or functionoid, a concept of object-oriente ...

and thus the shape for the function in the examples is typical (even measured rate–distortion functions in real life tend to have very similar forms).

Although analytical solutions to this problem are scarce, there are upper and lower bounds to these functions including the famous Shannon lower bound (SLB), which in the case of squared error and memoryless sources, states that for arbitrary sources with finite differential entropy,

:

where ''h''(''D'') is the differential entropy of a Gaussian random variable with variance D. This lower bound is extensible to sources with memory and other distortion measures. One important feature of the SLB is that it is asymptotically tight in the low distortion regime for a wide class of sources and in some occasions, it actually coincides with the rate–distortion function. Shannon Lower Bounds can generally be found if the distortion between any two numbers can be expressed as a function of the difference between the value of these two numbers.

The Blahut–Arimoto algorithm, co-invented by Richard Blahut, is an elegant iterative technique for numerically obtaining rate–distortion functions of arbitrary finite input/output alphabet sources and much work has been done to extend it to more general problem instances.

When working with stationary sources with memory, it is necessary to modify the definition of the rate distortion function and it must be understood in the sense of a limit taken over sequences of increasing lengths.

:

where

:

and

:

where superscripts denote a complete sequence up to that time and the subscript 0 indicates initial state.

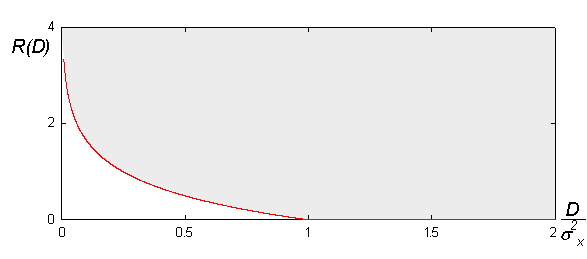

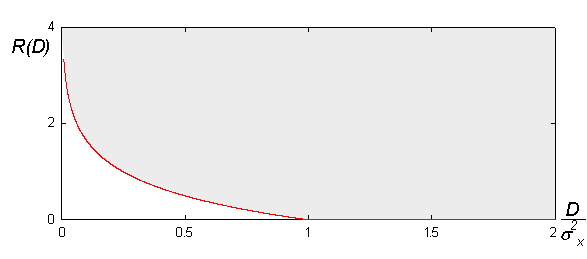

Memoryless (independent) Gaussian source with squared-error distortion

If we assume that is aGaussian

Carl Friedrich Gauss (1777–1855) is the eponym of all of the topics listed below.

There are over 100 topics all named after this German mathematician and scientist, all in the fields of mathematics, physics, and astronomy. The English eponymo ...

random variable with variance

In probability theory and statistics, variance is the expectation of the squared deviation of a random variable from its population mean or sample mean. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers ...

, and if we assume that successive samples of the signal are stochastically independent

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence o ...

(or equivalently, the source is ''memoryless

In probability and statistics, memorylessness is a property of certain probability distributions. It usually refers to the cases when the distribution of a "waiting time" until a certain event does not depend on how much time has elapsed already ...

'', or the signal is ''uncorrelated''), we find the following analytical expression

In mathematics, a closed-form expression is a mathematical expression that uses a finite number of standard operations. It may contain constants, variables, certain well-known operations (e.g., + − × ÷), and functions (e.g., ''n''th roo ...

for the rate–distortion function:

:

The following figure shows what this function looks like:

Rate–distortion theory tell us that 'no compression system exists that performs outside the gray area'. The closer a practical compression system is to the red (lower) bound, the better it performs. As a general rule, this bound can only be attained by increasing the coding block length parameter. Nevertheless, even at unit blocklengths one can often find good (scalar) quantizers that operate at distances from the rate–distortion function that are practically relevant.

This rate–distortion function holds only for Gaussian memoryless sources. It is known that the Gaussian source is the most "difficult" source to encode: for a given mean square error, it requires the greatest number of bits. The performance of a practical compression system working on—say—images, may well be below the lower bound shown.

Rate–distortion theory tell us that 'no compression system exists that performs outside the gray area'. The closer a practical compression system is to the red (lower) bound, the better it performs. As a general rule, this bound can only be attained by increasing the coding block length parameter. Nevertheless, even at unit blocklengths one can often find good (scalar) quantizers that operate at distances from the rate–distortion function that are practically relevant.

This rate–distortion function holds only for Gaussian memoryless sources. It is known that the Gaussian source is the most "difficult" source to encode: for a given mean square error, it requires the greatest number of bits. The performance of a practical compression system working on—say—images, may well be below the lower bound shown.

Memoryless (independent) Bernoulli source with Hamming distortion

The rate-distortion function of aBernoulli random variable

In probability theory and statistics, the Bernoulli distribution, named after Swiss mathematician Jacob Bernoulli,James Victor Uspensky: ''Introduction to Mathematical Probability'', McGraw-Hill, New York 1937, page 45 is the discrete probabili ...

with Hamming distortion is given by:

:

where denotes the binary entropy function

In information theory, the binary entropy function, denoted \operatorname H(p) or \operatorname H_\text(p), is defined as the entropy of a Bernoulli process with probability p of one of two values. It is a special case of \Eta(X), the entropy fun ...

.

Plot of the rate-distortion function for :

Connecting rate-distortion theory to channel capacity

Suppose we want to transmit information about a source to the user with a distortion not exceeding ''D''. Rate–distortion theory tells us that at least bits/symbol of information from the source must reach the user. We also know from Shannon's channel coding theorem that if the source entropy is ''H'' bits/symbol, and the channel capacity is ''C'' (where ), then bits/symbol will be lost when transmitting this information over the given channel. For the user to have any hope of reconstructing with a maximum distortion ''D'', we must impose the requirement that the information lost in transmission does not exceed the maximum tolerable loss of bits/symbol. This means that the channel capacity must be at least as large as .See also

*Decorrelation

Decorrelation is a general term for any process that is used to reduce autocorrelation within a signal, or cross-correlation within a set of signals, while preserving other aspects of the signal. A frequently used method of decorrelation is the u ...

* Rate–distortion optimization Rate-distortion optimization (RDO) is a method of improving video quality in video compression. The name refers to the optimization of the amount of ''distortion'' (loss of video quality) against the amount of data required to encode the video, th ...

* Data compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression ...

* Sphere packing

In geometry, a sphere packing is an arrangement of non-overlapping spheres within a containing space. The spheres considered are usually all of identical size, and the space is usually three-dimensional Euclidean space. However, sphere packing p ...

* White noise

In signal processing, white noise is a random signal having equal intensity at different frequencies, giving it a constant power spectral density. The term is used, with this or similar meanings, in many scientific and technical disciplines, ...

* Blahut–Arimoto algorithm

References

External links

PyRated

Python code for basic calculations in rate-distortion theory.

VcDemo Image and Video Compression Learning Tool

{{DEFAULTSORT:Rate-distortion theory Data compression Information theory