Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

Introduction to Bayes' rule

Formal explanation

Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derived from a statistical model for the observed data. Bayesian inference computes the posterior probability according to Bayes' theorem:

$$P(H \mid E) = \frac{P(E \mid H) \cdot P(H)}{P(E)},$$

where

* $H$ stands for any ''hypothesis'' whose probability may be affected by data (called ''evidence'' below). Often there are competing hypotheses, and the task is to determine which is the most probable.
* $P(H)$, the ''prior probability'', is the estimate of the probability of the hypothesis $H$ ''before'' the data $E$, the current evidence, is observed.
* $E$, the ''evidence'', corresponds to new data that were not used in computing the prior probability.
* $P(H \mid E)$, the ''posterior probability'', is the probability of $H$ ''given'' $E$, i.e., ''after'' $E$ is observed. This is what we want to know: the probability of a hypothesis ''given'' the observed evidence.
* $P(E \mid H)$ is the probability of observing $E$ ''given'' $H$, and is called the ''likelihood''. As a function of $E$ with $H$ fixed, it indicates the compatibility of the evidence with the given hypothesis. The likelihood function is a function of the evidence, $E$, while the posterior probability is a function of the hypothesis, $H$.
* $P(E)$ is sometimes termed the marginal likelihood or "model evidence". This factor is the same for all possible hypotheses being considered (as is evident from the fact that the hypothesis $H$ does not appear anywhere in the symbol, unlike for all the other factors), so this factor does not enter into determining the relative probabilities of different hypotheses.

For different values of $H$, only the factors $P(H)$ and $P(E \mid H)$, both in the numerator, affect the value of $P(H \mid E)$: the posterior probability of a hypothesis is proportional to its prior probability (its inherent likeliness) and the newly acquired likelihood (its compatibility with the new observed evidence).

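Bayes' rule can also be written as follows:

$$P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)} = \frac{P(E \mid H)\, P(H)}{P(E \mid H)\, P(H) + P(E \mid \neg H)\, P(\neg H)} = \frac{1}{1 + \left(\frac{1}{P(H)} - 1\right) \frac{P(E \mid \neg H)}{P(E \mid H)}}$$

because

$$P(E) = P(E \mid H)\, P(H) + P(E \mid \neg H)\, P(\neg H)$$

and

$$P(H) + P(\neg H) = 1,$$

where $\neg H$ is "not $H$", the logical negation of $H$.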

One quick way to remember the equation is to use the rule of multiplication:

$$P(E \cap H) = P(E \mid H)\, P(H) = P(H \mid E)\, P(E).$$
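A minimal Python sketch of the two-hypothesis form above, assuming the prior $P(H)$ and the two likelihoods $P(E \mid H)$ and $P(E \mid \neg H)$ are known numbers. The function names are hypothetical. The first function uses the expanded denominator; the second uses the ratio form. Both should give the same posterior.

```python
def posterior_binary(prior_h: float, lik_e_given_h: float, lik_e_given_not_h: float) -> float:
    """Return P(H | E) for a hypothesis H and its negation, not-H."""
    # P(E) by the law of total probability over H and not-H.
    evidence = lik_e_given_h * prior_h + lik_e_given_not_h * (1.0 - prior_h)
    return lik_e_given_h * prior_h / evidence


def posterior_binary_ratio_form(prior_h: float, lik_e_given_h: float, lik_e_given_not_h: float) -> float:
    """Same quantity via 1 / (1 + (1/P(H) - 1) * P(E | not-H) / P(E | H))."""
    return 1.0 / (1.0 + (1.0 / prior_h - 1.0) * lik_e_given_not_h / lik_e_given_h)


# Hypothetical numbers: P(H) = 0.5, P(E | H) = 0.75, P(E | not-H) = 0.5.
p1 = posterior_binary(0.5, 0.75, 0.5)
p2 = posterior_binary_ratio_form(0.5, 0.75, 0.5)
```

With these numbers both calls give 0.6, up to floating-point rounding.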

Alternatives to Bayesian updating

Bayesian updating is widely used and computationally convenient. However, it is not the only updating rule that might be considered rational.

Ian Hacking noted that traditional "Dutch book" arguments did not specify Bayesian updating: they left open the possibility that non-Bayesian updating rules could avoid Dutch books. Hacking wrote: "And neither the Dutch book argument nor any other in the personalist arsenal of proofs of the probability axioms entails the dynamic assumption. Not one entails Bayesianism. So the personalist requires the dynamic assumption to be Bayesian. It is true that in consistency a personalist could abandon the Bayesian model of learning from experience. Salt could lose its savour."

Indeed, there are non-Bayesian updating rules that also avoid Dutch books (as discussed in the literature on "probability kinematics") following the publication of Richard C. Jeffrey's rule, which applies Bayes' rule to the case where the evidence itself is assigned a probability. The additional hypotheses needed to uniquely require Bayesian updating have been deemed to be substantial, complicated, and unsatisfactory.
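Jeffrey's rule can be sketched as follows. The sketch assumes a finite partition $E_1, \ldots, E_k$ of evidence outcomes whose new probabilities $Q(E_i)$ are given directly rather than observed with certainty. It also assumes the conditional beliefs $P(H \mid E_i)$ stay fixed ("rigidity"). The updated belief is $P_{\text{new}}(H) = \sum_i P(H \mid E_i)\, Q(E_i)$. When one $Q(E_i)$ equals 1, this reduces to ordinary Bayesian conditioning. The function name and dictionary layout are illustrative.

```python
def jeffrey_update(p_h_given_e: dict[str, float], q_e: dict[str, float]) -> float:
    """Jeffrey conditioning: P_new(H) = sum_i P(H | E_i) * Q(E_i).

    p_h_given_e maps each cell E_i of a partition to P(H | E_i), assumed unchanged;
    q_e maps each cell to its new, uncertain probability Q(E_i).
    """
    if abs(sum(q_e.values()) - 1.0) > 1e-9:
        raise ValueError("new probabilities over the partition must sum to 1")
    return sum(p_h_given_e[cell] * q for cell, q in q_e.items())


# Hypothetical partition {rain, dry} with P(H | rain) = 0.8 and P(H | dry) = 0.1.
p = {"rain": 0.8, "dry": 0.1}
certain = jeffrey_update(p, {"rain": 1.0, "dry": 0.0})   # ordinary conditioning on "rain"
uncertain = jeffrey_update(p, {"rain": 0.7, "dry": 0.3})  # evidence only shifts the odds
```

Here `certain` equals $P(H \mid \text{rain}) = 0.8$, while `uncertain` is $0.8 \times 0.7 + 0.1 \times 0.3 = 0.59$.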

Inference over exclusive and exhaustive possibilities

If evidence is simultaneously used to update belief over a set of exclusive and exhaustive propositions, Bayesian inference may be thought of as acting on this belief distribution as a whole.

General formulation

Suppose a process is generating independent and identically distributed events $E_n,\ n = 1, 2, 3, \ldots$, but the probability distribution is unknown. Let the event space $\Omega$ represent the current state of belief for this process. Each model is represented by event $M_m$. The conditional probabilities $P(E_n \mid M_m)$ are specified to define the models. $P(M_m)$ is the degree of belief in $M_m$. Before the first inference step, $\{P(M_m)\}$ is a set of ''initial prior probabilities''. These must sum to 1, but are otherwise arbitrary.

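Suppose that the process is observed to generate $E \in \{E_n\}$. For each $M \in \{M_m\}$, the prior $P(M)$ is updated to the posterior $P(M \mid E)$. From Bayes' theorem:

$$P(M \mid E) = \frac{P(E \mid M)}{\sum_m P(E \mid M_m)\, P(M_m)} \cdot P(M)$$

Upon observation of further evidence, this procedure may be repeated.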
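The update step above, applied to a finite set of models $M_m$, can be sketched in Python. Each posterior weight is the prior times the likelihood of the observed event, divided by the sum of those products over all models. The two coin models and their numbers are hypothetical.

```python
def update(priors: list[float], likelihoods: list[float]) -> list[float]:
    """One Bayesian step: P(M_m | E) = P(E | M_m) P(M_m) / sum_j P(E | M_j) P(M_j)."""
    unnormalized = [lik * prior for lik, prior in zip(likelihoods, priors)]
    evidence = sum(unnormalized)  # P(E): the same denominator for every model
    if evidence == 0.0:
        raise ValueError("observed event has zero probability under every model")
    return [u / evidence for u in unnormalized]


# Hypothetical models: M_0 is a fair coin, M_1 lands heads with probability 0.8.
P_HEADS = [0.5, 0.8]

beliefs = [0.5, 0.5]  # initial priors: they must sum to 1
for outcome in ["H", "H", "T", "H"]:
    lik = [p if outcome == "H" else 1.0 - p for p in P_HEADS]
    beliefs = update(beliefs, lik)  # the posterior becomes the next prior
```

After the four observations, the belief in $M_1$ is about 0.62.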

Multiple observations

For a sequence of independent and identically distributed observations $\mathbf{E} = (e_1, \dots, e_n)$, it can be shown by induction that repeated application of the above is equivalent to

$$P(M \mid \mathbf{E}) = \frac{P(\mathbf{E} \mid M)}{\sum_m P(\mathbf{E} \mid M_m)\, P(M_m)} \cdot P(M),$$

where

$$P(\mathbf{E} \mid M) = \prod_k P(e_k \mid M).$$
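The induction claim can be checked numerically. Updating once with $P(\mathbf{E} \mid M) = \prod_k P(e_k \mid M)$ gives the same posterior as updating one observation at a time. This is a self-contained sketch with hypothetical likelihood values; `obs[k][m]` stands for $P(e_k \mid M_m)$.

```python
import math


def normalize(weights: list[float]) -> list[float]:
    total = sum(weights)
    return [w / total for w in weights]


def sequential_posterior(prior: list[float], per_obs_likelihoods: list[list[float]]) -> list[float]:
    """Apply Bayes' theorem once per observation, reusing each posterior as the next prior."""
    post = list(prior)
    for lik in per_obs_likelihoods:  # lik[m] = P(e_k | M_m)
        post = normalize([l * p for l, p in zip(lik, post)])
    return post


def batch_posterior(prior: list[float], per_obs_likelihoods: list[list[float]]) -> list[float]:
    """Single step with P(E | M_m) = prod_k P(e_k | M_m)."""
    joint = [math.prod(lik[m] for lik in per_obs_likelihoods) for m in range(len(prior))]
    return normalize([j * p for j, p in zip(joint, prior)])


prior = [0.2, 0.3, 0.5]
obs = [[0.1, 0.4, 0.7], [0.9, 0.6, 0.3], [0.5, 0.5, 0.2]]  # hypothetical P(e_k | M_m)
```

The two functions agree because the normalizing constants cancel at every step.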

Parametric formulation: motivating the formal description

By parameterizing the space of models, the belief in all models may be updated in a single step. The distribution of belief over the model space may then be thought of as a distribution of belief over the parameter space. The distributions in this section are expressed as continuous, represented by probability densities, as this is the usual situation. The technique is however equally applicable to discrete distributions.

Let the vector $\boldsymbol\theta$ span the parameter space. Let the initial prior distribution over $\boldsymbol\theta$ be $p(\boldsymbol\theta \mid \boldsymbol\alpha)$, where $\boldsymbol\alpha$ is a set of parameters to the prior itself, or ''hyperparameters''. Let $\mathbf{E} = (e_1, \dots, e_n)$ be a sequence of independent and identically distributed event observations, where all $e_i$ are distributed as $p(e \mid \boldsymbol\theta)$ for some $\boldsymbol\theta$.

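Bayes' theorem is applied to find the posterior distribution over $\boldsymbol\theta$:

$$p(\boldsymbol\theta \mid \mathbf{E}, \boldsymbol\alpha) = \frac{p(\mathbf{E} \mid \boldsymbol\theta, \boldsymbol\alpha)}{p(\mathbf{E} \mid \boldsymbol\alpha)} \cdot p(\boldsymbol\theta \mid \boldsymbol\alpha) = \frac{p(\mathbf{E} \mid \boldsymbol\theta, \boldsymbol\alpha)}{\int p(\mathbf{E} \mid \boldsymbol\theta, \boldsymbol\alpha)\, p(\boldsymbol\theta \mid \boldsymbol\alpha)\, d\boldsymbol\theta} \cdot p(\boldsymbol\theta \mid \boldsymbol\alpha),$$

where

$$p(\mathbf{E} \mid \boldsymbol\theta, \boldsymbol\alpha) = \prod_k p(e_k \mid \boldsymbol\theta).$$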
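When $\boldsymbol\theta$ is one-dimensional, the integral in the denominator can be approximated on a grid. The sketch below is illustrative, not taken from the text above. It assumes a Bernoulli model $p(e \mid \theta) = \theta^{e}(1-\theta)^{1-e}$ and a uniform prior on $[0, 1]$, and it uses the midpoint rule for the integral.

```python
def grid_posterior(data: list[int], n_grid: int = 2000) -> tuple[list[float], list[float]]:
    """Approximate p(theta | E) on a grid of midpoints in (0, 1).

    Assumes a Bernoulli likelihood and a uniform prior p(theta | alpha) = 1.
    Returns (grid, density), with the density normalized to integrate to 1.
    """
    width = 1.0 / n_grid
    grid = [(i + 0.5) * width for i in range(n_grid)]
    prior = [1.0] * n_grid
    k, n = sum(data), len(data)
    # p(E | theta) = prod_k p(e_k | theta) = theta^k (1 - theta)^(n - k)
    likelihood = [t ** k * (1.0 - t) ** (n - k) for t in grid]
    unnormalized = [l * p for l, p in zip(likelihood, prior)]
    evidence = sum(unnormalized) * width  # midpoint-rule estimate of the integral
    density = [u / evidence for u in unnormalized]
    return grid, density


grid, density = grid_posterior([1, 1, 0, 1, 0, 1, 1, 1, 0, 1])  # 7 successes in 10 trials
```

For long data sequences, the raw powers can underflow. A practical version would work with log-likelihoods instead.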

Formal description of Bayesian inference

Definitions

* $x$, a data point in general. This may in fact be a vector of values.
* $\theta$, the parameter of the data point's distribution, i.e., $x \sim p(x \mid \theta)$. This may be a vector of parameters.
* $\alpha$, the hyperparameter of the parameter distribution, i.e., $\theta \sim p(\theta \mid \alpha)$. This may be a vector of hyperparameters.
* $\mathbf{X}$ is the sample, a set of $n$ observed data points, i.e., $x_1, \ldots, x_n$.
* $\tilde{x}$, a new data point whose distribution is to be predicted.

Bayesian inference

* The prior distribution is the distribution of the parameter(s) before any data is observed, i.e. $p(\theta \mid \alpha)$. The prior distribution might not be easily determined; in such a case, one possibility may be to use the Jeffreys prior to obtain a prior distribution before updating it with newer observations.
* The sampling distribution is the distribution of the observed data conditional on its parameters, i.e. $p(\mathbf{X} \mid \theta)$. This is also termed the likelihood, especially when viewed as a function of the parameter(s), sometimes written $\operatorname{L}(\theta \mid \mathbf{X}) = p(\mathbf{X} \mid \theta)$.
* The marginal likelihood (sometimes also termed the ''evidence'') is the distribution of the observed data marginalized over the parameter(s), i.e.

$$p(\mathbf{X} \mid \alpha) = \int p(\mathbf{X} \mid \theta)\, p(\theta \mid \alpha)\, d\theta.$$

It quantifies the agreement between data and expert opinion, in a geometric sense that can be made precise.
* The posterior distribution is the distribution of the parameter(s) after taking into account the observed data. This is determined by Bayes' rule, which forms the heart of Bayesian inference:

$$p(\theta \mid \mathbf{X}, \alpha) = \frac{p(\theta, \mathbf{X}, \alpha)}{p(\mathbf{X}, \alpha)} = \frac{p(\mathbf{X} \mid \theta, \alpha)\, p(\theta, \alpha)}{p(\mathbf{X} \mid \alpha)\, p(\alpha)} = \frac{p(\mathbf{X} \mid \theta, \alpha)\, p(\theta \mid \alpha)}{p(\mathbf{X} \mid \alpha)} \propto p(\mathbf{X} \mid \theta, \alpha)\, p(\theta \mid \alpha)$$

This is expressed in words as "posterior is proportional to likelihood times prior", or sometimes as "posterior = likelihood times prior, over evidence".
* In practice, for almost all complex Bayesian models used in machine learning, the posterior distribution $p(\theta \mid \mathbf{X}, \alpha)$ is not obtained in closed form. This is mainly because the parameter space for $\theta$ can be very high-dimensional, or because the Bayesian model retains a hierarchical structure formulated from the observations $\mathbf{X}$ and the parameter $\theta$. In such situations, approximation techniques are needed.
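The text above does not name a specific approximation technique. One widely used choice is a random-walk Metropolis sampler. It draws samples from a posterior known only up to the constant $p(\mathbf{X} \mid \alpha)$, because the acceptance step uses only ratios of likelihood times prior. The sketch below assumes a Bernoulli model with a uniform prior. The step size, burn-in, sample count, and seed are illustrative choices, not tuned recommendations.

```python
import math
import random


def log_unnormalized_posterior(theta: float, k: int, n: int) -> float:
    """log p(X | theta) + log p(theta): assumed Bernoulli likelihood, uniform prior on (0, 1)."""
    if not 0.0 < theta < 1.0:
        return -math.inf  # zero prior density outside the support
    return k * math.log(theta) + (n - k) * math.log(1.0 - theta)


def metropolis(k: int, n: int, n_samples: int = 20000, step: float = 0.1,
               burn_in: int = 1000, seed: int = 0) -> list[float]:
    """Random-walk Metropolis draws from p(theta | X), for k successes in n trials."""
    rng = random.Random(seed)
    theta = 0.5
    current = log_unnormalized_posterior(theta, k, n)
    samples = []
    for i in range(n_samples + burn_in):
        proposal = theta + rng.gauss(0.0, step)  # symmetric Gaussian proposal
        candidate = log_unnormalized_posterior(proposal, k, n)
        # Accept with probability min(1, posterior ratio); the evidence cancels in the ratio.
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        if i >= burn_in:  # discard the initial transient
            samples.append(theta)
    return samples


samples = metropolis(k=7, n=10)
```

For this model, the exact posterior is Beta(8, 4) with mean $8/12$, so the sample mean should land close to that value.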

Bayesian prediction

* The posterior predictive distribution is the distribution of a new data point, marginalized over the posterior:

$$p(\tilde{x} \mid \mathbf{X}, \alpha) = \int p(\tilde{x} \mid \theta)\, p(\theta \mid \mathbf{X}, \alpha)\, d\theta$$

* The prior predictive distribution is the distribution of a new data point, marginalized over the prior:

$$p(\tilde{x} \mid \alpha) = \int p(\tilde{x} \mid \theta)\, p(\theta \mid \alpha)\, d\theta$$

Bayesian theory calls for the use of the posterior predictive distribution to do predictive inference, i.e., to predict the distribution of a new, unobserved data point. That is, instead of a fixed point as a prediction, a distribution over possible points is returned. Only in this way is the entire posterior distribution of the parameter(s) used. By comparison, prediction in frequentist statistics often involves finding an optimum point estimate of the parameter(s), e.g., by maximum likelihood or maximum a posteriori estimation (MAP), and then plugging this estimate into the formula for the distribution of a data point. This has the disadvantage that it does not account for any uncertainty in the value of the parameter, and hence will underestimate the variance of the predictive distribution.
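The variance claim can be illustrated with a Beta-Bernoulli model. This model is an assumption chosen because both predictive distributions have closed forms. Given a Beta$(a, b)$ posterior, the number of successes in $m$ future trials follows a beta-binomial posterior predictive distribution. Plugging the posterior mean in as a point estimate gives a binomial instead. The names and numbers are illustrative.

```python
import math


def log_beta(x: float, y: float) -> float:
    """log of the Beta function B(x, y)."""
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)


def beta_binomial_pmf(j: int, m: int, a: float, b: float) -> float:
    """Posterior predictive P(j successes in m trials) under a Beta(a, b) posterior."""
    return math.comb(m, j) * math.exp(log_beta(j + a, m - j + b) - log_beta(a, b))


def binomial_pmf(j: int, m: int, p: float) -> float:
    """Plug-in predictive: the parameter is fixed at a point estimate p."""
    return math.comb(m, j) * p ** j * (1.0 - p) ** (m - j)


def variance(pmf: list[float]) -> float:
    mean = sum(j * q for j, q in enumerate(pmf))
    return sum((j - mean) ** 2 * q for j, q in enumerate(pmf))


# Assumed setup: uniform Beta(1, 1) prior after 7 successes in 10 trials gives Beta(8, 4).
a, b, m = 8.0, 4.0, 20
p_hat = a / (a + b)  # posterior mean, used as the plug-in estimate
predictive = [beta_binomial_pmf(j, m, a, b) for j in range(m + 1)]
plug_in = [binomial_pmf(j, m, p_hat) for j in range(m + 1)]
```

Here the posterior predictive variance is $20480/1872 \approx 10.9$, while the plug-in binomial gives $40/9 \approx 4.4$. The gap is the parameter uncertainty that the plug-in approach ignores.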

In some instances, frequentist statistics can work around this problem. For example, confidence intervals and prediction intervals in frequentist statistics, when constructed from a normal distribution with unknown mean and variance, are constructed using a Student's t-distribution. This correctly estimates the variance, due to the facts that (1) the average of normally distributed random variables is also normally distributed, and (2) the predictive distribution of a normally distributed data point with unknown mean and variance, using conjugate or uninformative priors, has a Student's t-distribution. In Bayesian statistics, however, the posterior predictive distribution can always be determined exactly, or at least to an arbitrary level of precision when numerical methods are used.

Both types of predictive distributions have the form of a compound probability distribution (as does the marginal likelihood). In fact, if the prior distribution is a conjugate prior, such that the prior and posterior distributions come from the same family, it can be seen that both prior and posterior predictive distributions also come from the same family of compound distributions. The only difference is that the posterior predictive distribution uses the updated values of the hyperparameters (applying the Bayesian update rules given in the conjugate prior article), while the prior predictive distribution uses the values of the hyperparameters that appear in the prior distribution.

Mathematical properties

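Interpretation of factor $\frac{P(E \mid M)}{P(E)}$

Since $P(M \mid E) = \frac{P(E \mid M)}{P(E)}\, P(M)$, an increase in belief means $\frac{P(E \mid M)}{P(E)} > 1 \Rightarrow P(E \mid M) > P(E)$. That is, if the model were true, the evidence would be more likely than is predicted by the current state of belief. The reverse applies for a decrease in belief. If the belief does not change, $\frac{P(E \mid M)}{P(E)} = 1 \Rightarrow P(E \mid M) = P(E)$. That is, the evidence is independent of the model. If the model were true, the evidence would be exactly as likely as predicted by the current state of belief.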

Cromwell's rule

If $P(M) = 0$, then $P(M \mid E) = 0$. If $P(M) = 1$ and $P(E) > 0$, then $P(M \mid E) = 1$. This can be interpreted to mean that hard convictions are insensitive to counter-evidence.

The former follows directly from Bayes' theorem. The latter can be derived by applying the first rule to the event "not $M$" in place of "$M$", yielding "if $1 - P(M) = 0$, then $1 - P(M \mid E) = 0$", from which the result immediately follows.
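A small numerical illustration of Cromwell's rule, using hypothetical likelihoods. A model assigned prior probability 0 keeps posterior probability 0, however strongly each observation favours it. Its unnormalized posterior weight always contains a zero factor.

```python
def update(priors: list[float], likelihoods: list[float]) -> list[float]:
    """One Bayesian step over a finite set of models."""
    weights = [p * l for p, l in zip(priors, likelihoods)]
    total = sum(weights)
    return [w / total for w in weights]


beliefs = [0.0, 1.0]  # M_0 is ruled out a priori; M_1 is held with certainty
for _ in range(100):
    # Hypothetical evidence: each observation is 1000 times likelier under M_0 than under M_1.
    beliefs = update(beliefs, [0.999, 0.000999])
```

After 100 strongly contrary observations, `beliefs` is still `[0.0, 1.0]`.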

Asymptotic behaviour of posterior

Consider the behaviour of a belief distribution as it is updated a large number of times with independent and identically distributed trials. For sufficiently nice prior probabilities, the Bernstein-von Mises theorem gives that in the limit of infinite trials, the posterior converges to a Gaussian distribution independent of the initial prior. This holds under conditions first outlined and rigorously proven by Joseph L. Doob in 1948, namely if the random variable in consideration has a finite probability space. More general results were obtained later by the statistician David A. Freedman. In two seminal research papers, published in 1963 and 1965, he established when and under what circumstances the asymptotic behaviour of the posterior is guaranteed. His 1963 paper treats, like Doob (1949), the finite case and comes to a satisfactory conclusion. However, suppose the random variable has an infinite but countable probability space (i.e., corresponding to a die with infinitely many faces). For this case, the 1965 paper demonstrates that for a dense subset of priors the Bernstein-von Mises theorem is not applicable, and there is almost surely no asymptotic convergence. Later, in the 1980s and 1990s, Freedman and Persi Diaconis continued to work on the case of infinite countable probability spaces. To summarise, there may be insufficient trials to suppress the effects of the initial choice, and especially for large (but finite) systems the convergence might be very slow.
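In a well-behaved finite case, the fading influence of the prior can be seen with a Beta-Bernoulli model (an illustrative assumption). Two quite different Beta priors are updated with the same deterministic data, in which 30% of trials succeed. Their posterior means move together as the number of trials grows, and the posterior standard deviation shrinks roughly like $1/\sqrt{n}$. This shows only the benign case, not the counterexamples discussed above.

```python
import math


def beta_posterior_summary(a: float, b: float, k: int, n: int) -> tuple[float, float]:
    """Mean and standard deviation of the Beta(a + k, b + n - k) posterior."""
    a_post, b_post = a + k, b + n - k
    total = a_post + b_post
    mean = a_post / total
    sd = math.sqrt(a_post * b_post / (total ** 2 * (total + 1.0)))
    return mean, sd


for n in (10, 100, 1_000, 100_000):
    k = round(0.3 * n)  # assumed data: 30% successes
    flat = beta_posterior_summary(1.0, 1.0, k, n)     # uniform prior
    skewed = beta_posterior_summary(50.0, 5.0, k, n)  # prior mean about 0.91
    print(n, round(abs(flat[0] - skewed[0]), 4), round(flat[1], 4))
```

The gap between the two posterior means falls from about 0.48 at $n = 10$ to about 0.0003 at $n = 100\,000$.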

Conjugate priors

In parameterized form, the prior distribution is often assumed to come from a family of distributions called conjugate priors. The usefulness of a conjugate prior is that the corresponding posterior distribution will be in the same family, and the calculation may be expressed in closed form.
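The Beta-Bernoulli pair is one standard conjugate family, chosen here for illustration. Its closed-form update simply adds the counts of successes and failures to the hyperparameters. The sketch checks that closed form against a brute-force grid normalization of likelihood times prior, which does not use conjugacy. The function names are hypothetical.

```python
def beta_bernoulli_update(a: float, b: float, data: list[int]) -> tuple[float, float]:
    """Conjugate update: Beta(a, b) prior + Bernoulli data -> Beta(a + k, b + n - k)."""
    k = sum(data)
    return a + k, b + len(data) - k


def grid_posterior_mean(a: float, b: float, data: list[int], n_grid: int = 20000) -> float:
    """Posterior mean by normalizing likelihood * prior on a midpoint grid (no conjugacy used)."""
    k, n = sum(data), len(data)
    width = 1.0 / n_grid
    num = den = 0.0
    for i in range(n_grid):
        t = (i + 0.5) * width
        # Unnormalized Beta(a, b) prior density times the Bernoulli likelihood.
        w = t ** (a - 1) * (1 - t) ** (b - 1) * t ** k * (1 - t) ** (n - k)
        num += t * w
        den += w
    return num / den


data = [1, 0, 1, 1, 0, 1]  # hypothetical observations
a_post, b_post = beta_bernoulli_update(2.0, 3.0, data)
```

The closed form gives Beta(6, 5) with mean $6/11$. The grid computation should agree to several decimal places.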

Estimates of parameters and predictions

It is often desired to use a posterior distribution to estimate a parameter or variable. Several methods of Bayesian estimation select measurements of central tendency from the posterior distribution.

For one-dimensional problems, a unique median exists for practical continuous problems. The posterior median is attractive as a robust estimator.

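If there exists a finite mean for the posterior distribution, then the posterior mean is a method of estimation:

$$\tilde{\theta} = \operatorname{E}[\theta] = \int \theta\, p(\theta \mid \mathbf{X}, \alpha)\, d\theta$$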

Taking a value with the greatest probability defines maximum ''a posteriori'' (MAP) estimates:

$$\{\theta_{\text{MAP}}\} \subset \arg\max_\theta p(\theta \mid \mathbf{X}, \alpha).$$

There are examples where no maximum is attained, in which case the set of MAP estimates is empty.
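The three point estimates above can be read off a gridded posterior. The sketch assumes a Beta(8, 4) posterior, for example a uniform prior after 7 successes in 10 Bernoulli trials. Its mean is $8/12 \approx 0.667$ and its mode is $7/10 = 0.7$, and the grid values should approximate both. The function name and grid size are illustrative.

```python
def point_estimates(grid: list[float], density: list[float], width: float) -> dict[str, float]:
    """Posterior mean, median and MAP from a density tabulated on an evenly spaced grid."""
    mass = [d * width for d in density]
    total = sum(mass)
    mass = [m / total for m in mass]  # renormalize (the density may be unnormalized)
    mean = sum(t * m for t, m in zip(grid, mass))
    cumulative = 0.0
    median = grid[-1]
    for t, m in zip(grid, mass):
        cumulative += m
        if cumulative >= 0.5:  # first grid point where the CDF reaches 1/2
            median = t
            break
    map_estimate = grid[max(range(len(grid)), key=density.__getitem__)]
    return {"mean": mean, "median": median, "map": map_estimate}


n_grid = 10000
width = 1.0 / n_grid
grid = [(i + 0.5) * width for i in range(n_grid)]
density = [t ** 7 * (1 - t) ** 3 for t in grid]  # assumed unnormalized Beta(8, 4) posterior
estimates = point_estimates(grid, density, width)
```

This posterior is skewed to the left, so the mean, median, and MAP come out in increasing order. Under squared-error loss the posterior mean is the optimal estimate, and under absolute-error loss the posterior median is.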

There are other methods of estimation that minimize the posterior ''risk'' (expected-posterior loss) with respect to a loss function, and these are of interest to statistical decision theory using the sampling distribution ("frequentist statistics").

The posterior predictive distribution of a new observation $\tilde{x}$ (that is independent of previous observations) is determined by

$$p(\tilde{x} \mid \mathbf{X}, \alpha) = \int p(\tilde{x}, \theta \mid \mathbf{X}, \alpha)\, d\theta = \int p(\tilde{x} \mid \theta)\, p(\theta \mid \mathbf{X}, \alpha)\, d\theta.$$

Examples

Probability of a hypothesis

Suppose there are two full bowls of cookies. Bowl #1 has 10 chocolate chip and 30 plain cookies, while bowl #2 has 20 of each. Our friend Fred picks a bowl at random, and then picks a cookie at random. We may assume there is no reason to believe Fred treats one bowl differently from another, likewise for the cookies. The cookie turns out to be a plain one. How probable is it that Fred picked it out of bowl #1?

Intuitively, it seems clear that the answer should be more than a half, since there are more plain cookies in bowl #1. The precise answer is given by Bayes' theorem. Let $H_1$ correspond to bowl #1, and $H_2$ to bowl #2.

It is given that the bowls are identical from Fred's point of view, thus $P(H_1) = P(H_2)$, and the two must add up to 1, so both are equal to 0.5.

The event $E$ is the observation of a plain cookie. From the contents of the bowls, we know that $P(E \mid H_1) = 30/40 = 0.75$ and $P(E \mid H_2) = 20/40 = 0.5$.

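Bayes' formula then yields

$$P(H_1 \mid E) = \frac{P(E \mid H_1)\, P(H_1)}{P(E \mid H_1)\, P(H_1) + P(E \mid H_2)\, P(H_2)} = \frac{0.75 \times 0.5}{0.75 \times 0.5 + 0.5 \times 0.5} = 0.6.$$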

Before we observed the cookie, the probability we assigned for Fred having chosen bowl #1 was the prior probability, $P(H_1)$, which was 0.5. After observing the cookie, we must revise the probability to $P(H_1 \mid E)$, which is 0.6.
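The same arithmetic can be done with exact fractions as a check on the 0.6 figure. The dictionary keys are illustrative names for $H_1$ and $H_2$.

```python
from fractions import Fraction

# H1 = bowl #1, H2 = bowl #2; equal priors because Fred picks a bowl at random.
prior = {"bowl1": Fraction(1, 2), "bowl2": Fraction(1, 2)}
# Likelihood of a plain cookie from each bowl: plain cookies / total cookies.
p_plain = {"bowl1": Fraction(30, 40), "bowl2": Fraction(20, 40)}

evidence = sum(p_plain[h] * prior[h] for h in prior)  # P(E), by total probability
posterior = {h: p_plain[h] * prior[h] / evidence for h in prior}
```

This gives $P(E) = 5/8$, $P(H_1 \mid E) = 3/5$, and $P(H_2 \mid E) = 2/5$.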

Making a prediction

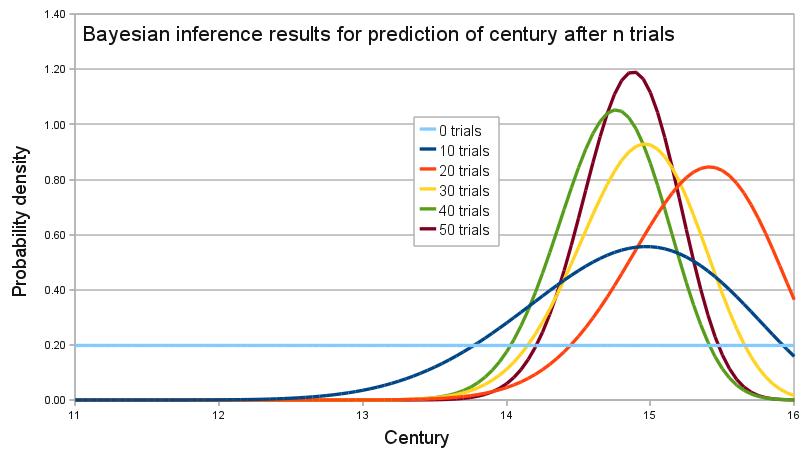

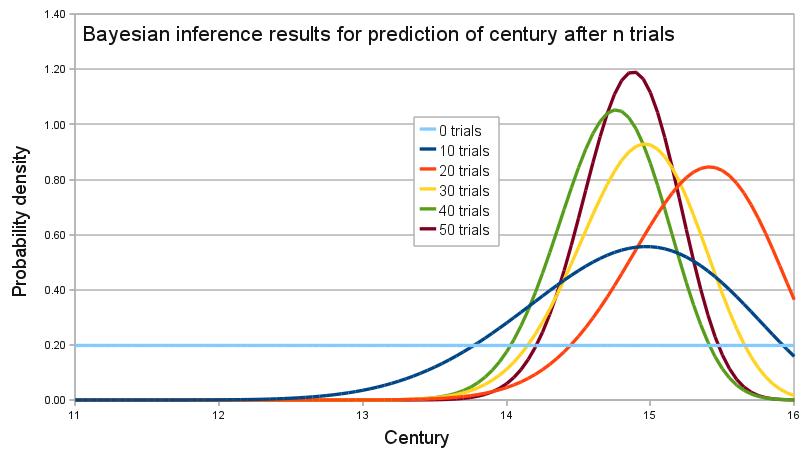

An archaeologist is working at a site thought to be from the medieval period, between the 11th and the 16th century. However, it is uncertain exactly when in this period the site was inhabited. Fragments of pottery are found, some of which are glazed and some of which are decorated. If the site were inhabited during the early medieval period, then 1% of the pottery would be expected to be glazed and 50% of its area decorated. If it had been inhabited in the late medieval period, then 81% would be expected to be glazed and 5% of its area decorated. How confident can the archaeologist be in the date of inhabitation as fragments are unearthed?

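The degree of belief in the continuous variable $C$ (century) is to be calculated, with the discrete set of events $\{GD, G\bar{D}, \bar{G}D, \bar{G}\bar{D}\}$ as evidence. Assuming linear variation of glaze and decoration with time, and that these variables are independent,

$$P(E=GD \mid C=c) = \left(0.01 + \frac{0.81-0.01}{16-11}(c-11)\right)\left(0.5 - \frac{0.5-0.05}{16-11}(c-11)\right)$$

$$P(E=G\bar{D} \mid C=c) = \left(0.01 + \frac{0.81-0.01}{16-11}(c-11)\right)\left((1-0.5) + \frac{0.5-0.05}{16-11}(c-11)\right)$$

$$P(E=\bar{G}D \mid C=c) = \left((1-0.01) - \frac{0.81-0.01}{16-11}(c-11)\right)\left(0.5 - \frac{0.5-0.05}{16-11}(c-11)\right)$$

$$P(E=\bar{G}\bar{D} \mid C=c) = \left((1-0.01) - \frac{0.81-0.01}{16-11}(c-11)\right)\left((1-0.5) + \frac{0.5-0.05}{16-11}(c-11)\right)$$

Assume a uniform prior of $f_C(c) = 0.2$, and that trials are independent and identically distributed. When a new fragment of type $e$ is discovered, Bayes' theorem is applied to update the degree of belief for each $c$:

$$f_C(c \mid E=e) = \frac{P(E=e \mid C=c)}{P(E=e)}\, f_C(c) = \frac{P(E=e \mid C=c)}{\int_{11}^{16} P(E=e \mid C=c)\, f_C(c)\, dc}\, f_C(c)$$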

A computer simulation of the changing belief as 50 fragments are unearthed is shown on the graph. In the simulation, the site was inhabited around 1420, or $c = 15.2$. By calculating the area under the relevant portion of the graph for 50 trials, the archaeologist can say that there is practically no chance the site was inhabited in the 11th and 12th centuries, about 1% chance that it was inhabited during the 13th century, 63% chance during the 14th century and 36% during the 15th century. Here the Bernstein–von Mises theorem asserts asymptotic convergence to the "true" distribution, because the probability space corresponding to the discrete set of events $\{GD, G\bar{D}, \bar{G}D, \bar{G}\bar{D}\}$ is finite (see above section on asymptotic behaviour of the posterior).
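The updating scheme can be sketched as follows, under stated assumptions: the continuous variable $c$ is approximated by 500 grid cells on [11, 16], so the integral in the denominator becomes a sum, and fragments are drawn at random for a site dated $c = 15.2$. Because the draws are random, the printed century probabilities will not reproduce the 1%, 63% and 36% quoted above except by coincidence. All names and the grid size are illustrative choices.

```python
import random

# Linear trends from the example: c = 11 is the early end, c = 16 the late end.
C_MIN, C_MAX = 11.0, 16.0
GLAZE_EARLY, GLAZE_LATE = 0.01, 0.81  # fraction of pottery glazed
DECOR_EARLY, DECOR_LATE = 0.50, 0.05  # fraction of area decorated

def p_glazed(c):
    return GLAZE_EARLY + (GLAZE_LATE - GLAZE_EARLY) * (c - C_MIN) / (C_MAX - C_MIN)

def p_decorated(c):
    return DECOR_EARLY - (DECOR_EARLY - DECOR_LATE) * (c - C_MIN) / (C_MAX - C_MIN)

def likelihood(e, c):
    """P(E = e | C = c) for e = (glazed, decorated), glaze and decoration independent."""
    glazed, decorated = e
    pg, pd = p_glazed(c), p_decorated(c)
    return (pg if glazed else 1.0 - pg) * (pd if decorated else 1.0 - pd)

def make_grid(n=500):
    """Cell midpoints covering [C_MIN, C_MAX]; no point lies on a century boundary."""
    step = (C_MAX - C_MIN) / n
    return [C_MIN + (i + 0.5) * step for i in range(n)]

def update(grid, weights, e):
    """One application of Bayes' theorem on the grid; the sum replaces the integral."""
    unnorm = [w * likelihood(e, c) for c, w in zip(grid, weights)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def century_mass(grid, weights, lo, hi):
    """Posterior probability that lo <= C < hi."""
    return sum(w for c, w in zip(grid, weights) if lo <= c < hi)

def simulate(true_c=15.2, n_fragments=50, seed=0):
    rng = random.Random(seed)
    grid = make_grid()
    weights = [1.0 / len(grid)] * len(grid)  # uniform prior
    for _ in range(n_fragments):
        # Draw one fragment from the assumed "true" date.
        e = (rng.random() < p_glazed(true_c), rng.random() < p_decorated(true_c))
        weights = update(grid, weights, e)
    return grid, weights

grid, weights = simulate()
for lo in range(11, 16):
    print(f"century {lo}: {century_mass(grid, weights, lo, lo + 1):.2f}")
```

Using grid weights rather than a density keeps each update a single normalized product, at the cost of resolution limited by the cell width.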

In frequentist statistics and decision theory

A decision-theoretic justification of the use of Bayesian inference was given by Abraham Wald, who proved that every unique Bayesian procedure is admissible. Conversely, every admissible statistical procedure is either a Bayesian procedure or a limit of Bayesian procedures. [Bickel & Doksum (2001, p. 32)]

Wald characterized admissible procedures as Bayesian procedures (and limits of Bayesian procedures), making the Bayesian formalism a central technique in such areas of frequentist inference as parameter estimation, hypothesis testing, and computing confidence intervals. For example:

* "Under some conditions, all admissible procedures are either Bayes procedures or limits of Bayes procedures (in various senses). These remarkable results, at least in their original form, are due essentially to Wald. They are useful because the property of being Bayes is easier to analyze than admissibility."

* "In decision theory, a quite general method for proving admissibility consists in exhibiting a procedure as a unique Bayes solution."

*"In the first chapters of this work, prior distributions with finite support and the corresponding Bayes procedures were used to establish some of the main theorems relating to the comparison of experiments. Bayes procedures with respect to more general prior distributions have played a very important role in the development of statistics, including its asymptotic theory." "There are many problems where a glance at posterior distributions, for suitable priors, yields immediately interesting information. Also, this technique can hardly be avoided in sequential analysis."

*"A useful fact is that any Bayes decision rule obtained by taking a proper prior over the whole parameter space must be admissible"

*"An important area of investigation in the development of admissibility ideas has been that of conventional sampling-theory procedures, and many interesting results have been obtained."
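To make admissibility concrete, consider an assumed example not taken from the text: estimating a binomial success probability theta from n = 10 trials under squared-error loss. With a proper uniform prior, the Bayes rule is the posterior mean (k + 1)/(n + 2), so by the result quoted above it is admissible. The sketch computes its frequentist risk exactly and compares it with the sample proportion k/n; each has the lower risk for some values of theta, so neither dominates the other.

```python
from math import comb

def risk(estimator, theta, n):
    """Frequentist risk E_theta[(estimator(X, n) - theta)^2] for X ~ Binomial(n, theta)."""
    return sum(
        comb(n, k) * theta**k * (1 - theta) ** (n - k) * (estimator(k, n) - theta) ** 2
        for k in range(n + 1)
    )

def sample_proportion(k, n):
    return k / n

def bayes_uniform(k, n):
    """Bayes rule under squared-error loss with a uniform Beta(1, 1) prior: the posterior mean."""
    return (k + 1) / (n + 2)

n = 10
for theta in (0.0, 0.1, 0.5):
    print(theta, round(risk(sample_proportion, theta, n), 4), round(risk(bayes_uniform, theta, n), 4))
```

At theta = 0 the sample proportion has zero risk while the Bayes rule does not; at theta = 0.5 the order is reversed.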

Model selection

Bayesian methodology also plays a role in model selection, where the aim is to select, from a set of competing models, the one that most closely represents the underlying process that generated the observed data. In Bayesian model comparison, the model with the highest posterior probability given the data is selected. The posterior probability of a model depends on the evidence, or marginal likelihood, which reflects the probability that the data is generated by the model, and on the prior belief in the model. When two competing models are a priori considered to be equiprobable, the ratio of their posterior probabilities corresponds to the Bayes factor. Since Bayesian model comparison is aimed at selecting the model with the highest posterior probability, this methodology is also referred to as the maximum a posteriori (MAP) selection rule or the MAP probability rule.
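A small illustration, with both models assumed for the example: M1 says a coin is fair, M2 says its bias p is uniform on (0, 1). The marginal likelihood of k heads in n tosses is C(n, k)/2^n under M1 and, after integrating over p, 1/(n + 1) under M2. With equal prior probabilities the posterior odds equal the Bayes factor, and the MAP rule picks whichever model has the higher posterior probability.

```python
from math import comb

def evidence_fair(k, n):
    """Marginal likelihood of k heads in n tosses under M1 (p = 0.5)."""
    return comb(n, k) * 0.5**n

def evidence_uniform(k, n):
    """Marginal likelihood under M2 (p ~ Uniform(0, 1)): C(n,k) * B(k+1, n-k+1) = 1/(n+1)."""
    return 1.0 / (n + 1)

def map_select(k, n, prior_fair=0.5):
    """Return the MAP model and the Bayes factor of M1 against M2."""
    bayes_factor = evidence_fair(k, n) / evidence_uniform(k, n)
    posterior_odds = bayes_factor * prior_fair / (1.0 - prior_fair)
    choice = "M1 (fair)" if posterior_odds > 1.0 else "M2 (uniform p)"
    return choice, bayes_factor

print(map_select(5, 10))
print(map_select(10, 10))
```

For 5 heads in 10 tosses the Bayes factor is about 2.71 in favour of M1; for 10 heads in 10 it falls to about 0.011, and M2 is selected.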

Probabilistic programming

While conceptually simple, Bayesian methods can be mathematically and numerically challenging. Probabilistic programming languages (PPLs) implement functions to easily build Bayesian models together with efficient automatic inference methods. This helps separate the model building from the inference, allowing practitioners to focus on their specific problems and leaving PPLs to handle the computational details for them.

Applications

Statistical data analysis

See the separate Wikipedia entry on Bayesian Statistics, specifically the Statistical modeling section in that page.

Computer applications

Bayesian inference has applications in artificial intelligence and expert systems. Bayesian inference techniques have been a fundamental part of computerized pattern recognition techniques since the late 1950s. There is also an ever-growing connection between Bayesian methods and simulation-based Monte Carlo techniques, since complex models cannot be processed in closed form by a Bayesian analysis, while a graphical model structure ''may'' allow for efficient simulation algorithms like Gibbs sampling and other Metropolis–Hastings algorithm schemes. Recently, Bayesian inference has gained popularity among the phylogenetics community for these reasons; a number of applications allow many demographic and evolutionary parameters to be estimated simultaneously.
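As a sketch of the Metropolis–Hastings schemes mentioned above, the random-walk sampler below draws from the posterior of a coin's bias under a uniform prior, an assumed toy model. With a symmetric Gaussian proposal the Hastings correction cancels, and only the ratio of unnormalized posteriors is needed, which is why such samplers avoid computing the normalizing integral. The step size, burn-in length and seed are arbitrary choices.

```python
import math
import random

def log_posterior(p, heads, tails):
    """Unnormalized log posterior of a coin's bias p under a uniform prior."""
    if not 0.0 < p < 1.0:
        return -math.inf  # zero prior density outside (0, 1)
    return heads * math.log(p) + tails * math.log(1.0 - p)

def metropolis_hastings(heads, tails, n_samples=20_000, step=0.1, burn_in=2_000, seed=1):
    """Random-walk Metropolis sampler with Gaussian proposals."""
    rng = random.Random(seed)
    p = 0.5
    current = log_posterior(p, heads, tails)
    samples = []
    for i in range(burn_in + n_samples):
        proposal = p + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, heads, tails)
        # Symmetric proposal: accept with probability min(1, posterior ratio).
        # 1.0 - rng.random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < candidate - current:
            p, current = proposal, candidate
        if i >= burn_in:
            samples.append(p)
    return samples

samples = metropolis_hastings(heads=7, tails=3)
print(sum(samples) / len(samples))
```

For 7 heads and 3 tails the exact posterior is Beta(8, 4) with mean 2/3, so the sample mean should land close to 0.667.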

As applied to statistical classification, Bayesian inference has been used to develop algorithms for identifying e-mail spam. Applications that make use of Bayesian inference for spam filtering include CRM114, DSPAM, Bogofilter, SpamAssassin, SpamBayes, Mozilla, XEAMS, and others. Spam classification is treated in more detail in the article on the naïve Bayes classifier.
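A minimal naïve Bayes sketch of the idea, trained on hypothetical toy data: each known word contributes a log likelihood ratio, estimated with add-one (Laplace) smoothing, which is added to the log prior odds. The filters listed above differ considerably in tokenization and in how they combine word probabilities; this is not the algorithm of any of them.

```python
import math
from collections import Counter

def train(docs):
    """docs: list of (text, label) pairs with label "spam" or "ham"."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in docs:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab

def log_posterior_odds(text, model):
    """log P(spam | words) - log P(ham | words), treating words as independent given the class."""
    word_counts, doc_counts, vocab = model
    score = math.log(doc_counts["spam"] / doc_counts["ham"])  # log prior odds
    totals = {c: sum(word_counts[c].values()) for c in ("spam", "ham")}
    for w in text.lower().split():
        if w not in vocab:
            continue  # words never seen in training are ignored
        p_spam = (word_counts["spam"][w] + 1) / (totals["spam"] + len(vocab))
        p_ham = (word_counts["ham"][w] + 1) / (totals["ham"] + len(vocab))
        score += math.log(p_spam / p_ham)
    return score

# Hypothetical toy training data, for illustration only.
docs = [
    ("win money now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting schedule for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train(docs)
print(log_posterior_odds("win cheap money", model) > 0)  # True: classified as spam
print(log_posterior_odds("monday meeting", model) > 0)   # False: classified as ham
```

Working with log odds turns the product of per-word likelihood ratios into a sum, which avoids numerical underflow on long messages.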

Solomonoff's inductive inference is the theory of prediction based on observations; for example, predicting the next symbol based upon a given series of symbols. The only assumption is that the environment follows some unknown but computable probability distribution. It is a formal inductive framework that combines two well-studied principles of inductive inference: Bayesian statistics and Occam's razor. Solomonoff's universal prior probability of any prefix ''p'' of a computable sequence ''x'' is the sum of the probabilities of all programs (for a universal computer) that compute something starting with ''p''. Given some ''p'' and any computable but unknown probability distribution from which ''x'' is sampled, the universal prior and Bayes' theorem can be used to predict the yet unseen parts of ''x'' in an optimal fashion.

Bioinformatics and healthcare applications

Bayesian inference has been applied in various bioinformatics applications, including differential gene expression analysis. [Robinson, Mark D; McCarthy, Davis J; Smyth, Gordon K. edgeR: a Bioconductor package for differential expression analysis of digital gene expression data. Bioinformatics.] Bayesian inference is also used in a general cancer risk model, called CIRI (Continuous Individualized Risk Index), where serial measurements are incorporated to update a Bayesian model that is primarily built from prior knowledge.

In the courtroom

Bayesian inference can be used by jurors to coherently accumulate the evidence for and against a defendant, and to see whether, in totality, it meets their personal threshold for 'beyond a reasonable doubt'. Bayes' theorem is applied successively to all evidence presented, with the posterior from one stage becoming the prior for the next. The benefit of a Bayesian approach is that it gives the juror an unbiased, rational mechanism for combining evidence. It may be appropriate to explain Bayes' theorem to jurors in odds form, as betting odds are more widely understood than probabilities. Alternatively, a logarithmic approach, replacing multiplication with addition, might be easier for a jury to handle.

If the existence of the crime is not in doubt, only the identity of the culprit, it has been suggested that the prior should be uniform over the qualifying population. For example, if 1,000 people could have committed the crime, the prior probability of guilt would be 1/1000.
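The sequential, odds-form updating described above can be sketched as follows. The likelihood ratios are hypothetical numbers, and the simple product assumes that each item of evidence is conditionally independent of the others given guilt or innocence, which real evidence often is not. The second function is the logarithmic variant, in which multiplication becomes addition.

```python
import math

def update_odds(prior_prob, likelihood_ratios):
    """Apply Bayes' theorem in odds form, one item of evidence at a time.

    Each ratio is P(evidence | guilty) / P(evidence | innocent); the posterior
    odds after one item serve as the prior odds for the next.
    """
    odds = prior_prob / (1.0 - prior_prob)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)  # convert odds back to a probability

def update_log_odds(prior_prob, likelihood_ratios):
    """The same update on a log10 scale: each item adds its log likelihood ratio."""
    log_odds = math.log10(prior_prob / (1.0 - prior_prob))
    log_odds += sum(math.log10(lr) for lr in likelihood_ratios)
    odds = 10.0**log_odds
    return odds / (1.0 + odds)

prior = 1 / 1000  # uniform prior over 1,000 people who could have committed the crime
print(update_odds(prior, [999.0]))        # one hypothetical item of evidence
print(update_log_odds(prior, [20.0, 5.0]))  # two hypothetical items
```

Starting from the 1/1000 prior, a single item with a likelihood ratio of 999 brings the posterior probability of guilt to 0.5.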

The use of Bayes' theorem by jurors is controversial. In the United Kingdom, a defence expert witness explained Bayes' theorem to the jury in ''R v Adams''. The jury convicted, but the case went to appeal on the basis that no means of accumulating evidence had been provided for jurors who did not wish to use Bayes' theorem. The Court of Appeal upheld the conviction, but it also gave the opinion that "To introduce Bayes' Theorem, or any similar method, into a criminal trial plunges the jury into inappropriate and unnecessary realms of theory and complexity, deflecting them from their proper task."

Gardner-Medwin argues that the criterion on which a verdict in a criminal trial should be based is ''not'' the probability of guilt, but rather the ''probability of the evidence, given that the defendant is innocent'' (akin to a frequentist p-value). He argues that if the posterior probability of guilt is to be computed by Bayes' theorem, the prior probability of guilt must be known. This will depend on the incidence of the crime, which is an unusual piece of evidence to consider in a criminal trial. Consider the following three propositions:

*A The known facts and testimony could have arisen if the defendant is guilty

*B The known facts and testimony could have arisen if the defendant is innocent

*C The defendant is guilty.

Gardner-Medwin argues that the jury should believe both A and not-B in order to convict. A and not-B implies the truth of C, but the reverse is not true. It is possible that B and C are both true, but in this case he argues that a jury should acquit, even though they know that they will be letting some guilty people go free. See also Lindley's paradox.

Bayesian epistemology

Bayesian epistemology is a movement that advocates for Bayesian inference as a means of justifying the rules of inductive logic.

Karl Popper and David Miller have rejected the idea of Bayesian rationalism, i.e. using Bayes' rule to make epistemological inferences: it is prone to the same vicious circle as any other justificationist epistemology, because it presupposes what it attempts to justify. According to this view, a rational interpretation of Bayesian inference would see it merely as a probabilistic version of falsification, rejecting the belief, commonly held by Bayesians, that high likelihood achieved by a series of Bayesian updates would prove the hypothesis beyond any reasonable doubt, or even with likelihood greater than 0.

Other

* The scientific method is sometimes interpreted as an application of Bayesian inference. In this view, Bayes' rule guides (or should guide) the updating of probabilities about hypotheses conditional on new observations or experiments. Bayesian inference has also been applied to treat stochastic scheduling problems with incomplete information by Cai et al. (2009).

* Bayesian search theory is used to search for lost objects.

* Bayesian inference in phylogeny

* Bayesian tool for methylation analysis

* Bayesian approaches to brain function investigate the brain as a Bayesian mechanism.

* Bayesian inference in ecological studies

* Bayesian inference is used to estimate parameters in stochastic chemical kinetic models

* Bayesian inference in econophysics for currency or stock market prediction

* Bayesian inference in marketing

* Bayesian inference in motor learning

* Bayesian inference is used in probabilistic numerics to solve numerical problems

Bayes and Bayesian inference

The problem considered by Bayes in Proposition 9 of his essay, "An Essay towards solving a Problem in the Doctrine of Chances", is the posterior distribution for the parameter ''a'' (the success rate) of the binomial distribution.
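In modern terms, and assuming a uniform prior on ''a'', the posterior after k successes in n trials is Beta(k + 1, n - k + 1), and the probability that ''a'' lies between two given bounds is a difference of two Beta distribution function values. The sketch evaluates them exactly through the order-statistic identity P(Beta(a, b) <= x) = P(Binomial(a + b - 1, x) >= a), valid for integer parameters; this is a modern shortcut, not Bayes' own geometric argument, and the function names are illustrative.

```python
from math import comb

def beta_cdf_integer(x, a, b):
    """CDF of Beta(a, b) at x for positive integers a, b, via
    P(Beta(a, b) <= x) = P(Binomial(a + b - 1, x) >= a)."""
    m = a + b - 1
    return sum(comb(m, j) * x**j * (1 - x) ** (m - j) for j in range(a, m + 1))

def prob_rate_between(lo, hi, successes, trials):
    """Posterior probability that lo < a < hi under a uniform prior on a,
    after observing `successes` in `trials` Bernoulli trials."""
    a, b = successes + 1, trials - successes + 1  # posterior is Beta(a, b)
    return beta_cdf_integer(hi, a, b) - beta_cdf_integer(lo, a, b)

print(prob_rate_between(0.2, 0.5, 0, 0))  # no data: the posterior is uniform
print(prob_rate_between(0.0, 0.5, 1, 1))  # one success in one trial
```

With no data the probability that ''a'' lies in (0.2, 0.5) is 0.3; after one success in one trial, the probability that ''a'' < 0.5 drops to 0.25.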

History

The term ''Bayesian'' refers to Thomas Bayes (1701–1761), who proved that probabilistic limits could be placed on an unknown event. However, it was Pierre-Simon Laplace (1749–1827) who introduced (as Principle VI) what is now called Bayes' theorem and used it to address problems in celestial mechanics, medical statistics, reliability, and jurisprudence.

Early Bayesian inference, which used uniform priors following Laplace's principle of insufficient reason, was called "inverse probability" (because it infers backwards from observations to parameters, or from effects to causes). After the 1920s, "inverse probability" was largely supplanted by a collection of methods that came to be called frequentist statistics.

In the 20th century, the ideas of Laplace were further developed in two different directions, giving rise to ''objective'' and ''subjective'' currents in Bayesian practice. In the objective or "non-informative" current, the statistical analysis depends on only the model assumed, the data analyzed, ... Markov chain Monte Carlo methods, which removed many of the computational problems, and an increasing interest in nonstandard, complex applications. ... machine learning.

See also

References

Citations

Sources

* Aster, Richard; Borchers, Brian, and Thurber, Clifford (2012). ''Parameter Estimation and Inverse Problems'', Second Edition, Elsevier.

* Box, G. E. P. and Tiao, G. C. (1973) ''Bayesian Inference in Statistical Analysis'', Wiley.

* Jaynes, E. T. (2003) ''Probability Theory: The Logic of Science'', CUP. Link to Fragmentary Edition of March 1996.

Further reading

* For a full report on the history of Bayesian statistics and the debates with frequentist approaches, read

Elementary

The following books are listed in ascending order of probabilistic sophistication:

* Stone, JV (2013), "Bayes' Rule: A Tutorial Introduction to Bayesian Analysis", Sebtel Press, England.

* Bolstad, William M. (2007) ''Introduction to Bayesian Statistics'': Second Edition, John Wiley.

* Updated classic textbook. Bayesian theory clearly presented.

* Lee, Peter M. ''Bayesian Statistics: An Introduction''. Fourth Edition (2012), John Wiley.

Intermediate or advanced

* DeGroot, Morris H., ''Optimal Statistical Decisions''. Wiley Classics Library. 2004. (Originally published in 1970 by McGraw-Hill.)

* Jaynes, E. T. (1998) ''Probability Theory: The Logic of Science''.

* O'Hagan, A. and Forster, J. (2003) ''Kendall's Advanced Theory of Statistics'', Volume 2B: ''Bayesian Inference''. Arnold, New York.

* Glenn Shafer and Pearl, Judea, eds. (1988) ''Probabilistic Reasoning in Intelligent Systems'', San Mateo, CA: Morgan Kaufmann.

* Pierre Bessière et al. (2013), ''Bayesian Programming'', CRC Press.

* Francisco J. Samaniego (2010), "A Comparison of the Bayesian and Frequentist Approaches to Estimation", Springer, New York.

External links

* Bayesian Statistics from Scholarpedia.

from Queen Mary University of London

* ttp://cocosci.berkeley.edu/tom/bayes.html Bayesian reading list, categorized and annotated by Tom Griffiths

* A. Hajek and S. Hartmann: Bayesian Epistemology, in: J. Dancy et al. (eds.), A Companion to Epistemology. Oxford: Blackwell 2010, 93–106.

* S. Hartmann and J. Sprenger: Bayesian Epistemology, in: S. Bernecker and D. Pritchard (eds.), Routledge Companion to Epistemology. London: Routledge 2010, 609–620.

* ''Stanford Encyclopedia of Philosophy'': "Inductive Logic"

* Bayesian Confirmation Theory
