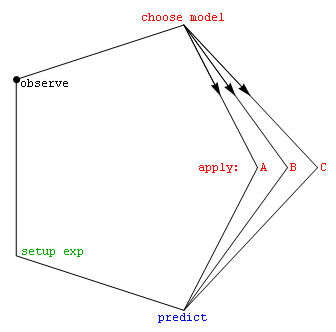

Model Selection

Model selection is the task of selecting a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty. Introduction: In its most basic forms, model selection is one ...

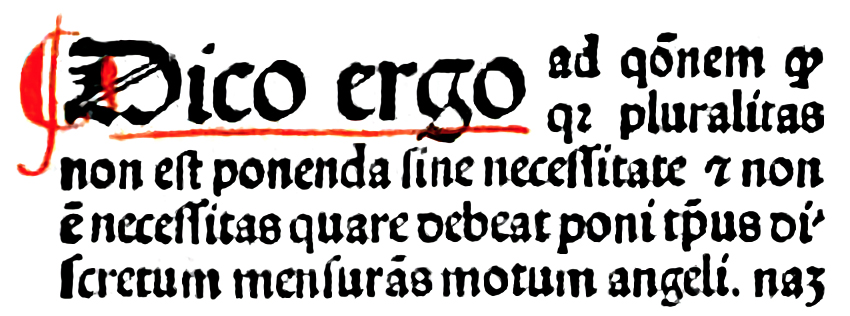

Occam’s Razor

Occam's razor, Ockham's razor, or Ocham's razor (Latin: novacula Occami), also known as the principle of parsimony or the law of parsimony (Latin: lex parsimoniae), is the problem-solving principle that "entities should not be multiplied beyond necessity". It is generally understood in the sense that with competing theories or explanations, the simpler one, for example a model with fewer parameters, is to be preferred. The idea is frequently attributed to English Franciscan friar William of Ockham, a scholastic philosopher and theologian, although he never used these exact words. This philosophical razor advocates that when presented with competing hypotheses about the same prediction, one should select the solution with the fewest assumptions; it is not meant to be a way of choosing between hypotheses that make different predictions. Similarly, in science, Occam's razor is used as an abductive heuristic in the development of theoretical models rather than as a rigorou ...

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks (Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F., "Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning", IEEE Transactions on Vehicular Technology, 2020). A subset of machine learning is closely related to computational statistics, which focuses on making predicti ...

Bias Of An Estimator

In statistics, the bias of an estimator (or bias function) is the difference between this estimator's expected value and the true value of the parameter being estimated. An estimator or decision rule with zero bias is called *unbiased*. In statistics, "bias" is a property of an estimator. Bias is a distinct concept from consistency: consistent estimators converge in probability to the true value of the parameter, but may be biased or unbiased; see bias versus consistency for more. All else being equal, an unbiased estimator is preferable to a biased estimator, although in practice, biased estimators (with generally small bias) are frequently used. When a biased estimator is used, bounds of the bias are calculated. A biased estimator may be used for various reasons: because an unbiased estimator does not exist without further assumptions about a population; because an estimator is difficult to compute (as in unbiased estimation of standard deviation); because a biased estimato ...
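
The definition of bias as "expected value minus true value" can be checked by simulation. The sketch below is an illustration chosen for this purpose, not taken from the excerpt: it compares the variance estimator that divides by n with the one that divides by n - 1, under the assumption of standard normal data (true variance 1) and samples of size 5.

```python
import random

def variance_estimate(sample, ddof):
    """Sum of squared deviations divided by (n - ddof)."""
    n = len(sample)
    mean = sum(sample) / n
    return sum((x - mean) ** 2 for x in sample) / (n - ddof)

def estimated_bias(ddof, true_variance=1.0, n=5, trials=20000, seed=0):
    """Monte Carlo estimate of E[estimator] - true value."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        sample = [rng.gauss(0.0, true_variance ** 0.5) for _ in range(n)]
        total += variance_estimate(sample, ddof)
    return total / trials - true_variance

bias_divide_by_n = estimated_bias(ddof=0)          # theory: -1/n = -0.2
bias_divide_by_n_minus_1 = estimated_bias(ddof=1)  # theory: 0
print(bias_divide_by_n, bias_divide_by_n_minus_1)
```

The simulated bias of the divide-by-n estimator should sit close to its theoretical value of -sigma^2 / n, while the divide-by-(n - 1) estimator should be close to zero; the exact printed numbers depend on the seed.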

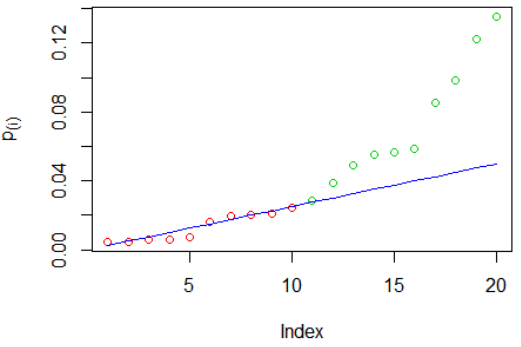

False Discovery Rate

In statistics, the false discovery rate (FDR) is a method of conceptualizing the rate of type I errors in null hypothesis testing when conducting multiple comparisons. FDR-controlling procedures are designed to control the FDR, which is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections of the null). Equivalently, the FDR is the expected ratio of the number of false positive classifications (false discoveries) to the total number of positive classifications (rejections of the null). The total number of rejections of the null includes both the number of false positives (FP) and true positives (TP). Simply put, FDR = FP / (FP + TP). FDR-controlling procedures provide less stringent control of Type I errors compared to family-wise error rate (FWER) controlling procedures (such as the Bonferroni correction), which control the probability of *at least one* Type I error. Thus, FDR-controlling procedures have greater power, at the co ...
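
As a small illustration of the ratio FP / (FP + TP), the sketch below computes the false discovery proportion for a few experiments and averages it, since the FDR is the expectation of that proportion. The counts are hypothetical, and treating an experiment with no rejections as contributing 0 is an assumed convention, not something stated in the excerpt.

```python
def false_discovery_proportion(false_positives, true_positives):
    """FP / (FP + TP); taken to be 0 when nothing was rejected (assumed convention)."""
    rejections = false_positives + true_positives
    if rejections == 0:
        return 0.0
    return false_positives / rejections

# Hypothetical (FP, TP) counts from three repeated experiments.
experiments = [(5, 45), (0, 0), (2, 18)]
proportions = [false_discovery_proportion(fp, tp) for fp, tp in experiments]

# The FDR is an expectation; the sample mean is a crude stand-in for it here.
average_proportion = sum(proportions) / len(proportions)
print(proportions, average_proportion)
```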

Deviance Information Criterion

The deviance information criterion (DIC) is a hierarchical modeling generalization of the Akaike information criterion (AIC). It is particularly useful in Bayesian model selection problems where the posterior distributions of the models have been obtained by Markov chain Monte Carlo (MCMC) simulation. DIC is an asymptotic approximation as the sample size becomes large, like AIC. It is only valid when the posterior distribution is approximately multivariate normal. Definition: Define the deviance as $D(\theta) = -2 \log(p(y \mid \theta)) + C$, where $y$ are the data, $\theta$ are the unknown parameters of the model and $p(y \mid \theta)$ is the likelihood function. $C$ is a constant that cancels out in all calculations that compare different models, and which therefore does not need to be known. There are two calculations in common usage for the effective number of parameters of the model. The first is $p_D = \overline{D(\theta)} - D(\bar{\theta})$, where $\bar{\theta}$ is the expectation of $\theta$. The second, as ...
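
The first calculation of the effective number of parameters (posterior mean deviance minus deviance at the posterior mean) can be sketched in a few lines. Everything specific here is an assumption made for illustration: a normal model with unknown mean and known standard deviation 1, the constant C set to 0, and posterior draws sampled directly from the flat-prior posterior as a stand-in for real MCMC output.

```python
import math
import random

def deviance(theta, y, sigma=1.0):
    """D(theta) = -2 log p(y | theta) for a normal model, with C = 0."""
    n = len(y)
    log_lik = (-0.5 * n * math.log(2.0 * math.pi * sigma ** 2)
               - sum((yi - theta) ** 2 for yi in y) / (2.0 * sigma ** 2))
    return -2.0 * log_lik

def effective_parameters(theta_draws, y):
    """p_D = mean of D(theta) over the draws, minus D at the mean of the draws."""
    mean_deviance = sum(deviance(t, y) for t in theta_draws) / len(theta_draws)
    theta_bar = sum(theta_draws) / len(theta_draws)
    return mean_deviance - deviance(theta_bar, y)

rng = random.Random(0)
y = [rng.gauss(2.0, 1.0) for _ in range(50)]  # simulated data
y_bar = sum(y) / len(y)

# Stand-in for MCMC: with a flat prior the posterior of the mean is N(y_bar, 1/n).
theta_draws = [rng.gauss(y_bar, 1.0 / math.sqrt(len(y))) for _ in range(5000)]

p_d = effective_parameters(theta_draws, y)
print(p_d)  # should be near 1, since the model has one free parameter
```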

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation is a resampling method that uses different portions of the data to test and train a model on different iterations. It is mainly used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. In a prediction problem, a model is usually given a dataset of *known data* on which training is run (*training dataset*), and a dataset of *unknown data* (or *first seen* data) against which the model is tested (called the validation dataset or *testing set*). The goal of cross-validation is to test the model's ability to predict new data that was not used in estimating it, in order to flag problems like overfitting or selection bias and to give an insight o ...
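
The idea of training and testing on different portions of the data across iterations can be sketched as k-fold cross-validation. This is one common variant, picked here for illustration; the helper names, the simulated data, and the choice of a least-squares line as the model are all assumptions.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and deal them into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def cross_val_mse(xs, ys, k=5):
    """Average held-out mean squared error over k folds."""
    fold_errors = []
    for test_idx in k_fold_indices(len(xs), k):
        held_out = set(test_idx)
        train_x = [x for i, x in enumerate(xs) if i not in held_out]
        train_y = [y for i, y in enumerate(ys) if i not in held_out]
        slope, intercept = fit_line(train_x, train_y)
        errors = [(ys[i] - (intercept + slope * xs[i])) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k

rng = random.Random(1)
xs = [i / 10 for i in range(40)]
ys = [3.0 * x + 1.0 + rng.gauss(0.0, 0.5) for x in xs]  # simulated data
cv_mse = cross_val_mse(xs, ys)
print(cv_mse)
```

Each observation is held out exactly once, so the averaged error is computed only on data the fitted line never saw during training.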

Bayesian Information Criterion

In statistics, the Bayesian information criterion (BIC) or Schwarz information criterion (also SIC, SBC, SBIC) is a criterion for model selection among a finite set of models; models with lower BIC are generally preferred. It is based, in part, on the likelihood function and it is closely related to the Akaike information criterion (AIC). When fitting models, it is possible to increase the likelihood by adding parameters, but doing so may result in overfitting. Both BIC and AIC attempt to resolve this problem by introducing a penalty term for the number of parameters in the model; the penalty term is larger in BIC than in AIC for sample sizes greater than 7. The BIC was developed by Gideon E. Schwarz and published in a 1978 paper, where he gave a Bayesian argument for adopting it. Definition: The BIC is formally defined as $\mathrm{BIC} = k\ln(n) - 2\ln(\widehat{L})$, where $\widehat{L}$ is the maximized value of the likelihood function of the model $M$, i.e. $\widehat{L} = p(x \mid \widehat{\theta},$ ...
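
The BIC formula, and the remark that its penalty exceeds AIC's for sample sizes greater than 7, can be checked directly. In the sketch below, `k` is taken to be the number of estimated parameters and `n` the number of observations; the AIC penalty of 2k is the standard form and is assumed here, since the excerpt does not spell it out.

```python
import math

def bic(num_params, num_obs, max_log_likelihood):
    """BIC = k * ln(n) - 2 * ln(L_hat), given the maximized log-likelihood."""
    return num_params * math.log(num_obs) - 2.0 * max_log_likelihood

def bic_penalty(num_params, num_obs):
    return num_params * math.log(num_obs)

def aic_penalty(num_params):
    # Standard AIC penalty (assumed; not quoted in the excerpt).
    return 2.0 * num_params

# ln(n) exceeds 2 once n > e^2 (about 7.39), so the crossover is between 7 and 8.
for n in (7, 8):
    print(n, bic_penalty(3, n) > aic_penalty(3))
```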

Bayes Factor

The Bayes factor is a ratio of two competing statistical models represented by their marginal likelihood, and is used to quantify the support for one model over the other. The models in question can have a common set of parameters, such as a null hypothesis and an alternative, but this is not necessary; for instance, it could also be a non-linear model compared to its linear approximation. The Bayes factor can be thought of as a Bayesian analog to the likelihood-ratio test, but since it uses the (integrated) marginal likelihood instead of the maximized likelihood, the two tests coincide only under simple hypotheses (e.g., two specific parameter values). Also, in contrast with null hypothesis significance testing, Bayes factors support evaluation of evidence *in favor* of a null hypothesis, rather than only allowing the null to be rejected or not rejected. Although conceptually simple, the computation of the Bayes factor can be challenging depending on the complexity of the model ...
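
A case where the marginal likelihoods have closed forms makes the ratio concrete. The sketch below is an illustrative setup not drawn from the excerpt: a coin-flip experiment comparing a point null (heads probability 0.5) against an alternative with a uniform prior on the heads probability. Under the uniform prior, the integral of p^h (1 - p)^(n - h) over [0, 1] equals 1 / ((n + 1) * C(n, h)).

```python
import math

def marginal_likelihood_point_null(heads, flips, p0=0.5):
    """Likelihood of one specific sequence under a fixed heads probability."""
    return p0 ** heads * (1.0 - p0) ** (flips - heads)

def marginal_likelihood_uniform_prior(heads, flips):
    """Likelihood of the same sequence, integrated over a Uniform(0, 1) prior."""
    return 1.0 / ((flips + 1) * math.comb(flips, heads))

def bayes_factor_null_vs_alt(heads, flips):
    """Values above 1 favor the null; values below 1 favor the alternative."""
    return (marginal_likelihood_point_null(heads, flips)
            / marginal_likelihood_uniform_prior(heads, flips))

balanced = bayes_factor_null_vs_alt(5, 10)  # 5 heads in 10 flips
lopsided = bayes_factor_null_vs_alt(9, 10)  # 9 heads in 10 flips
print(balanced, lopsided)
```

With 5 heads in 10 flips the factor is above 1, which is an instance of evidence in favor of the null rather than a mere failure to reject it.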

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information criterion ...
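
To make the trade-off between goodness of fit and simplicity concrete, the sketch below compares two candidate models on simulated data using the standard form AIC = 2k - 2 ln(L_hat), which the truncated excerpt does not reach and is therefore assumed here. The data, the two candidates (a constant and a straight line, both with Gaussian errors), and the parameter counts (regression coefficients plus the error variance) are illustrative choices.

```python
import math
import random

def gaussian_max_log_likelihood(residuals):
    """Maximized Gaussian log-likelihood, using sigma^2_hat = RSS / n."""
    n = len(residuals)
    sigma2_hat = sum(r * r for r in residuals) / n
    return -0.5 * n * (math.log(2.0 * math.pi * sigma2_hat) + 1.0)

def aic(num_params, max_log_likelihood):
    return 2.0 * num_params - 2.0 * max_log_likelihood

rng = random.Random(0)
xs = [i / 5.0 for i in range(30)]
ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]  # simulated linear trend

mean_x = sum(xs) / len(xs)
mean_y = sum(ys) / len(ys)

# Candidate 1: constant mean (parameters: mean, variance).
residuals_constant = [y - mean_y for y in ys]

# Candidate 2: least-squares line (parameters: intercept, slope, variance).
slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
intercept = mean_y - slope * mean_x
residuals_line = [y - (intercept + slope * x) for x, y in zip(xs, ys)]

aic_constant = aic(2, gaussian_max_log_likelihood(residuals_constant))
aic_line = aic(3, gaussian_max_log_likelihood(residuals_line))
best = min([("constant", aic_constant), ("line", aic_line)], key=lambda item: item[1])[0]
print(aic_constant, aic_line, best)
```

Only differences in AIC between candidates fitted to the same data are meaningful; the absolute values carry no interpretation on their own.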

Statistical Model Specification

In statistics, model specification is part of the process of building a statistical model: specification consists of selecting an appropriate functional form for the model and choosing which variables to include. For example, given personal income $y$ together with years of schooling $s$ and on-the-job experience $x$, we might specify a functional relationship $y = f(s,x)$ as follows: $\ln y = \ln y_0 + \rho s + \beta_1 x + \beta_2 x^2 + \varepsilon$, where $\varepsilon$ is the unexplained error term that is supposed to comprise independent and identically distributed Gaussian variables. The statistician Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Specification error and bias: Specification error occurs when the functional form or the choice of independent variables poorly represents relevant aspects of the true data-generating process. In particular, bias (the expected value of the di ...

Exploratory Data Analysis

In statistics, exploratory data analysis (EDA) is an approach to analyzing data sets to summarize their main characteristics, often using statistical graphics and other data visualization methods. A statistical model can be used or not, but primarily EDA is for seeing what the data can tell us beyond the formal modeling, and it thereby contrasts with traditional hypothesis testing. Exploratory data analysis has been promoted by John Tukey since 1970 to encourage statisticians to explore the data, and possibly formulate hypotheses that could lead to new data collection and experiments. EDA is different from initial data analysis (IDA), which focuses more narrowly on checking assumptions required for model fitting and hypothesis testing, and handling missing values and making transformations of variables as needed. EDA encompasses IDA. Overview: Tukey defined data analysis in 1961 as: "Procedures for analyzing data, techniques for interpreting the results of such procedures, ways of pla ...

Data Transformation (statistics)

In statistics, data transformation is the application of a deterministic mathematical function to each point in a data set; that is, each data point $z_i$ is replaced with the transformed value $y_i = f(z_i)$, where $f$ is a function. Transforms are usually applied so that the data appear to more closely meet the assumptions of a statistical inference procedure that is to be applied, or to improve the interpretability or appearance of graphs. Nearly always, the function that is used to transform the data is invertible, and generally is continuous. The transformation is usually applied to a collection of comparable measurements. For example, if we are working with data on people's incomes in some currency unit, it would be common to transform each person's income value by the logarithm function. Motivation: Guidance for how data should be transformed, or whether a transformation should be applied at all, should come from the particular statistical analysis to be per ...
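
The income example can be sketched as a pointwise log transform followed by its inverse, which illustrates the invertibility mentioned above. The income figures are hypothetical values chosen for illustration only.

```python
import math

# Hypothetical incomes in some currency unit (illustrative values only).
incomes = [18_000.0, 32_000.0, 45_000.0, 1_200_000.0]

# Apply the same deterministic function f = log to every data point.
log_incomes = [math.log(z) for z in incomes]

# The log is invertible: exp recovers the original values up to rounding.
recovered = [math.exp(y) for y in log_incomes]

# On the log scale the largest value no longer dwarfs the rest.
spread_raw = max(incomes) / min(incomes)
spread_log = max(log_incomes) / min(log_incomes)
print(spread_raw, spread_log)
```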