
Unsupervised Classification

Unsupervised learning is a type of algorithm that learns patterns from untagged data. The hope is that through mimicry, which is an important mode of learning in people, the machine is forced to build a concise representation of its world and then generate imaginative content from it. In contrast to supervised learning, where data is tagged by an expert (e.g. as a "ball" or "fish"), unsupervised methods exhibit self-organization that captures patterns as probability densities or a combination of neural feature preferences encoded in the machine's weights and activations. The other levels in the supervision spectrum are reinforcement learning, where the machine is given only a numerical performance score as guidance, and semi-supervised learning, where a small portion of the data is tagged. Neural network tasks are often categorized as discriminative (recognition) or generative (imagination). Often but not always, discriminative ta ...

Supervised Learning

Supervised learning (SL) is a machine learning paradigm for problems where the available data consists of labelled examples, meaning that each data point contains features (covariates) and an associated label. The goal of supervised learning algorithms is learning a function that maps feature vectors (inputs) to labels (output), based on example input-output pairs. It infers a function from labelled training data consisting of a set of training examples. In supervised learning, each example is a pair consisting of an input object (typically a vector) and a desired output value (also called the supervisory signal). A supervised learning algorithm analyzes the training data and produces an inferred function, which can be used for mapping new examples. An optimal scenario will allow for the algorithm to correctly determine the class labels for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a "reasonable" way (see inductive b ...
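As a minimal sketch of inferring a function from input-output pairs, the following Python uses a one-nearest-neighbour rule. The rule itself, the name `fit_nearest_neighbour`, and the toy feature values are illustrative assumptions and do not come from the excerpt.

```python
def fit_nearest_neighbour(examples):
    """Return a function that maps a feature vector to the label of the
    closest training example (squared Euclidean distance)."""
    def predict(features):
        def sq_dist(pair):
            return sum((a - b) ** 2 for a, b in zip(pair[0], features))
        return min(examples, key=sq_dist)[1]
    return predict

# Each training example is a pair: (input vector, desired output label).
training = [([1.0, 1.0], "ball"), ([1.2, 0.9], "ball"), ([4.0, 0.5], "fish")]
classify = fit_nearest_neighbour(training)
label = classify([3.8, 0.6])  # an input that was not in the training set
```

The returned `classify` function plays the role of the inferred function: it can be applied to inputs that never appeared among the training examples.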

Hopfield Network

A Hopfield network (or Ising model of a neural network or Ising–Lenz–Little model) is a form of recurrent artificial neural network and a type of spin glass system popularised by John Hopfield in 1982, described earlier by Little in 1974, and based on Ernst Ising's work with Wilhelm Lenz on the Ising model. Hopfield networks serve as content-addressable ("associative") memory systems with binary threshold nodes, or with continuous variables. Hopfield networks also provide a model for understanding human memory. The Ising model of a neural network as a memory model was first proposed by William A. Little in 1974, which was acknowledged by Hopfield in his 1982 paper. Networks with continuous dynamics were developed by Hopfield in his 1984 paper. A major advance in memory storage capacity was developed by Krotov and Hopfield in 2016 through a change in network dynamics and energy function. This idea was further extended by Demircigil and collaborators in 2017. The contin ...
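The content-addressable behaviour with binary threshold nodes can be sketched in a few lines of Python. The storage rule used here (a Hebbian outer product) and the function names are assumptions chosen for illustration; the excerpt does not specify them.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns
    using an outer-product rule (assumed here), with no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, state, sweeps=5):
    """Update nodes one at a time with a binary threshold for a fixed number of sweeps."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            total = sum(w[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if total >= 0 else -1
    return s

stored = [1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])
noisy = [1, -1, 1, -1, 1, 1]        # the stored pattern with its last bit flipped
recovered = recall(weights, noisy)  # the network settles back on the stored pattern
```

Presenting a corrupted pattern and letting the nodes update is what makes the memory addressable by content rather than by location.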

Automatic Target Recognition

Automatic target recognition (ATR) is the ability for an algorithm or device to recognize targets or other objects based on data obtained from sensors. Target recognition was initially done by using an audible representation of the received signal, where a trained operator would decipher that sound to classify the target illuminated by the radar. While these trained operators had success, automated methods have been developed and continue to be developed that allow for more accuracy and speed in classification. ATR can be used to identify man-made objects such as ground and air vehicles as well as biological targets such as animals, humans, and vegetative clutter. This can be useful for everything from recognizing an object on a battlefield to filtering out interference caused by large flocks of birds on Doppler weather radar. Possible military applications include a simple identification system such as an IFF transponder, and ATR is used in other applications such as unmanne ...

Adaptive Resonance Theory

Adaptive resonance theory (ART) is a theory developed by Stephen Grossberg and Gail Carpenter on aspects of how the brain processes information. It describes a number of neural network models which use supervised and unsupervised learning methods, and address problems such as pattern recognition and prediction. The primary intuition behind the ART model is that object identification and recognition generally occur as a result of the interaction of 'top-down' observer expectations with 'bottom-up' sensory information. The model postulates that 'top-down' expectations take the form of a memory template or prototype that is then compared with the actual features of an object as detected by the senses. This comparison gives rise to a measure of category belongingness. As long as this difference between sensation and expectation does not exceed a set threshold called the 'vigilance parameter', the sensed object will be considered a member of the expected class. The system thus offers ...
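The vigilance test can be illustrated with a heavily simplified Python sketch over binary feature vectors. The match score used here (the fraction of active input features that the template also contains), the default vigilance of 0.75, and the function names are assumptions; template updating and the search order used by full ART models are omitted.

```python
def match_score(features, template):
    """Fraction of the active input features that the template also expects."""
    active = sum(features)
    shared = sum(f & t for f, t in zip(features, template))
    return shared / active if active else 1.0

def categorize(features, templates, vigilance=0.75):
    """Return the index of the first template passing the vigilance test,
    or store the input as a new template when none passes."""
    for index, template in enumerate(templates):
        if match_score(features, template) >= vigilance:
            return index
    templates.append(list(features))
    return len(templates) - 1

templates = [[1, 1, 1, 0, 0, 0]]
same_class = categorize([1, 1, 0, 0, 0, 0], templates)  # close enough to template 0
new_class = categorize([0, 0, 0, 1, 1, 1], templates)   # mismatch, so a new category is created
```

Raising the vigilance value makes the categories narrower, while lowering it lets a single template absorb more varied inputs.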

Self-organizing Map

A self-organizing map (SOM) or self-organizing feature map (SOFM) is an unsupervised machine learning technique used to produce a low-dimensional (typically two-dimensional) representation of a higher-dimensional data set while preserving the topological structure of the data. For example, a data set with p variables measured in n observations could be represented as clusters of observations with similar values for the variables. These clusters then could be visualized as a two-dimensional "map" such that observations in proximal clusters have more similar values than observations in distal clusters. This can make high-dimensional data easier to visualize and analyze. An SOM is a type of artificial neural network but is trained using competitive learning rather than the error-correction learning (e.g., backpropagation with gradient descent) used by other artificial neural networks. The SOM was introduced by the Finnish professor Teuvo Kohonen in the 1980s and therefore is sometim ...
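A single competitive-learning step might look like the Python sketch below. It assumes a one-dimensional grid of four nodes for brevity (the excerpt notes that maps are typically two-dimensional), a fixed learning rate, and a hard neighbourhood of radius 1; all names and values are illustrative.

```python
def best_matching_unit(weights, x):
    """Index of the map node whose weight vector is closest to x."""
    def sq_dist(w):
        return sum((wi - xi) ** 2 for wi, xi in zip(w, x))
    return min(range(len(weights)), key=lambda i: sq_dist(weights[i]))

def train_step(weights, x, rate=0.5, radius=1):
    """Pull the winning node and its grid neighbours toward x; return the winner's index."""
    winner = best_matching_unit(weights, x)
    for i in range(len(weights)):
        if abs(i - winner) <= radius:
            weights[i] = [wi + rate * (xi - wi) for wi, xi in zip(weights[i], x)]
    return winner

# Four nodes on a 1-D grid, each holding a 2-D weight vector.
som = [[0.0, 0.0], [0.25, 0.25], [0.75, 0.75], [1.0, 1.0]]
winner = train_step(som, [1.0, 0.8])  # only the winner and its neighbours move
```

Because neighbouring nodes are updated together, nearby nodes on the grid end up representing similar inputs, which is how the map preserves topological structure.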

Artificial Neural Network

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains. An ANN is based on a collection of connected units or nodes called artificial neurons, which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal to other neurons. An artificial neuron receives signals, processes them, and can signal the neurons connected to it. The "signal" at a connection is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs. The connections are called edges. Neurons and edges typically have a weight that adjusts as learning proceeds. The weight increases or decreases the strength of the signal at a connection. Neurons may have a threshold such that a signal is sent only if the aggregate signal crosses that threshold. Typically ...
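The computation performed by one artificial neuron can be sketched in Python as follows. The logistic sigmoid is only one possible choice of non-linear function, and the function names and example weights are assumptions made for illustration.

```python
import math

def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the incoming signals passed through a non-linear function
    (a logistic sigmoid is assumed here)."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def threshold_neuron(inputs, weights, threshold):
    """Send a signal (1) only if the aggregate weighted input crosses the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

smooth = neuron_output([0.0, 0.0], [1.0, 1.0])        # sigmoid of a zero sum
fires = threshold_neuron([1, 1], [0.6, 0.6], 1.0)     # aggregate 1.2 crosses 1.0
silent = threshold_neuron([1, 0], [0.6, 0.6], 1.0)    # aggregate 0.6 does not
```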

Pattern Recognition

Pattern recognition is the automated recognition of patterns and regularities in data. It has applications in statistical data analysis, signal processing, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning. Pattern recognition has its origins in statistics and engineering; some modern approaches to pattern recognition include the use of machine learning, due to the increased availability of big data and a new abundance of processing power. These activities can be viewed as two facets of the same field of application, and they have undergone substantial development over the past few decades. Pattern recognition systems are commonly trained from labeled "training" data. When no labeled data are available, other algorithms can be used to discover previously unknown patterns. KDD and data mining have a larger focus on unsupervised methods and stronger connection to business use. Pattern recognition focuses more on the s ...

Spike-timing-dependent Plasticity

Spike-timing-dependent plasticity (STDP) is a biological process that adjusts the strength of connections between neurons in the brain. The process adjusts the connection strengths based on the relative timing of a particular neuron's output and input action potentials (or spikes). The STDP process partially explains the activity-dependent development of nervous systems, especially with regard to long-term potentiation and long-term depression. Under the STDP process, if an input spike to a neuron tends, on average, to occur immediately before that neuron's output spike, then that particular input is made somewhat stronger. If an input spike tends, on average, to occur immediately after an output spike, then that particular input is made somewhat weaker, hence "spike-timing-dependent plasticity". Thus, inputs that might be the cause of the post-synaptic neuron's excitation are made even more likely to contribute in the future, whereas inputs that are not the cause ...
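The timing rule can be sketched in Python as a weight change that depends on the gap between an input spike and the output spike. The exponential window and the constants `a_plus`, `a_minus`, and `tau` are assumptions commonly used in simplified models, not values taken from the excerpt.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one input (pre) spike and one output (post) spike, times in ms.
    Input before output strengthens the connection; input after output weakens it."""
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0

strengthen = stdp_delta(t_pre=10.0, t_post=15.0)  # input 5 ms before output
weaken = stdp_delta(t_pre=15.0, t_post=10.0)      # input 5 ms after output
distant = stdp_delta(t_pre=10.0, t_post=40.0)     # input 30 ms before output
```

Under this assumed window, spikes that occur closer together in time produce larger changes than spikes that are far apart.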

Hebbian Learning

Hebbian theory is a neuroscientific theory claiming that an increase in synaptic efficacy arises from a presynaptic cell's repeated and persistent stimulation of a postsynaptic cell. It is an attempt to explain synaptic plasticity, the adaptation of brain neurons during the learning process. It was introduced by Donald Hebb in his 1949 book The Organization of Behavior. The theory is also called Hebb's rule, Hebb's postulate, and cell assembly theory. Hebb states it as follows: Let us assume that the persistence or repetition of a reverberatory activity (or "trace") tends to induce lasting cellular changes that add to its stability. ... When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased. The theory is often summarized as "Cells that fire together wire togeth ...
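A common mathematical simplification of Hebb's postulate increases a connection weight in proportion to the product of presynaptic and postsynaptic activity. The Python sketch below assumes that simplified rule, binary activity values, and an arbitrary learning rate; none of these details come from the excerpt.

```python
def hebbian_update(weight, pre, post, rate=0.1):
    """Increase the weight in proportion to joint pre- and postsynaptic activity."""
    return weight + rate * pre * post

w = 0.0
# (pre, post) activity pairs: only the first two show both cells active together.
for pre, post in [(1, 1), (1, 1), (1, 0), (0, 1)]:
    w = hebbian_update(w, pre, post)
```

Only the trials in which both cells are active change the weight, which mirrors the idea that cell A must repeatedly take part in firing cell B for its efficiency to increase.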

Donald Hebb

Donald Olding Hebb (July 22, 1904 – August 20, 1985) was a Canadian psychologist who was influential in the area of neuropsychology, where he sought to understand how the function of neurons contributed to psychological processes such as learning. He is best known for his theory of Hebbian learning, which he introduced in his classic 1949 work The Organization of Behavior. He has been described as the father of neuropsychology and neural networks. A Review of General Psychology survey, published in 2002, ranked Hebb as the 19th most cited psychologist of the 20th century. His views on learning described behavior and thought in terms of brain function, explaining cognitive processes in terms of connections between neuron assemblies. Donald Hebb was born in Chester, Nova Scotia, the oldest of four children of Arthur M. and M. Clara (Olding) Hebb, and lived there until the age of 16, when his parents moved to Dartmouth, Nova Scotia. Hebb's parents were both med ...

Variational Autoencoder

In machine learning, a variational autoencoder (VAE) is an artificial neural network architecture introduced by Diederik P. Kingma and Max Welling, belonging to the families of probabilistic graphical models and variational Bayesian methods. Variational autoencoders are often associated with the autoencoder model because of their architectural affinity, but with significant differences in the goal and mathematical formulation. Variational autoencoders are probabilistic generative models that require neural networks as only a part of their overall structure, as e.g. in VQ-VAE. The neural network components are typically referred to as the encoder and decoder for the first and second component respectively. The first neural network maps the input variable to a latent space that corresponds to the parameters of a variational distribution. In this way, the encoder can produce multiple different samples that all come from the same distribution. The decoder has the opposite function, ...
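How one set of encoder outputs can yield many different latent samples is sketched below in Python. The sketch assumes a diagonal Gaussian variational distribution parameterised by a mean and a log-variance, and it uses fixed numbers in place of a real encoder network; the function name and all values are hypothetical.

```python
import math
import random

def sample_latent(mean, log_var, rng):
    """Draw one latent vector as mean + standard deviation * unit Gaussian noise."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

rng = random.Random(0)  # seeded so the sketch is repeatable
# Stand-ins for what an encoder might output for a single input.
mean, log_var = [0.5, -1.0], [-2.0, -2.0]
samples = [sample_latent(mean, log_var, rng) for _ in range(1000)]
average_first = sum(s[0] for s in samples) / len(samples)
```

Every sample differs, but all are drawn from the same distribution, so their average stays close to the mean that the encoder parameters describe.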

Helmholtz Machine

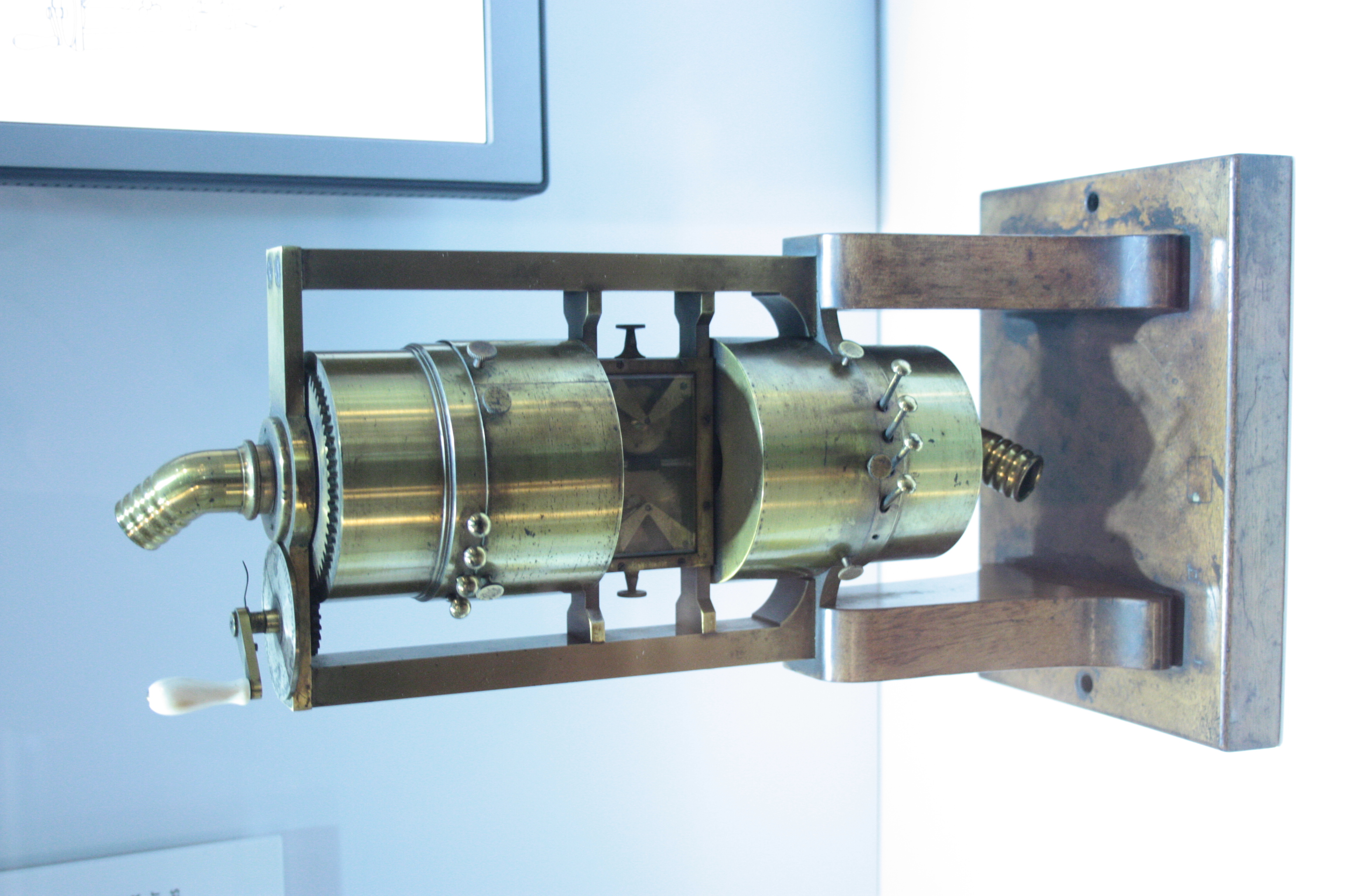

Hermann Ludwig Ferdinand von Helmholtz (31 August 1821 – 8 September 1894) was a German physicist and physician who made significant contributions in several scientific fields, particularly hydrodynamic stability. The Helmholtz Association, the largest German association of research institutions, is named in his honor. In the fields of physiology and psychology, Helmholtz is known for his mathematics concerning the eye, theories of vision, ideas on the visual perception of space, color vision research, the sensation of tone, perceptions of sound, and empiricism in the physiology of perception. In physics, he is known for his theories on the conservation of energy, work in electrodynamics, chemical thermodynamics, and on a mechanical foundation of thermodynamics. As a philosopher, he is known for his philosophy of science, ideas on the relation between the laws of perception and the laws of nature, the science of aesthetics, and ideas on the civilizing power of science. ...