
Tf–idf

In information retrieval, tf–idf (also TF*IDF, TFIDF, TF–IDF, or Tf–idf), short for term frequency–inverse document frequency, is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus. It is often used as a weighting factor in information retrieval searches, text mining, and user modeling. The tf–idf value increases proportionally to the number of times a word appears in the document and is offset by the number of documents in the corpus that contain the word, which helps to adjust for the fact that some words appear more frequently in general. tf–idf is one of the most popular term-weighting schemes today. A survey conducted in 2015 showed that 83% of text-based recommender systems in digital libraries use tf–idf. Variations of the tf–idf weighting scheme are often used by search engines as a central tool in scoring and ranking a document's relevance given a user query. tf–idf can be successfully u ...
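As a rough illustration of the weighting described above, the sketch below computes one common tf–idf variant. The specific choices are assumptions, not something the summary specifies: term frequency is the raw count divided by document length, inverse document frequency is the natural log of (number of documents / number of documents containing the term), and the function name `tf_idf` and the toy corpus are hypothetical. Documents are assumed to be non-empty token lists.

```python
import math
from collections import Counter

def tf_idf(corpus):
    """Return one {term: weight} dict per document in a tokenized corpus.

    Assumed variant: tf = count / document length, idf = ln(N / df).
    """
    n_docs = len(corpus)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for doc in corpus for term in set(doc))
    weights = []
    for doc in corpus:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Toy corpus (illustrative only).
docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "the cat chased the dog".split(),
]
scores = tf_idf(docs)
```

In this toy corpus "the" occurs in every document, so its weight is zero everywhere, while "mat" (found in one document) outweighs "cat" (found in two) within the first document.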

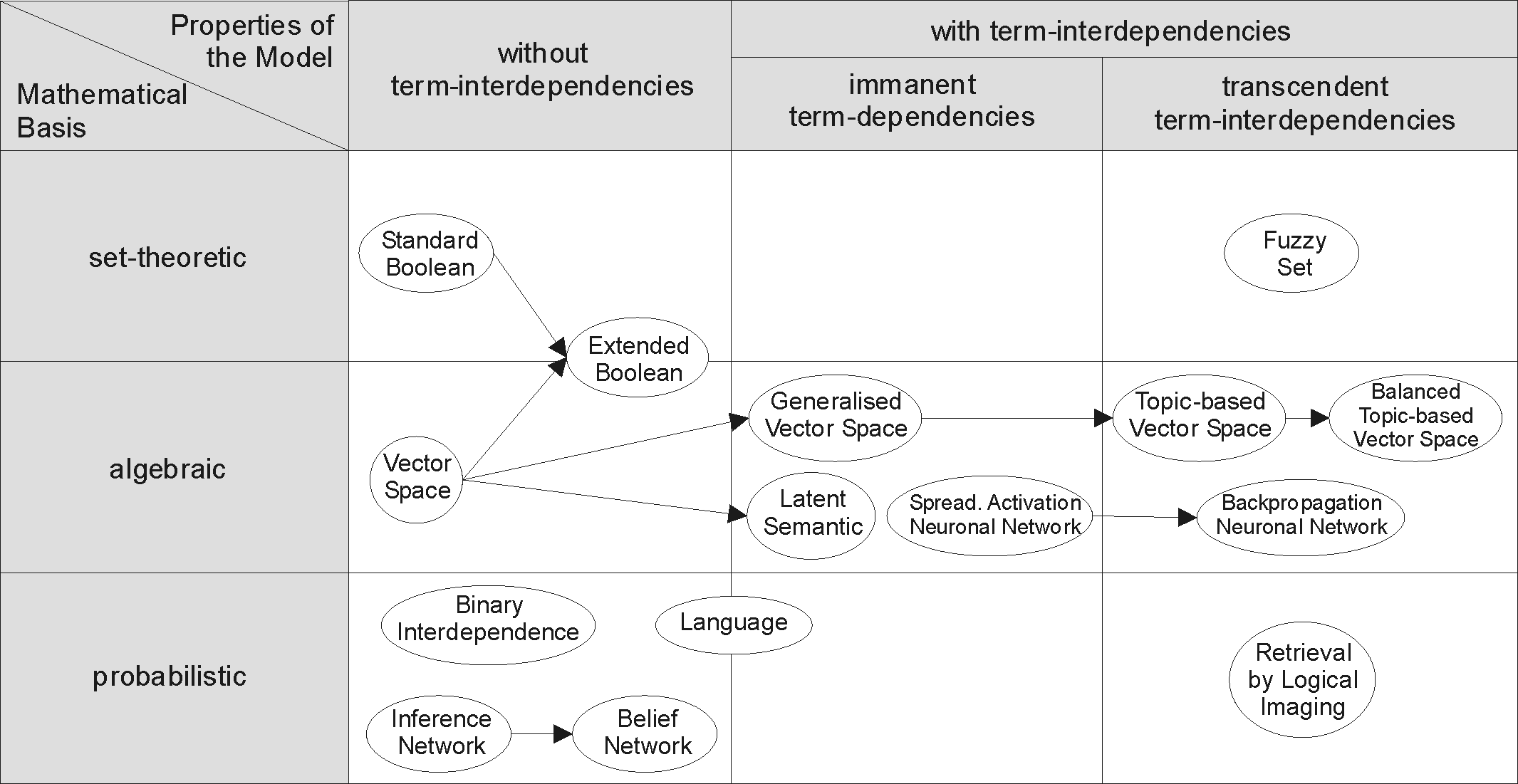

Information Retrieval

Information retrieval (IR) in computing and information science is the process of obtaining information system resources that are relevant to an information need from a collection of those resources. Searches can be based on full-text or other content-based indexing. Information retrieval is the science of searching for information in a document, searching for documents themselves, and also searching for the metadata that describes data, and for databases of texts, images or sounds. Automated information retrieval systems are used to reduce what has been called information overload. An IR system is a software system that provides access to books, journals and other documents, and that stores and manages those documents. Web search engines are the most visible IR applications. Overview: An information retrieval process begins when a user or searcher enters a query into the system. Queries are formal statements of information needs, for example search strings in web search engines. In inf ...

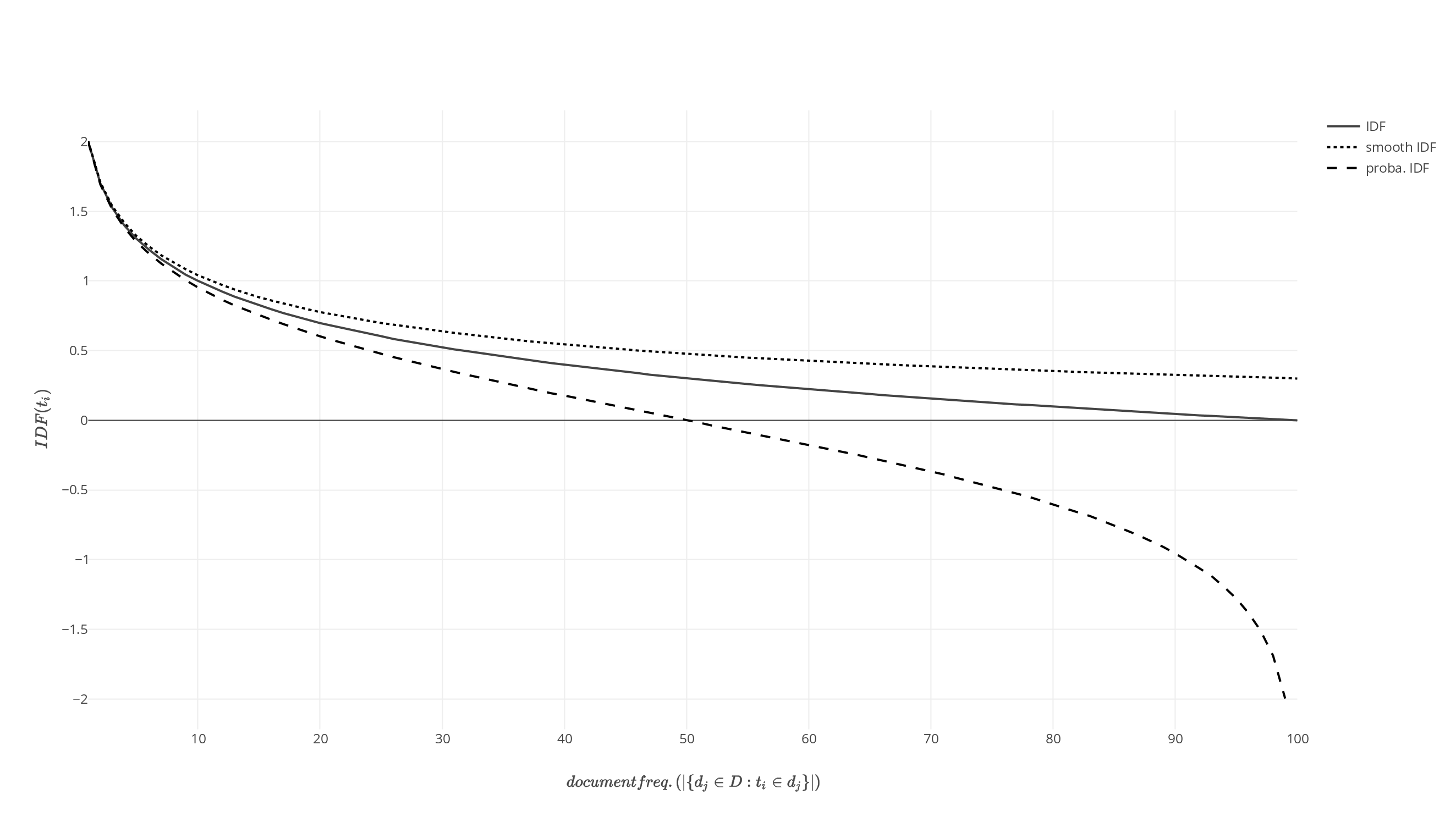

Plot IDF Functions

Plot or Plotting may refer to:

Art, media and entertainment
* Plot (narrative), the story of a piece of fiction

Music
* The Plot (album), a 1976 album by jazz trumpeter Enrico Rava
* The Plot (band), a band formed in 2003

Other
* Plot (film), a 1973 French-Italian film
* Plotting (video game), a 1989 Taito puzzle video game, also called Flipull
* The Plot (video game), a platform game released in 1988 for the Amstrad CPC and Sinclair Spectrum
* Plotting (non-fiction), a 1939 book on writing by Jack Woodford
* The Plot (novel), a 2021 mystery by Jean Hanff Korelitz

Graphics
* Plot (graphics), a graphical technique for representing a data set
* Plot (radar), a graphic display that shows all collated data from a ship's on-board sensors
* Plot plan, a type of drawing which shows existing and proposed conditions for a given area

Land
* Plot (land), a piece of land used for building on
** Burial plot, a piece of land a person is buried in
* Quadrat, a de ...

Kullback–Leibler Divergence

In mathematical statistics, the Kullback–Leibler divergence (also called relative entropy and I-divergence), denoted D_\text{KL}(P \parallel Q), is a type of statistical distance: a measure of how one probability distribution P is different from a second, reference probability distribution Q. A simple interpretation of the KL divergence of P from Q is the expected excess surprise from using Q as a model when the actual distribution is P. While it is a distance, it is not a metric, the most familiar type of distance: it is not symmetric in the two distributions (in contrast to variation of information), and does not satisfy the triangle inequality. Instead, in terms of information geometry, it is a type of divergence, a generalization of squared distance, and for certain classes of distributions (notably an exponential family), it satisfies a generalized Pythagorean theorem (which applies to squared distances). In the simple case, a relative entropy of 0 ...
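A minimal sketch of the discrete case may make the asymmetry concrete. It assumes the two distributions are given as aligned lists of probabilities over the same outcomes and measures the result in nats; the function name `kl_divergence` and the example distributions are hypothetical.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats for discrete distributions given as aligned lists."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue  # convention: a zero-probability outcome under P contributes 0
        if qi == 0:
            return math.inf  # P assigns mass to an outcome that Q rules out
        total += pi * math.log(pi / qi)
    return total

# Illustrative distributions over two outcomes.
p = [0.5, 0.5]
q = [0.9, 0.1]

forward = kl_divergence(p, q)   # excess surprise from modelling P with Q
backward = kl_divergence(q, p)  # generally a different value
```

Here `forward` and `backward` differ, which is the lack of symmetry noted above, and `kl_divergence(p, p)` is zero.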

Word Embedding

In natural language processing (NLP), word embedding is a term used for the representation of words for text analysis, typically in the form of a real-valued vector that encodes the meaning of the word such that the words that are closer in the vector space are expected to be similar in meaning. Word embeddings can be obtained using a set of language modeling and feature learning techniques where words or phrases from the vocabulary are mapped to vectors of real numbers. Methods to generate this mapping include neural networks, dimensionality reduction on the word co-occurrence matrix, probabilistic models, explainable knowledge base method, and explicit representation in terms of the context in which words appear. Word and phrase embeddings, when used as the underlying input representation, have been shown to boost the performance in NLP tasks such as syntactic parsing and sentiment analysis. Development and history of the approach: In distributional semantics, a quantitative m ...

Base 10 Logarithm

In mathematics, the common logarithm is the logarithm with base 10. It is also known as the decadic logarithm and as the decimal logarithm, named after its base, or Briggsian logarithm, after Henry Briggs, an English mathematician who pioneered its use, as well as standard logarithm. Historically, it was known as logarithmus decimalis or logarithmus decadis. It is indicated by \log(x), \log_{10}(x), or sometimes \text{Log}(x) with a capital L (however, this notation is ambiguous, since it can also mean the complex natural logarithmic multi-valued function). On calculators, it is printed as "log", but mathematicians usually mean natural logarithm (logarithm with base e ≈ 2.71828) rather than common logarithm when they write "log". To mitigate this ambiguity, the ISO 80000 specification recommends that \log_{10}(x) should be written \lg(x), and \log_e(x) should be \ln(x). Before the early 1970s, handheld electronic calculators were not available, and mechanical calculators capable of multiplication were bulky, expensive and not wide ...

Mutual Information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual dependence between the two variables. More specifically, it quantifies the "amount of information" (in units such as shannons (bits), nats or hartleys) obtained about one random variable by observing the other random variable. The concept of mutual information is intimately linked to that of entropy of a random variable, a fundamental notion in information theory that quantifies the expected "amount of information" held in a random variable. Not limited to real-valued random variables and linear dependence like the correlation coefficient, MI is more general and determines how different the joint distribution of the pair (X,Y) is from the product of the marginal distributions of X and Y. MI is the expected value of the pointwise mutual information (PMI). The quantity was defined and analyzed by Claude Shannon in his landmark paper "A Mathemati ...
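The comparison between the joint distribution and the product of the marginals can be sketched for the discrete case as follows. The representation is an assumption: the joint distribution is a nested list `joint[x][y]` whose entries sum to 1, and the result is in bits. The function name and the two example tables are hypothetical.

```python
import math

def mutual_information(joint):
    """I(X; Y) in bits from a joint probability table joint[x][y]."""
    px = [sum(row) for row in joint]        # marginal distribution of X
    py = [sum(col) for col in zip(*joint)]  # marginal distribution of Y
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:  # zero-probability cells contribute nothing
                # Pointwise mutual information, weighted by p(x, y).
                mi += pxy * math.log2(pxy / (px[i] * py[j]))
    return mi

# Two fair bits that are independent: joint equals product of marginals.
independent = [[0.25, 0.25], [0.25, 0.25]]
# Two fair bits that are always equal: observing one reveals the other.
identical = [[0.5, 0.0], [0.0, 0.5]]
```

For the independent table the sum is zero, and for the identical table it is one bit, the full entropy of a fair bit.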

Conditional Entropy

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y given that the value of another random variable X is known. Here, information is measured in shannons, nats, or hartleys. The entropy of Y conditioned on X is written as \Eta(Y|X). Definition: The conditional entropy of Y given X is defined as \Eta(Y|X) = -\sum_{x \in \mathcal X,\, y \in \mathcal Y} p(x,y) \log \frac{p(x,y)}{p(x)}, where \mathcal X and \mathcal Y denote the support sets of X and Y. Note: Here, the convention is that the expression 0 \log 0 should be treated as being equal to zero. This is because \lim_{\theta \to 0^+} \theta\, \log \theta = 0. Intuitively, notice that by definition of expected value and of conditional proba ...
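A small sketch of the standard discrete definition, the negated sum over x and y of p(x,y) log(p(x,y)/p(x)), is given below. It assumes the joint distribution is supplied as a nested list `joint[x][y]` and uses base-2 logarithms (shannons); the function name and example tables are hypothetical. Cells with zero probability are skipped, which implements the 0 log 0 = 0 convention.

```python
import math

def conditional_entropy(joint):
    """H(Y|X) in bits (shannons) from a joint probability table joint[x][y]."""
    h = 0.0
    for row in joint:
        px = sum(row)  # marginal probability p(x) for this row
        for pxy in row:
            if pxy > 0:  # treat 0 * log 0 as 0
                h -= pxy * math.log2(pxy / px)
    return h

# Y is a copy of X: knowing X leaves nothing to describe.
determined = [[0.5, 0.0], [0.0, 0.5]]
# Y is a fair bit independent of X: knowing X does not help.
independent = [[0.25, 0.25], [0.25, 0.25]]
```

The first table gives zero bits and the second gives one bit, matching the intuition that conditioning on X removes all uncertainty about Y in one case and none in the other.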

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if X is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of X would take the value 0.5 (1 in 2 or 1/2) for X = heads, and 0.5 for X = tails (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc. Introduction: A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by \Omega, is the set of all possible outcomes of a random phe ...

Event Space

In probability theory, a probability space or a probability triple (\Omega, \mathcal{F}, P) is a mathematical construct that provides a formal model of a random process or "experiment". For example, one can define a probability space which models the throwing of a die. A probability space consists of three elements (Stroock, D. W. (1999). Probability theory: an analytic view. Cambridge University Press): (1) a sample space, \Omega, which is the set of all possible outcomes; (2) an event space, which is a set of events \mathcal{F}, an event being a set of outcomes in the sample space; (3) a probability function, which assigns each event in the event space a probability, which is a number between 0 and 1. In order to provide a sensible model of probability, these elements must satisfy a number of axioms, detailed in this article. In the example of the throw of a standard die, we would take the sample space to be \{1,2,3,4,5,6\}. For the event space, we could simply use the set of all subsets of the sample ...
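The die example can be written out directly as a sketch. It takes the sample space to be the six faces, uses every subset of the sample space as the event space, and assumes a fair die so that each outcome has probability 1/6; the fairness assumption and the names `omega`, `power_set`, `events`, and `prob` are illustrative, not taken from the summary.

```python
from fractions import Fraction
from itertools import chain, combinations

# Sample space: the six faces of a standard die.
omega = frozenset(range(1, 7))

def power_set(s):
    """All subsets of s, each as a frozenset."""
    items = sorted(s)
    subsets = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return [frozenset(c) for c in subsets]

# Event space: every subset of the sample space.
events = power_set(omega)

def prob(event):
    """Probability of an event, assuming a fair die (uniform outcomes)."""
    return Fraction(len(event), len(omega))

even = frozenset({2, 4, 6})  # the event "the roll is even"
```

With these definitions there are 2^6 = 64 events, `prob(omega)` is 1, `prob(frozenset())` is 0, and `prob(even)` is 1/2.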

Self-information

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative way of expressing probability, much like odds or log-odds, but which has particular mathematical advantages in the setting of information theory. The Shannon information can be interpreted as quantifying the level of "surprise" of a particular outcome. As it is such a basic quantity, it also appears in several other settings, such as the length of a message needed to transmit the event given an optimal source coding of the random variable. The Shannon information is closely related to entropy, which is the expected value of the self-information of a random variable, quantifying how surprising the random variable is "on average". This is the average amount of self-information an observer would expect to gain about a random variable wh ...
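The relationship between self-information and entropy can be sketched in a few lines. The sketch uses the standard definition, the negative logarithm of the event's probability, with base 2 (bits) as an assumed default; the function names are hypothetical, and `self_information` expects a probability in (0, 1].

```python
import math

def self_information(p, base=2):
    """Surprisal of an event with probability p, in units set by the log base."""
    return -math.log(p, base)

def entropy(dist, base=2):
    """Expected self-information of a discrete distribution (list of probabilities)."""
    return sum(p * self_information(p, base) for p in dist if p > 0)

fair_coin = [0.5, 0.5]
biased_coin = [0.9, 0.1]
```

A fair coin flip carries one bit per outcome, an event of probability 1/8 carries three bits, and the biased coin has lower entropy than the fair one because its outcome is less surprising on average.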

Probability Theory

Probability theory is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is not possible to perfectly predict random events, much can be said about their behavior. Two major results in probability ...