tf–idf on:

[Wikipedia]

[Google]

[Amazon]

In

The inverse document frequency is a measure of how much information the word provides, i.e., how common or rare it is across all documents. It is the logarithmically scaled inverse fraction of the documents that contain the word (obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient):

:

with

* : is the set of all documents in the corpus

* : total number of documents in the corpus

* : number of documents where the term appears (i.e., ). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the numerator and denominator to .

The inverse document frequency is a measure of how much information the word provides, i.e., how common or rare it is across all documents. It is the logarithmically scaled inverse fraction of the documents that contain the word (obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient):

:

with

* : is the set of all documents in the corpus

* : total number of documents in the corpus

* : number of documents where the term appears (i.e., ). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the numerator and denominator to .

Anatomy of a search engine

as used in

TfidfTransformer

in

Text to Matrix Generator (TMG)

MATLAB toolbox that can be used for various tasks in text mining (TM) specifically i) indexing, ii) retrieval, iii) dimensionality reduction, iv) clustering, v) classification. The indexing step offers the user the ability to apply local and global weighting methods, including tf–idf.

Term-frequency explained

Explanation of term-frequency {{DEFAULTSORT:Tf-Idf Statistical natural language processing Ranking functions Vector space model

information retrieval

Information retrieval (IR) in computing and information science is the task of identifying and retrieving information system resources that are relevant to an Information needs, information need. The information need can be specified in the form ...

, tf–idf (term frequency–inverse document frequency, TF*IDF, TFIDF, TF–IDF, or Tf–idf) is a measure of importance of a word to a document

A document is a writing, written, drawing, drawn, presented, or memorialized representation of thought, often the manifestation of nonfiction, non-fictional, as well as fictional, content. The word originates from the Latin ', which denotes ...

in a collection or corpus

Corpus (plural ''corpora'') is Latin for "body". It may refer to:

Linguistics

* Text corpus, in linguistics, a large and structured set of texts

* Speech corpus, in linguistics, a large set of speech audio files

* Corpus linguistics, a branch of ...

, adjusted for the fact that some words appear more frequently in general. Like the bag-of-words model, it models a document as a multiset

In mathematics, a multiset (or bag, or mset) is a modification of the concept of a set that, unlike a set, allows for multiple instances for each of its elements. The number of instances given for each element is called the ''multiplicity'' of ...

of words, without word order

In linguistics, word order (also known as linear order) is the order of the syntactic constituents of a language. Word order typology studies it from a cross-linguistic perspective, and examines how languages employ different orders. Correlatio ...

. It is a refinement over the simple bag-of-words model

The bag-of-words (BoW) model is a model of text which uses an unordered collection (a "multiset, bag") of words. It is used in natural language processing and information retrieval (IR). It disregards word order (and thus most of syntax or gramm ...

, by allowing the weight of words to depend on the rest of the corpus.

It was often used as a weighting factor in searches of information retrieval, text mining

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from differe ...

, and user modeling. A survey conducted in 2015 showed that 83% of text-based recommender systems in digital libraries used tf–idf. Variations of the tf–idf weighting scheme were often used by search engine

A search engine is a software system that provides hyperlinks to web pages, and other relevant information on World Wide Web, the Web in response to a user's web query, query. The user enters a query in a web browser or a mobile app, and the sea ...

s as a central tool in scoring and ranking a document's relevance

Relevance is the connection between topics that makes one useful for dealing with the other. Relevance is studied in many different fields, including cognitive science, logic, and library and information science. Epistemology studies it in gener ...

given a user query.

One of the simplest ranking functions is computed by summing the tf–idf for each query term; many more sophisticated ranking functions are variants of this simple model.

Motivations

Karen Spärck Jones

Karen Ida Boalth Spärck Jones (26 August 1935 – 4 April 2007) was a self-taught programmer and a pioneering British computer and information scientist responsible for the concept of inverse document frequency (IDF), a technology that unde ...

(1972) conceived a statistical interpretation of term-specificity called Inverse Document Frequency (idf), which became a cornerstone of term weighting:

For example, the df (document frequency) and idf for some words in Shakespeare's 37 plays are as follows:

We see that "Romeo

Romeo Montague () is the male protagonist of William Shakespeare's tragedy ''Romeo and Juliet''. The son of Characters in Romeo and Juliet#Lord Montague, Lord Montague and his wife, Characters in Romeo and Juliet#Lady Montague, Lady Montague, he ...

", "Falstaff

Sir John Falstaff is a fictional character who appears in three plays by William Shakespeare and is eulogised in a fourth. His significance as a fully developed character is primarily formed in the plays ''Henry IV, Part 1'' and '' Part 2'', w ...

", and "salad" appears in very few plays, so seeing these words, one could get a good idea as to which play it might be. In contrast, "good" and "sweet" appears in every play and are completely uninformative as to which play it is.

Definition

# The tf–idf is the product of two statistics, ''term frequency'' and ''inverse document frequency''. There are various ways for determining the exact values of both statistics. # A formula that aims to define the importance of a keyword or phrase within a document or a web page.Term frequency

Term frequency, , is the relative frequency of term within document , :, where is the ''raw count'' of a term in a document, i.e., the number of times that term occurs in document . Note the denominator is simply the total number of terms in document (counting each occurrence of the same term separately). There are various other ways to define term frequency: * the raw count itself: * Boolean "frequencies": if occurs in and 0 otherwise; * logarithmically scaled frequency: ; * augmented frequency, to prevent a bias towards longer documents, e.g. raw frequency divided by the raw frequency of the most frequently occurring term in the document: :Inverse document frequency

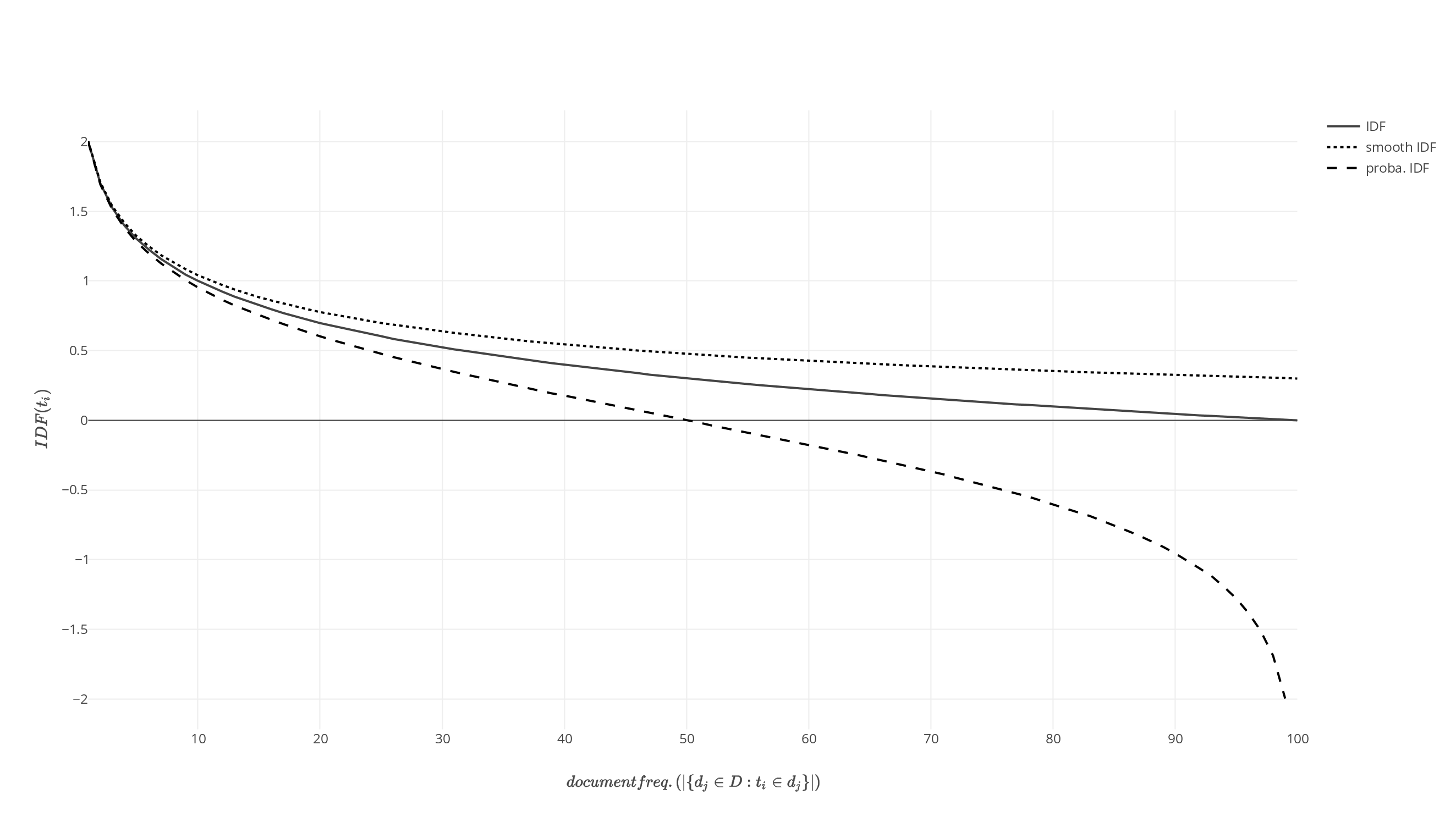

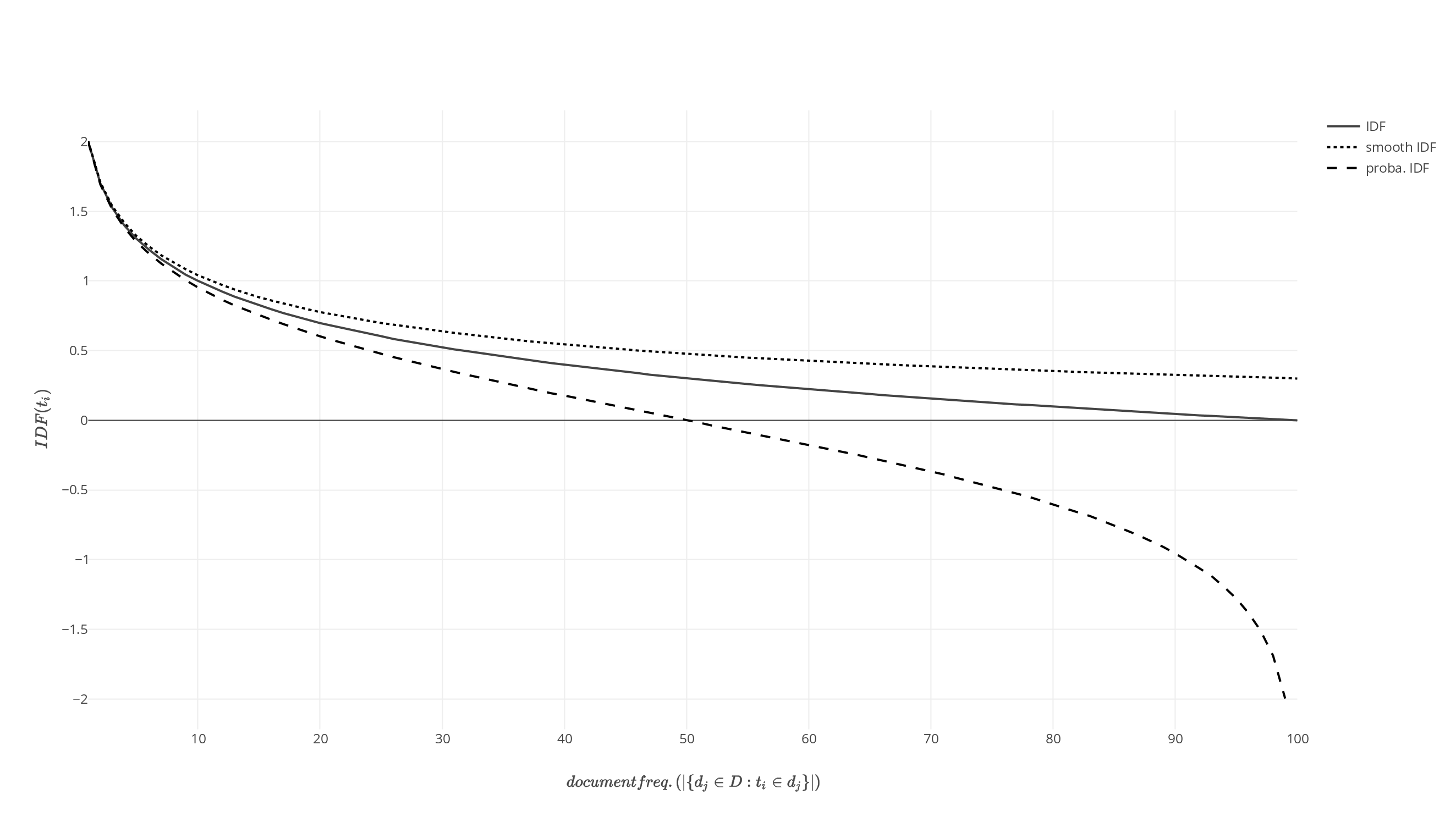

The inverse document frequency is a measure of how much information the word provides, i.e., how common or rare it is across all documents. It is the logarithmically scaled inverse fraction of the documents that contain the word (obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient):

:

with

* : is the set of all documents in the corpus

* : total number of documents in the corpus

* : number of documents where the term appears (i.e., ). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the numerator and denominator to .

The inverse document frequency is a measure of how much information the word provides, i.e., how common or rare it is across all documents. It is the logarithmically scaled inverse fraction of the documents that contain the word (obtained by dividing the total number of documents by the number of documents containing the term, and then taking the logarithm of that quotient):

:

with

* : is the set of all documents in the corpus

* : total number of documents in the corpus

* : number of documents where the term appears (i.e., ). If the term is not in the corpus, this will lead to a division-by-zero. It is therefore common to adjust the numerator and denominator to .

Term frequency–inverse document frequency

Then tf–idf is calculated as : A high weight in tf–idf is reached by a high termfrequency

Frequency is the number of occurrences of a repeating event per unit of time. Frequency is an important parameter used in science and engineering to specify the rate of oscillatory and vibratory phenomena, such as mechanical vibrations, audio ...

(in the given document) and a low document frequency of the term in the whole collection of documents; the weights hence tend to filter out common terms. Since the ratio inside the idf's log function is always greater than or equal to 1, the value of idf (and tf–idf) is greater than or equal to 0. As a term appears in more documents, the ratio inside the logarithm approaches 1, bringing the idf and tf–idf closer to 0.

Justification of idf

Idf was introduced as "term specificity" byKaren Spärck Jones

Karen Ida Boalth Spärck Jones (26 August 1935 – 4 April 2007) was a self-taught programmer and a pioneering British computer and information scientist responsible for the concept of inverse document frequency (IDF), a technology that unde ...

in a 1972 paper. Although it has worked well as a heuristic

A heuristic or heuristic technique (''problem solving'', '' mental shortcut'', ''rule of thumb'') is any approach to problem solving that employs a pragmatic method that is not fully optimized, perfected, or rationalized, but is nevertheless ...

, its theoretical foundations have been troublesome for at least three decades afterward, with many researchers trying to find information theoretic justifications for it.

Spärck Jones's own explanation did not propose much theory, aside from a connection to Zipf's law

Zipf's law (; ) is an empirical law stating that when a list of measured values is sorted in decreasing order, the value of the -th entry is often approximately inversely proportional to .

The best known instance of Zipf's law applies to the ...

. Attempts have been made to put idf on a probabilistic

Probability is a branch of mathematics and statistics concerning events and numerical descriptions of how likely they are to occur. The probability of an event is a number between 0 and 1; the larger the probability, the more likely an e ...

footing, by estimating the probability that a given document contains a term as the relative document frequency,

:

so that we can define idf as

:

Namely, the inverse document frequency is the logarithm of "inverse" relative document frequency.

This probabilistic interpretation in turn takes the same form as that of self-information

In information theory, the information content, self-information, surprisal, or Shannon information is a basic quantity derived from the probability of a particular event occurring from a random variable. It can be thought of as an alternative w ...

. However, applying such information-theoretic notions to problems in information retrieval leads to problems when trying to define the appropriate event space

Event may refer to:

Gatherings of people

* Ceremony, an event of ritual significance, performed on a special occasion

* Convention (meeting), a gathering of individuals engaged in some common interest

* Event management, the organization of e ...

s for the required probability distribution

In probability theory and statistics, a probability distribution is a Function (mathematics), function that gives the probabilities of occurrence of possible events for an Experiment (probability theory), experiment. It is a mathematical descri ...

s: not only documents need to be taken into account, but also queries and terms.

Link with information theory

Both term frequency and inverse document frequency can be formulated in terms ofinformation theory

Information theory is the mathematical study of the quantification (science), quantification, Data storage, storage, and telecommunications, communication of information. The field was established and formalized by Claude Shannon in the 1940s, ...

; it helps to understand why their product has a meaning in terms of joint informational content of a document. A characteristic assumption about the distribution is that:

:

This assumption and its implications, according to Aizawa: "represent the heuristic that tf–idf employs."

The conditional entropy

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable Y given that the value of another random variable X is known. Here, information is measured in shannons, n ...

of a "randomly chosen" document in the corpus , conditional to the fact it contains a specific term (and assuming that all documents have equal probability to be chosen) is:

:

In terms of notation, and are "random variables" corresponding to respectively draw a document or a term. The mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual Statistical dependence, dependence between the two variables. More specifically, it quantifies the "Information conten ...

can be expressed as

:

The last step is to expand , the unconditional probability to draw a term, with respect to the (random) choice of a document, to obtain:

:

This expression shows that summing the Tf–idf of all possible terms and documents recovers the mutual information between documents and term taking into account all the specificities of their joint distribution. Each Tf–idf hence carries the "bit of information" attached to a term x document pair.

Example of tf–idf

Suppose that we have term count tables of a corpus consisting of only two documents, as listed on the right. The calculation of tf–idf for the term "this" is performed as follows: In its raw frequency form, tf is just the frequency of the "this" for each document. In each document, the word "this" appears once; but as the document 2 has more words, its relative frequency is smaller. : : An idf is constant per corpus, and accounts for the ratio of documents that include the word "this". In this case, we have a corpus of two documents and all of them include the word "this". : So tf–idf is zero for the word "this", which implies that the word is not very informative as it appears in all documents. : : The word "example" is more interesting - it occurs three times, but only in the second document: : : : Finally, : : (using the base 10 logarithm).Beyond terms

The idea behind tf–idf also applies to entities other than terms. In 1998, the concept of idf was applied to citations. The authors argued that "if a very uncommon citation is shared by two documents, this should be weighted more highly than a citation made by a large number of documents". In addition, tf–idf was applied to "visual words" with the purpose of conducting object matching in videos, and entire sentences. However, the concept of tf–idf did not prove to be more effective in all cases than a plain tf scheme (without idf). When tf–idf was applied to citations, researchers could find no improvement over a simple citation-count weight that had no idf component.Derivatives

A number of term-weighting schemes have derived from tf–idf. One of them is TF–PDF (term frequency * proportional document frequency). TF–PDF was introduced in 2001 in the context of identifying emerging topics in the media. The PDF component measures the difference of how often a term occurs in different domains. Another derivate is TF–IDuF. In TF–IDuF, idf is not calculated based on the document corpus that is to be searched or recommended. Instead, idf is calculated on users' personal document collections. The authors report that TF–IDuF was equally effective as tf–idf but could also be applied in situations when, e.g., a user modeling system has no access to a global document corpus. The DELTA TF-IDF derivative uses the difference in importance of a term across two specific classes, like positive and negative sentiment. For example, it can assign a high score to a word like "excellent" in positive reviews and a low score to the same word in negative reviews. This helps identify words that strongly indicate the sentiment of a document, potentially leading to improved accuracy in text classification tasks.See also

*Word embedding

In natural language processing, a word embedding is a representation of a word. The embedding is used in text analysis. Typically, the representation is a real-valued vector that encodes the meaning of the word in such a way that the words that ...

* Kullback–Leibler divergence

In mathematical statistics, the Kullback–Leibler (KL) divergence (also called relative entropy and I-divergence), denoted D_\text(P \parallel Q), is a type of statistical distance: a measure of how much a model probability distribution is diff ...

* Latent Dirichlet allocation

In natural language processing, latent Dirichlet allocation (LDA) is a Bayesian network (and, therefore, a generative statistical model) for modeling automatically extracted topics in textual corpora. The LDA is an example of a Bayesian topic ...

* Latent semantic analysis

Latent semantic analysis (LSA) is a technique in natural language processing, in particular distributional semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the d ...

* Mutual information

In probability theory and information theory, the mutual information (MI) of two random variables is a measure of the mutual Statistical dependence, dependence between the two variables. More specifically, it quantifies the "Information conten ...

* Noun phrase

A noun phrase – or NP or nominal (phrase) – is a phrase that usually has a noun or pronoun as its head, and has the same grammatical functions as a noun. Noun phrases are very common cross-linguistically, and they may be the most frequently ...

* Okapi BM25

* PageRank

PageRank (PR) is an algorithm used by Google Search to rank web pages in their search engine results. It is named after both the term "web page" and co-founder Larry Page. PageRank is a way of measuring the importance of website pages. Accordin ...

* Vector space model

Vector space model or term vector model is an algebraic model for representing text documents (or more generally, items) as vector space, vectors such that the distance between vectors represents the relevance between the documents. It is used in i ...

* Word count

The word count is the number of words in a document or passage of text. Word counting may be needed when a text is required to stay within certain numbers of words. This may particularly be the case in academia, legal proceedings, journalism and ad ...

* SMART Information Retrieval System

References

* * * *External links and suggested reading

* Gensim is a Python library for vector space modeling and includes tf–idf weighting.Anatomy of a search engine

as used in

Lucene

Apache Lucene is a free and open-source search engine software library, originally written in Java by Doug Cutting. It is supported by the Apache Software Foundation and is released under the Apache Software License. Lucene is widely used as a ...

TfidfTransformer

in

scikit-learn

scikit-learn (formerly scikits.learn and also known as sklearn) is a free and open-source machine learning library for the Python programming language.

It features various classification, regression and clustering algorithms including support ...

Text to Matrix Generator (TMG)

MATLAB toolbox that can be used for various tasks in text mining (TM) specifically i) indexing, ii) retrieval, iii) dimensionality reduction, iv) clustering, v) classification. The indexing step offers the user the ability to apply local and global weighting methods, including tf–idf.

Term-frequency explained

Explanation of term-frequency {{DEFAULTSORT:Tf-Idf Statistical natural language processing Ranking functions Vector space model