|

Scatter (vector Addressing)

Gather/scatter is a type of memory addressing that at once collects (gathers) from, or stores (scatters) data to, multiple, arbitrary memory indices. Examples of its use include sparse linear algebra operations, sorting algorithms, fast Fourier transforms, and some computational graph theory problems. It is the vector equivalent of register indirect addressing, with gather involving indexed reads, and scatter, indexed writes. Vector processors (and some SIMD units in CPUs) have hardware support for gather and scatter operations, as do many input/output systems, allowing large data sets to be transferred to main memory more rapidly. The concept is somewhat similar to vectored I/O, which is sometimes also referred to as scatter-gather I/O. This system differs in that it is used to map multiple sources of data from contiguous structures into a single stream for reading or writing. A common example is writing out a series of strings, which in most programming languages would be st ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set where its Instruction (computer science), instructions are designed to operate efficiently and effectively on large Array data structure, one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) Arithmetic Units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, Data compression, compression and similar tasks. Vector processing techniques also operate in video game console, video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

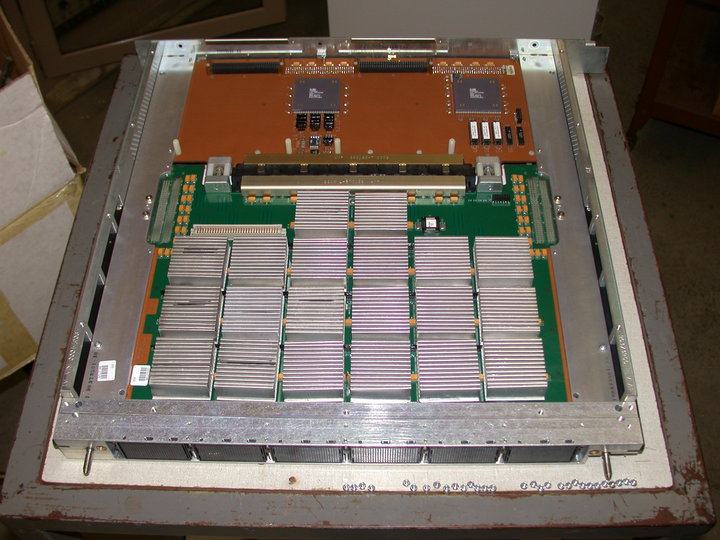

Cray-1

The Cray-1 was a supercomputer designed, manufactured and marketed by Cray Research. Announced in 1975, the first Cray-1 system was installed at Los Alamos National Laboratory in 1976. Eventually, eighty Cray-1s were sold, making it one of the most successful supercomputers in history. It is perhaps best known for its unique shape, a relatively small C-shaped cabinet with a ring of benches around the outside covering the power supplies and the cooling system. The Cray-1 was the first supercomputer to successfully implement the vector processor design. These systems improve the performance of math operations by arranging memory and registers to quickly perform a single operation on a large set of data. Previous systems like the CDC STAR-100 and ASC had implemented these concepts but did so in a way that seriously limited their performance. The Cray-1 addressed these problems and produced a machine that ran several times faster than any similar design. The Cray-1's architect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory Access Pattern

In computing, a memory access pattern or IO access pattern is the pattern with which a system or program reads and writes memory on secondary storage. These patterns differ in the level of locality of reference and drastically affect cache performance, and also have implications for the approach to parallelism and distribution of workload in shared memory systems. Further, cache coherency issues can affect multiprocessor performance, which means that certain memory access patterns place a ceiling on parallelism (which manycore approaches seek to break). Computer memory is usually described as " random access", but traversals by software will still exhibit patterns that can be exploited for efficiency. Various tools exist to help system designers and programmers understand, analyse and improve the memory access pattern, including VTune and Vectorization Advisor, including tools to address GPU memory access patterns. Memory access patterns also have implications for securi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compute Kernel

In computing, a compute kernel is a routine compiled for high throughput accelerators (such as graphics processing units (GPUs), digital signal processors (DSPs) or field-programmable gate arrays (FPGAs)), separate from but used by a main program (typically running on a central processing unit). They are sometimes called compute shaders, sharing execution units with vertex shaders and pixel shaders on GPUs, but are not limited to execution on one class of device, or graphics APIs. Description Compute kernels roughly correspond to inner loops when implementing algorithms in traditional languages (except there is no implied sequential operation), or to code passed to internal iterators. They may be specified by a separate programming language such as " OpenCL C" (managed by the OpenCL API), as "compute shaders" written in a shading language (managed by a graphics API such as OpenGL), or embedded directly in application code written in a high level language, as in the c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vectorization (other)

Vectorization may refer to: Computing * Array programming, a style of computer programming where operations are applied to whole arrays instead of individual elements * Automatic vectorization, a compiler optimization that transforms loops to vector operations * Image tracing, the creation of vector from raster graphics * Word embedding, mapping words to vectors, in natural language processing Other uses * Vectorization (mathematics), a linear transformation which converts a matrix into a column vector * Drug vectorization, to (intra)cellular targeting See also * Vector (other) Vector most often refers to: * Euclidean vector, a quantity with a magnitude and a direction * Disease vector, an agent that carries and transmits an infectious pathogen into another living organism Vector may also refer to: Mathematics ... * Vector graphics (other) {{disambiguation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SIMD

Single instruction, multiple data (SIMD) is a type of parallel computer, parallel processing in Flynn's taxonomy. SIMD describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. SIMD can be internal (part of the hardware design) and it can be directly accessible through an instruction set architecture (ISA), but it should not be confused with an ISA. Such machines exploit Data parallelism, data level parallelism, but not Concurrent computing, concurrency: there are simultaneous (parallel) computations, but each unit performs exactly the same instruction at any given moment (just with different data). A simple example is to add many pairs of numbers together, all of the SIMD units are performing an addition, but each one has different pairs of values to add. SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern Cen ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prefetch

Prefetching is a technique used in computing to improve performance by retrieving data or instructions before they are needed. By predicting what a program will request in the future, the system can load information in advance to reduced wait times . Prefetching is used in various areas of computing, including CPU architectures and operating systems. It can be implemented in both hardware and software, and it relies on detecting access patterns that suggest what data is likely to be needed soon. Overview Prefetching works by predicting which memory addresses or resources will be accessing and load them into faster access storage, like caches. Prefetching may be used: * Hardware-level, such as CPU memory controllers * Software-level, strategies in compilers, operating systems, logic in web browsers or file systems Hardware Processors (CPU's) often include prefetching that attempts to reduce cache misses by loading data into cache before it is requested by the running prog ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

InfiniBand

InfiniBand (IB) is a computer networking communications standard used in high-performance computing that features very high throughput and very low latency. It is used for data interconnect both among and within computers. InfiniBand is also used as either a direct or switched interconnect between servers and storage systems, as well as an interconnect between storage systems. It is designed to be scalable and uses a switched fabric network topology. Between 2014 and June 2016, it was the most commonly used interconnect in the TOP500 list of supercomputers. Mellanox (acquired by Nvidia) manufactures InfiniBand host bus adapters and network switches, which are used by large computer system and database vendors in their product lines. As a computer cluster interconnect, IB competes with Ethernet, Fibre Channel, and Intel Omni-Path. The technology is promoted by the InfiniBand Trade Association. History InfiniBand originated in 1999 from the merger of two competing designs: ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Scalable Vector Extension

AArch64, also known as ARM64, is a 64-bit version of the ARM architecture family, a widely used set of computer processor designs. It was introduced in 2011 with the ARMv8 architecture and later became part of the ARMv9 series. AArch64 allows processors to handle more memory and perform faster calculations than earlier 32-bit versions. It is designed to work alongside the older 32-bit mode, known as AArch32, allowing compatibility with a wide range of software. Devices that use AArch64 include smartphones, tablets, personal computers, and servers. The AArch64 architecture has continued to evolve through updates that improve performance, security, and support for advanced computing tasks. AArch64 Execution state In ARMv8-A, ARMv8-R, and ARMv9-A, an "Execution state" defines key characteristics of the processor’s environment. This includes the number of bits used in the primary processor registers, the supported instruction sets, and other aspects of the processor's execution ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

ARM Architecture

ARM (stylised in lowercase as arm, formerly an acronym for Advanced RISC Machines and originally Acorn RISC Machine) is a family of reduced instruction set computer, RISC instruction set architectures (ISAs) for central processing unit, computer processors. Arm Holdings develops the ISAs and licenses them to other companies, who build the physical devices that use the instruction set. It also designs and licenses semiconductor intellectual property core, cores that implement these ISAs. Due to their low costs, low power consumption, and low heat generation, ARM processors are useful for light, portable, battery-powered devices, including smartphones, laptops, and tablet computers, as well as embedded systems. However, ARM processors are also used for desktop computer, desktops and server (computing), servers, including Fugaku (supercomputer), Fugaku, the world's fastest supercomputer from 2020 to 2022. With over 230 billion ARM chips produced, , ARM is the most widely used ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVX-512

AVX-512 are 512-bit extensions to the 256-bit Advanced Vector Extensions SIMD instructions for x86 instruction set architecture (ISA) proposed by Intel in July 2013, and first implemented in the 2016 Intel Xeon Phi x200 (Knights Landing), and then later in a number of AMD and other Intel CPUs ( see list below). AVX-512 consists of multiple extensions that may be implemented independently. This policy is a departure from the historical requirement of implementing the entire instruction block. Only the core extension AVX-512F (AVX-512 Foundation) is required by all AVX-512 implementations. Besides widening most 256-bit instructions, the extensions introduce various new operations, such as new data conversions, scatter operations, and permutations. The number of AVX registers is increased from 16 to 32, and eight new "mask registers" are added, which allow for variable selection and blending of the results of instructions. In CPUs with the vector length (VL) extension—included in m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

AVX2

Advanced Vector Extensions (AVX, also known as Gesher New Instructions and then Sandy Bridge New Instructions) are SIMD extensions to the x86 instruction set architecture for microprocessors from Intel and Advanced Micro Devices (AMD). They were proposed by Intel in March 2008 and first supported by Intel with the Sandy Bridge microarchitecture shipping in Q1 2011 and later by AMD with the Bulldozer microarchitecture shipping in Q4 2011. AVX provides new features, new instructions, and a new coding scheme. AVX2 (also known as Haswell New Instructions) expands most integer commands to 256 bits and introduces new instructions. They were first supported by Intel with the Haswell microarchitecture, which shipped in 2013. AVX-512 expands AVX to 512-bit support using a new EVEX prefix encoding proposed by Intel in July 2013 and first supported by Intel with the Knights Landing co-processor, which shipped in 2016. In conventional processors, AVX-512 was introduced with Skylak ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |