
Matching Pursuit

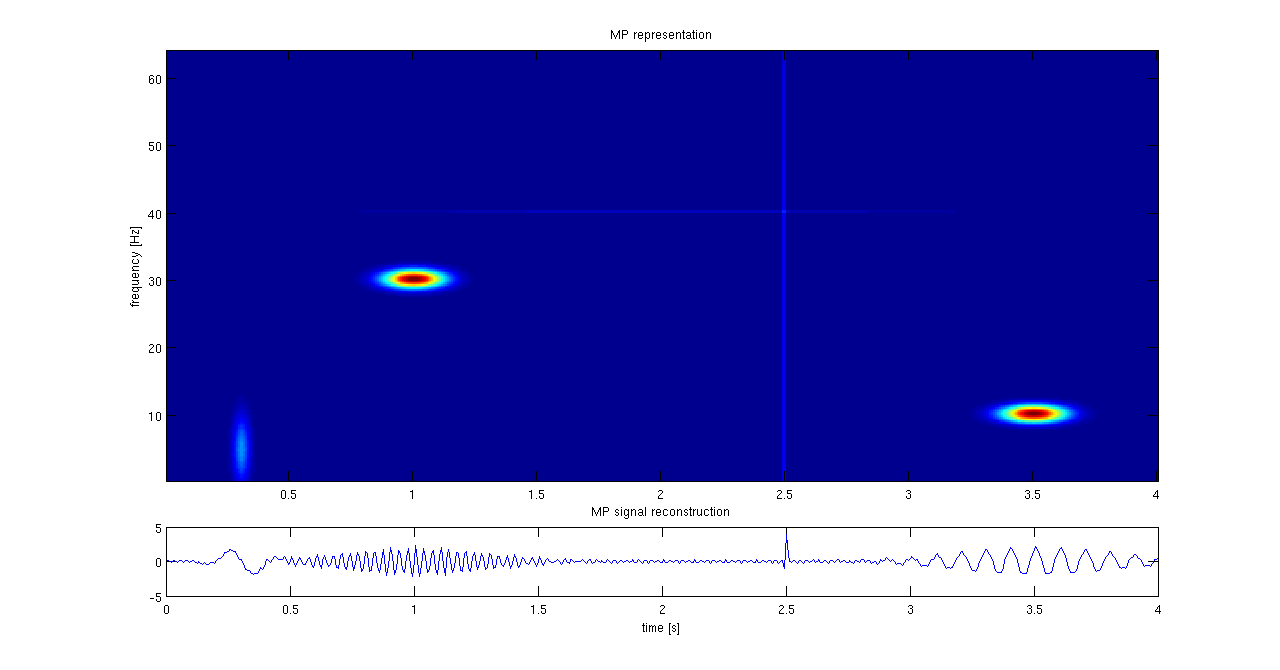

Matching pursuit (MP) is a sparse approximation algorithm which finds the "best matching" projections of multidimensional data onto the span of an over-complete (i.e., redundant) dictionary D. The basic idea is to approximately represent a signal f from Hilbert space H as a weighted sum of finitely many functions g_{\gamma_n} (called atoms) taken from D. An approximation with N atoms has the form: f(t) \approx \hat f_N(t) := \sum_{n=1}^{N} a_n g_{\gamma_n}(t), where g_{\gamma_n} is the \gamma_n-th column of the matrix D and a_n is the scalar weighting factor (amplitude) for the atom g_{\gamma_n}. Normally, not every atom in D will be used in this sum. Instead, matching pursuit chooses the atoms one at a time in order to maximally (greedily) reduce the approximation error. This is achieved by finding the atom that has the highest inner product with the signal (assuming the atoms are normalized), subtracting from the signal an approximation that uses only that one atom, and repeating the process until the signal is satisfactorily d ...
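
The greedy loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes the atoms are already unit-norm, stores the dictionary as a list of vectors, and the names `matching_pursuit`, `n_iter`, and `tol` are hypothetical.

```python
import math

def matching_pursuit(signal, atoms, n_iter=10, tol=1e-10):
    """Greedy matching pursuit over a list of unit-norm atoms (assumed normalized)."""
    residual = list(signal)
    picks = []  # pairs (atom index gamma_n, amplitude a_n), in selection order
    for _ in range(n_iter):
        # Inner product of the current residual with every atom.
        products = [sum(r * g for r, g in zip(residual, atom)) for atom in atoms]
        best = max(range(len(atoms)), key=lambda k: abs(products[k]))
        a = products[best]
        if abs(a) < tol:
            break  # nothing left that any atom can explain
        picks.append((best, a))
        # Subtract the one-atom approximation from the residual.
        residual = [r - a * g for r, g in zip(residual, atoms[best])]
    return picks, residual

s = 1 / math.sqrt(2)
atoms = [[1.0, 0.0], [0.0, 1.0], [s, s]]  # over-complete: 3 atoms in a 2-D space
picks, residual = matching_pursuit([3.0, 3.0], atoms)
```

In this toy dictionary the diagonal atom matches the signal direction, so a single selected atom should already leave a residual of essentially zero.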

Restricted Isometry Property

In linear algebra, the restricted isometry property (RIP) characterizes matrices which are nearly orthonormal, at least when operating on sparse vectors. The concept was introduced by Emmanuel Candès and Terence Tao (E. J. Candes and T. Tao, "Decoding by Linear Programming," IEEE Trans. Inf. Th., 51(12): 4203–4215 (2005)) and is used to prove many theorems in the field of compressed sensing. There are no known large matrices with bounded restricted isometry constants (computing these constants is strongly NP-hard, and is hard to approximate as well), but many random matrices have been shown to have restricted isometry constants that remain bounded. In particular, it has been shown that with exponentially high probability, random Gaussian, Bernoulli, and partial Fourier matrices satisfy the RIP with a number of measurements nearly linear in the sparsity level. The current smallest upper bounds for any large rectangular matrices are those for Gaussian matrices. Web forms to evaluate bounds for the Gaussian ensemble a ...
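
For orientation, the usual way to make "nearly orthonormal on sparse vectors" precise is sketched below. The symbols are assumptions not present in the excerpt: A is the matrix, s the sparsity level, and \delta_s \in (0, 1) the restricted isometry constant of order s.

```latex
(1 - \delta_s)\,\|x\|_2^2 \;\le\; \|Ax\|_2^2 \;\le\; (1 + \delta_s)\,\|x\|_2^2
\quad \text{for every } s\text{-sparse vector } x
```

A small \delta_s means that A approximately preserves the length of every vector with at most s nonzero entries.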

Stepwise Regression

In statistics, stepwise regression is a method of fitting regression models in which the choice of predictive variables is carried out by an automatic procedure. In each step, a variable is considered for addition to or subtraction from the set of explanatory variables based on some prespecified criterion. Usually, this takes the form of a forward, backward, or combined sequence of F-tests or t-tests. The frequent practice of fitting the final selected model followed by reporting estimates and confidence intervals without adjusting them to take the model building process into account has led to calls to stop using stepwise model building altogether (Flom, P. L. and Cassell, D. L. (2007) "Stopping stepwise: Why stepwise and similar selection methods are bad, and what you should use," NESUG 2007) or to at least make sure model uncertainty is correctly reflected (Chatfield, C. (1995) "Model uncertainty, data mining and statistical inference," J. R. Statist. Soc. A 158, Part ...)

Sparse Approximation

Sparse approximation (also known as sparse representation) theory deals with sparse solutions for systems of linear equations. Techniques for finding these solutions and exploiting them in applications have found wide use in image processing, signal processing, machine learning, medical imaging, and more. Sparse decomposition, noiseless observations: Consider a linear system of equations x = D\alpha, where D is an underdetermined m\times p matrix (m < p), x \in \mathbb{R}^m, and \alpha \in \mathbb{R}^p. The matrix D (typically assumed to be full-rank) is referred to as the dictionary, and x is a signal of interest. The core sparse representation problem is defined as the quest for the sparsest possible representation \alpha satisfying x = D\alpha. Due to the underdetermined nature of D, this linear system admits in general infinitely many possible solutions, and among these we seek the one with the few ...
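
The quest for the sparsest representation is commonly written as an optimization problem. The sketch below uses the \ell_0 "norm" \|\alpha\|_0, which counts the nonzero entries of \alpha; that notation is an assumption and does not appear in the excerpt.

```latex
\min_{\alpha \in \mathbb{R}^p} \|\alpha\|_0 \quad \text{subject to} \quad x = D\alpha
```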

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, and scientific measurements. Signal processing techniques are used to optimize transmissions and digital storage efficiency, to correct distorted signals, to improve subjective video quality, and to detect or pinpoint components of interest in a measured signal. History: According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital re ...

Projection Pursuit

Projection pursuit (PP) is a type of statistical technique which involves finding the most "interesting" possible projections in multidimensional data. Often, projections which deviate more from a normal distribution are considered to be more interesting. As each projection is found, the data are reduced by removing the component along that projection, and the process is repeated to find new projections; this is the "pursuit" aspect that motivated the technique known as matching pursuit. The idea of projection pursuit is to locate the projection or projections from high-dimensional space to low-dimensional space that reveal the most details about the structure of the data set. Once an interesting set of projections has been found, existing structures (clusters, surfaces, etc.) can be extracted and analyzed separately. Projection pursuit has been widely used for blind source separation, so it is very important in independent component analysis. Projection pursuit seeks one pro ...

Principal Component Analysis

Principal component analysis (PCA) is a popular technique for analyzing large datasets containing a high number of dimensions/features per observation, increasing the interpretability of data while preserving the maximum amount of information, and enabling the visualization of multidimensional data. Formally, PCA is a statistical technique for reducing the dimensionality of a dataset. This is accomplished by linearly transforming the data into a new coordinate system where (most of) the variation in the data can be described with fewer dimensions than the initial data. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identify clusters of closely related data points. Principal component analysis has applications in many fields such as population genetics, microbiome studies, and atmospheric science. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the ...
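
As a rough illustration of the linear transformation described above, the sketch below finds only the first principal direction by power iteration on the sample covariance matrix. Power iteration is a swapped-in technique chosen to keep the example dependency-free, and the name `first_principal_component` and the starting vector are assumptions.

```python
import math

def first_principal_component(points, n_iter=200):
    """Return the mean and the leading unit eigenvector of the sample covariance."""
    n, d = len(points), len(points[0])
    mean = [sum(p[j] for p in points) / n for j in range(d)]
    centered = [[p[j] - mean[j] for j in range(d)] for p in points]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    v = [1.0] * d  # start vector, assumed not orthogonal to the leading direction
    for _ in range(n_iter):
        # Multiply by the covariance matrix, then renormalize to unit length.
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mean, v

points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
mean, direction = first_principal_component(points)
# One-dimensional coordinates of each point along the first principal direction.
scores = [sum((p[j] - mean[j]) * direction[j] for j in range(2)) for p in points]
```

Because the sample points lie on the line y = x, all of their variation is captured by the single coordinate in `scores`.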

Least-squares Spectral Analysis

Least-squares spectral analysis (LSSA) is a method of estimating a frequency spectrum, based on a least squares fit of sinusoids to data samples, similar to Fourier analysis. Fourier analysis, the most used spectral method in science, generally boosts long-periodic noise in long gapped records; LSSA mitigates such problems. Unlike with Fourier analysis, data need not be equally spaced to use LSSA. LSSA is also known as the Vaníček method or the Gauss-Vaniček method after Petr Vaníček, and as the Lomb method or the Lomb–Scargle periodogram, based on the contributions of Nicholas R. Lomb and, independently, Jeffrey D. Scargle. Historical background: The close connections between Fourier analysis, the periodogram, and least-squares fitting of sinusoids have long been known. Most developments, however, are restricted to complete data sets of equally spaced samples. In 1963, Freek J. M. Barning of Mathematisch Centrum, Amsterdam, handled unequally spaced data by similar ...
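
The core step, a least-squares fit of one sinusoid to unevenly spaced samples, can be sketched as follows. This is a simplified illustration rather than the Vaníček or Lomb–Scargle formulation itself: it solves the 2x2 normal equations for a cosine and a sine term at each trial frequency, and the names `sinusoid_fit` and `freqs` as well as the sample times are made up for the example.

```python
import math

def sinusoid_fit(times, values, freq):
    """Least-squares fit of a*cos(2*pi*f*t) + b*sin(2*pi*f*t) at one frequency.

    Returns (a, b, power), where power is the sum of squares explained by the fit.
    """
    c = [math.cos(2 * math.pi * freq * t) for t in times]
    s = [math.sin(2 * math.pi * freq * t) for t in times]
    cc = sum(x * x for x in c)
    ss = sum(x * x for x in s)
    cs = sum(x * y for x, y in zip(c, s))
    yc = sum(y * x for y, x in zip(values, c))
    ys = sum(y * x for y, x in zip(values, s))
    det = cc * ss - cs * cs  # assumed nonzero for the chosen times and frequency
    a = (yc * ss - ys * cs) / det
    b = (ys * cc - yc * cs) / det
    return a, b, a * yc + b * ys

# Unevenly spaced sample times; the signal is a pure 1.5 Hz sine of amplitude 2.
times = [0.0, 0.13, 0.31, 0.48, 0.77, 0.9, 1.24, 1.6]
values = [2.0 * math.sin(2 * math.pi * 1.5 * t) for t in times]

freqs = [0.5, 1.0, 1.5, 2.0]
best = max(freqs, key=lambda f: sinusoid_fit(times, values, f)[2])
a, b, power = sinusoid_fit(times, values, 1.5)
```

Scanning `freqs` and keeping the explained power at each one gives a crude spectrum that never requires resampling the data onto a regular grid.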

Image Processing

An image is a visual representation of something. It can be two-dimensional, three-dimensional, or somehow otherwise feed into the visual system to convey information. An image can be an artifact, such as a photograph or other two-dimensional picture, that resembles a subject. In the context of signal processing, an image is a distributed amplitude of color(s). In optics, the term “image” may refer specifically to a 2D image. An image does not have to use the entire visual system to be a visual representation. A popular example of this is a greyscale image, which uses the visual system's sensitivity to brightness across all wavelengths, without taking into account different colors. A black and white visual representation of something is still an image, even though it does not make full use of the visual system's capabilities. Images are typically still, but in some cases can be moving or animated. Characteristics: Images may be two- or three-dimensional, such as a ...

CLEAN (algorithm)

The CLEAN algorithm is a computational algorithm to perform a deconvolution on images created in radio astronomy. It was published by Jan Högbom in 1974 and several variations have been proposed since then (see a chapter from the MAPPING software manual). The algorithm assumes that the image consists of a number of point sources. It iteratively finds the highest value in the image and subtracts a small gain of this point source convolved with the point spread function ("dirty beam") of the observation, until the highest value is smaller than some threshold. Astronomer T. J. Cornwell writes, "The impact of CLEAN on radio astronomy has been immense", both directly in enabling g ...
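
The iteration described above can be sketched in one dimension. This is a toy illustration under stated assumptions, not Högbom's published procedure in full: the "image" is a 1-D list, the dirty beam has odd length with its peak of 1 at the centre, and the names `clean_1d`, `gain`, `threshold`, and `max_iter` are hypothetical.

```python
def clean_1d(dirty, beam, gain=0.1, threshold=0.01, max_iter=1000):
    """Toy 1-D CLEAN loop: returns (point-source model, residual image)."""
    residual = list(dirty)
    model = [0.0] * len(dirty)
    half = len(beam) // 2  # the beam is assumed centred at this index
    for _ in range(max_iter):
        # Find the highest value in the current residual image.
        peak = max(range(len(residual)), key=lambda i: residual[i])
        if residual[peak] < threshold:
            break
        # Move a small fraction of the peak into the model ...
        flux = gain * residual[peak]
        model[peak] += flux
        # ... and subtract that point source convolved with the dirty beam.
        for k, b in enumerate(beam):
            i = peak + k - half
            if 0 <= i < len(residual):
                residual[i] -= flux * b
    return model, residual

beam = [0.5, 1.0, 0.5]
dirty = [0.0] * 11
for k, b in enumerate(beam):
    dirty[5 + k - 1] += 2.0 * b  # one point source of flux 2 at index 5
model, residual = clean_1d(dirty, beam)
```

With a single source, the model accumulates nearly all of the flux at index 5 while the residual falls below the threshold.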

Compressed Sensing

Compressed sensing (also known as compressive sensing, compressive sampling, or sparse sampling) is a signal processing technique for efficiently acquiring and reconstructing a signal, by finding solutions to underdetermined linear systems. This is based on the principle that, through optimization, the sparsity of a signal can be exploited to recover it from far fewer samples than required by the Nyquist–Shannon sampling theorem. There are two conditions under which recovery is possible. The first one is sparsity, which requires the signal to be sparse in some domain. The second one is incoherence, which is applied through the isometric property, which is sufficient for sparse signals. Overview: A common goal of the engineering field of signal processing is to reconstruct a signal from a series of sampling measurements. In general, this task is impossible because there is no way to reconstruct a signal during the times that the signal is not measured. Nevertheless, with prior ...

Sparse Dictionary Learning

Sparse dictionary learning (also known as sparse coding) is a representation learning method which aims at finding a sparse representation of the input data in the form of a linear combination of basic elements as well as those basic elements themselves. These elements are called atoms and they compose a dictionary. Atoms in the dictionary are not required to be orthogonal, and they may be an over-complete spanning set. This problem setup also allows the dimensionality of the signals being represented to be higher than the one of the signals being observed. The above two properties lead to having seemingly redundant atoms that allow multiple representations of the same signal but also provide an improvement in sparsity and flexibility of the representation. One of the most important applications of sparse dictionary learning is in the field of compressed sensing or signal recovery. In compressed sensing, a high-dimensional signal can be recovered with only a few linear measurements pr ...