
Lag Windowing

Lag windowing is a technique that consists of windowing the autocorrelation coefficients prior to estimating linear prediction coefficients (LPC). Windowing in the autocorrelation domain has the same effect as a convolution (smoothing) in the power spectral domain, and it helps stabilize the result of the Levinson-Durbin algorithm. The window function is typically a Gaussian function.

External links

* PLP and RASTA (and MFCC, and inversion) in Matlab (archived 2016-11-22): https://web.archive.org/web/20161122094213/http://labrosa.ee.columbia.edu/matlab/rastamat/

See also

* Linear predictive coding
* Bandwidth expansion
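As a minimal sketch of the idea, the snippet below multiplies autocorrelation coefficients by a Gaussian lag window. The parameterization `exp(-0.5 * (2*pi*f0*k/fs)**2)` and the function names `gaussian_lag_window` and `apply_lag_window` are assumptions chosen for illustration; the values `f0_hz = 60` and `fs_hz = 8000` are example settings, not something stated in the text above.

```python
import math


def gaussian_lag_window(num_lags, f0_hz, fs_hz):
    """Return Gaussian weights w[0..num_lags] (assumed parameterization).

    w[k] = exp(-0.5 * (2*pi*f0*k/fs)^2), so w[0] == 1 and the weights
    decay smoothly as the lag k grows.
    """
    return [
        math.exp(-0.5 * (2.0 * math.pi * f0_hz * k / fs_hz) ** 2)
        for k in range(num_lags + 1)
    ]


def apply_lag_window(autocorr, window):
    """Multiply each autocorrelation coefficient r[k] by w[k]."""
    return [r * w for r, w in zip(autocorr, window)]


# Example: window three made-up autocorrelation coefficients.
r = [4.0, 2.0, 1.0]
w = gaussian_lag_window(len(r) - 1, f0_hz=60.0, fs_hz=8000.0)
r_windowed = apply_lag_window(r, w)
```

Because `w[0]` is 1, the lag-0 coefficient (the signal energy) is left unchanged, while higher lags are attenuated. The windowed coefficients would then be passed to the LPC estimation step in place of the raw ones.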

Window Function

In signal processing and statistics, a window function (also known as an apodization function or tapering function) is a mathematical function that is zero-valued outside of some chosen interval, normally symmetric around the middle of the interval, usually near a maximum in the middle, and usually tapering away from the middle. Mathematically, when another function or waveform/data-sequence is "multiplied" by a window function, the product is also zero-valued outside the interval: all that is left is the part where they overlap, the "view through the window". Equivalently, and in actual practice, the segment of data within the window is first isolated, and then only that data is multiplied by the window function values. Thus, tapering, not segmentation, is the main purpose of window functions. The reasons for examining segments of a longer function include detection of transient events and time-averaging of frequency spectra. The duration of the segments is determined in ea ...
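The "isolate the segment, then multiply" procedure described above can be sketched as follows. The Hann window is used purely as an illustrative choice of taper (the text does not name a specific window), and `hann_window` and `windowed_segment` are hypothetical helper names.

```python
import math


def hann_window(length):
    """Symmetric Hann taper: zero at both ends, maximum in the middle."""
    if length == 1:
        return [1.0]
    return [
        0.5 - 0.5 * math.cos(2.0 * math.pi * n / (length - 1))
        for n in range(length)
    ]


def windowed_segment(data, start, length):
    """Isolate data[start:start+length], then taper it with the window."""
    segment = data[start:start + length]
    window = hann_window(len(segment))
    return [x * w for x, w in zip(segment, window)]


# Example: taper a 5-sample segment taken from a constant signal.
tapered = windowed_segment([1.0] * 10, start=2, length=5)
```

Only the isolated samples are touched, which matches the practical view that everything outside the window is simply discarded rather than multiplied by zero.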

Autocorrelation

Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Unit root processes, trend-stationary processes, autoregressive processes, and moving average processes are specific forms of processes with autocorrelation. A ...
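Since definitions differ between fields, the sketch below fixes one convention as an assumption: the raw (unnormalized, mean not removed) sum of lagged products often used in signal processing, r[k] = sum over n of x[n] * x[n+k]. The function name `autocorrelation` is illustrative.

```python
def autocorrelation(x, max_lag):
    """Raw autocorrelation r[k] = sum_n x[n] * x[n + k] for k = 0..max_lag.

    Assumed convention: no mean removal and no normalization.
    """
    n = len(x)
    return [
        sum(x[i] * x[i + k] for i in range(n - k))
        for k in range(max_lag + 1)
    ]


# Example: an alternating signal repeats every 2 samples, so the
# autocorrelation is positive at even lags and negative at odd lags.
signal = [1, -1, 1, -1, 1, -1, 1, -1]
r = autocorrelation(signal, max_lag=4)
```

The sign pattern across lags is what reveals the repeating structure: lags that match the period line the signal up with itself.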

Linear Prediction

Linear prediction is a mathematical operation where future values of a discrete-time signal are estimated as a linear function of previous samples. In digital signal processing, linear prediction is often called linear predictive coding (LPC) and can thus be viewed as a subset of filter theory. In system analysis, a subfield of mathematics, linear prediction can be viewed as a part of mathematical modelling or optimization.

The prediction model

The most common representation is

:\widehat{x}(n) = \sum_{i=1}^p a_i x(n-i)\,

where \widehat{x}(n) is the predicted signal value, x(n-i) the previous observed values, with p \leq n, and a_i the predictor coefficients. The error generated by this estimate is

:e(n) = x(n) - \widehat{x}(n)\,

where x(n) is the true signal value. These equations are valid for all types of (one-dimensional) linear prediction. The differences are found in the way the predictor coefficients a_i are chosen. For multi-dimensional signals the error metric is often defined ...
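The prediction and error equations can be illustrated with a small sketch. It assumes the predictor is the weighted sum of the previous p samples, with the coefficient list `a` holding a_1..a_p; the function names and the example coefficients are hypothetical.

```python
def predict(x, a, n):
    """Predicted value x_hat(n) = sum_{i=1..p} a_i * x(n - i).

    `a` holds a_1..a_p (so a[i - 1] is a_i); requires n >= p.
    """
    p = len(a)
    if n < p:
        raise ValueError("need at least p previous samples")
    return sum(a[i - 1] * x[n - i] for i in range(1, p + 1))


def prediction_error(x, a, n):
    """Error e(n) = x(n) - x_hat(n)."""
    return x[n] - predict(x, a, n)


# Example: a linear ramp satisfies x(n) = 2*x(n-1) - x(n-2) exactly,
# so the coefficients [2, -1] predict it with zero error.
ramp = [0.0, 1.0, 2.0, 3.0, 4.0]
e = prediction_error(ramp, [2.0, -1.0], n=4)
```

In practice the coefficients are not known in advance; they are chosen to minimize some measure of the error, which is where the various linear prediction methods differ.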

Power Spectrum

The power spectrum S_{xx}(f) of a time series x(t) describes the distribution of power into frequency components composing that signal. According to Fourier analysis, any physical signal can be decomposed into a number of discrete frequencies, or a spectrum of frequencies over a continuous range. The statistical average of a certain signal or sort of signal (including noise) as analyzed in terms of its frequency content, is called its spectrum. When the energy of the signal is concentrated around a finite time interval, especially if its total energy is finite, one may compute the energy spectral density. More commonly used is the power spectral density (or simply power spectrum), which applies to signals existing over all time, or over a time period large enough (especially in relation to the duration of a measurement) that it could as well have been over an infinite time interval. The power spectral density (PSD) then refers to the spec ...
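One simple way to see how power is distributed over discrete frequencies is the periodogram of a finite sequence. The sketch below is an assumption-laden illustration, not a definition from the text: it uses a direct discrete Fourier transform and the scaling |X[k]|^2 / N, and `periodogram` is a hypothetical function name.

```python
import cmath


def periodogram(x):
    """Periodogram P[k] = |X[k]|^2 / N, with X the DFT of x.

    Uses a direct O(N^2) DFT for clarity rather than an FFT.
    """
    n = len(x)
    spectrum = []
    for k in range(n):
        xk = sum(
            x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)
        )
        spectrum.append(abs(xk) ** 2 / n)
    return spectrum


# Example: a cosine completing 2 cycles in 8 samples puts its power
# into bins 2 and 6 (the positive and negative frequency).
x = [1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0, 0.0]
p = periodogram(x)
```

With this scaling the bins sum to the total energy of the sequence, which is a discrete form of Parseval's relation.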

Levinson-Durbin

Levinson recursion or Levinson–Durbin recursion is a procedure in linear algebra to recursively calculate the solution to an equation involving a Toeplitz matrix. The algorithm runs in Θ(n²) time, which is a strong improvement over Gauss–Jordan elimination, which runs in Θ(n³). The Levinson–Durbin algorithm was proposed first by Norman Levinson in 1947, improved by James Durbin in 1960, and subsequently improved to 4n² and then 3n² multiplications by W. F. Trench and S. Zohar, respectively. Other methods to process data include Schur decomposition and Cholesky decomposition. In comparison to these, Levinson recursion (particularly split Levinson recursion) tends to be faster computationally, but more sensitive to computational inaccuracies like round-off errors. The Bareiss algorithm for Toeplitz matrices (not to be confused with the general Bareiss algorithm) runs about as fast as Levinson recursion, but it uses O(n²) space, whereas Levinson recursion uses only O(n) space. ...
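For the LPC use case, the recursion can be sketched as below. This is a textbook-style illustration under stated assumptions, not a reference implementation: it solves the symmetric Toeplitz normal equations sum_j a_j r(|i-j|) = r(i) for predictor coefficients a_1..a_p, and the function name `levinson_durbin` and its return convention are chosen here for illustration.

```python
def levinson_durbin(r, order):
    """Solve the LPC normal equations from autocorrelation r[0..order].

    Returns (a, err) where a = [a_1, ..., a_p] are predictor coefficients
    for x_hat(n) = sum_i a_i * x(n - i), and err is the final prediction
    error energy.
    """
    a = [0.0] * (order + 1)  # a[0] is unused padding
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:
            raise ValueError("non-positive prediction error; unstable input")
        # Reflection coefficient for stage i.
        acc = r[i] - sum(a[j] * r[i - j] for j in range(1, i))
        k = acc / err
        # Update coefficients from the previous stage.
        new_a = a[:]
        new_a[i] = k
        for j in range(1, i):
            new_a[j] = a[j] - k * a[i - j]
        a = new_a
        err *= 1.0 - k * k
    return a[1:], err


# Example: r[k] = 0.5**k is the autocorrelation shape of a first-order
# autoregressive process, so only the first coefficient is non-zero.
coeffs, residual = levinson_durbin([1.0, 0.5, 0.25], order=2)
```

Each stage reuses the previous stage's coefficients, which is why the work grows quadratically with the order and only a linear amount of storage is needed.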

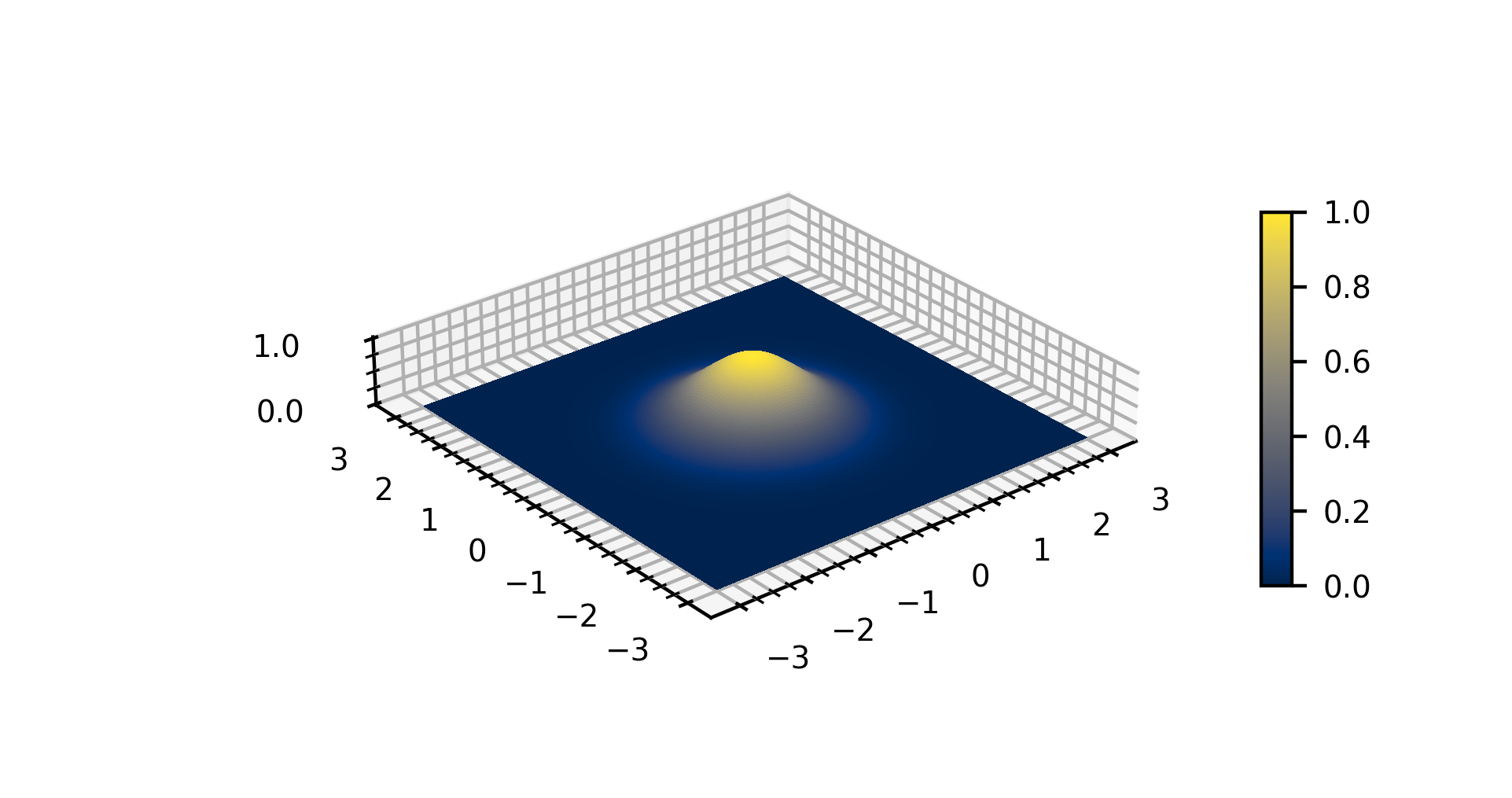

Gaussian Function

In mathematics, a Gaussian function, often simply referred to as a Gaussian, is a function of the base form f(x) = \exp(-x^2) and with parametric extension f(x) = a \exp\left( -\frac{(x-b)^2}{2c^2} \right) for arbitrary real constants a, b and non-zero c. It is named after the mathematician Carl Friedrich Gauss. The graph of a Gaussian is a characteristic symmetric "bell curve" shape. The parameter a is the height of the curve's peak, b is the position of the center of the peak, and c (the standard deviation, sometimes called the Gaussian RMS width) controls the width of the "bell". Gaussian functions are often used to represent the probability density function of a normally distributed random variable with expected value \mu = b and variance \sigma^2 = c^2. In this case, the Gaussian is of the form g(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left( -\frac{1}{2} \frac{(x-\mu)^2}{\sigma^2} \right). Gaussian functions are widely used in statistics to describe the normal distributions, in signal processing to define Gaussian filters, in image processing where two-dimensio ...
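A short sketch of the parametric form, assuming the standard expression a * exp(-(x - b)^2 / (2 c^2)) with peak height a, center b, and width c, and the normal density as the special case a = 1 / (sigma * sqrt(2 pi)). The function names are illustrative.

```python
import math


def gaussian(x, a, b, c):
    """Gaussian with peak height a, center b, and width c (c != 0)."""
    return a * math.exp(-((x - b) ** 2) / (2.0 * c ** 2))


def normal_pdf(x, mu, sigma):
    """Normal density: a Gaussian scaled so its total area is 1."""
    return gaussian(x, 1.0 / (sigma * math.sqrt(2.0 * math.pi)), mu, sigma)


# Example: the curve peaks at x = b and is symmetric about it.
peak = gaussian(3.0, a=2.0, b=3.0, c=0.5)
left = gaussian(2.0, a=2.0, b=3.0, c=0.5)
right = gaussian(4.0, a=2.0, b=3.0, c=0.5)
```

Here `peak` equals the height parameter, and `left` and `right` are equal because the curve is symmetric around the center.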

Linear Predictive Coding

Linear predictive coding (LPC) is a method used mostly in audio signal processing and speech processing for representing the spectral envelope of a digital signal of speech in compressed form, using the information of a linear predictive model. LPC is the most widely used method in speech coding and speech synthesis. It is a powerful speech analysis technique, and a useful method for encoding good quality speech at a low bit rate.

Overview

LPC starts with the assumption that a speech signal is produced by a buzzer at the end of a tube (for voiced sounds), with occasional added hissing and popping sounds (for voiceless sounds such as sibilants and plosives). Although apparently crude, this source–filter model is actually a close approximation of the reality of speech production. The glottis (the space between the vocal folds) produces the buzz, which is characterized by its intensity (loudness) and frequency (pitch). The vocal tract (the throat and mouth) forms the tube, ...

Bandwidth Expansion

Bandwidth expansion is a technique for widening the bandwidth or the resonances in an LPC filter. This is done by moving all the poles towards the origin by a constant factor \gamma. The bandwidth-expanded filter A'(z) can be easily derived from the original filter A(z) by:

:A'(z) = A(z/\gamma)

Let A(z) be expressed as:

:A(z) = \sum_{k=0}^{N} a_k z^{-k}

The bandwidth-expanded filter can be expressed as:

:A'(z) = \sum_{k=0}^{N} a_k \gamma^k z^{-k}

In other words, each coefficient a_k in the original filter is simply multiplied by \gamma^k in the bandwidth-expanded filter. The simplicity of this transformation makes it attractive, especially in CELP coding of speech, where it is often used for the perceptual noise weighting and/or to stabilize the LPC analysis. However, when it comes to stabilizing the LPC analysis, lag windowing ...
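The coefficient scaling is simple enough to sketch directly. The snippet assumes the filter is given as a list of coefficients a_0, a_1, ... of z^0, z^-1, ..., and that each a_k is multiplied by gamma^k; the function name `bandwidth_expand` and the example filter are hypothetical.

```python
def bandwidth_expand(a, gamma):
    """Scale coefficient a_k by gamma**k, giving A'(z) = A(z / gamma)."""
    return [a_k * gamma ** k for k, a_k in enumerate(a)]


# Example: A(z) = (1 - 0.9 z^-1)^2 has a double pole at z = 0.9.
# With gamma = 0.9 the pole moves to 0.9 * 0.9 = 0.81, so the result
# should match (1 - 0.81 z^-1)^2 = 1 - 1.62 z^-1 + 0.6561 z^-2.
original = [1.0, -1.8, 0.81]
expanded = bandwidth_expand(original, gamma=0.9)
```

The leading coefficient is untouched because gamma^0 is 1, and a gamma below 1 pulls every pole toward the origin by the same factor, which widens the resonances.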
