Ward's Method

In statistics, Ward's method is a criterion applied in hierarchical cluster analysis. Ward's minimum variance method is a special case of the objective function approach originally presented by Joe H. Ward, Jr. Ward suggested a general agglomerative hierarchical clustering procedure, where the criterion for choosing the pair of clusters to merge at each step is based on the optimal value of an objective function. This objective function could be "any function that reflects the investigator's purpose." Many of the standard clustering procedures are contained in this very general class. To illustrate the procedure, Ward used the example where the objective function is the error sum of squares, and this example is known as Ward's method or, more precisely, Ward's minimum variance method. The nearest-neighbor chain algorithm can be used to find the same clustering defined by Ward's method, in time proportional to the size of the input distance matrix and space linear in the nu ...
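As a rough illustration of the error-sum-of-squares criterion described above, the sketch below computes how much the total error sum of squares grows when two clusters are merged. The function names (`centroid`, `error_sum_of_squares`, `ward_merge_cost`) are illustrative and do not come from Ward's paper; points are assumed to be equal-length tuples of numbers.

```python
def centroid(points):
    """Coordinate-wise mean of a non-empty list of equal-length points."""
    n = len(points)
    return [sum(p[k] for p in points) / n for k in range(len(points[0]))]


def error_sum_of_squares(points):
    """Sum of squared Euclidean distances from each point to the cluster centroid."""
    c = centroid(points)
    return sum(sum((x - m) ** 2 for x, m in zip(p, c)) for p in points)


def ward_merge_cost(a, b):
    """Increase in the error sum of squares caused by merging clusters a and b."""
    return error_sum_of_squares(a + b) - error_sum_of_squares(a) - error_sum_of_squares(b)


cluster_a = [(0.0, 0.0), (0.0, 2.0)]
cluster_b = [(4.0, 1.0)]
cost = ward_merge_cost(cluster_a, cluster_b)
```

Under this criterion, each agglomerative step would merge the pair of clusters with the smallest such cost. The same quantity also equals |A||B| / (|A| + |B|) times the squared distance between the two centroids, which avoids recomputing the sums.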

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Hierarchical Clustering

In data mining and statistics, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis that seeks to build a hierarchy of clusters. Strategies for hierarchical clustering generally fall into two categories:

* Agglomerative: Agglomerative clustering, often referred to as a "bottom-up" approach, begins with each data point as an individual cluster. At each step, the algorithm merges the two most similar clusters based on a chosen distance metric (e.g., Euclidean distance) and linkage criterion (e.g., single-linkage, complete-linkage). This process continues until all data points are combined into a single cluster or a stopping criterion is met. Agglomerative methods are more commonly used due to their simplicity and computational efficiency for small to medium-sized datasets.
* Divisive: Divisive clustering, known as a "top-down" approach, starts with all data points in a single cluster and recursively splits the clu ...
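The bottom-up procedure described above can be sketched as a simple loop. This is a naive illustration, not an efficient implementation: it re-evaluates every pair of clusters at each step. The names `agglomerate` and `single_linkage`, the `target` stopping criterion, and the choice of Euclidean distance are assumptions made for the example.

```python
import math
from itertools import combinations


def single_linkage(a, b):
    """Distance between clusters as the smallest pairwise Euclidean distance."""
    return min(math.dist(p, q) for p in a for q in b)


def agglomerate(points, linkage=single_linkage, target=1):
    """Merge the two closest clusters repeatedly until `target` clusters remain."""
    clusters = [[p] for p in points]  # every point starts as its own cluster
    while len(clusters) > target:
        # Pick the pair of cluster indices with the smallest linkage distance.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)]
        clusters.append(merged)
    return clusters


points = [(0, 0), (0, 1), (5, 5), (5, 6)]
result = agglomerate(points, target=2)
```

Passing a different `linkage` function changes the merge criterion without touching the loop itself.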

Objective Function

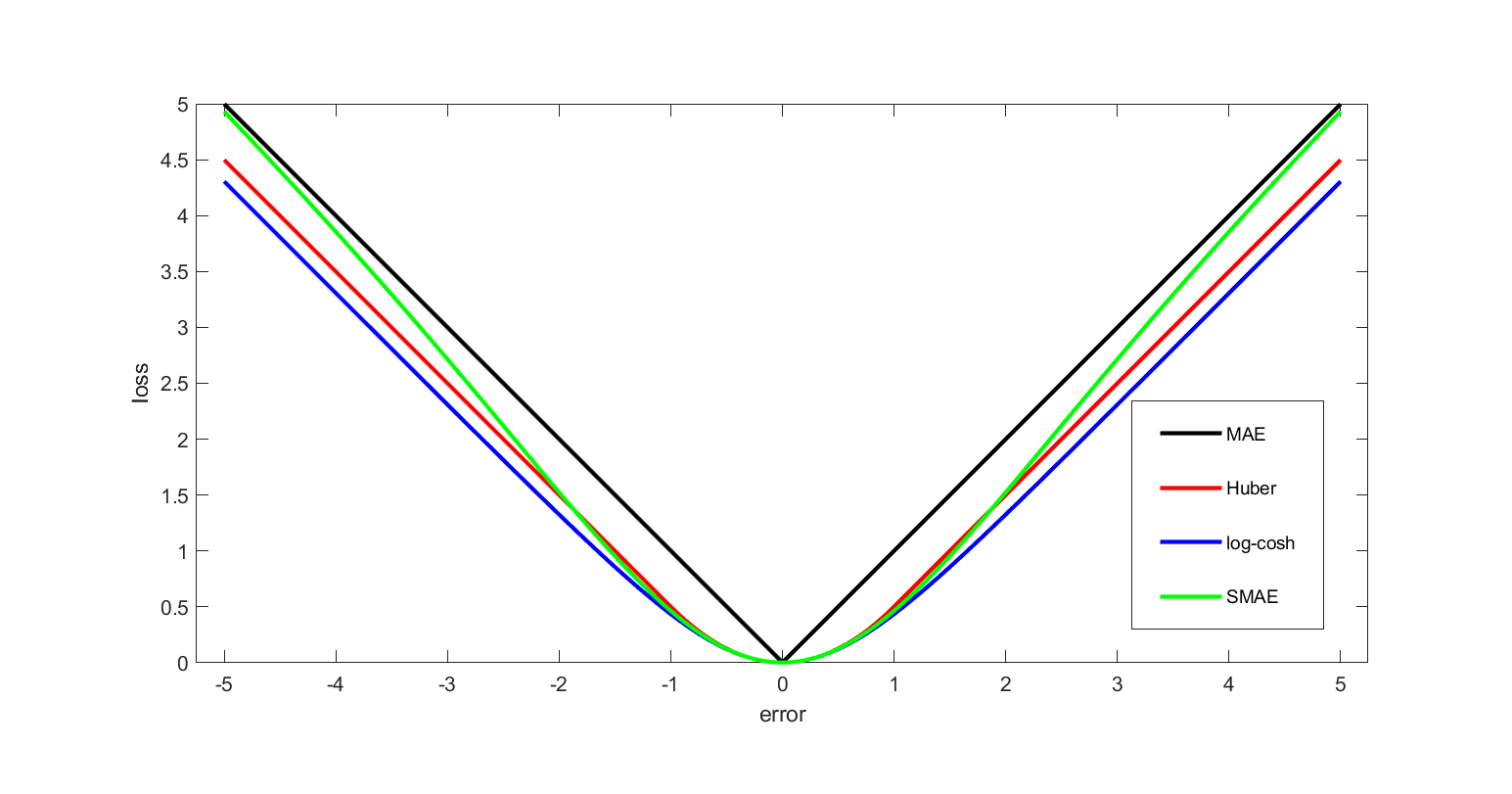

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, ...
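To make the idea of a loss as a function of the difference between estimated and true values concrete, here is a minimal sketch using squared error. Squared error is only one possible choice and is an assumption of this example; the function names are illustrative.

```python
def squared_error_loss(estimate, true_value):
    """Cost of an estimate as the squared difference from the true value."""
    return (estimate - true_value) ** 2


def mean_loss(estimate, observations):
    """Average squared-error loss of a single estimate over several observations."""
    return sum(squared_error_loss(estimate, y) for y in observations) / len(observations)


observations = [1.0, 2.0, 6.0]
sample_mean = sum(observations) / len(observations)
loss_at_mean = mean_loss(sample_mean, observations)
loss_elsewhere = mean_loss(2.0, observations)
```

For squared error, the sample mean gives the smallest average loss among all constant estimates, so `loss_at_mean` is smaller than `loss_elsewhere` here.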

Journal of the American Statistical Association

The Journal of the American Statistical Association is a quarterly peer-reviewed scientific journal published by Taylor & Francis on behalf of the American Statistical Association. It covers work primarily focused on the application of statistics, statistical theory and methods in economic, social, physical, engineering, and health sciences. The journal also includes reviews of books which are relevant to the field. The journal was established in 1888 as the Publications of the American Statistical Association. It was renamed Quarterly Publications of the American Statistical Association in 1912, obtaining its current title in 1922. Reception: According to the Journal Citation Reports, the journal has a 2023 impac ...

Agglomerative Hierarchical Clustering

In data mining and statistics, hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis that seeks to build a hierarchy of clusters. Strategies for hierarchical clustering generally fall into two categories:

* Agglomerative: Agglomerative clustering, often referred to as a "bottom-up" approach, begins with each data point as an individual cluster. At each step, the algorithm merges the two most similar clusters based on a chosen distance metric (e.g., Euclidean distance) and linkage criterion (e.g., single-linkage, complete-linkage). This process continues until all data points are combined into a single cluster or a stopping criterion is met. Agglomerative methods are more commonly used due to their simplicity and computational efficiency for small to medium-sized datasets.
* Divisive: Divisive clustering, known as a "top-down" approach, starts with all data points in a single cluster and recursively splits the clu ...

Lack-of-fit Sum of Squares

In statistics, a sum of squares due to lack of fit, or more tersely a lack-of-fit sum of squares, is one of the components of a partition of the sum of squares of residuals in an analysis of variance, used in the numerator in an F-test of the null hypothesis that says that a proposed model fits well. The other component is the pure-error sum of squares. The pure-error sum of squares is the sum of squared deviations of each value of the dependent variable from the average value over all observations sharing its independent variable value(s). These are errors that could never be avoided by any predictive equation that assigned a predicted value for the dependent variable as a function of the value(s) of the independent variable(s). The remainder of the residual sum of squares is attributed to lack of fit of the model since it would be mathematically possible to eliminate these errors entirely. Principle: In order for the lack-of-fit sum of squares to differ from the sum of squares ...
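The partition described above can be sketched directly: the pure-error part groups observations by their shared independent-variable value, and the lack-of-fit part is what remains of the residual sum of squares. The function names and the `predict` callable (standing in for any fitted model) are assumptions for illustration.

```python
from collections import defaultdict


def pure_error_ss(xs, ys):
    """Sum of squared deviations of each y from the mean of the ys sharing its x."""
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        groups[x].append(y)
    total = 0.0
    for values in groups.values():
        mean = sum(values) / len(values)
        total += sum((y - mean) ** 2 for y in values)
    return total


def lack_of_fit_ss(xs, ys, predict):
    """Residual sum of squares minus the pure-error sum of squares."""
    residual_ss = sum((y - predict(x)) ** 2 for x, y in zip(xs, ys))
    return residual_ss - pure_error_ss(xs, ys)


xs = [1, 1, 2, 2]
ys = [1.0, 3.0, 4.0, 6.0]
pure = pure_error_ss(xs, ys)
lack = lack_of_fit_ss(xs, ys, predict=lambda x: 2.0 * x)
```

In this toy data the model `2.0 * x` matches the group mean at `x = 1` but misses it by 1 at `x = 2`, which is the only source of lack of fit.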

Nearest-neighbor Chain Algorithm

In the theory of cluster analysis, the nearest-neighbor chain algorithm is an algorithm that can speed up several methods for agglomerative hierarchical clustering. These are methods that take a collection of points as input, and create a hierarchy of clusters of points by repeatedly merging pairs of smaller clusters to form larger clusters. The clustering methods that the nearest-neighbor chain algorithm can be used for include Ward's method, complete-linkage clustering, and single-linkage clustering; these all work by repeatedly merging the closest two clusters but use different definitions of the distance between clusters. The cluster distances for which the nearest-neighbor chain algorithm works are called reducible and are characterized by a simple inequality among certain cluster distances. The main idea of the algorithm is to find pairs of clusters to merge by following paths in the nearest neighbor graph of the clusters. Every such path will eventually terminate at a p ...
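The path-following idea can be sketched as below. This is a simplified illustration under stated assumptions, not a tuned implementation: it recomputes cluster distances from the raw points each time rather than updating them, it uses a Ward-style distance as the reducible cluster distance, and all names (`nn_chain`, `ward_distance`) are hypothetical.

```python
import math


def ward_distance(a, b):
    """Ward-style distance: size-weighted squared distance between centroids."""
    ca = [sum(col) / len(a) for col in zip(*a)]
    cb = [sum(col) / len(b) for col in zip(*b)]
    return len(a) * len(b) / (len(a) + len(b)) * math.dist(ca, cb) ** 2


def nn_chain(points, distance=ward_distance):
    """Return merges as (cluster_id, cluster_id, distance) using a nearest-neighbor chain."""
    active = {i: [p] for i, p in enumerate(points)}  # cluster id -> member points
    next_id = len(points)
    chain, merges = [], []
    while len(active) > 1:
        if not chain:
            chain.append(next(iter(active)))  # start a new path anywhere
        top = chain[-1]
        prev = chain[-2] if len(chain) > 1 else None
        best, best_d = None, math.inf
        for other in active:
            if other == top:
                continue
            d = distance(active[top], active[other])
            # On ties, prefer the previous chain element so the path cannot cycle.
            if d < best_d or (d == best_d and other == prev):
                best, best_d = other, d
        if best == prev:
            # top and prev are mutual nearest neighbors: merge them.
            chain.pop()
            chain.pop()
            merges.append((top, prev, best_d))
            active[next_id] = active.pop(top) + active.pop(prev)
            next_id += 1
        else:
            chain.append(best)  # extend the path to the nearest neighbor
    return merges


merges = nn_chain([(0.0,), (1.0,), (5.0,), (7.0,)])
```

Merges found this way are not necessarily produced in order of increasing distance, so a final sort by distance is typically needed before building a dendrogram.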

Distance Matrix

In mathematics, computer science and especially graph theory, a distance matrix is a square matrix (two-dimensional array) containing the distances, taken pairwise, between the elements of a set. Depending upon the application involved, the distance being used to define this matrix may or may not be a metric. If there are N elements, this matrix will have size N×N. In graph-theoretic applications, the elements are more often referred to as points, nodes or vertices. Non-metric distance matrix: In general, a distance matrix is a weighted adjacency matrix of some graph. In a network, a directed graph with weights assigned to the arcs, the distance between two nodes of the network can be defined as the minimum of the sums of the weights on the shortest paths joining the two nodes (where the number of steps in the path is bounded). This distance function, while well defined, is not a metric. There need be no restrictions on the weights oth ...
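A minimal sketch of building such a matrix for a small set of points follows. Euclidean distance is assumed here purely for illustration; any pairwise distance function could be substituted, and `distance_matrix` is a hypothetical helper name.

```python
import math


def distance_matrix(points):
    """Square matrix whose entry [i][j] is the Euclidean distance between points i and j."""
    return [[math.dist(p, q) for q in points] for p in points]


points = [(0, 0), (3, 4), (6, 8)]
matrix = distance_matrix(points)
```

With a metric such as Euclidean distance, the result is symmetric with zeros on the diagonal, so only the upper triangle carries independent information.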

Recursive Algorithm

In computer science, recursion is a method of solving a computational problem where the solution depends on solutions to smaller instances of the same problem. Recursion solves such recursive problems by using functions that call themselves from within their own code. The approach can be applied to many types of problems, and recursion is one of the central ideas of computer science. Most computer programming languages support recursion by allowing a function to call itself from within its own code. Some functional programming languages (for instance, Clojure) do not define any looping constructs but rely solely on recursion to repeatedly call code. It is proved in computability theory that these recursive-only languages are Turing complete; this means that they are as powerful (they can be used to solve the same problems) as imperative languages based on control structures such as `while` and `for`. Repeatedly calling a function from within itself may cause the call stack to have a ...
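As a small illustration of a function that calls itself on a smaller instance of the same problem, consider the factorial. The example is a standard textbook one and is not drawn from the text above.

```python
def factorial(n):
    """Compute n! recursively for a non-negative integer n."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        return 1  # base case: stops the chain of self-calls
    return n * factorial(n - 1)  # recursive case: smaller instance of the same problem


value = factorial(5)
```

Each pending call occupies a frame on the call stack until the base case is reached, which is why very deep recursion can exhaust the stack.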

Euclidean Distance

In mathematics, the Euclidean distance between two points in Euclidean space is the length of the line segment between them. It can be calculated from the Cartesian coordinates of the points using the Pythagorean theorem, and therefore is occasionally called the Pythagorean distance. These names come from the ancient Greek mathematicians Euclid and Pythagoras. In the Greek deductive geometry exemplified by Euclid's Elements, distances were not represented as numbers but line segments of the same length, which were considered "equal". The notion of distance is inherent in the compass tool used to draw a circle, whose points all have the same distance from a common center point. The connection from the Pythagorean theorem to distance calculation was not made until the 18th century. The distance between two objects that are not points is usually defined to be the smallest distance among pairs of points from the two objects. Formulas are known for computing distances b ...
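The calculation from Cartesian coordinates can be written out as a short sketch. The function name `euclidean_distance` is illustrative; Python's standard library offers the same computation as `math.dist`.

```python
import math


def euclidean_distance(p, q):
    """Length of the segment between p and q, via the Pythagorean theorem."""
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


d = euclidean_distance((0, 0), (3, 4))
```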

Single-linkage Clustering

In statistics, single-linkage clustering is one of several methods of hierarchical clustering. It is based on grouping clusters in bottom-up fashion (agglomerative clustering), at each step combining two clusters that contain the closest pair of elements not yet belonging to the same cluster as each other. This method tends to produce long thin clusters in which nearby elements of the same cluster have small distances, but elements at opposite ends of a cluster may be much farther from each other than two elements of other clusters. For some classes of data, this may lead to difficulties in defining classes that could usefully subdivide the data. However, it is popular in astronomy for analyzing galaxy clusters, which may often involve long strings of matter; in this application, it is also known as the friends-of-friends algorithm. Overview of agglomerative clustering methods: In the beginning of the agglomerative clustering process, each element is in a cluster of its own. The ...

Complete-linkage Clustering

Complete-linkage clustering is one of several methods of agglomerative hierarchical clustering. At the beginning of the process, each element is in a cluster of its own. The clusters are then sequentially combined into larger clusters until all elements end up being in the same cluster. The method is also known as farthest neighbour clustering. The result of the clustering can be visualized as a dendrogram, which shows the sequence of cluster fusion and the distance at which each fusion took place. Clustering procedure: At each step, the two clusters separated by the shortest distance are combined. The definition of 'shortest distance' is what differentiates between the different agglomerative clustering methods. In complete-linkage clustering, the link between two clusters contains all element pairs, and the distance between clusters equals the distance between those two elements (one in each cluster) that are farthest away from each other. The shortest of these links that rem ...
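The complete-linkage rule described above can be sketched as follows: the distance between two clusters is the largest pairwise element distance, and the pair of clusters with the smallest such distance is the next to be combined. Euclidean distance between elements and the function names are assumptions made for this example.

```python
import math
from itertools import combinations


def complete_linkage(a, b):
    """Distance between clusters as the largest pairwise Euclidean distance."""
    return max(math.dist(p, q) for p in a for q in b)


def next_merge(clusters):
    """Indices of the two clusters with the shortest complete-linkage distance."""
    return min(
        combinations(range(len(clusters)), 2),
        key=lambda ij: complete_linkage(clusters[ij[0]], clusters[ij[1]]),
    )


clusters = [[(0, 0), (1, 0)], [(3, 0)], [(4.5, 0)]]
pair = next_merge(clusters)
```

Here the first two clusters are 3 apart under complete linkage (measured from the farther element), so the two singleton clusters, which are 1.5 apart, are selected for merging.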