
Web Scraping

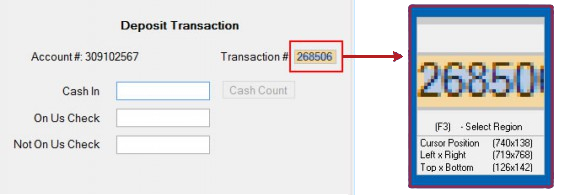

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. Web scraping software may directly access the World Wide Web using the Hypertext Transfer Protocol or a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis. Scraping a web page involves fetching it and then extracting data from it. Fetching is the downloading of a page (which a browser does when a user views a page). Therefore, web crawling is a main component of web scraping, to fetch pages for later processing. Once a page has been fetched, extraction can take place. The content of a page may be parsed, searched and reformatted, and its ...
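
The fetch-then-extract split described above can be sketched with the Python standard library alone. This is a minimal illustration, not a production scraper: the names `LinkExtractor`, `fetch`, and `extract_links` are hypothetical, and pulling out link targets is just one assumed example of "extraction".

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag seen while parsing."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url, timeout=10):
    """Fetching step: download a page over HTTP and decode it as text."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_links(html):
    """Extraction step: parse the markup and return the link targets."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


# Extraction can be tried without network access on an inline page.
sample_page = '<html><body><a href="/a">A</a> <a href="/b">B</a></body></html>'
print(extract_links(sample_page))
```

Keeping the two steps in separate functions means the extraction logic can be exercised on stored pages, while `fetch` is only needed when a live page is actually downloaded.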

Data Scraping

Data scraping is a technique where a computer program extracts data from human-readable output coming from another program.

Description: Normally, data transfer between programs is accomplished using data structures suited for automated processing by computers, not people. Such interchange formats and protocols are typically rigidly structured, well-documented, easily parsed, and minimize ambiguity. Very often, these transmissions are not human-readable at all. Thus, the key element that distinguishes data scraping from regular parsing is that the data being consumed is intended for display to an end-user, rather than as an input to another program. It is therefore usually neither documented nor structured for convenient parsing. Data scraping often involves ignoring binary data (usually images or multimedia data), display formatting, red ...
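
As a rough illustration of scraping output that was meant for people rather than programs, the sketch below pulls structured rows out of a formatted text report. The report layout, the `ROW` pattern, and the `scrape_report` function are all invented for this example; real display output is usually less regular and needs a more forgiving pattern.

```python
import re

# Hypothetical human-readable output from another program.
REPORT = """\
Disk usage report
-----------------
/home      12.5 GB   (41% full)
/var        3.2 GB   (78% full)
Generated for display only.
"""

# One data row: mount point, size in GB, percentage in parentheses.
ROW = re.compile(
    r"^(?P<mount>/\S*)\s+(?P<size>\d+(?:\.\d+)?) GB\s+\((?P<pct>\d+)% full\)$"
)


def scrape_report(text):
    """Return one dict per data row, ignoring titles, rules and footers."""
    rows = []
    for line in text.splitlines():
        match = ROW.match(line.strip())
        if match:
            rows.append(
                {
                    "mount": match["mount"],
                    "size_gb": float(match["size"]),
                    "percent_full": int(match["pct"]),
                }
            )
    return rows


for row in scrape_report(REPORT):
    print(row)
```

The lines that do not match (title, rule, footer) are the display formatting that the scraper has to ignore.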

Change Detection And Notification

Change detection and notification (CDN) is the automatic detection of changes made to World Wide Web pages and notification to interested users by email or other means. Whereas search engines are designed to find web pages, CDN systems are designed to monitor changes to web pages. Before change detection and notification, it was necessary for users to manually check for web page changes, either by revisiting web sites or periodically searching again. Efficient and effective change detection and notification is hampered by the fact that most servers do not accurately track content changes through Last-Modified or ETag web-server headers. In 2019, a comprehensive analysis regarding CDN systems was published.

History: In 1996, NetMind developed the first change detection and notification tool, known as Mind-it, which ran for six years. This spawned new services such as ChangeDetection (1999), ChangeDetect (2002), Google Alerts (2003), and Versionista (2007) which was used by the Joh ...
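
Since Last-Modified and ETag headers often cannot be relied on, one simple fallback is to compare a digest of the page body between visits. The sketch below assumes that approach; `fingerprint` and `ChangeMonitor` are hypothetical names, and a real monitor would likely need to strip dynamic content (timestamps, ads) before hashing to avoid false positives.

```python
import hashlib


def fingerprint(body: bytes) -> str:
    """Return a SHA-256 digest that stands in for the page content."""
    return hashlib.sha256(body).hexdigest()


class ChangeMonitor:
    """Remembers the last digest seen per URL and reports differences."""

    def __init__(self):
        self._seen = {}

    def check(self, url: str, body: bytes) -> bool:
        """Return True if body differs from the last body recorded for url.

        The first time a URL is checked there is nothing to compare
        against, so the result is False.
        """
        digest = fingerprint(body)
        previous = self._seen.get(url)
        self._seen[url] = digest
        return previous is not None and previous != digest


monitor = ChangeMonitor()
print(monitor.check("https://example.com/", b"<p>version 1</p>"))
print(monitor.check("https://example.com/", b"<p>version 1</p>"))
print(monitor.check("https://example.com/", b"<p>version 2</p>"))
```

Only the third call reports a change; the notification step (email or otherwise) would hang off that result.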

History Of The World Wide Web

The World Wide Web ("WWW", "W3" or simply "the Web") is a global information medium that users can access via computers connected to the Internet. The term is often mistakenly used as a synonym for the Internet, but the Web is a service that operates over the Internet, just as email and Usenet do. The history of the Internet and the history of hypertext date back significantly further than that of the World Wide Web. Tim Berners-Lee invented the World Wide Web while working at CERN in 1989. He proposed a "universal linked information system" using several concepts and technologies, the most fundamental of which was the connections that existed between information. He developed the first web server, the first web browser, and a document formatting protocol, called Hypertext Markup Language (HTML). After publishing the markup language in 1991, and releasing the browser source code for public use in 1993, many other web browsers were soon developed, with Marc ...

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

Computer Vision

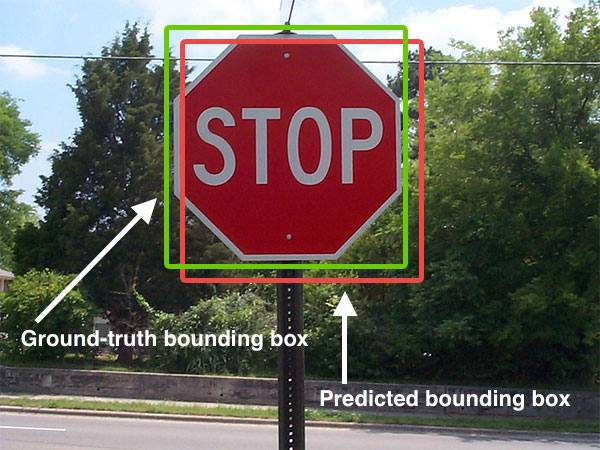

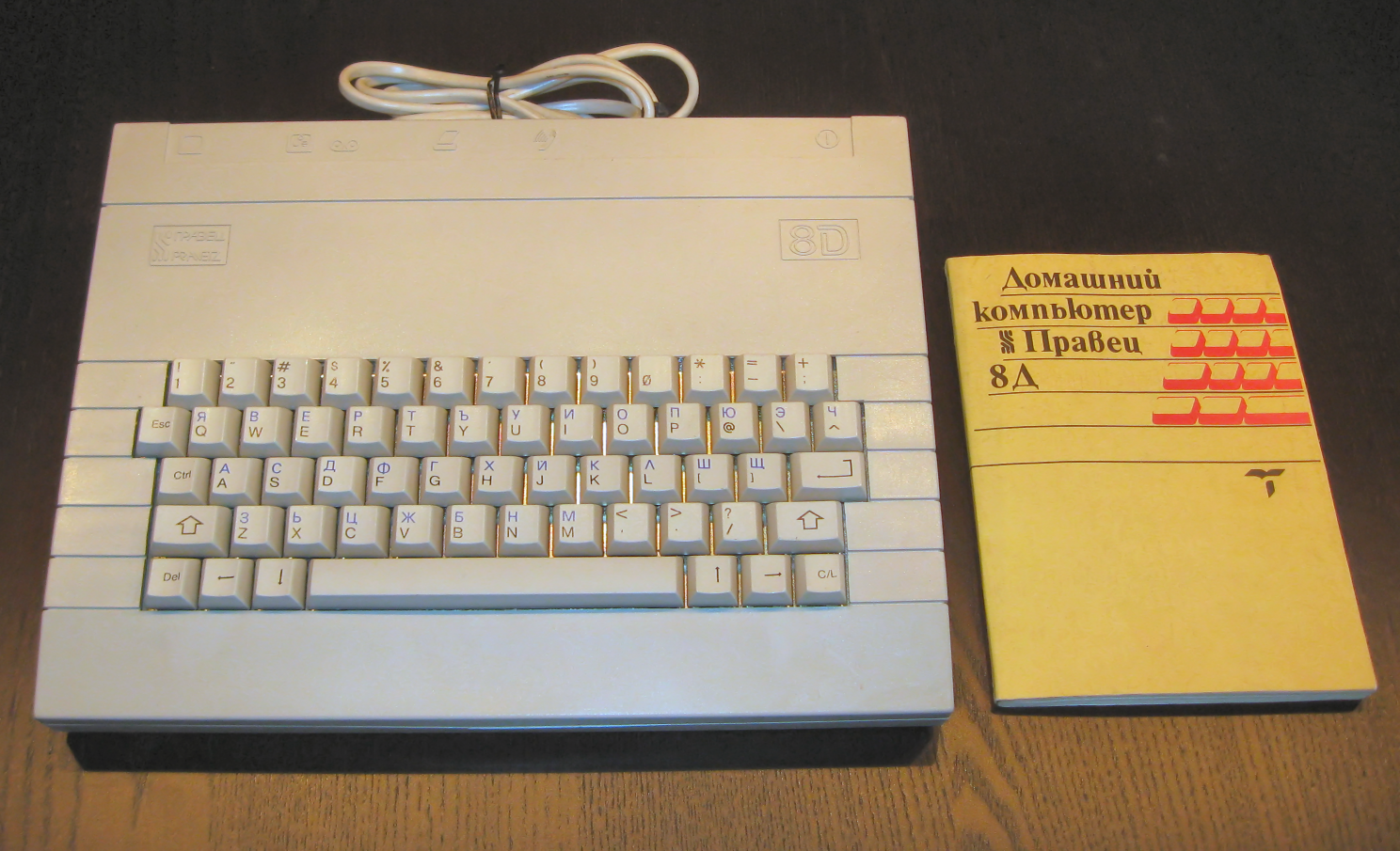

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds ...

Document Object Model

The Document Object Model (DOM) is a cross-platform and language-independent API that treats an HTML or XML document as a tree structure wherein each node is an object representing a part of the document. The DOM represents a document with a logical tree. Each branch of the tree ends in a node, and each node contains objects. DOM methods allow programmatic access to the tree; with them one can change the structure, style or content of a document. Nodes can have event handlers (also known as event listeners) attached to them. Once an event is triggered, the event handlers get executed. The principal standardization of the DOM was handled by the World Wide Web Consortium (W3C), which last developed a recommendation in 2004. WHATWG took over the development of the standard, publishing it as a living document. The W3C now publishes stable snapshots of the WHATWG standard. In the HTML DOM (Document Object Model), every element is a node:

* A document is a document node.
* All HTM ...
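
The tree view of a document, and the idea of changing content through DOM methods, can be tried with Python's `xml.dom.minidom`, which implements a subset of the W3C DOM API. The markup and the helper `walk` below are assumptions made for illustration; browsers expose the same concepts through JavaScript rather than Python.

```python
from xml.dom.minidom import parseString

# A small well-formed document to load into a DOM tree.
doc = parseString(
    "<html><body><h1>Title</h1><p>First</p><p>Second</p></body></html>"
)


def walk(node, depth=0):
    """Yield (depth, tag name) for every element node in document order."""
    if node.nodeType == node.ELEMENT_NODE:
        yield depth, node.tagName
    for child in node.childNodes:
        yield from walk(child, depth + 1)


# Programmatic access to the tree: print an indented outline of elements.
for depth, name in walk(doc.documentElement):
    print("  " * depth + name)

# Changing content and attributes through DOM methods.
first_p = doc.getElementsByTagName("p")[0]
first_p.firstChild.data = "Updated"
first_p.setAttribute("class", "lead")
print(first_p.toxml())
```

Text inside an element is itself a node (the `firstChild` of the paragraph here), which is why the text is changed through that child rather than on the element directly.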

JSON

JSON (JavaScript Object Notation) is an open standard file format and data interchange format that uses human-readable text to store and transmit data objects consisting of name–value pairs and arrays (or other serializable values). It is a commonly used data format with diverse uses in electronic data interchange, including that of web applications with servers. JSON is a language-independent data format. It was derived from JavaScript, but many modern programming languages include code to generate and parse JSON-format data. JSON filenames use the extension .json. Douglas Crockford originally specified the JSON format in the early 2000s. He and Chip Morningstar sent the first JSON message in April 2001.

Naming and pronunciation: The 2017 international standard (ECMA-404 and ISO/IEC 21778:2017) ...
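
As a small illustration of generating and parsing JSON from another language, the sketch below round-trips a record through Python's standard `json` module. The record contents are made up for the example.

```python
import json

# Name-value pairs, an array, and the JSON literals true and null.
record = {
    "name": "example",
    "tags": ["a", "b"],
    "count": 3,
    "active": True,
    "parent": None,
}

# Serialize to human-readable text; sort_keys makes the output stable.
text = json.dumps(record, sort_keys=True)
print(text)

# Parse the text back into native Python objects.
restored = json.loads(text)
print(restored == record)
```

Python's `True` and `None` are written as the JSON literals `true` and `null`, and are mapped back on parsing.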

Data Feed

A data feed is a mechanism for users to receive updated data from data sources. It is commonly used by real-time applications in point-to-point settings as well as on the World Wide Web. The latter is also called a web feed. A news feed is a popular form of web feed. RSS feeds make dissemination of blogs easy. Product feeds play an increasingly important role in e-commerce and internet marketing, as well as news distribution, financial markets, and cybersecurity. Data feeds usually require structured data that include different labelled fields, such as "title" or "product".

Data feed formats:

* RSS 1.0, 2.0
* Atom feed
* RDF feed
* Comma-separated values (CSV)
* JSON
* XML

Emerging semantic data feed: The Web is evolving into a web of data or Semantic Web. Data will be encoded by Semantic Web languages like RDF or OWL according to many experts' visions. So, it is not difficult to envision that data feeds will also be in the form of RDF or OWL. A big advantage of providing semantic data feeds ...
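
To make the idea of labelled fields concrete, here is a minimal sketch that reads the items of an RSS 2.0 feed with `xml.etree.ElementTree`. The feed content and the `read_items` helper are invented for illustration, and only the `title` and `link` fields are read; real feeds carry more fields and often use namespaces.

```python
import xml.etree.ElementTree as ET

# A tiny, made-up RSS 2.0 document.
RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <item><title>First post</title><link>https://example.com/1</link></item>
    <item><title>Second post</title><link>https://example.com/2</link></item>
  </channel>
</rss>"""


def read_items(feed_xml):
    """Return the labelled fields of each <item> as a dict."""
    root = ET.fromstring(feed_xml)
    return [
        {"title": item.findtext("title"), "link": item.findtext("link")}
        for item in root.iter("item")
    ]


for entry in read_items(RSS):
    print(entry["title"], entry["link"])
```

A consumer that polls the feed would compare the returned items with those it has already seen to pick out the updates.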

Market Research

Market research is an organized effort to gather information about target markets and customers. It involves understanding who they are and what they need. It is an important component of business strategy and a major factor in maintaining competitiveness. Market research helps to identify and analyze the needs of the market, the market size and the competition. Its techniques encompass both qualitative techniques such as focus groups, in-depth interviews, and ethnography, as well as quantitative techniques such as customer surveys, and analysis of secondary data. It includes social and opinion research, and is the systematic gathering and interpretation of information about individuals or organizations using statistical and analytical methods and techniques of the applied social sciences to gain insight or support decision making. Market research, marketing research, and marketing are a sequence of business activities; sometimes these are handled informally. The field of ...

End-user (Computer Science)

In product development, an end user (sometimes end-user) is a person who ultimately uses or is intended to ultimately use a product. The end user stands in contrast to users who support or maintain the product, such as sysops, system administrators, database administrators, information technology (IT) experts, software professionals, and computer technicians. End users typically do not possess the technical understanding or skill of the product designers, a fact easily overlooked and forgotten by designers, leading to features that create low customer satisfaction. In information technology, end users are not customers in the usual sense; they are typically employees of the customer. For example, if a large retail corporation buys a software package for its employees to use, even though the large retail corporation was the customer that purchased the software, the end users are the employees of the company, who will use the software at work.

Context: End users are one of the thre ...

XHTML

Extensible HyperText Markup Language (XHTML) is part of the family of XML markup languages which mirrors or extends versions of the widely used HyperText Markup Language (HTML), the language in which Web pages are formulated. While HTML, prior to HTML5, was defined as an application of Standard Generalized Markup Language (SGML), a flexible markup language framework, XHTML is an application of XML, a more restrictive subset of SGML. XHTML documents are well-formed and may therefore be parsed using standard XML parsers, unlike HTML, which requires a lenient HTML-specific parser. XHTML 1.0 became a World Wide Web Consortium (W3C) recommendation on 26 January 2000. XHTML 1.1 became a W3C recommendation on 31 May 2001. XHTML is now referred to as "the XML syntax for HTML" and is being developed as an XML adaptation of the HTML living standard.

Overview: XHTML 1.0 was "a reformulation of the three HTML 4 document types as applications of XML 1.0". The World Wide Web Consortiu ...
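
The difference between well-formed XHTML and lenient HTML can be seen by handing both to a standard XML parser. The sketch below uses Python's `xml.etree.ElementTree`; the two markup strings and the helper `parses_as_xml` are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

XHTML_NS = "http://www.w3.org/1999/xhtml"

# Well-formed XHTML: every element is closed, including <br/>.
xhtml = (
    '<html xmlns="http://www.w3.org/1999/xhtml">'
    "<head><title>Demo</title></head>"
    "<body><p>One<br/>Two</p></body></html>"
)

# Typical lenient HTML: <br> and <p> are left unclosed.
tag_soup = "<html><body><p>One<br>Two</body></html>"


def parses_as_xml(markup):
    """Return True if a standard XML parser accepts the markup."""
    try:
        ET.fromstring(markup)
    except ET.ParseError:
        return False
    return True


print(parses_as_xml(xhtml))
print(parses_as_xml(tag_soup))

# Elements live in the XHTML namespace, so lookups must qualify the name.
title = ET.fromstring(xhtml).findtext(f".//{{{XHTML_NS}}}title")
print(title)
```

A browser's HTML parser would accept the second string and repair it; the XML parser rejects it at the first mismatched closing tag.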

HTML

Hypertext Markup Language (HTML) is the standard markup language for documents designed to be displayed in a web browser. It defines the content and structure of web content. It is often assisted by technologies such as Cascading Style Sheets (CSS) and scripting languages such as JavaScript, a programming language. Web browsers receive HTML documents from a web server or from local storage and render the documents into multimedia web pages. HTML describes the structure of a web page semantically and originally included cues for its appearance. HTML elements are the building blocks of HTML pages. With HTML constructs, images and other objects such as interactive forms may be embedded into the rendered page. HTML provides a means to create structured documents by denoting structural semantics for text such as headings, paragraphs, lists, links, quotes, and other items. HTML elements are delineated ...
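
Because HTML marks up structural semantics such as headings, a program can recover a document outline from the markup alone. The following is a minimal sketch using Python's lenient `html.parser`; the class name `OutlineParser` and the sample page are hypothetical, and nested or malformed headings are not handled.

```python
from html.parser import HTMLParser


class OutlineParser(HTMLParser):
    """Collects (level, text) pairs for the h1 to h6 headings of a page."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        # Only text inside an open heading contributes to the outline.
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._level is not None:
            self.outline.append((self._level, "".join(self._text).strip()))
            self._level = None


page = (
    "<h1>Guide</h1><p>Intro</p>"
    "<h2>Setup</h2><p>Steps</p>"
    "<h2>Usage <em>notes</em></h2>"
)
parser = OutlineParser()
parser.feed(page)
parser.close()
for level, text in parser.outline:
    print("  " * (level - 1) + text)
```

Paragraph text is skipped because it is not inside a heading element, while inline markup within a heading (the `<em>` here) still contributes its text.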