Weak Consistency

The name weak consistency can be used in two senses. In the first sense, which is stricter and more popular, weak consistency is one of the consistency models used in the domain of concurrent programming (e.g. in distributed shared memory, distributed transactions, etc.). A protocol is said to support weak consistency if:

1. All accesses to synchronization variables are seen by all processes (or nodes, processors) in the same order (sequentially); these are synchronization operations. Accesses to critical sections are seen sequentially.
2. All other accesses may be seen in a different order on different processes (or nodes, processors).
3. The set of both read and write operations in between different synchronization operations is the same in each process.

Therefore, there can be no access to a synchronization variable if there are pending write operations, and no new read or write operation can be started while the system is performing a synchronization operation. In the second, more genera ...
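The conditions of the first sense can be illustrated with a toy, single-threaded simulation. This is a minimal sketch under stated assumptions, not a real protocol: the `WeakMemory` class and its methods are hypothetical names, each process is modelled as a private dictionary, and a synchronization operation simply pushes the caller's pending writes to every replica before it is recorded in one global log.

```python
class WeakMemory:
    """Toy model of weak consistency (hypothetical, for illustration only)."""

    def __init__(self, n_procs):
        # One private replica and one queue of unpropagated writes per process.
        self.replicas = [dict() for _ in range(n_procs)]
        self.pending = [[] for _ in range(n_procs)]
        # Synchronization operations are recorded in a single global order.
        self.sync_log = []

    def write(self, pid, key, value):
        # Ordinary writes are applied locally and propagated lazily.
        self.replicas[pid][key] = value
        self.pending[pid].append((key, value))

    def read(self, pid, key):
        # Ordinary reads only consult the local replica.
        return self.replicas[pid].get(key)

    def synchronize(self, pid):
        # The sync may not complete while the caller has pending writes,
        # so they are first applied to every replica.
        for key, value in self.pending[pid]:
            for replica in self.replicas:
                replica[key] = value
        self.pending[pid].clear()
        self.sync_log.append(pid)


mem = WeakMemory(n_procs=2)
mem.write(0, "x", 1)
before_sync = mem.read(1, "x")  # process 1 may not see the write yet
mem.synchronize(0)
after_sync = mem.read(1, "x")   # visible once the sync has completed
```

In this sketch `before_sync` is `None` and `after_sync` is `1`: between synchronization points the processes may disagree, and agreement is only restored at the synchronization operation.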

Consistency Model

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cached or cache-less, and is consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High-level languages, such as C++ and Java (progr ...

Concurrent Programming

Concurrent means happening at the same time. Concurrency, concurrent, or concurrence may refer to:

Law

* Concurrence, in jurisprudence, the need to prove both actus reus and mens rea
* Concurring opinion (also called a "concurrence"), a legal opinion which supports the conclusion, though not always the reasoning, of the majority
* Concurrent estate, a concept in property law
* Concurrent resolution, a legislative measure passed by both chambers of the United States Congress
* Concurrent sentences, in criminal law, periods of imprisonment that are served simultaneously

Computing

* Concurrency (computer science), the property of program, algorithm, or problem decomposition into order-independent or partially-ordered units
* Concurrent computing, the overlapping execution of multiple interacting computational tasks
* Concurrence (quantum computing), a measure used in quantum information theory
* Concurrent Computer Corporation, an American computer systems manufactur ...

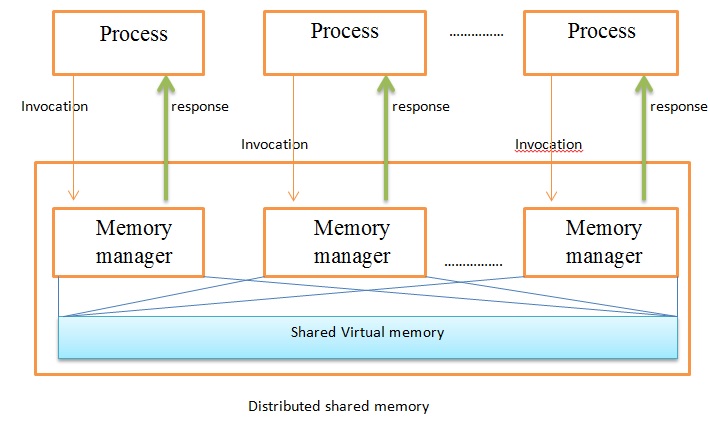

Distributed Shared Memory

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared, i.e., the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS) is a similar term for a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's private (i.e., not shared) memory.

Overview

DSM can be achieved via software as well as hardware. Hardware examples include cache coherence circuits and network interface controllers. There are three ways of implementing DSM:

* Page-based approach using virtual memory
* Sha ...

Distributed Transactions

A distributed transaction operates within a distributed environment, typically involving multiple nodes across a network depending on the location of the data. A key aspect of distributed transactions is atomicity, which ensures that the transaction is completed in its entirety or not executed at all. Distributed transactions are not limited to databases. The Open Group, a vendor consortium, proposed the X/Open Distributed Transaction Processing Model (X/Open XA), which became a de facto standard for the behavior of transaction model components. Databases are common transactional resources and, often, transactions span a couple of such databases. In this case, a distributed transaction can be seen as a database transaction that must be synchronized (or provide ACID properties) among multiple participating databases which are distributed among different phy ...
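The all-or-nothing behaviour described above can be sketched with a prepare-then-commit scheme in the style of two-phase commit. This is a minimal in-memory illustration under stated assumptions: `Participant` and `run_transaction` are hypothetical names, there is no network, logging, or crash recovery, and nothing here reproduces the actual X/Open XA interface.

```python
class Participant:
    """Hypothetical in-memory resource; not a real XA resource manager."""

    def __init__(self, name, fail_on_prepare=False):
        self.name = name
        self.fail_on_prepare = fail_on_prepare
        self.data = {}
        self.staged = None

    def prepare(self, updates):
        # Phase 1: stage the updates and vote yes, or vote no.
        if self.fail_on_prepare:
            return False
        self.staged = dict(updates)
        return True

    def commit(self):
        # Phase 2: make the staged updates visible.
        if self.staged is not None:
            self.data.update(self.staged)
            self.staged = None

    def rollback(self):
        # Discard anything staged during the prepare phase.
        self.staged = None


def run_transaction(work):
    """Apply every (participant, updates) pair, or none of them."""
    prepared = []
    for participant, updates in work:
        if participant.prepare(updates):
            prepared.append(participant)
        else:
            # A single "no" vote aborts the whole transaction.
            for p in prepared:
                p.rollback()
            return False
    for participant, _ in work:
        participant.commit()
    return True


db_a, db_b = Participant("a"), Participant("b")
ok = run_transaction([(db_a, {"balance": 90}), (db_b, {"balance": 110})])

db_c, db_d = Participant("c"), Participant("d", fail_on_prepare=True)
failed = run_transaction([(db_c, {"balance": 0}), (db_d, {"balance": 1})])
```

In the second call one participant refuses to prepare, so neither `db_c` nor `db_d` ends up with any of the updates.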

Sequential Consistency

Sequential consistency is a consistency model used in the domain of concurrent computing (e.g. in distributed shared memory, distributed transactions, etc.). It is the property that "... the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program." That is, the execution order of a program in the same processor (or thread) is the same as the program order, while the execution order of a program on different processors (or threads) is undefined. In an example with one processor executing A1, B1 and C1 and another executing A2 and B2, the execution order between A1, B1 and C1 is preserved, that is, A1 runs before B1, and B1 before C1. The same holds for A2 and B2. But, as the execution order between processors is undefined, B2 might run before or after C1 (B2 might physically run before C1, but the effect of B2 might be seen after that of C1, which is the same as "B2 runs after C1"). Conc ...
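To make the A1/B1/C1 and A2/B2 example concrete, the following sketch enumerates every global order that keeps each processor's program order intact. The helper name `interleavings` is an assumption introduced for illustration; the operations are treated as opaque labels with no effects of their own.

```python
from itertools import combinations


def interleavings(p1, p2):
    """Yield every merge of p1 and p2 that preserves each list's own order."""
    n = len(p1) + len(p2)
    # Choose which positions of the merged sequence belong to p1.
    for slots in combinations(range(n), len(p1)):
        slot_set = set(slots)
        it1, it2 = iter(p1), iter(p2)
        yield [next(it1) if i in slot_set else next(it2) for i in range(n)]


P1 = ["A1", "B1", "C1"]  # program order on the first processor
P2 = ["A2", "B2"]        # program order on the second processor

orders = list(interleavings(P1, P2))
b2_before_c1 = [o for o in orders if o.index("B2") < o.index("C1")]
b2_after_c1 = [o for o in orders if o.index("B2") > o.index("C1")]
```

There are 10 such orders in total; B2 precedes C1 in 6 of them and follows it in 4. Any of them is an acceptable outcome under sequential consistency, because each keeps A1 before B1 before C1 and A2 before B2.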

Strong Consistency

Strong consistency is one of the consistency models used in the domain of concurrent programming (e.g., in distributed shared memory, distributed transactions). The protocol is said to support strong consistency if all accesses are seen by all parallel processes (or nodes, processors, etc.) in the same order (sequentially). Therefore, only one consistent state can be observed, as opposed to weak consistency, where different parallel processes (or nodes, etc.) can perceive variables in different states.

See also

* CAP theorem: In database theory, the CAP theorem, also named Brewer's theorem after computer scientist Eric Brewer, states that any distributed data store can provide at most two of the following three guarantees: ...

International Symposium On Computer Architecture

The International Symposium on Computer Architecture (ISCA) is an annual academic conference on computer architecture, generally viewed as the top-tier conference in the field. The Association for Computing Machinery's Special Interest Group on Computer Architecture (ACM SIGARCH) and the Institute of Electrical and Electronics Engineers Computer Society are technical sponsors. ISCA has participated in the Federated Computing Research Conference in 1993, 1996, 1999, 2003, 2007, 2011, 2015, 2019, and 2023, that is, every year that the conference has been organized. ISCA 2018 hosted the 2017 Turing Award Winners lecture by award winners John L. Hennessy and David A. Patterson. ISCA 2024, which was held in Buenos Aires (Argentina), was the first edition ever to take place in a Latin American country. It featured keynote speakers Mateo Valero, Sridhar Iyengar, and Vivienne Sze. The organization of this edition was led by Prof. Esteban Mocskos (University of Buenos Aires) and Dr. Augusto Vega (IBM T. J. W ...

Sarita Adve

Sarita Vikram Adve is the Richard T. Cheng Professor of Computer Science at the University of Illinois at Urbana-Champaign. Her research interests are in computer architecture and systems, parallel computing, and power and reliability-aware systems.

Education and career

Adve completed a Bachelor of Technology degree in electrical engineering at the Indian Institute of Technology Bombay in 1987. She subsequently completed a Master of Science (1989) and Ph.D. (1993) in computer science at the University of Wisconsin–Madison. Before joining Illinois, Adve was a member of the faculty at Rice University from 1993 to 1999. She served on the NSF CISE directorate's advisory committee from 2003 to 2005 and on the expert group to revise the Java memory model from 2001 to 2005. She served as chair of ACM SIGARCH from 2015 to 2019. She was the PhD supervisor of Parthasarathy Ranganathan.

Awards and honors

Adve received the Ken Kennedy Award in 2018, the ACM SIGARCH Maurice Wilkes award in 2 ...