Distributed Shared Memory

In

DSM can be achieved via software as well as hardware. Hardware examples include

DSM can be achieved via software as well as hardware. Hardware examples include

A basic DSM will track at least three states among nodes for any given block in the directory. There will be some state to dictate the block as uncached (U), a state to dictate a block as exclusively owned or modified owned (EM), and a state to dictate a block as shared (S). As blocks come into the directory organization, they will transition from U to EM (ownership state) in the initial node. The state can transition to S when other nodes begin reading the block.

There are two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage its own requests through the home.

A basic DSM will track at least three states among nodes for any given block in the directory. There will be some state to dictate the block as uncached (U), a state to dictate a block as exclusively owned or modified owned (EM), and a state to dictate a block as shared (S). As blocks come into the directory organization, they will transition from U to EM (ownership state) in the initial node. The state can transition to S when other nodes begin reading the block.

There are two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage its own requests through the home.

VODCA

which has comparable performance to

VODCA

DIPC

Distributed Shared Cache

''Memory coherence in shared virtual memory systems''

by Kai Li, Paul Hudak published in ACM Transactions on Computer Systems, Volume 7 Issue 4, Nov. 1989 {{Parallel Computing Distributed computing architecture

computer science

Computer science is the study of computation, information, and automation. Computer science spans Theoretical computer science, theoretical disciplines (such as algorithms, theory of computation, and information theory) to Applied science, ...

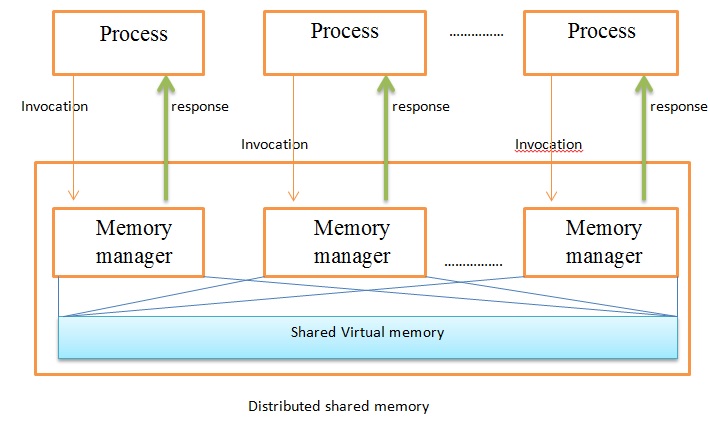

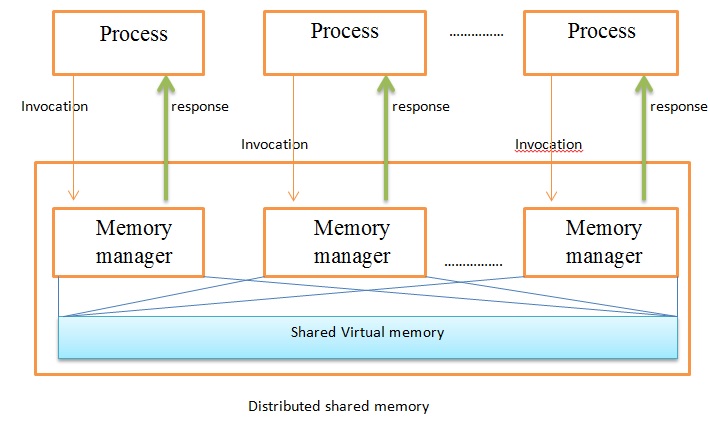

, distributed shared memory (DSM) is a form of memory architecture

Memory architecture describes the methods used to implement electronic computer data storage in a manner that is a combination of the fastest, most reliable, most durable, and least expensive way to store and retrieve information. Depending on the ...

where physically separated memories can be addressed as a single shared address space

In computing, an address space defines a range of discrete addresses, each of which may correspond to a network host, peripheral device, disk sector, a memory cell or other logical or physical entity.

For software programs to save and retrieve ...

. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two processors

Processor may refer to:

Computing Hardware

* Processor (computing)

** Central processing unit (CPU), the hardware within a computer that executes a program

*** Microprocessor, a central processing unit contained on a single integrated circuit ( ...

refers to the same location in memory. Distributed global address space (DGAS), is a similar term for a wide class of software and hardware implementations, in which each node

In general, a node is a localized swelling (a "knot") or a point of intersection (a vertex).

Node may refer to:

In mathematics

* Vertex (graph theory), a vertex in a mathematical graph

*Vertex (geometry), a point where two or more curves, lines ...

of a cluster

may refer to:

Science and technology Astronomy

* Cluster (spacecraft), constellation of four European Space Agency spacecraft

* Cluster II (spacecraft), a European Space Agency mission to study the magnetosphere

* Asteroid cluster, a small ...

has access to shared memory in addition to each node's private (i.e., not shared) memory

Memory is the faculty of the mind by which data or information is encoded, stored, and retrieved when needed. It is the retention of information over time for the purpose of influencing future action. If past events could not be remembe ...

.

Overview

DSM can be achieved via software as well as hardware. Hardware examples include cache coherence circuits and network interface controllers. There are three ways of implementing DSM:

* Page-based approach using virtual memory

* Shared-variable approach using routines to access shared variables

* Object-based approach, ideally accessing shared data through object-oriented discipline
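As a rough illustration of the page-based approach listed above, the following sketch shows how a single shared address might be split into a page number and an offset, with each page held in the memory of one node. The page size, the round-robin placement of pages, and all class and method names are assumptions made for this example; real systems rely on the virtual memory hardware and page faults rather than explicit calls.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageBasedDSM:
    """Toy page-based DSM: one address space spread over several node memories."""

    def __init__(self, num_nodes, num_pages):
        self.num_nodes = num_nodes
        # Each node has its own physically separate memory: page number -> bytes.
        self.node_memory = [dict() for _ in range(num_nodes)]
        for page in range(num_pages):
            self.node_memory[self.home_of(page)][page] = bytearray(PAGE_SIZE)

    def home_of(self, page):
        # Assumed placement policy: pages are assigned to nodes round-robin.
        return page % self.num_nodes

    def translate(self, address):
        # Split a shared address into (holding node, page number, offset in page).
        page, offset = divmod(address, PAGE_SIZE)
        return self.home_of(page), page, offset

    def write(self, address, value):
        node, page, offset = self.translate(address)
        self.node_memory[node][page][offset] = value

    def read(self, address):
        node, page, offset = self.translate(address)
        return self.node_memory[node][page][offset]
```

Any node can use the same address to reach the same byte, even though the byte lives in only one node's memory.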

Advantages

* Scales well with a large number of nodes

* Message passing is hidden

* Can handle complex and large databases without replication or sending the data to processes

* Generally cheaper than using a multiprocessor system

* Provides large virtual memory space

* Programs are more portable due to common programming interfaces

* Shields programmers from send and receive primitives

Disadvantages

* Generally slower to access than non-distributed shared memory

* Must provide additional protection against simultaneous accesses to shared data

* May incur a performance penalty

* Little programmer control over actual messages being generated

* Programmers need to understand consistency models to write correct programs

Comparison with message passing

Software DSM systems also have the flexibility to organize the shared memory region in different ways. The page-based approach organizes shared memory into pages of fixed size. In contrast, the object-based approach organizes the shared memory region as an abstract space for storing shareable objects of variable sizes. Another commonly seen implementation uses a tuple space, in which the unit of sharing is a tuple.
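The tuple-space idea can be sketched in a few lines. The example below is a single-process toy, not a distributed implementation: the operation names loosely follow Linda-style `out` and `rd` (with `take` standing in for Linda's `in`, which is a reserved word in Python), `None` is assumed to act as a wildcard, and the operations return immediately instead of blocking.

```python
class TupleSpace:
    """Toy, single-process tuple space; None in a pattern acts as a wildcard."""

    def __init__(self):
        self._tuples = []

    def out(self, tup):
        # Deposit a tuple into the shared space.
        self._tuples.append(tuple(tup))

    def _matches(self, pattern, tup):
        return len(pattern) == len(tup) and all(
            p is None or p == t for p, t in zip(pattern, tup)
        )

    def rd(self, pattern):
        # Return the first matching tuple without removing it, or None.
        for tup in self._tuples:
            if self._matches(pattern, tup):
                return tup
        return None

    def take(self, pattern):
        # Return and remove the first matching tuple, or None.
        tup = self.rd(pattern)
        if tup is not None:
            self._tuples.remove(tup)
        return tup
```

Here the unit of sharing is the whole tuple: processes never address individual memory locations, they match on tuple contents.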

Shared memory architecture may involve separating memory into shared parts distributed amongst nodes and main memory, or distributing all memory between nodes. A coherence protocol, chosen in accordance with a consistency model, maintains memory coherence.

Directory memory coherence

Memory coherence is necessary so that the system organizing the DSM can track and maintain the state of data blocks held by nodes across the memories that make up the system. A directory is one such mechanism: it maintains the state of cache blocks as they move around the system.

States

A basic DSM tracks at least three states among nodes for any given block in the directory: uncached (U), exclusively owned or modified owned (EM), and shared (S). As blocks come into the directory organization, they transition from U to EM (the ownership state) in the initial node. The state can transition to S when other nodes begin reading the block.
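The U, EM and S transitions described above can be sketched as a small state machine for one directory entry. The class and method names are illustrative, and the behavior on writes (write-invalidate) and on eviction is an assumption added to make the example complete; the text itself only specifies the U to EM and EM to S transitions.

```python
from enum import Enum


class State(Enum):
    U = "uncached"
    EM = "exclusive/modified"
    S = "shared"


class DirectoryEntry:
    """Directory record for one block: its state and the nodes caching it."""

    def __init__(self):
        self.state = State.U
        self.sharers = set()

    def read(self, node):
        if self.state is State.U:
            # First access: the initial node takes ownership (U -> EM).
            self.state = State.EM
        elif node not in self.sharers:
            # A different node starts reading: the block becomes shared.
            self.state = State.S
        self.sharers.add(node)

    def write(self, node):
        # Assumption: write-invalidate, so the writer becomes the sole owner.
        self.sharers = {node}
        self.state = State.EM

    def evict(self, node):
        # Assumption: a block with no remaining copies returns to U.
        self.sharers.discard(node)
        if not self.sharers:
            self.state = State.U
```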

There are two primary methods for allowing the system to track where blocks are cached and in what condition across each node. Home-centric request-response uses the home to service requests and drive states, whereas requester-centric allows each node to drive and manage its own requests through the home.


Home-centric request and response

In a home-centric system, the DSM avoids having to handle request-response races between nodes by allowing only one transaction to occur at a time until the home node has decided that the transaction is finished, usually when the home has received every responding processor's response to the request. An example of this is Intel's QPI home-source mode. This approach is simple to implement, but its request-response strategy is slow and buffered due to the home node's limitations.

Requester-centric request and response

In a requester-centric system, the DSM allows nodes to talk at will to each other through the home. This means that multiple nodes can attempt to start a transaction, but this requires additional considerations to ensure coherence. For example, when one node is processing a block and receives a request for that block from another node, it sends a NAck (negative acknowledgement) to tell the initiator that the processing node cannot fulfill that request right away. An example of this is Intel's QPI snoop-source mode. This approach is fast, but it does not naturally prevent race conditions and generates more bus traffic.

Consistency models

The DSM must follow certain rules to maintain consistency over how read and write order is viewed among nodes, called the system's ''consistency model''.

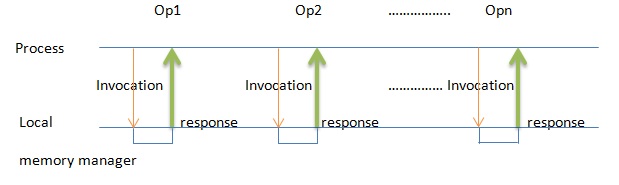

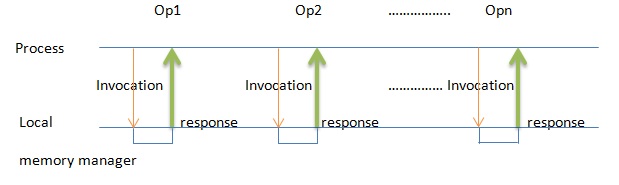

Suppose we have ''n'' processes and ''Mi'' memory operations for each process ''i'', and that all the operations are executed sequentially. Then there are (''M1'' + ''M2'' + ... + ''Mn'')!/(''M1''! ''M2''! ... ''Mn''!) possible interleavings of the operations. The difficulty lies in determining which of these interleavings are correct. Memory coherence for DSM defines which interleavings are permitted.
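The interleaving count above is a multinomial coefficient, and it can be checked for small cases by brute force. The sketch below computes the formula and, separately, enumerates the interleavings by treating each one as an ordering of process labels in which every process keeps its own operations in program order. The function names are chosen for this example only.

```python
from itertools import permutations
from math import factorial


def count_interleavings(ops_per_process):
    """(M1 + ... + Mn)! / (M1! * ... * Mn!) for a list [M1, ..., Mn]."""
    total = factorial(sum(ops_per_process))
    for m in ops_per_process:
        total //= factorial(m)
    return total


def enumerate_interleavings(ops_per_process):
    """Brute force: distinct orderings of process labels (small inputs only)."""
    labels = [i for i, m in enumerate(ops_per_process) for _ in range(m)]
    return set(permutations(labels))
```

For two processes with two operations each, both approaches give 4!/(2! 2!) = 6 interleavings. The brute-force version grows factorially and is only meant as a check on small inputs.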

Replication

There are two types of replication algorithms: read replication and write replication. In read replication, multiple nodes can read at the same time but only one node can write. In write replication, multiple nodes can read and write at the same time; the write requests are handled by a sequencer. Replication of shared data in general tends to:

* Reduce network traffic

* Promote increased parallelism

* Result in fewer page faults

However, preserving coherence and consistency may become more challenging.

Release and entry consistency

* Release consistency: when a process exits a critical section, new values of the variables are propagated to all sites.

* Entry consistency: when a process enters a critical section, it will automatically update the values of the shared variables.

** View-based consistency: a variant of entry consistency, except that the shared variables of a critical section are automatically detected by the system. An implementation of view-based consistency is VODCA, which has comparable performance to MPI on cluster computers.
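The difference between the two models in the list above is mainly a question of when updates move. The following single-threaded sketch contrasts them under simplifying assumptions: nodes are plain objects with a local dictionary of variables, the release-consistent lock pushes the holder's whole local copy rather than only modified values, and the entry-consistent lock is told explicitly which variables it guards. All names are hypothetical.

```python
class Node:
    def __init__(self, name):
        self.name = name
        self.local = {}  # this node's local copy of shared variables


class ReleaseConsistentLock:
    """On release, the holder's values are propagated to every other node."""

    def __init__(self, nodes):
        self.nodes = nodes

    def acquire(self, node):
        pass  # nothing to fetch: updates were already pushed at release time

    def release(self, node):
        for other in self.nodes:
            if other is not node:
                other.local.update(node.local)


class EntryConsistentLock:
    """On entry, the acquirer pulls only the variables guarded by this lock."""

    def __init__(self, guarded):
        self.guarded = set(guarded)
        self.latest = {}  # last released values of the guarded variables

    def acquire(self, node):
        node.local.update(self.latest)

    def release(self, node):
        for var in self.guarded:
            if var in node.local:
                self.latest[var] = node.local[var]
```

With the release-consistent lock, every node sees the new values as soon as the critical section ends. With the entry-consistent lock, a node sees them only when it next enters a critical section protected by the same lock, and only for the guarded variables.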

Examples

* Kerrighed

* OpenSSI

* MOSIX

* TreadMarks

* VODCA

* DIPC


External links

* Distributed Shared Cache

* ''Memory coherence in shared virtual memory systems'' by Kai Li and Paul Hudak, published in ACM Transactions on Computer Systems, Volume 7, Issue 4, Nov. 1989