
Truthful Job Scheduling

Truthful job scheduling is a mechanism design variant of the job shop scheduling problem from operations research. We have a project composed of several "jobs" (tasks). There are several workers. Each worker can do any job, but each worker takes a different amount of time to complete each job. Our goal is to allocate jobs to workers such that the total makespan of the project is minimized. In the standard job shop scheduling problem, the timings of all workers are known, so we have a standard optimization problem. In contrast, in the truthful job scheduling problem, the timings of the workers are not known. We ask each worker how much time they need to do each job, but the workers might lie to us. Therefore, we have to give the workers an incentive to tell us their true timings by paying them a certain amount of money. The challenge is to design a payment mechanism which is incentive compatible. The truthful job scheduling problem was introduced by Nisan and Ronen in their 19 ...

Mechanism Design

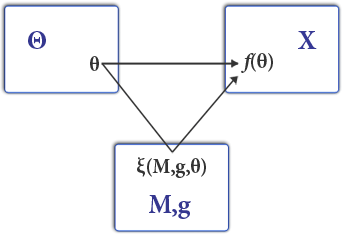

Mechanism design (sometimes implementation theory or institution design) is a branch of economics and game theory. It studies how to construct rules, called mechanisms or institutions, that produce good outcomes according to some predefined metric, even when the designer does not know the players' true preferences or what information they have. Mechanism design thus focuses on the study of solution concepts for a class of private-information games. Mechanism design has broad applications, including traditional domains of economics such as market design, but also political science (through voting theory). It is a foundational component in the operation of the internet, being used in networked systems (such as inter-domain routing), e-commerce, and advertisement auctions by Facebook and Google. Because it starts with the end of the game (a particular result), then works backwards to find a game that implements it, it ...

Job Shop Scheduling

Job-shop scheduling, the job-shop problem (JSP) or job-shop scheduling problem (JSSP) is an optimization problem in computer science and operations research. It is a variant of optimal job scheduling. In a general job scheduling problem, we are given n jobs J_1, J_2, ..., J_n of varying processing times, which need to be scheduled on m machines with varying processing power, while trying to minimize the makespan, the total length of the schedule (that is, when all the jobs have finished processing). In the specific variant known as job-shop scheduling, each job consists of a set of operations O_1, O_2, ..., O_n which need to be processed in a specific order (known as precedence constraints). Each operation has a specific machine that it needs to be processed on and only one operation in a job can be processed at a given time. A common relaxation is the flexible job shop, where each operation can be processed ...

Operations Research

Operations research (U.S. Air Force Specialty Code: Operations Analysis), often shortened to the initialism OR, is a branch of applied mathematics that deals with the development and application of analytical methods to improve management and decision-making. Although the term management science is sometimes used similarly, the two fields differ in their scope and emphasis. Employing techniques from other mathematical sciences, such as modeling, statistics, and optimization, operations research arrives at optimal or near-optimal solutions to decision-making problems. Because of its emphasis on practical applications, operations research has overlapped with many other disciplines, notably industrial engineering. Operations research is often concerned with determining the extreme values of some real-world objective: the maximum (of profit, performance, or yield) or minimum (of loss, risk, or cost). Originating in ...

Makespan

In operations research, the makespan of a project is the length of time that elapses from the start of work to the end. This type of multi-mode resource constrained project scheduling problem (MRCPSP) seeks to create the shortest logical project schedule by using project resources efficiently and adding as few additional resources as possible to achieve the minimum makespan. The term commonly appears in the context of scheduling. Example: There is a complex project that is composed of several sub-tasks. We would like to assign tasks to workers, such that the project finishes in the shortest possible time. As an example, suppose the "project" is to feed the goats. There are three goats to feed, one child can only feed on ...

Incentive Compatible

In game theory and economics, a mechanism is called incentive-compatible (IC) if every participant can achieve their own best outcome by reporting their true preferences. For example, there is incentive compatibility if high-risk clients are better off in identifying themselves as high-risk to insurance firms, who only sell discounted insurance to high-risk clients. Likewise, they would be worse off if they pretend to be low-risk. Low-risk clients who pretend to be high-risk would also be worse off. The concept is attributed to the Russian-born American economist Leonid Hurwicz. Typology There are several different degrees of incentive-compatibility: * The stronger degree is dominant-strategy incentive-compatibility (DSIC). This means that truth-telling is a weakly-dominant strategy, i.e. you fare best or at least not worse by being truthful, regardless of what the others do. In a DSIC mechanism, strategic considerations cannot help any agent achieve better outcomes than the tru ...

Algorithmic Mechanism Design

Algorithmic mechanism design (AMD) lies at the intersection of economic game theory, optimization, and computer science. The prototypical problem in mechanism design is to design a system for multiple self-interested participants, such that the participants' self-interested actions at equilibrium lead to good system performance. Typical objectives studied include revenue maximization and social welfare maximization. Algorithmic mechanism design differs from classical economic mechanism design in several respects. It typically employs the analytic tools of theoretical computer science, such as worst case analysis and approximation ratios, in contrast to classical mechanism design in economics which often makes distributional assumptions about the agents. It also considers computational constraints to be of central importance: mechanisms that cannot be efficiently implemented in polynomial time are not considered to be viable solutions to a mechanism design problem. This often, for exa ...

Job (computing)

In computing, a job is a unit of work or unit of execution (that performs said work). A component of a job (as a unit of work) is called a task or a step (if sequential, as in a job stream). As a unit of execution, a job may be concretely identified with a single process, which may in turn have subprocesses (child processes; the process corresponding to the job being the parent process) which perform the tasks or steps that comprise the work of the job; or with a process group; or with an abstract reference to a process or process group, as in Unix job control. Jobs can be started interactively, such as from a command line, or scheduled for non-interactive execution by a job scheduler, and then controlled via automatic or manual job control. Jobs that have finite input can complete, successfully or unsuccessfully, or fail to complete and eventually be terminated. By contrast, online processing such as by serv ...

Vickrey Auction

A Vickrey auction or sealed-bid second-price auction (SBSPA) is a type of sealed-bid auction. Bidders submit written bids without knowing the bids of the other people in the auction. The highest bidder wins but the price paid is the second-highest bid. This type of auction is strategically similar to an English auction and gives bidders an incentive to bid their true value. The auction was first described academically by Columbia University professor William Vickrey in 1961 though it had been used by stamp collectors since 1893. In 1797 Johann Wolfgang von Goethe sold a manuscript using a sealed-bid, second-price auction. Vickrey's original paper mainly considered auctions where only a single, indivisible good is being sold. The terms "Vickrey auction" and "second-price sealed-bid auction" are, in this case only, equivalent and used interchangeably. In the case of multiple identical goods, the bidders submit inverse demand curves and pay the opportunity cost. Vickrey auctio ...
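The single-item rule described above (highest bidder wins, pays the second-highest bid) can be sketched in a few lines of Python. This is only an illustration: the function names `vickrey_auction` and `utility`, the dictionary input format, and the tie-breaking rule (the earlier entry wins) are assumptions made for the example, not part of the source.

```python
def vickrey_auction(bids):
    """Sealed-bid second-price auction for a single item.

    bids: dict mapping bidder name -> bid amount (hypothetical input format).
    Returns (winner, price). Ties go to the bidder listed first (an assumption).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    # Sort bidders by bid, highest first; sorted() is stable, so ties keep input order.
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1]  # the winner pays the second-highest bid
    return winner, price


def utility(value, my_bid, other_bids):
    """Utility of a bidder with private `value` who submits `my_bid`."""
    bids = {"me": my_bid}
    bids.update({f"other{k}": b for k, b in enumerate(other_bids)})
    winner, price = vickrey_auction(bids)
    return value - price if winner == "me" else 0


if __name__ == "__main__":
    print(vickrey_auction({"alice": 10, "bob": 7, "carol": 4}))
    # Compare truthful bidding (bid = value = 10) against a few deviations.
    for bid in (5, 8, 10, 15):
        print(bid, utility(10, bid, [7, 4]))
```

In this small instance no deviation from the truthful bid yields a higher utility, which is consistent with the incentive property stated above; the loop is a spot check, not a proof.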

Pigeonhole Principle

In mathematics, the pigeonhole principle states that if n items are put into m containers, with n > m, then at least one container must contain more than one item. For example, of three gloves, at least two must be right-handed or at least two must be left-handed, because there are three objects but only two categories of handedness to put them into. This seemingly obvious statement, a type of counting argument, can be used to demonstrate possibly unexpected results. For example, given that the population of London is more than one unit greater than the maximum number of hairs that can be on a human's head, the principle requires that there must be at least two people in London who have the same number of hairs on their heads. Although the pigeonhole principle appears as early as 1624 in a book attributed to Jean Leurechon, it is commonly called Dirichlet's box principle or Dirichlet's drawer principle after an 1834 treatment of the principle by Pet ...

Uniform-machines Scheduling

Uniform machine scheduling (also called uniformly-related machine scheduling or related machine scheduling) is an optimization problem in computer science and operations research. It is a variant of optimal job scheduling. We are given n jobs J_1, J_2, ..., J_n of varying processing times, which need to be scheduled on m different machines. The goal is to minimize the makespan, the total time required to execute the schedule. The time that machine i needs in order to process job j is denoted by p_{i,j}. In the general case, the times p_{i,j} are unrelated, and any matrix of positive processing times is possible. In the specific variant called uniform machine scheduling, some machines are uniformly faster than others. This means that, for each machine i, there is a speed factor s_i, and the run-time of job j on machine i is p_{i,j} = p_j / s_i. In the standard three-field notation for optimal job scheduling problems, the uniform ...
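As a small illustration of the run-time rule p_{i,j} = p_j / s_i, the following Python sketch computes the makespan of a given assignment of jobs to uniform machines. The function name `uniform_makespan` and the list-based encoding of the assignment are assumptions chosen for the example.

```python
def uniform_makespan(job_lengths, speeds, assignment):
    """Makespan of a fixed assignment on uniform machines.

    job_lengths[j] is p_j, speeds[i] is s_i, and assignment[j] is the index
    of the machine that runs job j (a hypothetical encoding).
    """
    loads = [0.0] * len(speeds)
    for j, i in enumerate(assignment):
        # Run-time of job j on machine i is p_j / s_i.
        loads[i] += job_lengths[j] / speeds[i]
    # The makespan is the finishing time of the most loaded machine.
    return max(loads)


if __name__ == "__main__":
    # Machine 0 is twice as fast as machine 1.
    print(uniform_makespan([6, 4, 2], speeds=[2, 1], assignment=[0, 0, 1]))
```

The sketch only evaluates an assignment; finding the assignment that minimizes the makespan is the optimization problem itself.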

Longest Processing Time

Longest-processing-time-first (LPT) is a greedy algorithm for job scheduling. The input to the algorithm is a set of jobs, each of which has a specific processing-time. There is also a number m specifying the number of machines that can process the jobs. The LPT algorithm works as follows:

1. Order the jobs by descending order of their processing-time, such that the job with the longest processing time is first.
2. Schedule each job in this sequence into a machine in which the current load (= total processing-time of scheduled jobs) is smallest.

Step 2 of the algorithm is essentially the list-scheduling (LS) algorithm. The difference is that LS loops over the jobs in an arbitrary order, while LPT pre-orders them by descending processing time. LPT was first analyzed by Ronald Graham in the 1960s in the context of the identical-machines scheduling problem. Later, it was applied to many other variants of the problem. LPT can also be described in a more abstract way, as an ...
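The two LPT steps described above can be sketched in Python for identical machines. The function name `lpt_schedule`, the return format, and the tie-breaking rule (lowest machine index first) are assumptions made for illustration.

```python
import heapq


def lpt_schedule(processing_times, m):
    """Longest-processing-time-first on m identical machines.

    Returns (assignment, makespan), where assignment[i] lists the job
    indices placed on machine i in the order they were scheduled.
    """
    # Step 1: order job indices by descending processing time.
    order = sorted(range(len(processing_times)),
                   key=lambda j: processing_times[j], reverse=True)
    # Min-heap of (current load, machine index); ties go to the lower index.
    heap = [(0, i) for i in range(m)]
    heapq.heapify(heap)
    assignment = [[] for _ in range(m)]
    # Step 2: place each job on a machine with the smallest current load.
    for j in order:
        load, i = heapq.heappop(heap)
        assignment[i].append(j)
        heapq.heappush(heap, (load + processing_times[j], i))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


if __name__ == "__main__":
    print(lpt_schedule([4, 5, 6, 7, 8], m=2))
```

For the processing times 4, 5, 6, 7, 8 on two machines, this sketch yields a makespan of 17, while splitting the jobs as {8, 7} and {6, 5, 4} gives 15, so the example also shows that LPT is a heuristic and does not always find the optimum.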