
Three-point Estimation

The three-point estimation technique is used in management and information systems applications to construct an approximate probability distribution representing the outcome of future events, based on very limited information. While the distribution used for the approximation might be a normal distribution, this is not always so; for example, a triangular distribution might be used, depending on the application. In three-point estimation, three figures are initially produced for every distribution that is required, based on prior experience or best guesses:

* $a$ = the best-case estimate
* $m$ = the most likely estimate
* $b$ = the worst-case estimate

These are then combined to yield either a full probability distribution, for later combination with distributions obtained similarly for other variables, or summary descriptors of the distribution, such as the mean, standard deviation, or percentage points of the distribution. The accuracy attributed to the results deri ...
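The combination formulas are cut off in the text above, so the sketch below assumes two commonly used conventions rather than any specific method from the source: the triangular-distribution mean $(a + m + b)/3$, and the PERT (beta-approximation) mean $(a + 4m + b)/6$ with standard deviation $(b - a)/6$. It also assumes the estimated quantities are durations or costs, so the best case is the smallest value ($a \le m \le b$), and that tasks are independent when their estimates are combined. The task figures are hypothetical.

```python
import math

def triangular_mean(a: float, m: float, b: float) -> float:
    """Mean of a triangular distribution with minimum a, mode m and maximum b."""
    return (a + m + b) / 3

def pert_estimate(a: float, m: float, b: float) -> tuple[float, float]:
    """PERT-style (beta approximation) mean and standard deviation."""
    if not a <= m <= b:
        raise ValueError("expected a <= m <= b")
    return (a + 4 * m + b) / 6, (b - a) / 6

def combine(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Sum independent (mean, sd) estimates: means add and variances add."""
    total_mean = sum(mean for mean, _ in estimates)
    total_sd = math.sqrt(sum(sd ** 2 for _, sd in estimates))
    return total_mean, total_sd

# Hypothetical task durations in days: a = best case, m = most likely, b = worst case.
task_1 = pert_estimate(4.0, 6.0, 14.0)  # mean 7.0, sd about 1.67
task_2 = pert_estimate(2.0, 3.0, 4.0)   # mean 3.0, sd about 0.33
print(triangular_mean(4.0, 6.0, 14.0))  # 8.0
print(combine([task_1, task_2]))
```

Note how the two conventions give different means for the same three figures (8.0 versus 7.0 days), which is one reason the choice of approximating distribution matters.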

Information Systems

An information system (IS) is a formal, sociotechnical, organizational system designed to collect, process, store, and distribute information. From a sociotechnical perspective, information systems are composed of four components: task, people, structure (or roles), and technology. Information systems can be defined as an integration of components for the collection, storage, and processing of data, together with digital products that facilitate decision making; the data is used to provide information and contribute to knowledge. A computer information system is a system composed of people and computers that processes or interprets information. The term is also sometimes used simply to refer to a computer system with software installed. "Information systems" is also an academic field of study about systems, with specific reference to information and the complementary networks of computer hardware and software that people and organizations use to collect, filter, process, ...

Expected Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable $X$ is often denoted by $E(X)$, $E[X]$, or $EX$, with $E$ also often stylized as $\mathbb{E}$.

History

The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes in a fair way between two players, who have to e ...
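For a random variable with finitely many outcomes, the weighted-average definition $E[X] = \sum_i x_i \, p_i$ can be computed directly. The sketch below uses a fair six-sided die as an assumed example, with exact fractions to avoid rounding, and then checks the informal reading (the mean of many independent outcomes) with a seeded simulation.

```python
import math
import random
from fractions import Fraction

def expected_value(outcomes):
    """Weighted average of values, given (value, probability) pairs."""
    if not math.isclose(sum(p for _, p in outcomes), 1):
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in outcomes)

# A fair six-sided die: each face has probability 1/6.
die = [(face, Fraction(1, 6)) for face in range(1, 7)]
print(expected_value(die))  # 7/2

# Informal reading: the mean of many independent rolls approaches E[X].
rng = random.Random(0)
rolls = [rng.randint(1, 6) for _ in range(100_000)]
print(sum(rolls) / len(rolls))  # close to 3.5
```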

Program Evaluation And Review Technique

The program evaluation and review technique (PERT) is a statistical tool used in project management that was designed to analyze and represent the tasks involved in completing a given project. First developed by the United States Navy in 1958, it is commonly used in conjunction with the critical path method (CPM), which was introduced in 1957.

Overview

PERT is a method of analyzing the tasks involved in completing a given project, especially the time needed to complete each task, and of identifying the minimum time needed to complete the total project. It incorporates uncertainty by making it possible to schedule a project without knowing precisely the details and durations of all the activities. It is more an event-oriented technique than a start- and completion-oriented one, and it is used more in projects where time, rather than cost, is the major factor. It is applied to very large-scale, one-time, complex, non-routine infrastructure and to Research and Developmen ...
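As an illustration of identifying the minimum time needed to complete the total project, the sketch below takes a hypothetical four-task project, converts each task's optimistic, most likely, and pessimistic durations into the PERT expected time $t_e = (o + 4m + p)/6$, and then runs a CPM-style forward pass to find the longest (critical) path. This is a simplification under stated assumptions: it uses expected times only and ignores the variance along each path.

```python
from graphlib import TopologicalSorter

# Hypothetical project: task -> (optimistic, most likely, pessimistic, predecessors).
TASKS = {
    "A": (2, 4, 6, []),
    "B": (3, 5, 9, ["A"]),
    "C": (1, 2, 3, ["A"]),
    "D": (2, 3, 10, ["B", "C"]),
}

def expected_time(o, m, p):
    """PERT expected duration t_e = (o + 4m + p) / 6."""
    return (o + 4 * m + p) / 6

def critical_path(tasks):
    """Forward pass over the task graph; returns (project duration, critical path)."""
    graph = {name: spec[3] for name, spec in tasks.items()}
    finish, via = {}, {}
    for name in TopologicalSorter(graph).static_order():
        o, m, p, preds = tasks[name]
        # A task starts when its latest-finishing predecessor finishes.
        start, via[name] = 0.0, None
        for pred in preds:
            if finish[pred] > start:
                start, via[name] = finish[pred], pred
        finish[name] = start + expected_time(o, m, p)
    # Walk back from the task that finishes last to recover the critical path.
    last = max(finish, key=finish.get)
    duration, path = finish[last], []
    while last is not None:
        path.append(last)
        last = via[last]
    return duration, path[::-1]

duration, path = critical_path(TASKS)
print(round(duration, 2), path)  # 13.33 ['A', 'B', 'D']
```

Task C has slack here: it could slip by up to about 3.3 time units without delaying the project, which is the kind of information the critical-path analysis is meant to surface.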

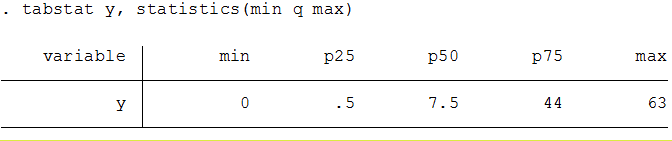

Seven-number Summary

In descriptive statistics, the seven-number summary is a collection of seven summary statistics and is an extension of the five-number summary. There are three similar, common forms. As with the five-number summary, it can be represented by a modified box plot, adding hatch marks on the "whiskers" for two of the additional numbers.

Seven-number summary

The following percentiles are (approximately) evenly spaced under a normally distributed variable:

1. the 2nd percentile (better: 2.15%)
2. the 9th percentile (better: 8.87%)
3. the 25th percentile, or lower quartile, or first quartile
4. the 50th percentile, or median (middle value, or second quartile)
5. the 75th percentile, or upper quartile, or third quartile
6. the 91st percentile (better: 91.13%)
7. the 98th percentile (better: 97.85%)

The middle three values (the lower quartile, median, and upper quartile) are the usual statistics from the five-number summary and are the standard values for the box in a ...
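The sketch below computes the seven values listed above from a sample and checks the "evenly spaced under a normal distribution" claim by converting each cumulative probability to a standard-normal z-score. The quantile rule (linear interpolation with $h = (n - 1)p$) is an assumption; several sample-quantile definitions exist and give slightly different answers on small samples.

```python
import math
from statistics import NormalDist

# Cumulative probabilities for the seven-number summary (the "better" values above).
SEVEN_POINTS = [0.0215, 0.0887, 0.25, 0.50, 0.75, 0.9113, 0.9785]

def quantile(sorted_data, p):
    """Sample quantile by linear interpolation between order statistics, h = (n - 1) * p."""
    h = (len(sorted_data) - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, len(sorted_data) - 1)
    return sorted_data[lo] + (h - lo) * (sorted_data[hi] - sorted_data[lo])

def seven_number_summary(data):
    xs = sorted(data)
    return [quantile(xs, p) for p in SEVEN_POINTS]

print(seven_number_summary(range(101)))  # approximately [2.15, 8.87, 25, 50, 75, 91.13, 97.85]

# Under a normal distribution the seven points sit at roughly equal z-score steps (about 0.67).
z = [NormalDist().inv_cdf(p) for p in SEVEN_POINTS]
print([round(b - a, 3) for a, b in zip(z, z[1:])])
```

The common step is the z-score of the upper quartile (about 0.6745), so the outer points lie at roughly two and three times that distance from the median.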

Five-number Summary

The five-number summary is a set of descriptive statistics that provides information about a dataset. It consists of the five most important sample percentiles:

1. the sample minimum (smallest observation)
2. the lower quartile or first quartile
3. the median (the middle value)
4. the upper quartile or third quartile
5. the sample maximum (largest observation)

In addition to the median of a single set of data, there are two related statistics called the upper and lower quartiles. If the data are placed in order, then the lower quartile is central to the lower half of the data and the upper quartile is central to the upper half of the data. These quartiles are used to calculate the interquartile range, which helps to describe the spread of the data and to determine whether any data points are outliers. For these statistics to exist, the observations must come from a univariate variable that can be measured on an ordinal, interval, or ratio scale.

Use and representa ...
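A minimal sketch of the five-number summary and the interquartile-range outlier check described above, using Python's standard `statistics.quantiles` with its `"inclusive"` method. Two assumptions should be flagged: quartile conventions differ between tools, and the outlier rule shown (Tukey's fences at 1.5 times the IQR beyond the quartiles) is a common convention rather than one stated in the text. The data are made up.

```python
from statistics import quantiles

def five_number_summary(data):
    """(minimum, lower quartile, median, upper quartile, maximum)."""
    q1, med, q3 = quantiles(data, n=4, method="inclusive")
    return min(data), q1, med, q3, max(data)

def tukey_outliers(data, k=1.5):
    """Points more than k * IQR below the lower quartile or above the upper quartile."""
    _, q1, _, q3, _ = five_number_summary(data)
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low_fence or x > high_fence]

data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]
print(five_number_summary(data))  # (1, 3.25, 5.5, 7.75, 100)
print(tukey_outliers(data))       # [100]
```

Note that the median and quartiles are unaffected by the extreme value 100, which is why they describe the spread more robustly than the range does.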

Asymptotic Distribution

In mathematics and statistics, an asymptotic distribution is a probability distribution that is in a sense the "limiting" distribution of a sequence of distributions. One of the main uses of the idea of an asymptotic distribution is in providing approximations to the cumulative distribution functions of statistical estimators.

Definition

A sequence of distributions corresponds to a sequence of random variables $Z_i$ for $i = 1, 2, \ldots$. In the simplest case, an asymptotic distribution exists if the probability distribution of $Z_i$ converges to a probability distribution (the asymptotic distribution) as $i$ increases: see convergence in distribution. A special case of an asymptotic distribution is when the sequence of random variables is always zero, or $Z_i = 0$ as $i$ approaches infinity. Here the asymptotic distribution is a degenerate distribution, corresponding to the value zero. However, the most usual sense in which the term asymptotic distribution is used ar ...
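To illustrate how an asymptotic distribution approximates the CDF of an estimator, the sketch below takes the sample mean of $n$ Uniform(0, 1) draws, standardizes it as $Z_n = \sqrt{n}(\bar{X}_n - \mu)/\sigma$, and compares its empirical CDF with the standard normal CDF, which the central limit theorem gives as the asymptotic distribution here. The choice of distribution, $n = 30$, the replication count, and the seed are all illustrative assumptions.

```python
import math
import random
from statistics import NormalDist, fmean

def standardized_means(n, reps, rng):
    """Z_n = sqrt(n) * (sample mean - mu) / sigma for Uniform(0, 1) samples of size n."""
    mu, sigma = 0.5, math.sqrt(1 / 12)
    return [math.sqrt(n) * (fmean(rng.random() for _ in range(n)) - mu) / sigma
            for _ in range(reps)]

rng = random.Random(42)
z = standardized_means(n=30, reps=20_000, rng=rng)

# Compare the empirical CDF of Z_n with the standard normal CDF at a few points.
for t in (-1.0, 0.0, 1.0):
    empirical = sum(v <= t for v in z) / len(z)
    print(t, round(empirical, 3), round(NormalDist().cdf(t), 3))
```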

Confidence Interval

In frequentist statistics, a confidence interval (CI) is a range of estimates for an unknown parameter. A confidence interval is computed at a designated confidence level; the 95% confidence level is most common, but other levels, such as 90% or 99%, are sometimes used. The confidence level represents the long-run proportion of corresponding CIs that contain the true value of the parameter. For example, out of all intervals computed at the 95% level, 95% of them should contain the parameter's true value. Factors affecting the width of the CI include the sample size, the variability in the sample, and the confidence level. All else being equal, a larger sample produces a narrower confidence interval, greater variability in the sample produces a wider confidence interval, and a higher confidence level produces a wider confidence interval.

Definition

Let $X = (X_1, \ldots, X_n)$ be a random sample from a probability distribution with statistical parameter $\theta$, which is a quantity to be esti ...
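The long-run interpretation of the confidence level can be checked by simulation. The sketch below assumes a large-sample normal-approximation interval for a mean, $\bar{x} \pm z_{(1+\gamma)/2}\, s/\sqrt{n}$ (using a z critical value instead of Student's t, which is reasonable only for fairly large $n$), repeatedly samples from a normal population with a known mean, and counts how often the interval covers it. Population parameters, sample size, and seed are assumptions.

```python
import random
from statistics import NormalDist, fmean, stdev

def mean_ci(sample, level=0.95):
    """Large-sample (normal-approximation) confidence interval for the mean."""
    z = NormalDist().inv_cdf((1 + level) / 2)
    half_width = z * stdev(sample) / len(sample) ** 0.5
    m = fmean(sample)
    return m - half_width, m + half_width

rng = random.Random(1)
true_mu, reps, hits = 10.0, 2000, 0
for _ in range(reps):
    sample = [rng.gauss(true_mu, 2.0) for _ in range(100)]
    lo, hi = mean_ci(sample)
    hits += lo <= true_mu <= hi

coverage = hits / reps
print(coverage)  # close to 0.95
```

For the same sample, raising the confidence level widens the interval, matching the qualitative statement in the text.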

Correlation

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the heights of parents and their offspring, and the correlation between the price of a good and the quantity consumers are willing to purchase, as depicted in the so-called demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. H ...
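Since "correlation" in statistics usually means linear association, a common measure is the Pearson correlation coefficient $r = \sum (x_i - \bar{x})(y_i - \bar{y}) / \sqrt{\sum (x_i - \bar{x})^2 \sum (y_i - \bar{y})^2}$. The sketch below computes it from scratch on small made-up data; the last example shows a perfect but non-linear dependence that yields $r = 0$.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2 * v + 1 for v in x]))     # 1.0  (increasing linear relation)
print(pearson_r(x, [10 - v for v in x]))        # -1.0 (decreasing linear relation)
print(pearson_r(x, [(v - 3) ** 2 for v in x]))  # 0.0  (dependent, but not linearly)
```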

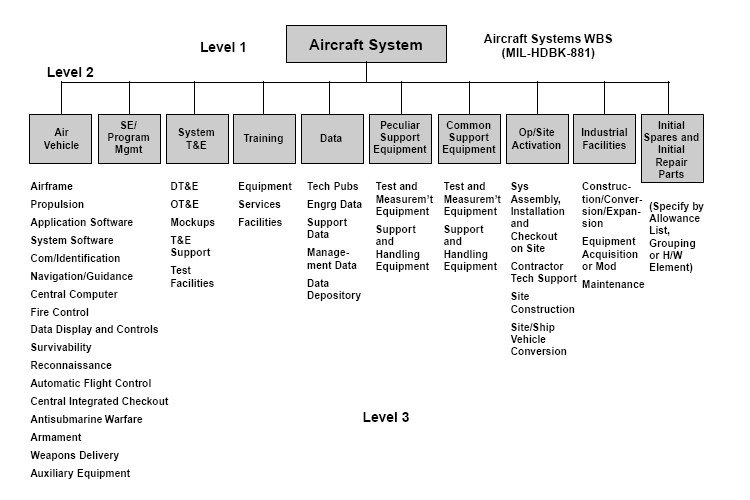

Work Breakdown Structure

A work breakdown structure (WBS) in project management and systems engineering is a deliverable-oriented breakdown of a project into smaller components. A work breakdown structure is a key project deliverable that organizes the team's work into manageable sections. The Project Management Body of Knowledge (PMBOK 5) defines the work breakdown structure as a "hierarchical decomposition of the total scope of work to be carried out by the project team to accomplish the project objectives and create the required deliverables." A work breakdown structure element may be a product, data, service, or any combination of these. A WBS also provides the necessary framework for detailed cost estimation and control while providing guidance for schedule development and control (Booz, Allen & Hamilton, Earned Value Management Tutorial Module 2: Work Breakdown Structure).
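Because a WBS is a hierarchical decomposition, it maps naturally onto a tree, and cost estimates attached to the lowest-level elements can be rolled up to every parent. The sketch below is one way to model that; the `WBSElement` class, the element codes, and all cost figures are hypothetical, and it assumes costs are estimated only at the leaves.

```python
from dataclasses import dataclass, field

@dataclass
class WBSElement:
    code: str
    name: str
    cost: float = 0.0  # estimated cost; used only for leaf elements (work packages)
    children: list["WBSElement"] = field(default_factory=list)

    def total_cost(self) -> float:
        """Leaf: its own estimate. Parent: the sum of its children's totals."""
        if not self.children:
            return self.cost
        return sum(child.total_cost() for child in self.children)

    def outline(self, depth: int = 0):
        """Yield an indented line per element with its rolled-up cost."""
        yield f"{'  ' * depth}{self.code} {self.name}: {self.total_cost():,.0f}"
        for child in self.children:
            yield from child.outline(depth + 1)

# Hypothetical product-oriented WBS with illustrative cost estimates.
project = WBSElement("1", "Bicycle", children=[
    WBSElement("1.1", "Frame set", children=[
        WBSElement("1.1.1", "Frame", 40_000),
        WBSElement("1.1.2", "Fork", 10_000),
    ]),
    WBSElement("1.2", "Wheels", 25_000),
    WBSElement("1.3", "Integration and test", 15_000),
])
print("\n".join(project.outline()))
```

Keeping estimates only at the leaves avoids double counting: every parent total is derived, so a change to one work package propagates automatically.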

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs, such as the calculation of ...
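Numerical integration is the simplest of the three problem classes to demonstrate. The sketch below estimates the deterministic integral $\int_0^1 x^2\,dx = 1/3$ as $(b - a)$ times the average of $f$ at uniformly random points; the integrand, sample count, and seed are illustrative assumptions.

```python
import random

def mc_integrate(f, a, b, n, rng):
    """Estimate the integral of f over [a, b] as (b - a) times the mean of f at n uniform points."""
    total = sum(f(rng.uniform(a, b)) for _ in range(n))
    return (b - a) * total / n

rng = random.Random(0)
estimate = mc_integrate(lambda x: x * x, 0.0, 1.0, 100_000, rng)
print(estimate)  # close to the exact value 1/3
```

The statistical error of this estimator shrinks roughly in proportion to $1/\sqrt{n}$, independent of dimension, which is why Monte Carlo integration becomes attractive for high-dimensional problems.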

Probability Distribution

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of different possible outcomes for an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, if $X$ is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of $X$ would take the value 0.5 (1 in 2 or 1/2) for $X = \text{heads}$, and 0.5 for $X = \text{tails}$ (assuming that the coin is fair). Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, the results of a survey to be conducted, etc.

Introduction

A probability distribution is a mathematical description of the probabilities of events, subsets of the sample space. The sample space, often denoted by $\Omega$, is the set of all possible outcomes of a ra ...
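For a finite sample space, a probability distribution can be represented as a mapping from each outcome to its probability, and the probability of an event (a subset of the sample space) is the sum over its outcomes. The sketch below encodes the fair-coin example from the text plus an assumed fair-die example, using exact fractions.

```python
from fractions import Fraction

# Discrete distributions: outcome (element of the sample space) -> probability.
coin = {"heads": Fraction(1, 2), "tails": Fraction(1, 2)}
die = {face: Fraction(1, 6) for face in range(1, 7)}

def is_distribution(pmf):
    """Probabilities must be non-negative and sum to 1 over the sample space."""
    return all(p >= 0 for p in pmf.values()) and sum(pmf.values()) == 1

def prob(pmf, event):
    """P(event) for an event given as a subset of the sample space."""
    return sum(pmf[outcome] for outcome in event)

print(prob(coin, {"heads"}))                   # 1/2
print(prob(die, {2, 4, 6}))                    # 1/2
print(prob(die, {o for o in die if o > 4}))    # 1/3
```

An outcome outside the sample space raises a `KeyError`, which is a simple way to reject events that are not subsets of it.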