Surrogate Data

Surrogate data, sometimes known as analogous data, usually refers to time series data that is produced using well-defined (linear) models, such as ARMA processes, that reproduce various statistical properties of a measured data set, such as its autocorrelation structure. The resulting surrogate data can then be used, for example, to test for non-linear structure in the empirical data (see surrogate data testing). Surrogate or analogous data may also refer to data used to supplement available data from which a mathematical model is built. Under this definition, it may be generated (i.e., synthetic data) or transformed from another source. Uses: Surrogate data is used in environmental and laboratory settings, when study data from one source is used to estimate characteristics of another source. For example, it has been used to model population trends in animal species. It can also be used to model biodiversity, as it would be difficult to gather actual data on all species in a given area. ...
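The idea of producing surrogate data from a linear model that reproduces the autocorrelation of a measured series can be sketched as follows. This is only an illustration under simplifying assumptions: it uses an AR(1) model (a special case of ARMA) fitted through the lag-1 autocorrelation, and the function names `lag1_autocorr` and `ar1_surrogate`, as well as the "measured" series, are hypothetical.

```python
import random
import statistics


def lag1_autocorr(x):
    """Sample lag-1 autocorrelation of a sequence."""
    m = statistics.fmean(x)
    num = sum((a - m) * (b - m) for a, b in zip(x[:-1], x[1:]))
    den = sum((a - m) ** 2 for a in x)
    return num / den


def ar1_surrogate(x, rng):
    """Simulate an AR(1) series with the mean, variance and lag-1 autocorrelation of x."""
    m = statistics.fmean(x)
    var = statistics.pvariance(x)
    phi = lag1_autocorr(x)
    # Innovation standard deviation that yields the target stationary variance.
    noise_sd = (var * (1.0 - phi ** 2)) ** 0.5
    s = [rng.gauss(0.0, var ** 0.5)]  # start in the stationary distribution
    for _ in range(len(x) - 1):
        s.append(phi * s[-1] + rng.gauss(0.0, noise_sd))
    return [m + v for v in s]


rng = random.Random(0)

# Hypothetical "measured" series: an AR(1) process with coefficient 0.7.
measured = [0.0]
for _ in range(1999):
    measured.append(0.7 * measured[-1] + rng.gauss(0.0, 1.0))

surrogate = ar1_surrogate(measured, rng)
print(round(lag1_autocorr(measured), 2), round(lag1_autocorr(surrogate), 2))
```

The two printed values should be close to each other, since the surrogate is drawn from a model fitted to the measured series; it shares the linear correlation structure but is otherwise an independent realization.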

Time Series

In mathematics, a time series is a series of data points indexed (or listed or graphed) in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Thus it is a sequence of discrete-time data. Examples of time series are heights of ocean tides, counts of sunspots, and the daily closing value of the Dow Jones Industrial Average. A time series is very frequently plotted via a run chart (which is a temporal line chart). Time series are used in statistics, signal processing, pattern recognition, econometrics, mathematical finance, weather forecasting, earthquake prediction, electroencephalography, control engineering, astronomy, communications engineering, and largely in any domain of applied science and engineering which involves temporal measurements. Time series analysis comprises methods for analyzing time series data in order to extract meaningful statistics and other characteristics of the data. Time series forecasting ...

Autoregressive–moving-average Model

In the statistical analysis of time series, autoregressive–moving-average (ARMA) models provide a parsimonious description of a (weakly) stationary stochastic process in terms of two polynomials, one for the autoregression (AR) and the second for the moving average (MA). The general ARMA model was described in the 1951 thesis of Peter Whittle, Hypothesis testing in time series analysis, and it was popularized in the 1970 book by George E. P. Box and Gwilym Jenkins. Given a time series of data X_t, the ARMA model is a tool for understanding and, perhaps, predicting future values in this series. The AR part involves regressing the variable on its own lagged (i.e., past) values. The MA part involves modeling the error term as a linear combination of error terms occurring contemporaneously and at various times in the past. The model is usually referred to as the ARMA(p, q) model where p is the order of the AR part and q is the order of the MA part (as defined b ...
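A minimal simulation sketch may help make the AR and MA parts concrete. It assumes the common convention X_t = φ_1 X_{t-1} + ... + φ_p X_{t-p} + ε_t + θ_1 ε_{t-1} + ... + θ_q ε_{t-q} with standard Gaussian noise. The function name `simulate_arma`, the burn-in length and the example coefficients are illustrative choices, not part of the description above.

```python
import random


def simulate_arma(phi, theta, n, rng, burn_in=200):
    """Simulate n values of an ARMA(p, q) process with unit-variance Gaussian noise.

    phi[i] multiplies X_{t-1-i} (AR part); theta[j] multiplies eps_{t-1-j} (MA part).
    """
    p, q = len(phi), len(theta)
    x, eps = [], []
    for _ in range(n + burn_in):
        e = rng.gauss(0.0, 1.0)
        # AR part: regression on the variable's own past values.
        ar = sum(phi[i] * x[-1 - i] for i in range(min(p, len(x))))
        # MA part: linear combination of past error terms.
        ma = sum(theta[j] * eps[-1 - j] for j in range(min(q, len(eps))))
        x.append(ar + e + ma)
        eps.append(e)
    return x[burn_in:]  # drop the start-up transient


# Example: an ARMA(1, 1) series with assumed coefficients phi_1 = 0.5, theta_1 = 0.4.
series = simulate_arma([0.5], [0.4], 500, random.Random(1))
print(len(series))
```

The burn-in discards early values that still depend on the arbitrary empty starting state, so the returned values are approximately draws from the stationary process.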

Autocorrelation

Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Unit root processes, trend-stationary processes, autoregressive processes, and moving average processes are specific forms of processes with autocorrelation. A ...
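As a sketch of how autocorrelation can reveal a periodic signal obscured by noise, the following computes one common sample estimate: the autocovariance at each lag normalized by the lag-0 value. As noted above, other fields use other definitions. The function name `autocorrelation` and the noisy sine example (period 20) are assumptions made for illustration.

```python
import math
import random


def autocorrelation(x, max_lag):
    """Sample autocorrelation of x for lags 0..max_lag (normalized so lag 0 equals 1)."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev)
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
        for k in range(max_lag + 1)
    ]


rng = random.Random(0)
# Hypothetical signal: a sine wave with period 20 plus Gaussian noise.
signal = [math.sin(2 * math.pi * t / 20) + rng.gauss(0.0, 0.5) for t in range(400)]
acf = autocorrelation(signal, 40)

# The autocorrelation is positive near one full period and negative near half a period.
print(round(acf[10], 2), round(acf[20], 2))
```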

Surrogate Data Testing

Surrogate data testing (or the method of surrogate data) is a statistical proof-by-contradiction technique similar to permutation tests and, as a resampling technique, related to (but different from) parametric bootstrapping. It is used to detect non-linearity in a time series. The technique involves specifying a null hypothesis H_0 describing a linear process and then generating several surrogate data sets according to H_0 using Monte Carlo methods. A discriminating statistic is then calculated for the original time series and for each of the surrogate sets. If the value of the statistic is significantly different for the original series than for the surrogate sets, the null hypothesis is rejected and non-linearity is assumed. The particular surrogate data testing method to be used is directly related to the null hypothesis. Usually this is similar to the following: The data is a realization of a stationary linear system, whose output has been possibly measured by a monotonicall ...
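The procedure can be sketched in a few steps: generate surrogates under H_0, compute a discriminating statistic for the original and for each surrogate, and compare. The example below is a sketch under stated assumptions, not a definitive implementation. It uses Fourier phase randomization, which corresponds to the simpler null hypothesis of a stationary linear Gaussian process and does not model a measurement function. The discriminating statistic (a time-reversal asymmetry measure), all function names and the logistic-map test series are illustrative choices. The naive DFT keeps the code dependency-free and is only suitable for short series.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def inverse_dft_real(X):
    """Real part of the inverse DFT."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)).real / n
            for t in range(n)]


def phase_randomized_surrogate(x, rng):
    """Surrogate with the same amplitude spectrum as x but random Fourier phases."""
    n = len(x)
    X = dft(x)
    Y = list(X)  # X[0] (and X[n // 2] for even n) are left unchanged
    for k in range(1, (n - 1) // 2 + 1):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        Y[k] = abs(X[k]) * cmath.exp(1j * phase)
        Y[n - k] = Y[k].conjugate()  # conjugate symmetry keeps the result real
    return inverse_dft_real(Y)


def time_reversal_asymmetry(x):
    """Mean cubed increment; close to zero for time-reversible (e.g. linear Gaussian) data."""
    return sum((b - a) ** 3 for a, b in zip(x[:-1], x[1:])) / (len(x) - 1)


def surrogate_test(x, n_surrogates, rng):
    """Monte Carlo p-value: share of surrogates at least as extreme as the original."""
    observed = abs(time_reversal_asymmetry(x))
    count = sum(
        abs(time_reversal_asymmetry(phase_randomized_surrogate(x, rng))) >= observed
        for _ in range(n_surrogates)
    )
    return (count + 1) / (n_surrogates + 1)


# Hypothetical test series: a logistic map, which is deterministic and non-linear.
series = [0.3]
for _ in range(127):
    series.append(3.9 * series[-1] * (1.0 - series[-1]))

p_value = surrogate_test(series, 39, random.Random(0))
print(p_value)
```

A small p-value indicates that the statistic of the original series is unusual among the surrogates, which is evidence against the linear null hypothesis. With 39 surrogates the smallest attainable value is 1/40.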

Mathematical Model

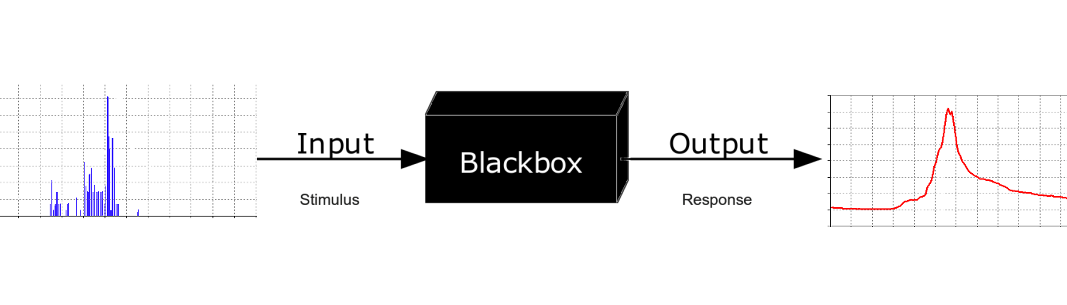

A mathematical model is a description of a system using mathematical concepts and language. The process of developing a mathematical model is termed mathematical modeling. Mathematical models are used in the natural sciences (such as physics, biology, earth science, chemistry) and engineering disciplines (such as computer science, electrical engineering), as well as in non-physical systems such as the social sciences (such as economics, psychology, sociology, political science). The use of mathematical models to solve problems in business or military operations is a large part of the field of operations research. Mathematical models are also used in music, linguistics, and philosophy (for example, intensively in analytic philosophy). A model may help to explain a system, to study the effects of different components, and to make predictions about behavior. Elements of a mathematical model: Mathematical models can take many forms, including dynamical systems, statisti ...

Synthetic Data

Synthetic data is information that is artificially generated rather than produced by real-world events. Typically created using algorithms, synthetic data can be deployed to validate mathematical models and to train machine learning models. Data generated by a computer simulation can be seen as synthetic data. This encompasses most applications of physical modeling, such as music synthesizers or flight simulators. The output of such systems approximates the real thing, but is fully algorithmically generated. Synthetic data is used in a variety of fields as a filter for information that would otherwise compromise the confidentiality of particular aspects of the data. In many sensitive applications, datasets theoretically exist but cannot be released to the general public; synthetic data sidesteps the privacy issues that arise from using real consumer information without permission or compensation. Usefulness: Synthetic data is generated to meet specific needs or certain conditions ...

Bootstrapping (statistics)

Bootstrapping is any test or metric that uses random sampling with replacement (e.g. mimicking the sampling process), and falls under the broader class of resampling methods. Bootstrapping assigns measures of accuracy (bias, variance, confidence intervals, prediction error, etc.) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Bootstrapping estimates the properties of an estimand (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an a ...
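A minimal sketch of random sampling with replacement, here used to estimate the standard error of a sample mean. The function name `bootstrap_standard_error`, the number of resamples and the simulated sample are assumptions for illustration only.

```python
import random
import statistics


def bootstrap_standard_error(sample, statistic, n_resamples, rng):
    """Estimate the standard error of `statistic` by resampling with replacement."""
    n = len(sample)
    estimates = [
        statistic(rng.choices(sample, k=n))  # one bootstrap resample of size n
        for _ in range(n_resamples)
    ]
    return statistics.stdev(estimates)


rng = random.Random(0)
# Hypothetical sample: 100 draws from a normal distribution with mean 10 and sd 2.
sample = [rng.gauss(10.0, 2.0) for _ in range(100)]

se = bootstrap_standard_error(sample, statistics.fmean, 1000, rng)
print(round(se, 3))
```

For the mean, the result can be compared with the textbook value s / sqrt(n), which is roughly 0.2 for this sample. The same function applies unchanged to statistics such as the median, for which no simple formula is available.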

Jackknife Resampling

In statistics, the jackknife (jackknife cross-validation) is a cross-validation technique and, therefore, a form of resampling. It is especially useful for bias and variance estimation. The jackknife pre-dates other common resampling methods such as the bootstrap. Given a sample of size n, a jackknife estimator can be built by aggregating the parameter estimates from each subsample of size (n-1) obtained by omitting one observation. The jackknife technique was developed by Maurice Quenouille (1924–1973) from 1949 and refined in 1956. John Tukey expanded on the technique in 1958 and proposed the name "jackknife" because, like a physical jack-knife (a compact folding knife), it is a rough-and-ready tool that can improvise a solution for a variety of problems even though specific problems may be more efficiently solved with a purpose-designed tool. The jackknife is a linear approximation of the bootstrap. A simple example: mean estimation. The jackknife estimator of a param ...
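The leave-one-out construction can be sketched as follows. The code uses the standard jackknife formulas for the bias-corrected estimate and the standard error; the function name `jackknife` and the toy data are illustrative assumptions.

```python
import math
import statistics


def jackknife(sample, statistic):
    """Return (bias-corrected estimate, standard error) from leave-one-out subsamples."""
    n = len(sample)
    full = statistic(sample)
    # One estimate per subsample of size n - 1, each omitting a single observation.
    leave_one_out = [statistic(sample[:i] + sample[i + 1:]) for i in range(n)]
    loo_mean = sum(leave_one_out) / n
    bias_corrected = n * full - (n - 1) * loo_mean
    std_error = math.sqrt((n - 1) / n * sum((v - loo_mean) ** 2 for v in leave_one_out))
    return bias_corrected, std_error


data = [1.0, 2.0, 3.0, 4.0, 5.0]
estimate, std_error = jackknife(data, statistics.fmean)
print(estimate, round(std_error, 4))
```

For the sample mean, the bias-corrected estimate equals the ordinary mean and the jackknife standard error coincides with s / sqrt(n), which makes the mean a convenient sanity check before applying the function to less tractable statistics.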

Statistical Data Types

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An experim ...