Surrogate Data Testing

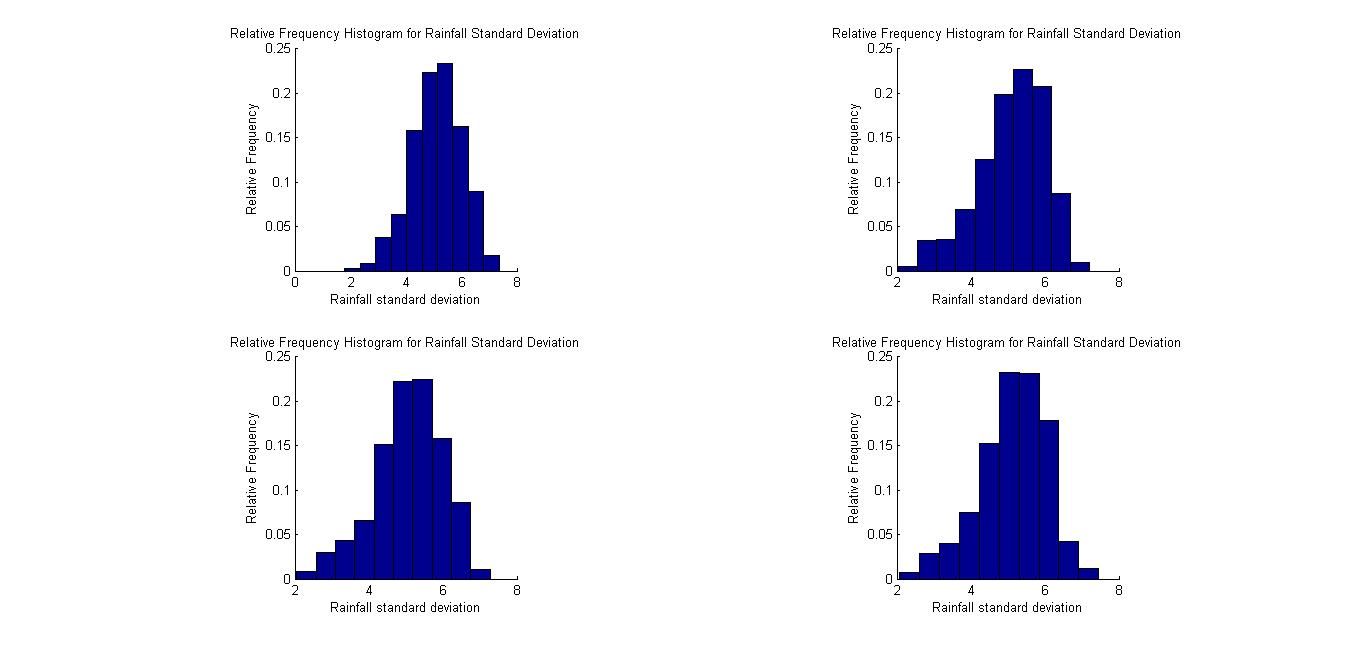

Surrogate data testing (or the ''method of surrogate data'') is a statistical proof-by-contradiction technique. It is similar to permutation tests and, as a resampling technique, related to (but different from) parametric bootstrapping. It is used to detect non-linearity in a time series. The technique involves specifying a null hypothesis H_0 that describes a linear process and then generating several surrogate data sets according to H_0 using Monte Carlo methods. A discriminating statistic is then calculated for the original time series and for each of the surrogate data sets. If the value of the statistic for the original series differs significantly from its values for the surrogates, the null hypothesis is rejected and non-linearity is assumed. The particular surrogate data testing method to use is directly related to the null hypothesis. Usually this is similar to the following: ''The data is a realization of a stationary linear system, whose output has been possibly measured by a monotonicall ...
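
The procedure described above (generate surrogates under H_0, compute a discriminating statistic, compare) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method of any particular paper: the surrogates are produced by Fourier phase randomization, the statistic is a simple time-reversal asymmetry measure, and the p-value is rank-based. The function names and the logistic-map input series are hypothetical choices made for this example.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (adequate for short series)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
                for k in range(n)) / n
            for t in range(n)]


def phase_randomized_surrogate(x, rng):
    """Assumed surrogate generator: keep Fourier amplitudes, redraw phases."""
    n = len(x)
    spectrum = dft(x)
    new = list(spectrum)  # bin 0 (the mean) and the Nyquist bin stay unchanged
    for k in range(1, (n - 1) // 2 + 1):
        phase = rng.uniform(0.0, 2.0 * math.pi)
        new[k] = abs(spectrum[k]) * cmath.exp(1j * phase)
        new[n - k] = new[k].conjugate()  # Hermitian symmetry gives real output
    return [v.real for v in idft(new)]


def time_reversal_asymmetry(x):
    """Assumed discriminating statistic: mean cubed first difference."""
    diffs = [b - a for a, b in zip(x, x[1:])]
    return sum(d ** 3 for d in diffs) / len(diffs)


def surrogate_test(x, n_surrogates=39, seed=0):
    """Rank-based two-sided p-value of the statistic against the surrogates."""
    rng = random.Random(seed)
    observed = abs(time_reversal_asymmetry(x))
    surrogate_stats = [
        abs(time_reversal_asymmetry(phase_randomized_surrogate(x, rng)))
        for _ in range(n_surrogates)
    ]
    exceed = sum(s >= observed for s in surrogate_stats)
    return (exceed + 1) / (n_surrogates + 1)


def logistic_map_series(n, x0=0.4):
    """Deterministic non-linear example input (hypothetical test series)."""
    series, x = [], x0
    for _ in range(n):
        series.append(x)
        x = 4.0 * x * (1.0 - x)
    return series


series = logistic_map_series(64)
p_value = surrogate_test(series)
print(f"rank-based p-value: {p_value:.3f}")
```

A small p-value means the original series looks unusual compared with surrogates that share its amplitude spectrum, which is evidence against this particular linear null hypothesis. A different null hypothesis would call for a different surrogate generator.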

Proof By Contradiction

In logic and mathematics, proof by contradiction is a form of proof that establishes the truth or the validity of a proposition by showing that assuming the proposition to be false leads to a contradiction. Proof by contradiction is also known as indirect proof, proof by assuming the opposite, and ''reductio ad impossibile''. It is an example of the weaker logical refutation ''reductio ad absurdum''. A mathematical proof employing proof by contradiction usually proceeds as follows:

1. The proposition to be proved is ''P''.
2. We assume ''P'' to be false, i.e., we assume ''¬P''.
3. It is then shown that ''¬P'' implies falsehood. This is typically accomplished by deriving two mutually contradictory assertions, ''Q'' and ''¬Q'', and appealing to the Law of noncontradiction.
4. Since assuming ''P'' to be false leads to a contradiction, it is concluded that ''P'' is in fact true.

An important special case is the existence proof by contradiction: in order to demonstrate the existence ...

Permutation Test

A permutation test (also called re-randomization test) is an exact statistical hypothesis test that makes use of proof by contradiction. A permutation test involves two or more samples. The null hypothesis is that all samples come from the same distribution, H_0: F=G. Under the null hypothesis, the distribution of the test statistic is obtained by calculating its value for every possible rearrangement of the observed data. Permutation tests are, therefore, a form of resampling. Permutation tests can be understood as surrogate data testing where the surrogate data under the null hypothesis are obtained through permutations of the original data. In other words, the method by which treatments are allocated to subjects in an experimental design is mirrored in the analysis of that design. If the labels are exchangeable under the null hypothesis, then the resulting tests yield exact significance levels; see also exchangeability. Confidence intervals can th ...
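
As an illustration of "calculating all possible values of the test statistic under possible rearrangements", the following sketch enumerates every relabeling of two small samples. The choice of statistic (absolute difference of means) and the function name are assumptions made for this example; the text does not prescribe them.

```python
from itertools import combinations


def exact_permutation_test(sample_a, sample_b):
    """Two-sided exact permutation p-value for a difference in means."""
    pooled = list(sample_a) + list(sample_b)
    n_total, n_a = len(pooled), len(sample_a)

    def mean(values):
        return sum(values) / len(values)

    observed = abs(mean(sample_a) - mean(sample_b))
    at_least_as_extreme = 0
    total = 0
    # Every way of choosing which pooled observations get label "A".
    for indices in combinations(range(n_total), n_a):
        chosen = set(indices)
        group_a = [pooled[i] for i in indices]
        group_b = [pooled[i] for i in range(n_total) if i not in chosen]
        statistic = abs(mean(group_a) - mean(group_b))
        total += 1
        # Small tolerance so float rounding does not drop ties.
        if statistic >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / total


p_value = exact_permutation_test([1, 2, 3], [7, 8, 9])
print(p_value)  # 2 of the 20 relabelings are as extreme as the data: 0.1
```

Full enumeration is only feasible for small samples; for larger ones, a random subset of relabelings is commonly drawn instead, which turns the exact test into a Monte Carlo approximation.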

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are:

1. Permutation tests (also re-randomization tests)
2. Bootstrapping
3. Cross validation

Permutation tests

Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis.

Bootstrap

Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle, Logan, J. David and Wolesensky, Willian R. Mathematical methods in biology. Pure and Applied Mathematics: a Wiley-interscience Series of Texts, M ...
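
The bootstrap idea of sampling with replacement from the original sample can be sketched as follows. This is a minimal example, assuming the goal is a standard error for some estimator; the function name, the default of 2000 resamples, and the example data are hypothetical.

```python
import random
import statistics


def bootstrap_standard_error(sample, estimator=statistics.mean,
                             n_resamples=2000, seed=0):
    """Estimate the standard error of `estimator` by resampling `sample`."""
    rng = random.Random(seed)
    n = len(sample)
    # Each resample has the same size as the data and is drawn with replacement.
    estimates = [estimator(rng.choices(sample, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(estimates)


data = [float(v) for v in range(1, 21)]
print(bootstrap_standard_error(data))
print(bootstrap_standard_error(data, estimator=statistics.median))
```

Passing a different `estimator` (for example `statistics.median`) reuses the same resampling loop, which is the main practical appeal of the approach.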

Chaos, Solitons & Fractals

Elsevier is a Dutch academic publishing company specializing in scientific, technical, and medical content. Its products include journals such as ''The Lancet'', ''Cell'', the ScienceDirect collection of electronic journals, ''Trends'', the ''Current Opinion'' series, the online citation database Scopus, the SciVal tool for measuring research performance, the ClinicalKey search engine for clinicians, and the ClinicalPath evidence-based cancer care service. Elsevier's products and services also include digital tools for data management, instruction, research analytics and assessment. Elsevier is part of the RELX Group (known until 2015 as Reed Elsevier), a publicly traded company. According to RELX reports, in 2021 Elsevier published more than 600,000 articles annually in over 2,700 journals; as of 2018 its archives contained over 17 million documents and 40,000 e-books, with over one billion annual downloads. Researchers have criticized Elsevier for its high profit ma ...

Fourier Transform

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly, functions of time or space are transformed, and the output is a function of temporal frequency or spatial frequency, respectively. That process is also called ''analysis''. An example application would be decomposing the waveform of a musical chord into the intensities of its constituent pitches. The term ''Fourier transform'' refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid wi ...
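
The chord example can be mimicked with sampled data. The sketch below uses the discrete Fourier transform, the sampled analogue of the transform described above, on a hypothetical two-tone signal; the signal length, the tone frequencies and the naive O(n^2) implementation are assumptions chosen for readability.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(signal)
    return [sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n))
            for k in range(n)]


n = 32
# Two "pitches": 4 cycles per window at amplitude 1, 9 cycles at amplitude 0.5.
signal = [math.cos(2 * math.pi * 4 * t / n) + 0.5 * math.cos(2 * math.pi * 9 * t / n)
          for t in range(n)]

magnitudes = [abs(c) for c in dft(signal)]
# A real cosine of amplitude A shows up as magnitude A * n / 2 in its bin.
amplitudes = {k: 2 * magnitudes[k] / n for k in range(1, n // 2) if magnitudes[k] > 1e-6}
print(amplitudes)
```

Only bins 4 and 9 carry noticeable magnitude, and rescaling by 2/n recovers the amplitudes of the two tones.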

Periodogram

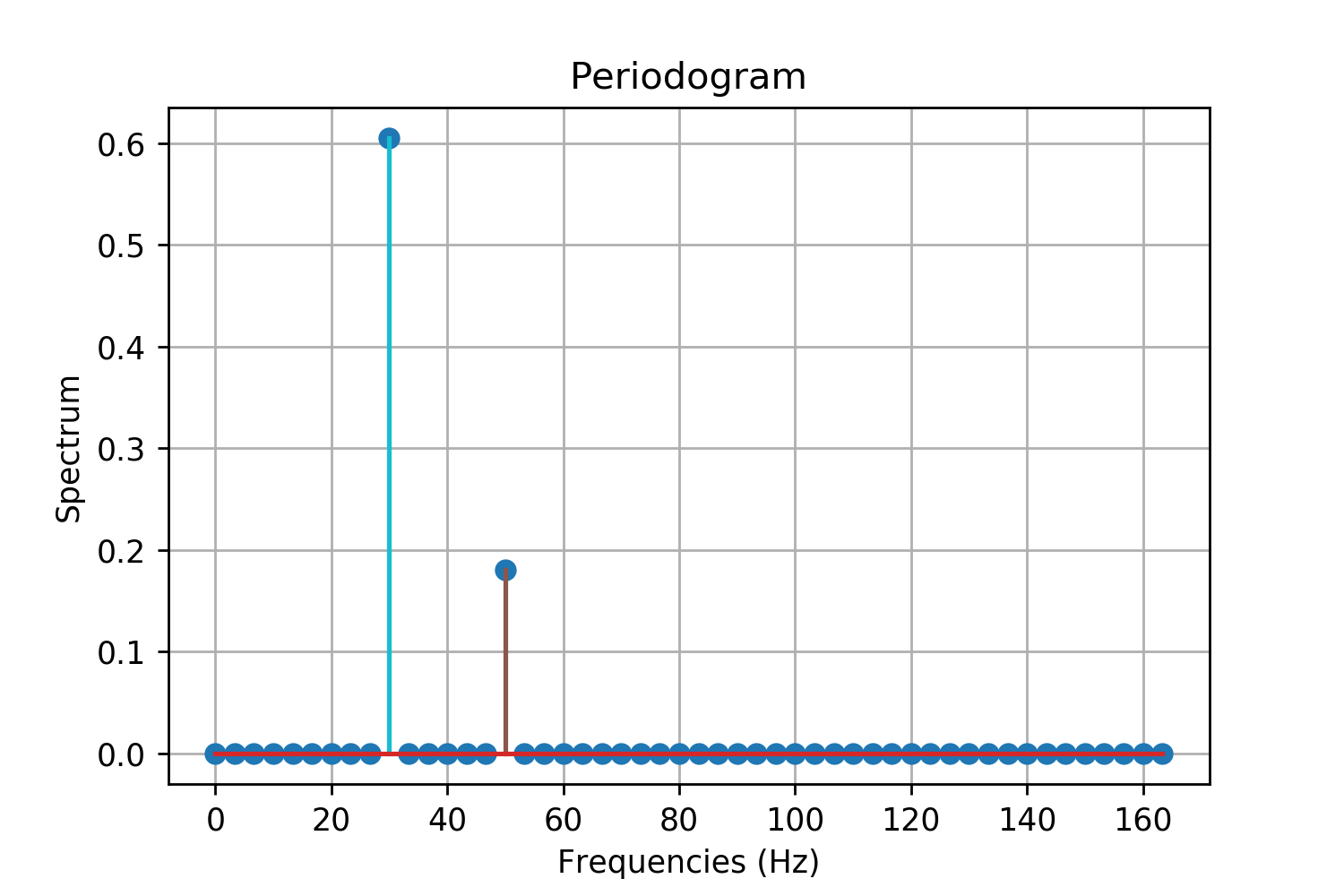

In signal processing, a periodogram is an estimate of the spectral density of a signal. The term was coined by Arthur Schuster in 1898. Today, the periodogram is a component of more sophisticated methods (see spectral estimation). It is the most common tool for examining the amplitude vs frequency characteristics of FIR filters and window functions. FFT spectrum analyzers are also implemented as a time-sequence of periodograms.

Definition

There are at least two different definitions in use today. One of them involves time-averaging, and one does not. Time-averaging is also the purview of other articles (Bartlett's method and Welch's method). This article is not about time-averaging. The definition of interest here is that the power spectral density of a continuous function, x(t), is the Fourier transform of its auto-correlation function (see Cross-correlation theorem, Spectral density (section Power spectral density), and Wiener–Khinchin theorem):

:\mathcal{F}\{x(t) \circledast x^*(-t)\} = X(f)\cdot X^ ...
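
A discrete, finite-length analogue of this definition can be checked numerically. The sketch below is an illustration under assumptions, not the article's definition: it uses the DFT, a circular autocorrelation, and a 1/n scaling for both quantities (scaling conventions for the periodogram vary between texts). All names are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def periodogram(x):
    """Squared DFT magnitude, scaled by 1/n (one of several conventions)."""
    n = len(x)
    return [abs(c) ** 2 / n for c in dft(x)]


def circular_autocorrelation(x):
    """Autocorrelation of a real sequence with wrap-around, scaled by 1/n."""
    n = len(x)
    return [sum(x[t] * x[(t + lag) % n] for t in range(n)) / n
            for lag in range(n)]


signal = [math.sin(2 * math.pi * 3 * t / 16) + 0.5 for t in range(16)]

power = periodogram(signal)
# Transforming the autocorrelation gives the same numbers (up to rounding).
power_from_acf = [c.real for c in dft(circular_autocorrelation(signal))]

largest_gap = max(abs(a - b) for a, b in zip(power, power_from_acf))
print(largest_gap)
```

The printed gap is at the level of floating-point rounding, which is the discrete counterpart of the statement that the power spectrum is the Fourier transform of the autocorrelation.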

Autocorrelation Function

Autocorrelation, sometimes known as serial correlation in the discrete time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time domain signals. Different fields of study define autocorrelation differently, and not all of these definitions are equivalent. In some fields, the term is used interchangeably with autocovariance. Unit root processes, trend-stationary processes, autoregressive processes, and moving average processes are specific forms of processes with autocorrelation. Au ...
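
To illustrate finding a periodic signal obscured by noise, the sketch below computes a sample autocorrelation function and looks for the lag with the strongest correlation. Since definitions differ between fields, the normalization used here (mean removed, divided by the lag-0 sum of squares) is an assumption, as are the function name and the synthetic signal.

```python
import math
import random


def autocorrelation(x, max_lag):
    """Sample autocorrelation for lags 0..max_lag (mean removed, lag 0 = 1)."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    total = sum(c * c for c in centered)
    return [sum(centered[t] * centered[t + lag] for t in range(n - lag)) / total
            for lag in range(max_lag + 1)]


rng = random.Random(0)
period = 10
# Hypothetical data: a sinusoid with period 10 plus Gaussian noise.
noisy = [math.sin(2 * math.pi * t / period) + rng.gauss(0.0, 0.3)
         for t in range(200)]

acf = autocorrelation(noisy, max_lag=15)
best_lag = max(range(5, 16), key=lambda lag: acf[lag])
print(best_lag)
```

The search starts at lag 5 to skip the trivially high correlations at very short lags; the lag that maximizes the autocorrelation is then an estimate of the period.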

Monte Carlo Method

Monte Carlo methods, or Monte Carlo experiments, are a broad class of computational algorithms that rely on repeated random sampling to obtain numerical results. The underlying concept is to use randomness to solve problems that might be deterministic in principle. They are often used in physical and mathematical problems and are most useful when it is difficult or impossible to use other approaches. Monte Carlo methods are mainly used in three problem classes: optimization, numerical integration, and generating draws from a probability distribution. In physics-related problems, Monte Carlo methods are useful for simulating systems with many coupled degrees of freedom, such as fluids, disordered materials, strongly coupled solids, and cellular structures (see cellular Potts model, interacting particle systems, McKean–Vlasov processes, kinetic models of gases). Other examples include modeling phenomena with significant uncertainty in inputs such as the calculation of ...
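
Numerical integration is the easiest of the three problem classes to demonstrate. The sketch below estimates π, a deterministic quantity, by random sampling: the fraction of uniformly drawn points in the unit square that fall inside the quarter circle approximates its area, π/4. The function name, sample size and seed are arbitrary choices for this example.

```python
import math
import random


def estimate_pi(n_points, seed=0):
    """Monte Carlo estimate of pi from points drawn uniformly in [0, 1)^2."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(n_points):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            inside += 1
    # inside / n_points approximates the quarter-circle area, pi / 4.
    return 4.0 * inside / n_points


pi_estimate = estimate_pi(100_000)
print(pi_estimate, abs(pi_estimate - math.pi))
```

The error of such an estimate shrinks roughly like one over the square root of the number of points, so each extra digit of accuracy costs about a hundred times more samples.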

Surrogate Data

Surrogate data, sometimes known as analogous data, usually refers to time series data that is produced using well-defined (linear) models, such as ARMA processes, that reproduce various statistical properties of a measured data set, such as its autocorrelation structure. The resulting surrogate data can then be used, for example, to test for non-linear structure in the empirical data; see surrogate data testing. Surrogate or analogous data may also refer to data used to supplement available data from which a mathematical model is built. Under this definition, it may be generated (i.e., synthetic data) or transformed from another source.

Uses

Surrogate data is used in environmental and laboratory settings, when study data from one source is used in estimation of characteristics of another source. For example, it has been used to model population trends in animal species. It can also be used to model biodiversity, as it would be difficult to gather actual data on all species in a given area. ...

Linear Model

In statistics, the term linear model is used in different ways according to the context. The most common occurrence is in connection with regression models, and the term is often taken as synonymous with linear regression model. However, the term is also used in time series analysis with a different meaning. In each case, the designation "linear" is used to identify a subclass of models for which substantial reduction in the complexity of the related statistical theory is possible.

Linear regression models

For the regression case, the statistical model is as follows. Given a (random) sample (Y_i, X_{i1}, \ldots, X_{ip}), \, i = 1, \ldots, n, the relation between the observations Y_i and the independent variables X_{ij} is formulated as

:Y_i = \beta_0 + \beta_1 \phi_1(X_{i1}) + \cdots + \beta_p \phi_p(X_{ip}) + \varepsilon_i \qquad i = 1, \ldots, n

where \phi_1, \ldots, \phi_p may be nonlinear functions. In the above, the quantities \varepsilon_i are random variables representing errors in ...
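
The point that the functions \phi may be nonlinear while the model stays linear in the coefficients can be seen in a small least-squares fit. The sketch below covers only the single-regressor case (p = 1) with the closed-form ordinary least squares solution; the function name, the choice \phi(x) = x^2 and the noise-free data are assumptions made for illustration.

```python
def fit_linear_model(xs, ys, phi):
    """Least-squares fit of y = beta0 + beta1 * phi(x) for one regressor."""
    z = [phi(x) for x in xs]  # transformed regressor; the model is linear in z
    n = len(z)
    z_mean = sum(z) / n
    y_mean = sum(ys) / n
    covariance = sum((zi - z_mean) * (yi - y_mean) for zi, yi in zip(z, ys))
    variance = sum((zi - z_mean) ** 2 for zi in z)
    beta1 = covariance / variance
    beta0 = y_mean - beta1 * z_mean
    return beta0, beta1


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 + 3.0 * x ** 2 for x in xs]  # hypothetical data without noise

beta0, beta1 = fit_linear_model(xs, ys, phi=lambda x: x ** 2)
print(beta0, beta1)
```

Although y is a quadratic function of x, the fit is an ordinary linear regression on the transformed variable, which is why the usual linear theory still applies.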