
Page's Trend Test

In statistics, the Page test for multiple comparisons between ordered correlated variables is the counterpart of Spearman's rank correlation coefficient, which summarizes the association of continuous variables. It is also known as Page's trend test or Page's L test. It is a repeated-measures trend test. The Page test is useful where:

* there are three or more conditions,
* a number of subjects (or other randomly sampled entities) are all observed in each of them, and
* we predict that the observations will have a particular order.

For example, a number of subjects might each be given three trials at the same task, and we predict that performance will improve from trial to trial. A test of the significance of the trend between conditions in this situation was developed by Ellis Batten Page (1963). More formally, the test considers the null hypothesis that, for n conditions, where m_i is a measure of the central tendency of the i-th condition, m_1 = m_2 = m_3 = ...
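As a rough illustration of the setup described above, the sketch below computes Page's L statistic for a small made-up data set. It assumes the usual definition of L as the sum, over conditions, of the predicted position of a condition times its rank sum, and it assumes the columns are already arranged in the predicted increasing order. The helper names `row_ranks` and `page_l` and the `scores` table are hypothetical; the significance of L is not evaluated here.

```python
def row_ranks(values):
    """Rank one subject's observations from 1 (smallest), averaging tied ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average = (start + end) / 2 + 1  # mean of the 1-based positions in the run
        for position in range(start, end + 1):
            ranks[order[position]] = average
        start = end + 1
    return ranks


def page_l(data):
    """Page's L, assuming columns are in the predicted increasing order."""
    n_conditions = len(data[0])
    rank_sums = [0.0] * n_conditions
    for subject in data:
        for j, rank in enumerate(row_ranks(subject)):
            rank_sums[j] += rank
    # Weight each condition's rank sum by its predicted position (1, 2, 3, ...).
    return sum((j + 1) * rank_sums[j] for j in range(n_conditions))


# Hypothetical scores: four subjects, three trials each (rows are subjects).
scores = [
    [10, 12, 15],
    [8, 9, 14],
    [11, 10, 16],
    [7, 9, 9],
]
l_statistic = page_l(scores)
print(l_statistic)
```

A larger L means the within-subject ranks agree more closely with the predicted order; with four subjects and three conditions, perfect agreement would give 4 × (1 + 4 + 9) = 56.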

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Spearman's Rank Correlation Coefficient

In statistics, Spearman's rank correlation coefficient or Spearman's ρ, named after Charles Spearman and often denoted by the Greek letter ρ (rho) or as r_s, is a nonparametric measure of rank correlation (statistical dependence between the rankings of two variables). It assesses how well the relationship between two variables can be described using a monotonic function. The Spearman correlation between two variables is equal to the Pearson correlation between the rank values of those two variables; while Pearson's correlation assesses linear relationships, Spearman's correlation assesses monotonic relationships (whether linear or not). If there are no repeated data values, a perfect Spearman correlation of +1 or −1 occurs when each of the variables is a perfect monotone function of the other. Intuitively, the Spearman correlation between two variables will be high when observations have a similar (or identical for a correlation of 1) rank (i.e. relative position lab ...
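The statement that the Spearman correlation equals the Pearson correlation of the rank values can be sketched directly. The functions `ranks`, `pearson`, and `spearman` and the sample data below are hypothetical, and the ranking helper assumes there are no tied values.

```python
from statistics import mean


def ranks(values):
    """1-based ranks of the values, assuming no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for position, index in enumerate(order, start=1):
        result[index] = position
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))


# y = x squared is monotonic in x but not linear.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 25]
linear_r = pearson(x, y)
rank_rho = spearman(x, y)
print(linear_r, rank_rho)
```

Because y increases whenever x does, the rank correlation is perfect even though the Pearson correlation of the raw values falls short of 1.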

Ellis Batten Page

Ellis Batten “Bo” Page, Ed.D. (April 29, 1924 – May 17, 2005) [Potts, Monica (2005, May 23). Ellis Page, 81, Developer of Computerized Grading, obituary in the New York Times; "Ellis Page, Computer Grading Developer, Dies". UConn Advance, July 18, 200] is widely acknowledged as the father of automated essay scoring, a multi-disciplinary field exploring computer evaluation and scoring of student writing, particularly essays. Page's development of and pioneering work with Project Essay Grade (PEG) software in the mid-1960s set the stage for the practical application of computer essay scoring technology following the microcomputer revolution of the 1990s.

Background: Page was born in San Diego, CA. A United States Marine Corps veteran, he graduated from Pomona College (Claremont, CA) and taught high school English while pursuing his master's degree in English at San Diego State University (1955). He received his Ed.D. degree in educational psychology from the ...

Null Hypothesis

In scientific research, the null hypothesis (often denoted H_0) is the claim that no difference or relationship exists between two sets of data or variables being analyzed. The null hypothesis is that any experimentally observed difference is due to chance alone, and an underlying causative relationship does not exist, hence the term "null". In addition to the null hypothesis, an alternative hypothesis is also developed, which claims that a relationship does exist between two variables.

Basic definitions: The null hypothesis and the alternative hypothesis are types of conjectures used in statistical tests, which are formal methods of reaching conclusions or making decisions on the basis of data. The hypotheses are conjectures about a statistical model of the population, which are based on a sample of the population. The tests are core elements of statistical inference, heavily used in the interpretation of scientific experimental data, to separate scientific claims fr ...

Central Tendency

In statistics, a central tendency (or measure of central tendency) is a central or typical value for a probability distribution (Weisberg, H.F. (1992) Central Tendency and Variability, Sage University Paper Series on Quantitative Applications in the Social Sciences, p. 2). Colloquially, measures of central tendency are often called averages. The term central tendency dates from the late 1920s. The most common measures of central tendency are the arithmetic mean, the median, and the mode. A central tendency can be calculated for either a finite set of values or for a theoretical distribution, such as the normal distribution. Occasionally authors use central tendency to denote "the tendency of quantitative data to cluster around some central value." (Upton, G.; Cook, I. (2008) Oxford Dictionary of Statistics, OUP, entry for "central tendency"; Dodge, Y. (2003) The Oxford Dictionary of Statistical Terms, OUP for International Statistical Institute, entry for "ce ...
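The three common measures named above can be computed for a finite set of values with Python's standard `statistics` module. The data set here is made up for illustration.

```python
from statistics import mean, median, mode

# Hypothetical finite set of values.
data = [2, 3, 3, 5, 7, 10]

data_mean = mean(data)      # arithmetic mean: sum of values divided by their count
data_median = median(data)  # middle value; average of the two middle values here
data_mode = mode(data)      # most frequent value

print(data_mean, data_median, data_mode)
```

The three measures differ for this data set (5, 4, and 3 respectively), which is typical when the values are skewed toward one side.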

Alternative Hypothesis

In statistical hypothesis testing, the alternative hypothesis is one of the propositions proposed in a hypothesis test. In general, the goal of a hypothesis test is to demonstrate that, in the given condition, there is sufficient evidence supporting the credibility of the alternative hypothesis instead of the exclusive proposition in the test (the null hypothesis). It is usually consistent with the research hypothesis because it is constructed from literature review, previous studies, etc. However, the research hypothesis is sometimes consistent with the null hypothesis. In statistics, the alternative hypothesis is often denoted as H_a or H_1. Hypotheses are formulated so that they can be compared in a statistical hypothesis test. In the domain of inferential statistics, two rival hypotheses can be compared by explanatory power and predictive power.

Basic definition: The alternative hypothesis and null hypothesis are types of conjectures used in statistical tests, which are formal methods of reaching ...

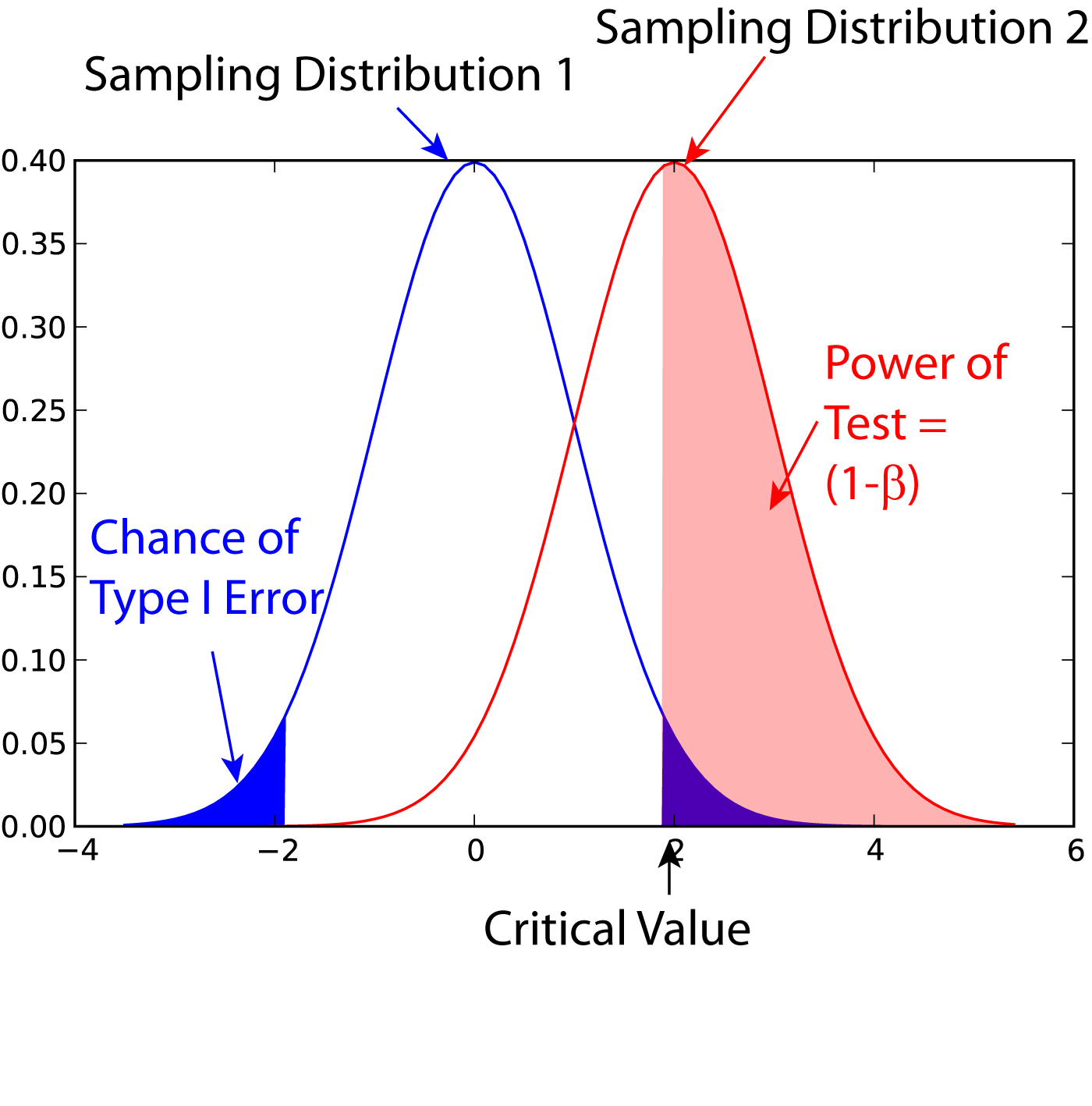

Statistical Power

In statistics, the power of a binary hypothesis test is the probability that the test correctly rejects the null hypothesis (H_0) when a specific alternative hypothesis (H_1) is true. It is commonly denoted by 1 − β, and represents the chances of a true positive detection conditional on the actual existence of an effect to detect. Statistical power ranges from 0 to 1, and as the power of a test increases, the probability β of making a type II error by wrongly failing to reject the null hypothesis decreases.

Notation: This article uses the following notation:

* β = probability of a Type II error, known as a "false negative"
* 1 − β = probability of a "true positive", i.e., correctly rejecting the null hypothesis. "1 − β" is also known as the power of the test.
* α = probability of a Type I error, known as a "false positive"
* 1 − α = probability of a "true negative", i.e., correctly not rejecting the null hypothesis

Description: For a ty ...
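To make the relationship between α, β, and power concrete, the following sketch computes the power of a one-sided z-test with known standard deviation. This particular test is chosen only for illustration and is not taken from the text above; the function `z_test_power` and its parameters `effect`, `sigma`, `n`, and `alpha` are hypothetical names.

```python
from statistics import NormalDist


def z_test_power(effect, sigma, n, alpha=0.05):
    """Power of a one-sided z-test of H_0: mean = 0 against H_1: mean = effect.

    Assumes normally distributed data with known standard deviation sigma
    and a sample of n independent observations.
    """
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha)   # rejection threshold for the z statistic
    shift = effect * n ** 0.5 / sigma          # mean of the z statistic under H_1
    beta = std_normal.cdf(critical - shift)    # probability of a Type II error
    return 1 - beta


power_small_sample = z_test_power(effect=0.5, sigma=1.0, n=20)
power_large_sample = z_test_power(effect=0.5, sigma=1.0, n=50)
print(power_small_sample, power_large_sample)
```

With the effect size and α held fixed, a larger sample lowers β and so raises the power; when the true effect is zero, the rejection probability reduces to α.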

Friedman Test

The Friedman test is a non-parametric statistical test developed by Milton Friedman. Similar to the parametric repeated measures ANOVA, it is used to detect differences in treatments across multiple test attempts. The procedure involves ranking each row (or block) together, then considering the values of ranks by columns. Applicable to complete block designs, it is thus a special case of the Durbin test. Classic examples of use are:

* n wine judges each rate k different wines. Are any of the k wines ranked consistently higher or lower than the others?
* n welders each use k welding torches, and the ensuing welds are rated on quality. Do any of the k torches produce consistently better or worse welds?

The Friedman test is used for one-way repeated measures analysis of variance by ranks. In its use of ranks it is similar to the Kruskal–Wallis one-way analysis of variance by ranks. The Friedman test is widely supported by many statistical software ...
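The procedure of ranking within each row and then looking at the ranks by column can be sketched as follows for the wine-judging example. The sketch assumes the standard form of the Friedman statistic without a tie correction, Q = 12 / (n k (k + 1)) · Σ R_j² − 3 n (k + 1), where R_j is the rank sum of column j. The function names and the ratings are hypothetical, and the p-value is not computed.

```python
def rank_row(row):
    """Rank the entries of one block from 1 (lowest), assuming no ties."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0] * len(row)
    for position, index in enumerate(order, start=1):
        ranks[index] = position
    return ranks


def friedman_statistic(ratings):
    """Friedman statistic for n blocks (rows) and k treatments (columns)."""
    n = len(ratings)
    k = len(ratings[0])
    rank_sums = [0] * k
    for row in ratings:
        for j, rank in enumerate(rank_row(row)):
            rank_sums[j] += rank
    sum_of_squares = sum(r ** 2 for r in rank_sums)
    return 12 / (n * k * (k + 1)) * sum_of_squares - 3 * n * (k + 1)


# Hypothetical ratings: n = 4 judges (rows) each rate k = 3 wines (columns).
ratings = [
    [8, 6, 7],
    [9, 5, 7],
    [7, 6, 8],
    [9, 4, 6],
]
q = friedman_statistic(ratings)
print(q)
```

Here the column rank sums are 11, 4, and 9, so the second wine is ranked consistently lowest; the statistic would normally be compared against a reference distribution to judge significance.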

Chi-squared Distribution

In probability theory and statistics, the chi-squared distribution (also chi-square or χ²-distribution) with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution is a special case of the gamma distribution and is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in construction of confidence intervals. This distribution is sometimes called the central chi-squared distribution, a special case of the more general noncentral chi-squared distribution. The chi-squared distribution is used in the common chi-squared tests for goodness of fit of an observed distribution to a theoretical one, the independence of two criteria of classification of qualitative data, and in confidence interval estimation for a population standard deviation of a normal distribution from a sample standard deviation. Many other statistical tests a ...
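The definition as a sum of squares of k independent standard normal variables lends itself to a short simulation. The sketch below draws such sums with Python's standard `random` module and checks the sample mean against k, the known mean of a chi-squared distribution with k degrees of freedom. The helper name `chi_squared_draw`, the seed, and the sample size are arbitrary choices.

```python
import random
from statistics import mean


def chi_squared_draw(k, rng):
    """One draw: the sum of squares of k independent standard normal values."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


rng = random.Random(0)  # fixed seed so the simulation is repeatable
k = 3
draws = [chi_squared_draw(k, rng) for _ in range(20000)]
sample_mean = mean(draws)
print(sample_mean)  # should be close to k
```

Every draw is non-negative by construction, and the sample mean approaches k as the number of draws grows.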

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of N independent scores, then the degrees of freedom is equal to the number of independent scores (N) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to N − 1. Mathematically, degrees of freedom is the number of dimensions of the domain o ...
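The variance example can be checked numerically: dividing the sum of squared deviations by N − 1 rather than N accounts for the one parameter (the sample mean) estimated along the way. The scores below are made up; `statistics.variance` is the standard-library sample variance, which uses the N − 1 divisor, and `statistics.pvariance` divides by N.

```python
from statistics import mean, pvariance, variance

# Hypothetical random sample of N = 8 scores.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
n = len(scores)

sample_mean = mean(scores)  # the one intermediate parameter that is estimated
squared_deviations = sum((s - sample_mean) ** 2 for s in scores)

degrees_of_freedom = n - 1
sample_variance = squared_deviations / degrees_of_freedom  # divisor N - 1
population_style = squared_deviations / n                  # divisor N, for comparison

print(sample_variance, variance(scores))
print(population_style, pvariance(scores))
```

Once the mean is fixed, only N − 1 of the deviations are free to vary, since they must sum to zero.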

Two-tailed Test

In statistical significance testing, a one-tailed test and a two-tailed test are alternative ways of computing the statistical significance of a parameter inferred from a data set, in terms of a test statistic. A two-tailed test is appropriate if the estimated value may be greater or less than a certain range of values, for example, whether a test taker may score above or below a specific range of scores. This method is used for null hypothesis testing, and if the estimated value falls in the critical areas, the alternative hypothesis is accepted over the null hypothesis. A one-tailed test is appropriate if the estimated value may depart from the reference value in only one direction, left or right, but not both. An example can be whether a machine produces more than one-percent defective products. In this situation, if the estimated value falls in one of the one-sided critical areas, depending on the direction of interest (greater than or less than), the alternative hypothesis is a ...
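The difference between the two approaches shows up directly in the p-value. The sketch below assumes a test statistic that follows a standard normal distribution under the null hypothesis (a z statistic); that choice, the function name `p_values`, and the value z = 1.8 are illustrative assumptions.

```python
from statistics import NormalDist


def p_values(z):
    """One-tailed and two-tailed p-values for a standard normal test statistic."""
    lower = NormalDist().cdf(z)   # probability of a value at or below z
    upper = 1 - lower             # probability of a value above z
    return {
        "greater": upper,                    # one-tailed, direction "greater than"
        "less": lower,                       # one-tailed, direction "less than"
        "two-sided": 2 * min(lower, upper),  # both tails count as extreme
    }


result = p_values(1.8)
print(result)
```

For this statistic the one-tailed p-value in the "greater" direction is below 0.05 while the two-tailed p-value is above it, so the choice of test changes the conclusion at that significance level.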

Kendall's Tau

In statistics, the Kendall rank correlation coefficient, commonly referred to as Kendall's τ coefficient (after the Greek letter τ, tau), is a statistic used to measure the ordinal association between two measured quantities. A τ test is a non-parametric hypothesis test for statistical dependence based on the τ coefficient. It is a measure of rank correlation: the similarity of the orderings of the data when ranked by each of the quantities. It is named after Maurice Kendall, who developed it in 1938, though Gustav Fechner had proposed a similar measure in the context of time series in 1897. Intuitively, the Kendall correlation between two variables will be high when observations have a similar (or identical for a correlation of 1) rank (i.e. relative position label of the observations within the variable: 1st, 2nd, 3rd, etc.) between the two variables, and low when observations have a dissimilar (or fully different for a correlation of − ...
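One common way to quantify the similarity of two orderings is to count concordant and discordant pairs of observations. The sketch below assumes the tau-a variant, τ = (concordant − discordant) / (number of pairs), which does not adjust for ties; the function name `kendall_tau_a` and the data are hypothetical.

```python
from itertools import combinations


def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) pairs over all pairs."""
    concordant = 0
    discordant = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        product = (x1 - x2) * (y1 - y2)
        if product > 0:
            concordant += 1   # both quantities order this pair the same way
        elif product < 0:
            discordant += 1   # the two quantities order this pair oppositely
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs


x = [1, 2, 3, 4, 5]
y = [2, 1, 4, 3, 5]  # same ordering as x except for two adjacent swaps
tau = kendall_tau_a(x, y)
print(tau)
```

Of the ten pairs, eight are ordered the same way by both variables and two are reversed, giving τ = (8 − 2) / 10 = 0.6. Identical orderings give 1 and fully reversed orderings give −1.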