
Friedman Test

The Friedman test is a non-parametric statistical test developed by Milton Friedman. Similar to the parametric repeated measures ANOVA, it is used to detect differences in treatments across multiple test attempts. The procedure involves ranking each row (or block) together, then considering the values of ranks by columns. Applicable to complete block designs, it is thus a special case of the Durbin test. Classic examples of use are:

* n wine judges each rate k different wines. Are any of the k wines ranked consistently higher or lower than the others?
* n welders each use k welding torches, and the ensuing welds are rated on quality. Do any of the k torches produce consistently better or worse welds?

The Friedman test is used for one-way repeated measures analysis of variance by ranks. In its use of ranks it is similar to the Kruskal–Wallis one-way analysis of variance by ranks. The Friedman test is widely supported by many statistical softwar ...
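The rank-by-row, sum-by-column procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `rank_row` and `friedman_statistic` and the `scores` data are hypothetical, and the statistic uses the common textbook form without a tie correction, Q = 12 / (n k (k + 1)) · Σ R_j² − 3 n (k + 1), where R_j is the rank sum of column j.

```python
def rank_row(values):
    """Rank the values of one block (1 = smallest), averaging tied ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next sorted value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1  # mean of the 1-based positions in the run
        for j in range(pos, end + 1):
            ranks[order[j]] = avg
        pos = end + 1
    return ranks


def friedman_statistic(data):
    """Friedman statistic for n blocks (rows) by k treatments (columns)."""
    n, k = len(data), len(data[0])
    ranked = [rank_row(row) for row in data]                   # rank within each row
    col_sums = [sum(r[j] for r in ranked) for j in range(k)]   # rank sums by column
    return 12.0 / (n * k * (k + 1)) * sum(s * s for s in col_sums) - 3.0 * n * (k + 1)


# Hypothetical data: 4 judges (rows) each rate 3 wines (columns).
scores = [
    [7, 5, 3],
    [8, 6, 4],
    [6, 5, 2],
    [9, 7, 5],
]
q = friedman_statistic(scores)
print(q)
```

With these made-up scores every judge orders the wines identically, so the statistic reaches its maximum of n(k − 1). For larger samples the statistic is usually compared against a chi-square distribution with k − 1 degrees of freedom.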

Non-parametric Statistics

Nonparametric statistics is the branch of statistics that is not based solely on parametrized families of probability distributions (common examples of parameters are the mean and variance). Nonparametric statistics is based on either being distribution-free or having a specified distribution but with the distribution's parameters unspecified. Nonparametric statistics includes both descriptive statistics and statistical inference. Nonparametric tests are often used when the assumptions of parametric tests are violated.

Definitions

The term "nonparametric statistics" has been imprecisely defined in the following two ways, among others:

Applications and purpose

Non-parametric methods are widely used for studying populations that take on a ranked order (such as movie reviews receiving one to four stars). The use of non-parametric methods may be necessary when data have a ranking but no clear numerical interpretation, such as when assessing preferences. In terms of levels of me ...

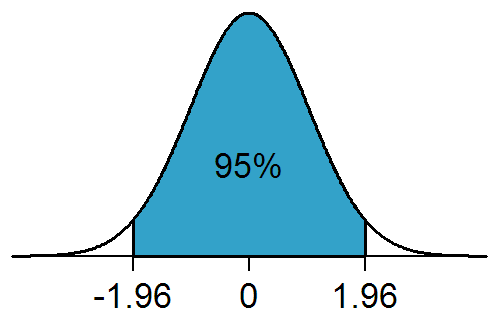

Statistical Significance

In statistical hypothesis testing, a result has statistical significance when it is very unlikely to have occurred given the null hypothesis (that is, by chance alone). More precisely, a study's defined significance level, denoted by α, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the p-value of a result, p, is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is statistically significant, by the standards of the study, when p ≤ α. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the p-value of an observed effect is less than (or equal to) the significan ...
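The decision rule p ≤ α can be made concrete with a small exact calculation. The sketch below assumes a hypothetical experiment (9 heads in 10 tosses, null hypothesis of a fair coin, one-sided alternative); the function name `binomial_upper_tail` is illustrative only.

```python
from math import comb


def binomial_upper_tail(successes, trials, prob=0.5):
    """P(X >= successes) for X ~ Binomial(trials, prob)."""
    return sum(
        comb(trials, i) * prob**i * (1 - prob) ** (trials - i)
        for i in range(successes, trials + 1)
    )


alpha = 0.05                          # significance level, fixed before the data are seen
p_value = binomial_upper_tail(9, 10)  # chance of a result at least this extreme under H0
significant = p_value <= alpha
print(p_value, significant)
```

Here the p-value is 11/1024, which is below the chosen α, so the result would be called statistically significant at the 5% level.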

Analysis Of Variance

Analysis of variance (ANOVA) is a collection of statistical models and their associated estimation procedures (such as the "variation" among and between groups) used to analyze the differences among means. ANOVA was developed by the statistician Ronald Fisher. ANOVA is based on the law of total variance, where the observed variance in a particular variable is partitioned into components attributable to different sources of variation. In its simplest form, ANOVA provides a statistical test of whether two or more population means are equal, and therefore generalizes the t-test beyond two means. In other words, ANOVA is used to test the difference between two or more means.

History

While the analysis of variance reached fruition in the 20th century, antecedents extend centuries into the past according to Stigler. These include hypothesis testing, the partitioning of sums of squares, experimental techniques and the additive model. Laplace was performing hypothesis testi ...
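The partitioning of variance into components can be illustrated with a one-way layout. The following is a minimal sketch under assumed data; the function name `one_way_anova_f` and the three groups are hypothetical, and only the F statistic is computed (no p-value).

```python
def one_way_anova_f(groups):
    """Return (F, SS_between, SS_within, SS_total) for a one-way layout."""
    all_values = [x for g in groups for x in g]
    n_total, k = len(all_values), len(groups)
    grand_mean = sum(all_values) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    # Total variation; equals ss_between + ss_within.
    ss_total = sum((x - grand_mean) ** 2 for x in all_values)

    f_stat = (ss_between / (k - 1)) / (ss_within / (n_total - k))
    return f_stat, ss_between, ss_within, ss_total


# Hypothetical measurements for three groups.
groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, ss_b, ss_w, ss_t = one_way_anova_f(groups)
print(f_stat, ss_b, ss_w, ss_t)
```

The between-group and within-group sums of squares add up to the total, which is the partition the text describes; a large F indicates that the group means differ by more than the within-group scatter would suggest.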

SPSS

SPSS Statistics is a statistical software suite developed by IBM for data management, advanced analytics, multivariate analysis, business intelligence, and criminal investigation. Long produced by SPSS Inc., it was acquired by IBM in 2009. Current versions (post 2015) have the brand name IBM SPSS Statistics. The software name originally stood for Statistical Package for the Social Sciences (SPSS), reflecting the original market, then later changed to Statistical Product and Service Solutions.

Overview

SPSS is a widely used program for statistical analysis in social science. It is also used by market researchers, health researchers, survey companies, government, education researchers, marketing organizations, data miners, and others. The original SPSS manual (Nie, Bent & Hull, 1970) has been described as one of "sociology's most influential books" for allowing ordinary researchers to do their own statistical analysis. In addition to statistical analysis, data management (case ...

Post-hoc Analysis

In a scientific study, post hoc analysis (from Latin post hoc, "after this") consists of statistical analyses that were specified after the data were seen. They are usually used to uncover specific differences between three or more group means when an analysis of variance (ANOVA) test is significant. This typically creates a multiple testing problem because each potential analysis is effectively a statistical test. Multiple testing procedures are sometimes used to compensate, but that is often difficult or impossible to do precisely. Post hoc analysis that is conducted and interpreted without adequate consideration of this problem is sometimes called data dredging by critics because the statistical associations that it finds are often spurious.

Common post hoc tests

Some common post hoc tests include (Pamplona, Fabricio, 2022-07-28, "Post Hoc Analysis: Process and types of tests", https://mindthegraph.com/blog/post-hoc-analysis/): ...
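To illustrate how a multiple testing procedure compensates for running several tests, here is a minimal sketch of the Bonferroni correction. The choice of Bonferroni is an assumption made for illustration (the text above does not name a specific procedure), and the p-values are invented.

```python
def bonferroni(p_values, alpha=0.05):
    """Flag each test as significant only if p <= alpha / (number of tests)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


# Hypothetical p-values from three pairwise post hoc comparisons.
p_values = [0.004, 0.020, 0.030]
decisions = bonferroni(p_values)
print(decisions)
```

All three comparisons would pass an uncorrected 5% threshold, but only the first survives the corrected threshold of 0.05 / 3, which shows how uncorrected post hoc testing can overstate the number of findings.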

R (programming Language)

R is a programming language for statistical computing and graphics supported by the R Core Team and the R Foundation for Statistical Computing. Created by statisticians Ross Ihaka and Robert Gentleman, R is used among data miners, bioinformaticians and statisticians for data analysis and developing statistical software. Users have created packages to augment the functions of the R language. According to user surveys and studies of scholarly literature databases, R is one of the most commonly used programming languages in data mining. R ranks 12th in the TIOBE index, a measure of programming language popularity, in which the language peaked in 8th place in August 2020. The official R software environment is an open-source free software environment that is part of the GNU Project, available under the GNU General Public License. It is written primarily in C, Fortran, and R itself (partially self-hosting). Precompiled executables are provided for various operating systems. ...

Wittkowski Test

Witkowski (Polish feminine: Witkowska, plural: Witkowscy) is a Polish surname. Notable people with the surname include:

Witkowski

* August Witkowski (1854–1913), Polish physicist
** Collegium Witkowski in Kraków, Poland
* Bronisław Witkowski (1899–1971), Polish luger
* Charles S. Witkowski (1907–1993), American politician
* Georg Witkowski (1863–1939), Jewish German literary historian
* Georges Martin Witkowski (1867–1943), French conductor and composer
* Igor Witkowski
* John Witkowski (born 1962), American football player
* Kalikst Witkowski (1818–1877), Polish politician
* Kamil Witkowski (born 1984), Polish footballer
* Karol D. Witkowski (1860–1910), Polish-American painter
* (born 1925), German author
* Marek Witkowski (born 1974), Polish sprint canoer
* Maximilian Harden, born Felix Ernst Witkowski (1861–1927), Jewish German journalist
* Michał Witkowski (born 1975), Polish novelist and journalist
* (born 1949), French physicist
* Nik Witkowski (born 1976), Cana ...

Wilcoxon Signed-rank Test

The Wilcoxon signed-rank test is a non-parametric statistical hypothesis test used either to test the location of a population based on a sample of data, or to compare the locations of two populations using two matched samples. The one-sample version serves a purpose similar to that of the one-sample Student's t-test. For two matched samples, it is a paired difference test like the paired Student's t-test (also known as the "t-test for matched pairs" or "t-test for dependent samples"). The Wilcoxon test can be a good alternative to the t-test when population means are not of interest; for example, when one wishes to test whether a population's median is nonzero, or whether there is a better than 50% chance that a sample from one population is greater than a sample from another population.

History

The test is named for Frank Wilcoxon (1892–1965) who, in a si ...
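For two matched samples, the signed-rank statistic can be sketched as follows. This is an illustrative outline under stated assumptions: the function name `signed_rank_sums` and the data are hypothetical, zero differences are dropped (one common convention), tied absolute differences share an average rank, and no p-value is computed.

```python
def signed_rank_sums(x, y):
    """Return (W_plus, W_minus): rank sums of positive and negative differences."""
    diffs = [b - a for a, b in zip(x, y) if b != a]  # drop zero differences
    abs_sorted = sorted(abs(d) for d in diffs)

    def avg_rank(value):
        # 1-based position of the first occurrence, shifted to the middle of any tie run.
        first = abs_sorted.index(value) + 1
        count = abs_sorted.count(value)
        return first + (count - 1) / 2

    w_plus = sum(avg_rank(abs(d)) for d in diffs if d > 0)
    w_minus = sum(avg_rank(abs(d)) for d in diffs if d < 0)
    return w_plus, w_minus


# Hypothetical paired measurements on five subjects.
before = [10, 12, 9, 14, 11]
after = [12, 11, 13, 14, 14]
w_plus, w_minus = signed_rank_sums(before, after)
print(w_plus, w_minus)
```

The two sums always add up to m(m + 1)/2 for m nonzero differences; a strong imbalance between them is evidence of a shift in location between the paired samples.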

Kendall's W

Kendall's W (also known as Kendall's coefficient of concordance) is a non-parametric statistic for rank correlation. It is a normalization of the statistic of the Friedman test, and can be used for assessing agreement among raters and in particular inter-rater reliability. Kendall's W ranges from 0 (no agreement) to 1 (complete agreement). Suppose, for instance, that a number of people have been asked to rank a list of political concerns, from the most important to the least important. Kendall's W can be calculated from these data. If the test statistic W is 1, then all the survey respondents have been unanimous, and each respondent has assigned the same order to the list of concerns. If W is 0, then there is no overall trend of agreement among the respondents, and their responses may be regarded as essentially random. Intermediate values of W indicate a greater or lesser degree of unanimity among the various responses. While tests using the standard Pears ...
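The two extremes described above (W = 1 for unanimous rankings, W = 0 for no agreement) can be checked with a short sketch. It assumes complete rankings without ties and uses the usual form W = 12 S / (m² (n³ − n)), where m is the number of raters, n the number of items, and S the sum of squared deviations of the item rank totals from their mean; the function name `kendalls_w` and the rankings are hypothetical.

```python
def kendalls_w(rankings):
    """Kendall's W for m raters (rows) each ranking n items (columns), no ties."""
    m, n = len(rankings), len(rankings[0])
    totals = [sum(r[i] for r in rankings) for i in range(n)]  # rank total per item
    mean_total = m * (n + 1) / 2
    s = sum((t - mean_total) ** 2 for t in totals)
    return 12 * s / (m**2 * (n**3 - n))


# Three respondents who rank four concerns identically.
unanimous = [[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]]
# Three respondents whose rankings cancel out exactly.
balanced = [[1, 2, 3], [2, 3, 1], [3, 1, 2]]

w_unanimous = kendalls_w(unanimous)
w_balanced = kendalls_w(balanced)
print(w_unanimous, w_balanced)
```

Under the same no-ties assumption, the Friedman statistic Q for the same data equals m (n − 1) W, which is the normalization the text refers to.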

Sign Test

The sign test is a statistical method to test for consistent differences between pairs of observations, such as the weight of subjects before and after treatment. Given pairs of observations (such as weight pre- and post-treatment) for each subject, the sign test determines if one member of the pair (such as pre-treatment) tends to be greater than (or less than) the other member of the pair (such as post-treatment). The paired observations may be designated x and y. For comparisons of paired observations (x, y), the sign test is most useful if comparisons can only be expressed as x > y, x = y, or x < y. ... Let W be the number of pairs, among the m pairs with a nonzero difference, for which y_i − x_i > 0. Assuming that H0 is true, W follows a binomial distribution, W ~ b(m, 0.5).

Assumptions

Let Z_i = Y_i − X_i for i = 1, ..., n.

1. The differences Z_i are assumed to be independent.
2. Each Z_i comes from the same continuous population.
3. The values X_i ...
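Because W follows b(m, 0.5) under H0, an exact p-value needs only binomial coefficients. The sketch below is one possible reading of the method, with assumptions labeled: pairs with no difference are discarded, the test is two-sided, and the function name `sign_test` and the weights are hypothetical.

```python
from math import comb


def sign_test(x, y):
    """Return (W, m, two-sided p-value) for paired observations x and y."""
    diffs = [b - a for a, b in zip(x, y) if b != a]  # discard pairs with no difference
    m = len(diffs)
    w = sum(1 for d in diffs if d > 0)               # number of positive differences
    tail = min(w, m - w)
    # Under H0, W ~ b(m, 0.5): double the smaller tail and cap at 1.
    p_value = min(1.0, 2 * sum(comb(m, i) for i in range(tail + 1)) / 2**m)
    return w, m, p_value


# Hypothetical weights before and after a treatment for eight subjects.
before = [80, 75, 90, 68, 72, 85, 77, 69]
after = [78, 70, 91, 65, 72, 80, 75, 66]
w, m, p_value = sign_test(before, after)
print(w, m, p_value)
```

Only the signs of the differences enter the calculation, which is why the test applies even when the comparisons cannot be expressed as numeric quantities.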