
Okapi Framework

The Okapi Framework is a cross-platform and open-source set of components and applications that offer extensive support for localizing and translating documentation and software.

Architecture

The Okapi Framework is organized around the following parts:

* Interface Specifications: The framework's components and applications communicate through several common sets of APIs, the interfaces. A few of them are defined as high-level specifications. Implementing these interfaces allows you to plug new components seamlessly into the overall framework. For example, all filters share the same API for parsing input files, so you can write utilities that work with any of the available filters.
* Format Specifications: Storing and exchanging data is an important part of the localization process. Using open standards for as many formats as possible increases interoperability. Whenever possible, the Okapi Framework makes use of existing standards such as XLIFF, SRX ...

Cross-platform

In computing, cross-platform software (also called multi-platform software, platform-agnostic software, or platform-independent software) is computer software that is designed to work in several computing platforms. Some cross-platform software requires a separate build for each platform, but some can be directly run on any platform without special preparation, being written in an interpreted language or compiled to portable bytecode for which the interpreters or run-time packages are common or standard components of all supported platforms. For example, a cross-platform application may run on Microsoft Windows, Linux, and macOS. Cross-platform software may run on many platforms, or as few as two. Some frameworks for cross-platform development are Codename One, Kivy, Qt, Flutter, NativeScript, Xamarin, Phonegap, Ionic, and React Native.

Platforms

"Platform" can refer to the type of processor (CPU) or other hardware on which an operating system (OS) or application runs, t ...

Java (programming language)

Java is a high-level, class-based, object-oriented programming language that is designed to have as few implementation dependencies as possible. It is a general-purpose programming language intended to let programmers "write once, run anywhere" (WORA), meaning that compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax of Java is similar to C and C++, but has fewer low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as reflection and runtime code modification) that are typically not available in traditional compiled languages. Java was one of the most popular programming languages in use according to GitHub, particularly for client–server web applications, with a reported 9 million developers. Java was originally developed ...

XLIFF

XLIFF (XML Localization Interchange File Format) is an XML-based bitext format created to standardize the way localizable data are passed between tools during a localization process and to serve as a common format for CAT tool exchange. The XLIFF Technical Committee (TC) first convened at OASIS in December 2001 (first meeting in January 2002), but the first fully ratified version of XLIFF appeared as XLIFF Version 1.2 in February 2008. Its current specification is v2.1, released on 2018-02-13, which is backwards compatible with v2.0, released on 2014-08-05. The specification is aimed at the localization industry. It specifies elements and attributes to store content extracted from various original file formats and its corresponding translation. The goal was to abstract the localization skills from the engineering skills related to specific formats such as HTML. XLIFF is part of the Open Architecture for XML Authoring and Localization (OAXAL) reference architecture. XLIFF 2.0 and hi ...
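To make the "bitext" idea concrete, here is a minimal sketch that reads source/target pairs from a small XLIFF 1.2 document with Python's standard library. The sample content, the file name `hello.html`, and the helper name `read_units` are illustrative assumptions; the sketch ignores inline markup and most of the specification.

```python
import xml.etree.ElementTree as ET

# Namespace of XLIFF 1.2 documents.
NS = {"x": "urn:oasis:names:tc:xliff:document:1.2"}

# Hypothetical sample: one translated unit and one still untranslated.
SAMPLE = """<xliff version="1.2" xmlns="urn:oasis:names:tc:xliff:document:1.2">
  <file original="hello.html" source-language="en" target-language="fr" datatype="html">
    <body>
      <trans-unit id="1"><source>Hello</source><target>Bonjour</target></trans-unit>
      <trans-unit id="2"><source>Goodbye</source></trans-unit>
    </body>
  </file>
</xliff>"""

def read_units(xml_text):
    """Return (id, source, target) tuples; target is None when missing."""
    root = ET.fromstring(xml_text)
    units = []
    for tu in root.iterfind(".//x:trans-unit", NS):
        source = tu.findtext("x:source", default="", namespaces=NS)
        target = tu.findtext("x:target", default=None, namespaces=NS)
        units.append((tu.get("id"), source, target))
    return units

for unit in read_units(SAMPLE):
    print(unit)
```

Each translation unit carries the extracted source text and, once translated, its target, which is what lets a translator work without touching the original HTML.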

Segmentation Rules eXchange (SRX)

Segmentation Rules eXchange (SRX) is an XML-based standard that was maintained by the Localization Industry Standards Association (LISA) until LISA became insolvent in 2011, and then by the Globalization and Localization Association (GALA). SRX provides a common way to describe how to segment text for translation and other language-related processes. It was created when it was realized that TMX was less useful than expected in certain instances due to differences in how tools segment text. SRX is intended to enhance the TMX standard so that translation memory (TM) data that is exchanged between applications can be used more effectively. Having the segmentation rules that were used when a TM was created increases the usefulness of the TM data.

Implementation difficulties

SRX makes use of the ICU Regular Expression syntax, but not all programming languages support all ICU expressions, making implementing SRX in some languages difficult or impossible. Java is an example of this. Versi ...
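The following is a simplified sketch of rule-based segmentation in the spirit of SRX, where each rule pairs a "before break" pattern with an "after break" pattern and is marked as a break or a no-break (exception) rule. It uses Python's `re` syntax rather than ICU, does not read SRX files, and the two rules and the function name `segment` are assumptions made for illustration.

```python
import re

# (is_break, before_pattern, after_pattern), checked in order; first match wins.
# Hypothetical rules, not taken from any real SRX file.
RULES = [
    (False, r"\b(?:Mr|Dr|etc)\.", r"\s"),  # exception: no break after these abbreviations
    (True, r"[.?!]", r"\s+"),              # break after sentence-ending punctuation
]

def segment(text, rules=RULES):
    """Split text at every position where the first matching rule is a break rule."""
    compiled = [
        (is_break, re.compile(before + r"\Z"), re.compile(after))
        for is_break, before, after in rules
    ]
    segments, start = [], 0
    for pos in range(1, len(text)):
        for is_break, before, after in compiled:
            # "before" must match text ending at pos, "after" text starting at pos.
            if before.search(text, 0, pos) and after.match(text, pos):
                if is_break:
                    segments.append(text[start:pos])
                    start = pos
                break  # the first matching rule decides; later rules are skipped
    segments.append(text[start:])
    return segments

print([s.strip() for s in segment("Dr. Smith arrived. He sat down. Done!")])
```

Because exception rules come first, "Dr." does not end a segment. Two tools that share the same ordered rules will cut the same text at the same places, which is the property that makes exchanged TM data easier to reuse.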

Translation Memory eXchange

Translation Memory eXchange (TMX) is an XML specification for the exchange of translation memory (TM) data between computer-aided translation and localization tools with little or no loss of critical data. TMX was originally developed and maintained by OSCAR (Open Standards for Container/Content Allowing Re-use), a special interest group of LISA (Localization Industry Standards Association), and first released in 1997. Specification 1.4b of 2005 remained current. It allows the original source and target documents to be recreated from the TMX data. A working draft of TMX 2.0 was released for public comment in March 2007, but no work was done on the new version; in March 2011 LISA was declared insolvent, and as a result its standards were moved under a Creative Commons license and the standards specification relocated. TMX forms part of the Open Architecture for XML Authoring and Localization (OAXAL) ...
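As a rough illustration of what TM data in TMX looks like, the sketch below loads source/target segment pairs from a tiny TMX 1.4 document using only the Python standard library. The sample document, its header values, and the helper name `load_pairs` are assumptions; inline markup inside `<seg>` is not handled.

```python
import xml.etree.ElementTree as ET

# ElementTree exposes the xml:lang attribute under the XML namespace.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

# Hypothetical sample with a single translation unit.
SAMPLE = """<tmx version="1.4">
  <header creationtool="demo" creationtoolversion="1.0" segtype="sentence"
          o-tmf="demo" adminlang="en" srclang="en" datatype="plaintext"/>
  <body>
    <tu>
      <tuv xml:lang="en"><seg>Hello</seg></tuv>
      <tuv xml:lang="fr"><seg>Bonjour</seg></tuv>
    </tu>
  </body>
</tmx>"""

def load_pairs(tmx_text, src, tgt):
    """Map each source-language segment to its target-language segment."""
    root = ET.fromstring(tmx_text)
    pairs = {}
    for tu in root.iter("tu"):
        # One <tuv> per language inside each translation unit.
        segs = {tuv.get(XML_LANG): tuv.findtext("seg") for tuv in tu.iter("tuv")}
        if src in segs and tgt in segs:
            pairs[segs[src]] = segs[tgt]
    return pairs

print(load_pairs(SAMPLE, "en", "fr"))
```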

Gettext

In computing, gettext is an internationalization and localization (i18n and l10n) system commonly used for writing multilingual programs on Unix-like computer operating systems. One of the main benefits of gettext is that it separates programming from translating. The most commonly used implementation of gettext is GNU gettext, released by the GNU Project in 1995. The runtime library is libintl. gettext provides an option to use different strings for any number of plural forms of nouns, but this feature has no support for grammatical gender.

History

Initially, POSIX provided no means of localizing messages. Two proposals were raised in the late 1980s, the 1988 Uniforum gettext and the 1989 X/Open catgets (XPG-3 § 5). Sun Microsystems implemented the first gettext in 1993. The Unix and POSIX developers never really agreed on what kind of interface to use (the other option is the X/Open catgets), so many C libraries, including glibc, implemented both. Whether gettext should be ...

Qt (toolkit)

Qt (pronounced "cute") is cross-platform software for creating graphical user interfaces as well as cross-platform applications that run on various software and hardware platforms such as Linux, Windows, macOS, Android or embedded systems with little or no change in the underlying codebase while still being a native application with native capabilities and speed. Qt is currently being developed by The Qt Company, a publicly listed company, and the Qt Project under open-source governance, involving individual developers and organizations working to advance Qt. Qt is available under both commercial licenses and open-source GPL 2.0, GPL 3.0, and LGPL 3.0 licenses.

Purposes and abilities

Qt is used for developing graphical user interfaces (GUIs) and multi-platform applications that run on all major desktop platforms and most mobile or embedded platforms. Most GUI programs created with Qt have a native-looking interface, in which case Qt is classified as a widget toolki ...

Regular Expression

A regular expression (shortened as regex or regexp; sometimes referred to as rational expression) is a sequence of characters that specifies a search pattern in text. Usually such patterns are used by string-searching algorithms for "find" or "find and replace" operations on strings, or for input validation. Regular expression techniques are developed in theoretical computer science and formal language theory. The concept of regular expressions began in the 1950s, when the American mathematician Stephen Cole Kleene formalized the concept of a regular language. They came into common use with Unix text-processing utilities. Different syntaxes for writing regular expressions have existed since the 1980s, one being the POSIX standard and another, widely used, being the Perl syntax. Regular expressions are used in search engines, in search and replace dialogs of word processors and text editors, in text processing utilities such as sed and AWK, and in lexical analysis. Most gener ...
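The three typical uses mentioned above ("find", "find and replace", and input validation) can be sketched with Python's `re` module, whose syntax is close to the Perl style. The date pattern, the sample text, and the helper name `is_iso_date` are illustrative assumptions.

```python
import re

# Pattern for dates written as YYYY-MM-DD (digits only, no calendar checks).
DATE = re.compile(r"(\d{4})-(\d{2})-(\d{2})")

text = "Released 2014-08-05, updated 2018-02-13."

# Find: collect every substring that matches the pattern.
found = [m.group(0) for m in DATE.finditer(text)]

# Find and replace: reorder the captured groups as DD/MM/YYYY.
swapped = DATE.sub(r"\3/\2/\1", text)

def is_iso_date(value):
    # Validation: the whole input must match, not just a part of it.
    return DATE.fullmatch(value) is not None

print(found)
print(swapped)
print(is_iso_date("2018-02-13"), is_iso_date("2018-2-13"))
```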

Internationalization Tag Set

The Internationalization Tag Set (ITS) is a set of attributes and elements designed to provide internationalization and localization support in XML documents. The ITS specification identifies concepts (called "ITS data categories") which are important for internationalization and localization. It also defines implementation of these concepts through a set of elements and attributes grouped in the ITS namespace. XML developers can use this namespace to integrate internationalization features directly into their own XML schemas and documents.

Overview

ITS v1.0 includes seven data categories:

* Translate: Defines what parts of a document are translatable or not.
* Localization Note: Provides alerts, hints, instructions, or other information to help the localizers or the translators.
* Terminology: Indicates which parts of the documents are terms and optionally points to information about these terms.
* Directionality: Indicates what type of display directionality should be applied t ...
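As an illustration of the Translate data category, the sketch below collects only the translatable text from a small XML document that uses the local `its:translate` attribute. It is a minimal sketch under stated assumptions: the sample document and the function name `translatable_text` are invented, only local markup is handled (ITS global rules are ignored), and element content is treated as translatable unless marked otherwise.

```python
import xml.etree.ElementTree as ET

ITS_NS = "http://www.w3.org/2005/11/its"
TRANSLATE = f"{{{ITS_NS}}}translate"

def translatable_text(elem, inherited=True):
    """Collect text chunks whose nearest its:translate setting is not 'no'."""
    flag = elem.get(TRANSLATE)
    translate = inherited if flag is None else (flag == "yes")
    parts = []
    if translate and elem.text and elem.text.strip():
        parts.append(elem.text.strip())
    for child in elem:
        # Children inherit the current setting unless they override it.
        parts.extend(translatable_text(child, translate))
        # Text after a child belongs to the current element, not the child.
        if translate and child.tail and child.tail.strip():
            parts.append(child.tail.strip())
    return parts

# Hypothetical sample document.
doc = ET.fromstring(
    '<doc xmlns:its="http://www.w3.org/2005/11/its">'
    '<p>Press <code its:translate="no">Ctrl+S</code> to save.</p>'
    '<p its:translate="no">okapi-lib-1.0</p>'
    '</doc>'
)
print(translatable_text(doc))
```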

Character Encoding

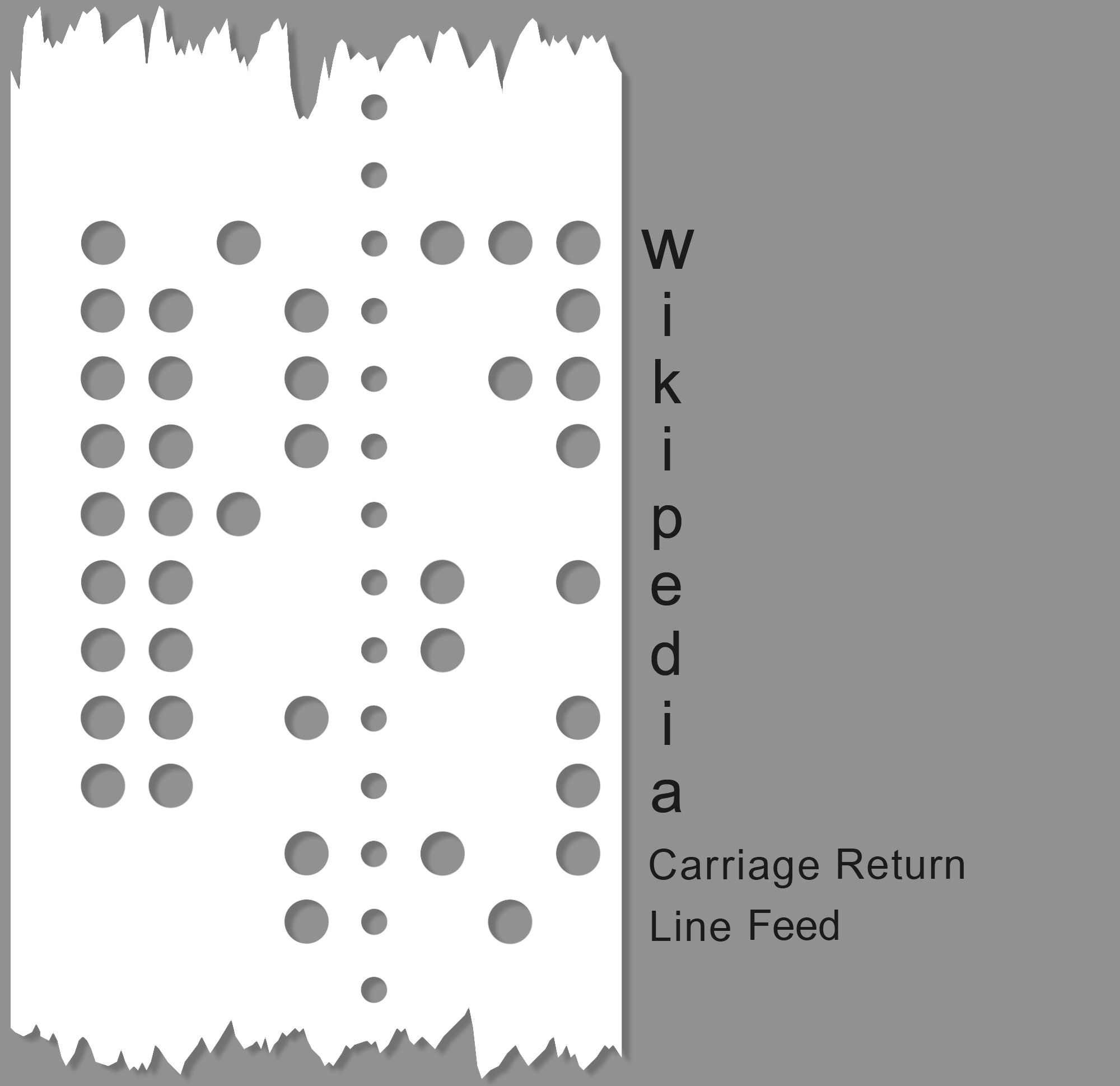

Character encoding is the process of assigning numbers to graphical characters, especially the written characters of human language, allowing them to be stored, transmitted, and transformed using digital computers. The numerical values that make up a character encoding are known as "code points" and collectively comprise a "code space", a "code page", or a "character map". Early character codes associated with the optical or electrical telegraph could only represent a subset of the characters used in written languages, sometimes restricted to upper case letters, numerals and some punctuation only. The low cost of digital representation of data in modern computer systems allows more elaborate character codes (such as Unicode) which represent most of the characters used in many written languages. Character enc ...
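A short sketch of these ideas in Python: `ord` gives a character's Unicode code point, and `str.encode` turns it into the bytes a particular encoding stores. The choice of sample characters and the helper name `describe` are illustrative assumptions.

```python
def describe(ch):
    """Return the code point of ch and its byte sequence in UTF-8."""
    return f"U+{ord(ch):04X}", ch.encode("utf-8")

print(describe("A"))   # plain ASCII letter
print(describe("é"))   # needs two bytes in UTF-8
print(describe("€"))   # needs three bytes in UTF-8

# A smaller, older character code cannot represent every character.
print("é".encode("latin-1"))
try:
    "€".encode("latin-1")
except UnicodeEncodeError:
    print("the euro sign has no code in Latin-1")
```

The same code point can map to different byte sequences depending on the encoding, and limited code spaces such as Latin-1 simply have no number for some characters.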

Term Extraction

Terminology extraction (also known as term extraction, glossary extraction, term recognition, or terminology mining) is a subtask of information extraction. The goal of terminology extraction is to automatically extract relevant terms from a given corpus. In the semantic web era, a growing number of communities and networked enterprises started to access and interoperate through the internet. Modeling these communities and their information needs is important for several web applications, like topic-driven web crawlers, web services, recommender systems, etc. The development of terminology extraction is also essential to the language industry. One of the first steps to model a knowledge domain is to collect a vocabulary of domain-relevant terms, constituting the linguistic surface manifestation of domain concepts. Several methods to automatically extract technical terms from domain-specific document warehouses have been described in the literature. Typically, approaches to aut ...