Online Machine Learning

In computer science, online machine learning is a method of machine learning in which data becomes available in sequential order and is used to update the best predictor for future data at each step, as opposed to batch learning techniques, which generate the best predictor by learning on the entire training data set at once. Online learning is commonly used in areas of machine learning where it is computationally infeasible to train over the entire dataset, which calls for out-of-core algorithms. It is also used in situations where the algorithm must dynamically adapt to new patterns in the data, or when the data itself is generated as a function of time, e.g., prediction of prices in international financial markets. Online learning algorithms may be prone to catastrophic interference, a problem that can be addressed by incremental learning approaches. Introduction: In the setting of supervised learning, a function of $f : X \to Y$ is to ...
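The step-by-step update described above can be sketched with a linear predictor trained by stochastic gradient descent on the squared loss. This is a minimal illustration only: the function name `online_sgd`, the learning rate, and the toy data stream are assumptions made for this example and do not come from the article.

```python
def online_sgd(stream, dim, lr=0.1):
    """Update a linear predictor one example at a time (squared loss)."""
    w = [0.0] * dim
    for x, y in stream:
        # Predict with the current weights before seeing the label.
        pred = sum(wi * xi for wi, xi in zip(w, x))
        err = pred - y
        # The gradient of 0.5 * (pred - y)^2 with respect to w is err * x.
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical data stream generated by y = 2 * x1 - 1 * x2.
stream = [((1.0, 0.0), 2.0), ((0.0, 1.0), -1.0)] * 200
w = online_sgd(stream, dim=2)
```

Each example is used once and then discarded, so the memory footprint stays constant regardless of how long the stream is.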

Computer Science

Computer science is the study of computation, information, and automation. Computer science spans theoretical disciplines (such as algorithms, theory of computation, and information theory) to applied disciplines (including the design and implementation of hardware and software). Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of re ...

Artificial Neural Networks

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called *artificial neurons*, which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. The neurons are connected by *edges*, which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the *activation function*. The strength of the signal at each connection is determined by a *weight*, which is adjusted during the learning process. Typically, neur ...
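The computation performed by a single artificial neuron can be sketched as a weighted sum passed through an activation function. The function name `neuron`, the `bias` term, and the choice of `tanh` as the activation are assumptions for this illustration; the text above only specifies weights and a non-linear function of the summed inputs.

```python
import math


def neuron(inputs, weights, bias=0.0, activation=math.tanh):
    """Return the activation of the weighted sum of the inputs."""
    # Each incoming signal is scaled by the weight of its connection.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The non-linear activation function produces the outgoing signal.
    return activation(z)


# Hypothetical example: the two weighted inputs cancel, so z = 0.
out = neuron([1.0, -2.0], [0.5, 0.25])
```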

Online Mirror Descent

In mathematics, mirror descent is an iterative optimization algorithm for finding a local minimum of a differentiable function. It generalizes algorithms such as gradient descent and multiplicative weights. History: Mirror descent was originally proposed by Nemirovski and Yudin in 1983. Motivation: In gradient descent with the sequence of learning rates $(\eta_n)_{n \geq 0}$ applied to a differentiable function $F$, one starts with a guess $\mathbf{x}_0$ for a local minimum of $F$, and considers the sequence $\mathbf{x}_0, \mathbf{x}_1, \mathbf{x}_2, \ldots$ such that $\mathbf{x}_{n+1} = \mathbf{x}_n - \eta_n \nabla F(\mathbf{x}_n),\ n \ge 0$. This can be reformulated by noting that $\mathbf{x}_{n+1} = \arg\min_{\mathbf{x}} \left( F(\mathbf{x}_n) + \nabla F(\mathbf{x}_n)^T (\mathbf{x} - \mathbf{x}_n) + \frac{1}{2\eta_n} \|\mathbf{x} - \mathbf{x}_n\|^2 \right)$. In other words, $\mathbf{x}_{n+1}$ minimizes the first-order approximation to $F$ at $\mathbf{x}_n$ with added proximity term $\|\mathbf{x} - \mathbf{x}_n\|^2$. This squared Euclidean distance term is a particular example of a Bregman d ...
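The equivalence between the gradient step and its proximal reformulation can be verified with a short derivation. The name $Q_n$ for the surrogate objective is introduced here for illustration only and does not appear in the original text; the derivation assumes $\eta_n > 0$.

```latex
\begin{align*}
Q_n(\mathbf{x}) &= F(\mathbf{x}_n) + \nabla F(\mathbf{x}_n)^T (\mathbf{x} - \mathbf{x}_n)
                  + \frac{1}{2\eta_n} \|\mathbf{x} - \mathbf{x}_n\|^2 \\
\nabla Q_n(\mathbf{x}) &= \nabla F(\mathbf{x}_n) + \frac{1}{\eta_n} (\mathbf{x} - \mathbf{x}_n) = 0 \\
\Longrightarrow \quad \mathbf{x}_{n+1} &= \mathbf{x}_n - \eta_n \nabla F(\mathbf{x}_n)
\end{align*}
```

Because $Q_n$ is strictly convex in $\mathbf{x}$ when $\eta_n > 0$, this stationary point is its unique minimizer.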

Hinge Loss

In machine learning, the hinge loss is a loss function used for training classifiers. The hinge loss is used for "maximum-margin" classification, most notably for support vector machines (SVMs). For an intended output $t = \pm 1$ and a classifier score $y$, the hinge loss of the prediction $y$ is defined as $\ell(y) = \max(0, 1 - t \cdot y)$. Note that $y$ should be the "raw" output of the classifier's decision function, not the predicted class label. For instance, in linear SVMs, $y = \mathbf{w} \cdot \mathbf{x} + b$, where $(\mathbf{w}, b)$ are the parameters of the hyperplane and $\mathbf{x}$ is the input variable(s). When $t$ and $y$ have the same sign (meaning $y$ predicts the right class) and $|y| \ge 1$, the hinge loss $\ell(y) = 0$. When they have opposite signs, $\ell(y)$ increases linearly with $y$, and similarly if $|y| < 1$, even if it has the same sign (correct prediction, but not by enough margin). Extensions: While binary SVMs are commonly extended to ...
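The definition $\ell(y) = \max(0, 1 - t \cdot y)$ translates directly into code. The sketch below assumes labels $t \in \{+1, -1\}$ and a raw real-valued score $y$; the function name `hinge_loss` and the sample values are chosen for this illustration.

```python
def hinge_loss(t, y):
    """Hinge loss for an intended output t (+1 or -1) and a raw score y."""
    return max(0.0, 1.0 - t * y)


# Correct side of the boundary with enough margin: no loss.
confident = hinge_loss(1, 2.0)
# Correct sign but inside the margin: a small loss remains.
inside_margin = hinge_loss(1, 0.5)
# Wrong sign: the loss grows linearly with the score.
wrong_side = hinge_loss(-1, 0.5)
```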

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the *kernel trick*, representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ...

Subgradient

In mathematics, the subderivative (or subgradient) generalizes the derivative to convex functions which are not necessarily differentiable. The set of subderivatives at a point is called the subdifferential at that point. Subderivatives arise in convex analysis, the study of convex functions, often in connection to convex optimization. Let $f : I \to \mathbb{R}$ be a real-valued convex function defined on an open interval of the real line. Such a function need not be differentiable at all points: for example, the absolute value function $f(x) = |x|$ is non-differentiable when $x = 0$. However, as seen in the graph on the right (where $f(x)$ in blue has non-differentiable kinks similar to the absolute value function), for any $x_0$ in the domain of the function one can draw a line which goes through the point $(x_0, f(x_0))$ and which is everywhere either touching or below the graph of $f$. The slope of such a line is called a *subderivative*. Definition: Rigorously, a *subderivative* of a c ...
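The geometric description above (a line through $(x_0, f(x_0))$ that never rises above the graph) can be checked numerically. This sketch tests the inequality $f(x) \ge f(x_0) + c\,(x - x_0)$ on a finite grid, so it is a sanity check under that assumption rather than a proof; the helper name `is_subderivative` and the grid are hypothetical.

```python
def is_subderivative(f, x0, slope, test_points, tol=1e-12):
    """Check that the line through (x0, f(x0)) with the given slope
    stays on or below the graph of f at every test point."""
    return all(f(x) >= f(x0) + slope * (x - x0) - tol for x in test_points)


# Grid of test points on [-5, 5].
points = [i / 10 for i in range(-50, 51)]

# At the kink x0 = 0 of |x|, any slope in [-1, 1] works.
ok_half = is_subderivative(abs, 0.0, 0.5, points)
ok_minus_one = is_subderivative(abs, 0.0, -1.0, points)
# A slope steeper than 1 cuts above the graph.
too_steep = is_subderivative(abs, 0.0, 1.5, points)
```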

Greedy Algorithm

A greedy algorithm is any algorithm that follows the problem-solving heuristic of making the locally optimal choice at each stage. In many problems, a greedy strategy does not produce an optimal solution, but a greedy heuristic can yield locally optimal solutions that approximate a globally optimal solution in a reasonable amount of time. For example, a greedy strategy for the travelling salesman problem (which is of high computational complexity) is the following heuristic: "At each step of the journey, visit the nearest unvisited city." This heuristic does not intend to find the best solution, but it terminates in a reasonable number of steps; finding an optimal solution to such a complex problem typically requires unreasonably many steps. In mathematical optimization, greedy algorithms optimally solve combinatorial problems having the properties of matroids and give constant-factor approximations to optimization problems with the submodular structure. Specifics: Greedy algori ...
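The "visit the nearest unvisited city" heuristic quoted above can be sketched as follows. The function name `nearest_neighbor_tour`, the use of Euclidean distance, and the sample coordinates are assumptions made for this illustration.

```python
import math


def nearest_neighbor_tour(cities, start=0):
    """Build a tour by always moving to the nearest unvisited city."""
    unvisited = set(range(len(cities)))
    unvisited.remove(start)
    tour = [start]
    while unvisited:
        last = cities[tour[-1]]
        # Greedy step: pick the locally best (closest) next city.
        nxt = min(unvisited, key=lambda i: math.dist(last, cities[i]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


# Hypothetical cities on a line; the greedy tour visits them left to right.
cities = [(0, 0), (5, 0), (1, 0), (2, 0)]
tour = nearest_neighbor_tour(cities)
```

The heuristic runs in quadratic time in the number of cities, but it offers no guarantee that the resulting tour is the shortest one.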

Randomization

Randomization is a statistical process in which a random mechanism is employed to select a sample from a population or assign subjects to different groups (Oxford English Dictionary, "randomization"). The process is crucial in ensuring the random allocation of experimental units or treatment protocols, thereby minimizing selection bias and enhancing statistical validity. It facilitates the objective comparison of treatment effects in experimental design, as it equates groups statistically by balancing both known and unknown factors at the outset of the study. In statistical terms, it underpins the principle of probabilistic equivalence among groups, allowing for the unbiased estimation of treatment effects and the generalizability of conclusions drawn from sample data to the broader population. Randomization is not haphazard; instead, a random process is a sequence of random variables describing a process whose outcom ...
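Random assignment of subjects to groups can be sketched with a shuffle followed by a round-robin split. The function name `randomize`, the equal-size split, and the fixed seed (used only to make the example reproducible) are assumptions for this illustration, not a prescribed experimental protocol.

```python
import random


def randomize(subjects, n_groups=2, seed=None):
    """Randomly assign subjects to n_groups groups of near-equal size."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    # The shuffle is the random mechanism; every ordering is equally likely.
    rng.shuffle(shuffled)
    # Deal the shuffled subjects out round-robin.
    return [shuffled[i::n_groups] for i in range(n_groups)]


# Hypothetical study with ten subjects and two arms.
groups = randomize(range(10), n_groups=2, seed=0)
```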

Concavification

In mathematics, concavification is the process of converting a non-concave function to a concave function. A related concept is convexification: converting a non-convex function to a convex function. It is especially important in economics and mathematical optimization. Concavification of a quasiconcave function by monotone transformation: An important special case of concavification is where the original function is a quasiconcave function. It is known that:

* Every concave function is quasiconcave, but the converse is not true.
* Every monotone transformation of a quasiconcave function is also quasiconcave. For example, if $f : \mathbb{R}^n \to \mathbb{R}$ is quasiconcave and $g : \mathbb{R} \to \mathbb{R}$ is a monotonically increasing function, then $x \mapsto g(f(x))$ is also quasiconcave.

Therefore, a natural question is: *given a quasiconcave function* $f : \mathbb{R}^n \to \mathbb{R}$, *does there exist a monotonically increasing* $g : \mathbb{R} \to \mathbb{R}$ *such that* $x \mapsto g(f(x))$ *is c* ...

Regret (decision Theory)

In decision theory, regret aversion (or anticipated regret) describes how the human emotional response of regret can influence decision-making under uncertainty. When individuals make choices without complete information, they often experience regret if they later discover that a different choice would have produced a better outcome. This regret can be quantified as the difference in value between the actual decision made and what would have been the optimal decision in hindsight. Unlike traditional models that consider regret as merely a post-decision emotional response, the theory of regret aversion proposes that decision-makers actively anticipate potential future regret and incorporate this anticipation into their current decision-making process. This anticipation can lead individuals to make choices specifically designed to minimize the possibility of experiencing regret later, even if those choices are not optimal from a purely probabilistic expected-value perspective. Regre ...
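The quantification of regret described above, the gap between the best outcome in hindsight and the outcome actually obtained, can be sketched in a few lines. The function name `regret` and the payoff figures are hypothetical.

```python
def regret(payoffs, chosen):
    """Regret of a choice: best payoff in hindsight minus the payoff obtained."""
    return max(payoffs.values()) - payoffs[chosen]


# Hypothetical realized payoffs for three options.
payoffs = {"stock": 120.0, "bond": 105.0, "cash": 100.0}
regret_bond = regret(payoffs, "bond")
regret_stock = regret(payoffs, "stock")
```

Choosing the option that turned out best yields zero regret; any other choice yields a positive value.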

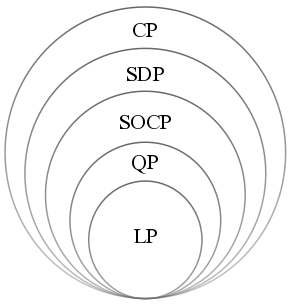

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Definition (abstract form): A convex optimization problem is defined by two ingredients:

* The *objective function*, which is a real-valued convex function of $n$ variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The *feasible set*, which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^* \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point $\mathbf{x}^*$ exists, it is referred to as an *optimal point* or *solution*; the set of all optimal points is called the *optimal set*; and the problem is called *solvable*.
* If f is unbou ...
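A small instance of the abstract form above can be solved with projected gradient descent, a standard method that is not mentioned in the text and is used here only as an illustration. The objective $f(x) = (x - 3)^2$, the feasible set $C = [0, 1]$, the step size, and all function names are assumptions for this sketch.

```python
def projected_gradient_descent(grad, project, x0, lr=0.1, steps=200):
    """Minimize a convex function over a convex set by alternating
    a gradient step with a projection back onto the feasible set."""
    x = x0
    for _ in range(steps):
        x = project(x - lr * grad(x))
    return x


# Objective f(x) = (x - 3)^2 with gradient 2 * (x - 3); feasible set C = [0, 1].
x_star = projected_gradient_descent(
    grad=lambda x: 2.0 * (x - 3.0),
    project=lambda x: min(1.0, max(0.0, x)),
    x0=0.0,
)
```

The unconstrained minimizer $x = 3$ lies outside $C$, so the optimal point is the boundary point $x^* = 1$.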

Representer Theorem

In computer science, specifically in statistical learning theory, a representer theorem is any of several related results stating that a minimizer $f^{*}$ of a regularized empirical risk functional defined over a reproducing kernel Hilbert space can be represented as a finite linear combination of kernel products evaluated on the input points in the training set data. Formal statement: The following Representer Theorem and its proof are due to Schölkopf, Herbrich, and Smola. Theorem: Consider a positive-definite real-valued kernel $k : \mathcal{X} \times \mathcal{X} \to \mathbb{R}$ on a non-empty set $\mathcal{X}$ with a corresponding reproducing kernel Hilbert space $H_k$. Let there be given

* a training sample $(x_1, y_1), \dotsc, (x_n, y_n) \in \mathcal{X} \times \mathbb{R}$,
* a strictly increasing real-valued function $g \colon [0, \infty) \to \mathbb{R}$, and
* an arbitrary error function $E \colon (\mathcal{X} \times \mathbb{R}^2)^n \to \mathbb{R} \cup \lbrace \infty \rbrace$,

which together define the f ...
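The "finite linear combination of kernel products" mentioned above has the form $f(x) = \sum_{i=1}^{n} \alpha_i\, k(x, x_i)$. The sketch below only evaluates such an expansion; it does not compute the minimizing coefficients. The Gaussian kernel, the coefficient values, and the function names are assumptions chosen for illustration.

```python
import math


def gaussian_kernel(x, z, gamma=1.0):
    """A positive-definite kernel on the real line (assumed for this example)."""
    return math.exp(-gamma * (x - z) ** 2)


def kernel_expansion(alphas, train_points, kernel):
    """Return f(x) = sum_i alpha_i * k(x, x_i) over the training inputs."""
    def f(x):
        return sum(a * kernel(x, xi) for a, xi in zip(alphas, train_points))
    return f


# Hypothetical coefficients for two training inputs x_1 = 0 and x_2 = 2.
f = kernel_expansion([1.0, -0.5], [0.0, 2.0], gaussian_kernel)
value_at_zero = f(0.0)
```

The function is fully determined by one coefficient per training point, even though the underlying Hilbert space may be infinite-dimensional.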