
Modified Half-normal Distribution

In probability theory and statistics, the half-normal distribution is a special case of the folded normal distribution. Let X follow an ordinary normal distribution, N(0,\sigma^2). Then Y = |X| follows a half-normal distribution. Thus, the half-normal distribution is a fold at the mean of an ordinary normal distribution with mean zero. Properties Using the \sigma parametrization of the normal distribution, the probability density function (PDF) of the half-normal is given by : f_Y(y; \sigma) = \frac{\sqrt{2}}{\sigma\sqrt{\pi}}\exp \left( -\frac{y^2}{2\sigma^2} \right) \quad y \geq 0, where E[Y] = \mu = \frac{\sigma\sqrt{2}}{\sqrt{\pi}}. Alternatively, using a scaled precision (inverse of the variance) parametrization (to avoid issues if \sigma is near zero), obtained by setting \theta=\frac{\sqrt{\pi}}{\sigma\sqrt{2}}, the probability density function is given by : f_Y(y; \theta) = \frac{2\theta}{\pi}\exp \left( -\frac{y^2\theta^2}{\pi} \right) \quad y \geq 0, where E[Y] = \mu = \frac{1}{\theta}. The cumulative distribution function (CDF) is given by : F_Y(y; \sigma) = \int_0^y \frac{1}{\sigma}\sqrt{\frac{2}{\pi}} \, \exp \left( -\frac{x^2}{2\sigma^2} \right) ...
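The two parametrizations of the half-normal density can be checked against each other numerically. The following is a minimal Python sketch; the function names are illustrative and not taken from the excerpt.

```python
import math

def half_normal_pdf(y, sigma):
    """Density of Y = |X|, X ~ N(0, sigma^2), in the sigma parametrization."""
    if y < 0:
        return 0.0
    return math.sqrt(2.0) / (sigma * math.sqrt(math.pi)) * math.exp(-y * y / (2.0 * sigma * sigma))

def half_normal_pdf_theta(y, theta):
    """Same density in the scaled-precision parametrization, theta = sqrt(pi) / (sigma * sqrt(2))."""
    if y < 0:
        return 0.0
    return 2.0 * theta / math.pi * math.exp(-y * y * theta * theta / math.pi)

def half_normal_mean(sigma):
    """E[Y] = sigma * sqrt(2) / sqrt(pi), which equals 1 / theta."""
    return sigma * math.sqrt(2.0) / math.sqrt(math.pi)

sigma = 2.0
theta = math.sqrt(math.pi) / (sigma * math.sqrt(2.0))
# Both parametrizations describe the same density, and the mean is 1 / theta.
print(half_normal_pdf(1.0, sigma), half_normal_pdf_theta(1.0, theta))
print(half_normal_mean(sigma), 1.0 / theta)
```

Working with \theta avoids dividing by \sigma inside the density, which is the numerical motivation the excerpt mentions.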

Half Normal Pdf

One half (plural: halves) is the irreducible fraction resulting from dividing one by two, or the fraction resulting from dividing any number by its double. Multiplication by one half is equivalent to division by two, or "halving"; conversely, division by one half is equivalent to multiplication by two, or "doubling". One half often appears in mathematical equations, recipes, measurements, etc. Half can also be said to be one part of something divided into two equal parts. For instance, the area S of a triangle is computed as : S = \frac{1}{2} × base × perpendicular height. One half also figures in the formulas for figurate numbers, such as triangular numbers and pentagonal numbers; for example, the n-th triangular number is : \frac{n(n+1)}{2} and in the formula for computing magic constants for magic squares : M_2(n) = \frac{n}{2} \left(n^{2} + 1\right) The Riemann hypothesis states that every nontrivial complex root of the Riemann zeta function has a real part equal to \frac{1}{2}. One half has two different decimal expansions, the ...

Generalized Gamma Distribution

The generalized gamma distribution is a continuous probability distribution with two shape parameters (and a scale parameter). It is a generalization of the gamma distribution, which has one shape parameter (and a scale parameter). Since many distributions commonly used for parametric models in survival analysis (such as the exponential distribution, the Weibull distribution and the gamma distribution) are special cases of the generalized gamma, it is sometimes used to determine which parametric model is appropriate for a given set of data. Another example is the half-normal distribution. Characteristics The generalized gamma distribution has two shape parameters, d > 0 and p > 0, and a scale parameter, a > 0. For non-negative x from a generalized gamma distribution, the probability density function is : f(x; a, d, p) = \frac{(p/a^d)\, x^{d-1} e^{-(x/a)^p}}{\Gamma(d/p)}, where \Gamma(\cdot) denotes the gamma function. The cumulative distribution function is : F(x; a, d, p) = \frac{\gamma(d/p, (x/a)^p)}{\Gamma(d/p)}, where \gamma(\cdot) denotes ...
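The special cases named above can be verified directly from the density. A minimal Python sketch follows; the function names are illustrative, and the parameter choices for the special cases (d = p = 1 for the exponential, d = 1, p = 2, a = \sigma\sqrt{2} for the half-normal) are standard identities rather than statements from the excerpt.

```python
import math

def gen_gamma_pdf(x, a, d, p):
    """Generalized gamma density with scale a > 0 and shapes d > 0, p > 0."""
    if x <= 0:
        # Simplification: the single boundary point x = 0 is treated as density 0.
        return 0.0
    return (p / a ** d) * x ** (d - 1) * math.exp(-((x / a) ** p)) / math.gamma(d / p)

def half_normal_pdf(y, sigma):
    """Half-normal density, used only as a comparison target."""
    return math.sqrt(2.0) / (sigma * math.sqrt(math.pi)) * math.exp(-y * y / (2.0 * sigma * sigma))

sigma = 0.7
x = 1.3
# d = 1, p = 2, a = sigma * sqrt(2) recovers the half-normal distribution.
print(gen_gamma_pdf(x, sigma * math.sqrt(2.0), 1.0, 2.0), half_normal_pdf(x, sigma))
# d = p = 1 recovers the exponential distribution with mean a.
print(gen_gamma_pdf(2.0, 3.0, 1.0, 1.0), math.exp(-2.0 / 3.0) / 3.0)
```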

Truncated Normal Distribution

In probability and statistics, the truncated normal distribution is the probability distribution derived from that of a normally distributed random variable by bounding the random variable from either below or above (or both). The truncated normal distribution has wide applications in statistics and econometrics. Definitions Suppose X has a normal distribution with mean \mu and variance \sigma^2 and lies within the interval (a,b), \text{with} \; -\infty \leq a < b \leq \infty . Then X conditional on a < X < b has a truncated normal distribution. Its probability density function, f, for a \leq x \leq b, is given by : f(x; \mu, \sigma, a, b) = \frac{1}{\sigma}\,\frac{\varphi\left(\frac{x-\mu}{\sigma}\right)}{\Phi\left(\frac{b-\mu}{\sigma}\right) - \Phi\left(\frac{a-\mu}{\sigma}\right)} and by f = 0 otherwise. Here, : \varphi(\xi) = \frac{1}{\sqrt{2\pi}}\exp\left(-\frac{1}{2}\xi^2\right) is ...
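As a rough illustration, the truncated normal density can be written with the standard normal density and CDF, the latter expressed through the error function. This is a sketch with illustrative helper names (`std_normal_pdf`, `std_normal_cdf`, `truncnorm_pdf`), not a reference implementation.

```python
import math

def std_normal_pdf(xi):
    """Standard normal density."""
    return math.exp(-0.5 * xi * xi) / math.sqrt(2.0 * math.pi)

def std_normal_cdf(xi):
    """Standard normal CDF via the error function; handles +/- infinity."""
    return 0.5 * (1.0 + math.erf(xi / math.sqrt(2.0)))

def truncnorm_pdf(x, mu, sigma, a, b):
    """Density of N(mu, sigma^2) conditioned on a < X < b; zero outside [a, b]."""
    if x < a or x > b:
        return 0.0
    z = std_normal_cdf((b - mu) / sigma) - std_normal_cdf((a - mu) / sigma)
    return std_normal_pdf((x - mu) / sigma) / (sigma * z)

def integrate_midpoint(f, lo, hi, steps=20_000):
    """Midpoint-rule quadrature, used here only as a sanity check."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))

# The density should integrate to about 1 over the truncation interval.
print(integrate_midpoint(lambda x: truncnorm_pdf(x, 0.5, 1.2, -1.0, 2.0), -1.0, 2.0))
# Truncating N(0, sigma^2) to [0, inf) gives the half-normal density.
print(truncnorm_pdf(1.0, 0.0, 2.0, 0.0, math.inf))
```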

Half-t Distribution

In statistics, the folded-t and half-t distributions are derived from Student's t-distribution by taking the absolute values of variates. This is analogous to the folded-normal and the half-normal statistical distributions being derived from the normal distribution. Definitions The folded non-standardized t distribution is the distribution of the absolute value of the non-standardized t distribution with \nu degrees of freedom; its probability density function is given by: : g\left(x\right) \;=\; \frac{\Gamma\left(\frac{\nu+1}{2}\right)}{\Gamma\left(\frac{\nu}{2}\right)\sqrt{\nu\pi\sigma^2}} \left\lbrace \left[1+\frac{1}{\nu}\frac{\left(x-\mu\right)^2}{\sigma^2}\right]^{-\frac{\nu+1}{2}} + \left[1+\frac{1}{\nu}\frac{\left(x+\mu\right)^2}{\sigma^2}\right]^{-\frac{\nu+1}{2}} \right\rbrace \qquad(\mbox{for}\quad x \geq 0). The half-t distribution results as the special case of \mu=0, and the standardized version as the special case of \sigma=1. If \mu=0, the folded-t distribution reduces to the special case of the half-t distribution. Its probability density function then simplifies to : g\left(x\right) \;=\; \frac{2\,\Gamma\left(\frac{\nu+1}{2}\right)}{\Gamma\left(\frac{\nu}{2}\right)\sqrt{\nu\pi\sigma^2}} \left(1+\frac{1}{\nu}\frac{x^2}{\sigma^2}\right)^{-\frac{\nu+1}{2}} \qquad(\mbox{for}\quad x \geq 0). ...
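The reduction from the folded-t to the half-t density at \mu = 0 can be checked numerically. The sketch below uses illustrative function names; the comparison with 2/\pi relies on the standard fact that the half-t with \nu = 1, \sigma = 1 is the half-Cauchy distribution.

```python
import math

def folded_t_pdf(x, nu, mu, sigma):
    """Density of |T| for a non-standardized t variate with location mu and scale sigma."""
    if x < 0:
        return 0.0
    c = math.gamma((nu + 1) / 2) / (math.gamma(nu / 2) * math.sqrt(nu * math.pi * sigma ** 2))
    def kernel(z):
        return (1.0 + z * z / (nu * sigma ** 2)) ** (-(nu + 1) / 2)
    return c * (kernel(x - mu) + kernel(x + mu))

def half_t_pdf(x, nu, sigma):
    """Half-t density: the mu = 0 case, where the two kernels coincide."""
    if x < 0:
        return 0.0
    c = math.gamma((nu + 1) / 2) / (math.gamma(nu / 2) * math.sqrt(nu * math.pi * sigma ** 2))
    return 2.0 * c * (1.0 + x * x / (nu * sigma ** 2)) ** (-(nu + 1) / 2)

# With mu = 0 the folded-t density equals the half-t density.
print(folded_t_pdf(1.5, 4.0, 0.0, 2.0), half_t_pdf(1.5, 4.0, 2.0))
# nu = 1, sigma = 1 gives the half-Cauchy density, whose value at 0 is 2 / pi.
print(half_t_pdf(0.0, 1.0, 1.0), 2.0 / math.pi)
```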

Skewness

In probability theory and statistics, skewness is a measure of the asymmetry of the probability distribution of a real-valued random variable about its mean. The skewness value can be positive, zero, negative, or undefined. For a unimodal distribution, negative skew commonly indicates that the tail is on the left side of the distribution, and positive skew indicates that the tail is on the right. In cases where one tail is long but the other tail is fat, skewness does not obey a simple rule. For example, a zero value means that the tails on both sides of the mean balance out overall; this is the case for a symmetric distribution, but can also be true for an asymmetric distribution where one tail is long and thin, and the other is short but fat. Introduction Consider the two distributions in the figure just below. Within each graph, the values on the right side of the distribution taper differently from the values on the left side. These tapering sides are called ' ...
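To make the sign convention concrete, here is a small Python sketch that computes a sample skewness as the third central moment divided by the 1.5 power of the second central moment. This is one common (biased, population-style) estimator chosen for illustration; the excerpt does not specify an estimator.

```python
def sample_skewness(xs):
    """Third standardized moment of a sample, using 1/n central moments."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in xs) / n  # third central moment
    return m3 / m2 ** 1.5

print(sample_skewness([1, 2, 3, 4, 5]))     # symmetric sample
print(sample_skewness([1, 1, 1, 10]))       # long right tail, positive skew
print(sample_skewness([-10, -1, -1, -1]))   # long left tail, negative skew
```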

Moment (mathematics)

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. The mathematical concept is closely related to the concept of moment in physics. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from 0 to \infty) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think sy ...
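The relationship between raw and central moments can be sketched numerically. The example below approximates moments of a density on a bounded interval with a midpoint rule; the helper names and the choice of the uniform density on [0, 1] are assumptions made for illustration.

```python
def raw_moment(f, n, lo, hi, steps=10_000):
    """Approximate the n-th raw moment, the integral of x^n f(x) over [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum((lo + (i + 0.5) * h) ** n * f(lo + (i + 0.5) * h) for i in range(steps))

def central_moment(f, n, lo, hi, steps=10_000):
    """Approximate the n-th central moment, normalized by the total mass."""
    mass = raw_moment(f, 0, lo, hi, steps)
    mean = raw_moment(f, 1, lo, hi, steps) / mass
    h = (hi - lo) / steps
    total = sum((lo + (i + 0.5) * h - mean) ** n * f(lo + (i + 0.5) * h) for i in range(steps))
    return h * total / mass

def uniform(x):
    """Uniform density on [0, 1]."""
    return 1.0

print(raw_moment(uniform, 0, 0.0, 1.0))      # zeroth moment: total mass, about 1
print(raw_moment(uniform, 1, 0.0, 1.0))      # first moment: expected value, about 0.5
print(central_moment(uniform, 2, 0.0, 1.0))  # second central moment: variance, about 1/12
```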

Graphical Model

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. They are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning. Types of graphical models Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that they induce. Undirected Graphical Model The undire ...

Binary Regression

In statistics, specifically regression analysis, a binary regression estimates a relationship between one or more explanatory variables and a single output binary variable. Generally the probability of the two alternatives is modeled, instead of simply outputting a single value, as in linear regression. Binary regression is usually analyzed as a special case of binomial regression, with a single outcome (n = 1), and one of the two alternatives considered as "success" and coded as 1: the value is the count of successes in 1 trial, either 0 or 1. The most common binary regression models are the logit model (logistic regression) and the probit model (probit regression). Applications Binary regression is principally applied either for prediction (binary classification), or for estimating the association between the explanatory variables and the output. In economics, binary regressions are used to model binary choice. Interpretations Binary regression models can be interpreted ...
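The difference between the logit and probit models lies in the function that maps a linear predictor to a probability. The following sketch shows both for a single explanatory variable; the coefficient names `b0` and `b1` are hypothetical, and no fitting procedure is shown.

```python
import math

def logit_prob(x, b0, b1):
    """P(y = 1 | x) under a logit model: the logistic function of b0 + b1 * x."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))

def probit_prob(x, b0, b1):
    """P(y = 1 | x) under a probit model: the standard normal CDF of b0 + b1 * x."""
    return 0.5 * (1.0 + math.erf((b0 + b1 * x) / math.sqrt(2.0)))

# Both models give probability 0.5 when the linear predictor is zero
# and increase with x when b1 > 0.
for x in (-1.0, 0.0, 1.0):
    print(x, logit_prob(x, 0.0, 1.0), probit_prob(x, 0.0, 1.0))
```

In both cases the model outputs a probability of "success" rather than a single predicted value, which is the distinction from linear regression drawn above.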

Bayesian

Thomas Bayes (/beɪz/; c. 1701 – 1761) was an English statistician, philosopher, and Presbyterian minister. Bayesian refers either to a range of concepts and approaches that relate to statistical methods based on Bayes' theorem, or to a follower of these methods. A number of things have been named after Thomas Bayes, including: Bayes * Bayes action * Bayes Business School * Bayes classifier * Bayes discriminability index * Bayes error rate * Bayes estimator * Bayes factor * Bayes Impact * Bayes linear statistics * Bayes prior * Bayes' theorem / Bayes-Price theorem (sometimes called Bayes' rule or Bayesian updating) * Empirical Bayes method * Evidence under Bayes theorem * Hierarchical Bayes model * Laplace–Bayes estimator * Naive Bayes classifier * Random naive Bayes Bayesian * Approximate Bayesian computation * Bayesian average * Bayesian Analysis (journal) * Bayesian approaches to brain function * Bayesian bootstrap * Bayesian control rule * Bayesian cognitive science * Bayesian e ...

Markov Chain Monte Carlo

In statistics, Markov chain Monte Carlo (MCMC) methods comprise a class of algorithms for sampling from a probability distribution. By constructing a Markov chain that has the desired distribution as its equilibrium distribution, one can obtain a sample of the desired distribution by recording states from the chain. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Various algorithms exist for constructing chains, including the Metropolis–Hastings algorithm. Application domains MCMC methods are primarily used for calculating numerical approximations of multi-dimensional integrals, for example in Bayesian statistics, computational physics, computational biology and computational linguistics. In Bayesian statistics, the recent development of MCMC methods has made it possible to compute large hierarchical models that require integrations over hundreds to thousands of unknown parameters. In rare even ...
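As an illustration of constructing such a chain, the sketch below implements a random-walk Metropolis sampler, the symmetric-proposal special case of Metropolis–Hastings. The target (an unnormalized half-normal density with \sigma = 1), the step size, the burn-in length, and all function names are assumptions chosen for the example.

```python
import math
import random

def log_half_normal(y, sigma=1.0):
    """Unnormalized log density of the half-normal; -inf outside the support."""
    if y < 0:
        return -math.inf
    return -y * y / (2.0 * sigma * sigma)

def metropolis(log_p, x0, n_steps, step, rng):
    """Random-walk Metropolis: Gaussian proposals, accept with prob min(1, p(new)/p(old))."""
    x, lp = x0, log_p(x0)
    samples = []
    for _ in range(n_steps):
        proposal = x + rng.gauss(0.0, step)
        lp_new = log_p(proposal)
        # The proposal is symmetric, so the acceptance ratio is just p(new) / p(old).
        if lp_new >= lp or rng.random() < math.exp(lp_new - lp):
            x, lp = proposal, lp_new
        samples.append(x)  # a rejected proposal repeats the current state
    return samples

rng = random.Random(0)
chain = metropolis(log_half_normal, 1.0, 60_000, 1.0, rng)
kept = chain[5_000:]  # discard an initial burn-in segment
# The sample mean should approach sqrt(2 / pi), the half-normal mean for sigma = 1.
print(sum(kept) / len(kept), math.sqrt(2.0 / math.pi))
```

Only the ratio of target densities enters the acceptance step, so the normalizing constant of the target is never needed.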

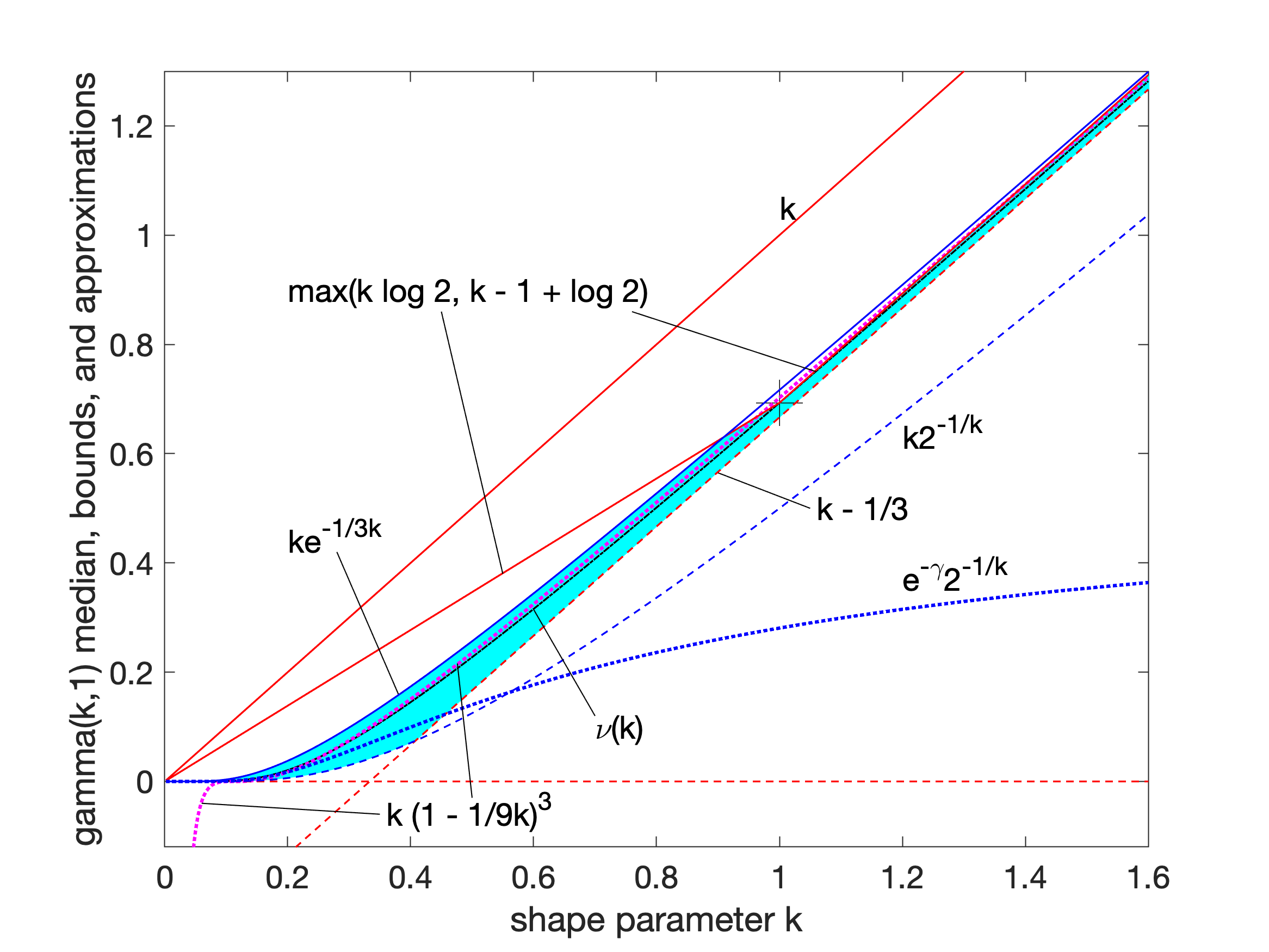

Gamma Distribution

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-square distribution are special cases of the gamma distribution. There are two equivalent parameterizations in common use: (1) with a shape parameter k and a scale parameter \theta; (2) with a shape parameter \alpha = k and an inverse scale parameter \beta = 1/\theta, called a rate parameter. In each of these forms, both parameters are positive real numbers. The gamma distribution is the maximum entropy probability distribution (both with respect to a uniform base measure and a 1/x base measure) for a random variable X for which E[X] = kθ = α/β is fixed and greater than zero, and E[ln(X)] = ψ(k) + ln(θ) = ψ(α) − ln(β) is fixed (ψ is the digamma function). Definitions The parameterization with k and θ appears to be more common ...
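The equivalence of the two parameterizations can be checked with a short sketch. The density formulas used here are the standard shape-scale and shape-rate forms, which the truncated excerpt does not reach; the function names are illustrative.

```python
import math

def gamma_pdf_scale(x, k, theta):
    """Gamma density with shape k and scale theta."""
    if x <= 0:
        return 0.0
    return x ** (k - 1) * math.exp(-x / theta) / (math.gamma(k) * theta ** k)

def gamma_pdf_rate(x, alpha, beta):
    """Gamma density with shape alpha and rate beta = 1 / theta."""
    if x <= 0:
        return 0.0
    return beta ** alpha * x ** (alpha - 1) * math.exp(-beta * x) / math.gamma(alpha)

k, theta = 2.5, 0.8
# Same distribution, two parameterizations: alpha = k, beta = 1 / theta.
print(gamma_pdf_scale(1.7, k, theta), gamma_pdf_rate(1.7, k, 1.0 / theta))
# The mean is k * theta = alpha / beta.
print(k * theta, k / (1.0 / theta))
# Shape k = 1 gives the exponential distribution with mean theta.
print(gamma_pdf_scale(2.0, 1.0, 3.0), math.exp(-2.0 / 3.0) / 3.0)
```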

Incomplete Gamma Function

In mathematics, the upper and lower incomplete gamma functions are types of special functions which arise as solutions to various mathematical problems such as certain integrals. Their respective names stem from their integral definitions, which are defined similarly to the gamma function but with different or "incomplete" integral limits. The gamma function is defined as an integral from zero to infinity. This contrasts with the lower incomplete gamma function, which is defined as an integral from zero to a variable upper limit. Similarly, the upper incomplete gamma function is defined as an integral from a variable lower limit to infinity. Definition The upper incomplete gamma function is defined as: \Gamma(s,x) = \int_x^{\infty} t^{s-1}\,e^{-t}\, dt , whereas the lower incomplete gamma function is defined as: \gamma(s,x) = \int_0^x t^{s-1}\,e^{-t}\, dt . In both cases s is a complex parameter, such that the real part of s is positive. Properties By integration by parts we find the recurrence re ...
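The integral definitions can be approximated directly for real arguments. The sketch below evaluates the lower function with a midpoint rule and obtains the upper function as the remainder of the complete gamma function, since the two integrals together cover zero to infinity. It assumes real s \geq 1 so the integrand stays bounded at t = 0; the function names are illustrative.

```python
import math

def lower_incomplete_gamma(s, x, steps=20_000):
    """Midpoint-rule approximation of the integral of t^(s-1) e^(-t) from 0 to x (real s >= 1)."""
    h = x / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        total += t ** (s - 1) * math.exp(-t)
    return total * h

def upper_incomplete_gamma(s, x, steps=20_000):
    """Upper function as the complement: Gamma(s) minus the lower incomplete gamma function."""
    return math.gamma(s) - lower_incomplete_gamma(s, x, steps)

# For s = 1 the integrals have closed forms: 1 - e^(-x) and e^(-x).
print(lower_incomplete_gamma(1.0, 2.0), 1.0 - math.exp(-2.0))
print(upper_incomplete_gamma(1.0, 2.0), math.exp(-2.0))
# For s = 2 the lower function is 1 - (1 + x) e^(-x).
print(lower_incomplete_gamma(2.0, 1.5), 1.0 - 2.5 * math.exp(-1.5))
```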