MPEG-4 Audio

MPEG-4 Part 3 or MPEG-4 Audio (formally ISO/IEC 14496-3) is the third part of the ISO/IEC MPEG-4 international standard developed by the Moving Picture Experts Group. It specifies audio coding methods. The first version of ISO/IEC 14496-3 was published in 1999. MPEG-4 Part 3 comprises a variety of audio coding technologies: lossy speech coding (HVXC, CELP), general audio coding (AAC, TwinVQ, BSAC), lossless audio compression (MPEG-4 SLS, Audio Lossless Coding, MPEG-4 DST), a Text-To-Speech Interface (TTSI), Structured Audio (using SAOL, SASL, MIDI), and many additional audio synthesis and coding techniques. MPEG-4 Audio does not target a single application such as real-time telephony or high-quality audio compression. It applies to every application that requires advanced sound compression, synthesis, manipulation, or playback. MPEG-4 Audio is a new type of audio standard that integrates numerous different types of audio coding: natural sound and ...

AAC-LC

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. It was developed by Dolby, AT&T, Fraunhofer and Sony, originally as part of the MPEG-2 specification but later improved under MPEG-4 (ISO/IEC 13818-7:2006, Part 7: Advanced Audio Coding). AAC was designed to be the successor of the MP3 format (MPEG-2 Audio Layer III) and generally achieves higher sound quality than MP3 at the same bit rate. AAC-encoded audio files are typically packaged in an MP4 container, most commonly using the filename extension .m4a. The basic profile of AAC (both MPEG-4 and MPEG-2) is called AAC-LC (Low Complexity). It is widely supported in the industry and has been adopted as the default or standard audio format on products including Apple's iTunes Store, Nintendo's Wii, DSi and 3DS and S ...

Advanced Audio Coding

Advanced Audio Coding (AAC) is an audio coding standard for lossy digital audio compression. It was developed by Dolby, AT&T, Fraunhofer and Sony, originally as part of the MPEG-2 specification but later improved under MPEG-4 (ISO/IEC 13818-7:2006, Part 7: Advanced Audio Coding). AAC was designed to be the successor of the MP3 format (MPEG-2 Audio Layer III) and generally achieves higher sound quality than MP3 at the same bit rate. AAC-encoded audio files are typically packaged in an MP4 container, most commonly using the filename extension .m4a. The basic profile of AAC (both MPEG-4 and MPEG-2) is called AAC-LC (Low Complexity). It is widely supported in the industry and has been adopted as the default or standard audio format on products including Apple's iTunes Store, Nintendo's Wii, DSi and ...

Audio Lossless Coding

MPEG-4 Audio Lossless Coding, also known as MPEG-4 ALS, is an extension to the MPEG-4 Part 3 audio standard to allow lossless audio compression. The extension was finalized in December 2005 and published as ISO/IEC 14496-3:2005/Amd 2:2006 in 2006. The latest description of MPEG-4 ALS was published as subpart 11 of the MPEG-4 Audio standard (ISO/IEC 14496-3:2019, 5th edition) in December 2019. MPEG-4 ALS combines a short-term predictor and a long-term predictor. The short-term predictor is similar to FLAC in its operation: it is a quantized LPC predictor with a losslessly coded residual using Golomb-Rice coding or Block Gilbert-Moore Coding (BGMC). The long-term predictor is modeled by 5 long-term weighted residues, each with its own lag (delay). The lag can be hundreds of samples. This predictor improves the compression for sounds with rich harmonics (containing multiples of a single fundamental frequency, locked in phase) present in many musical instruments and human vo ...
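
To make the residual-coding step concrete, here is a minimal sketch of Rice coding applied to a few prediction residuals. It is not the MPEG-4 ALS bitstream format: the function names, the zigzag mapping of signed values, the "ones then a zero" unary convention, and the fixed parameter `k` are all assumptions chosen for illustration.

```python
def zigzag(residual: int) -> int:
    """Map a signed residual to a non-negative integer: 0, -1, 1, -2 -> 0, 1, 2, 3."""
    return 2 * residual if residual >= 0 else -2 * residual - 1


def unzigzag(value: int) -> int:
    """Inverse of zigzag()."""
    return value // 2 if value % 2 == 0 else -(value + 1) // 2


def rice_encode(value: int, k: int) -> str:
    """Encode a non-negative integer as a unary quotient plus a k-bit remainder."""
    quotient = value >> k
    remainder = value & ((1 << k) - 1)
    remainder_bits = format(remainder, f"0{k}b") if k > 0 else ""
    return "1" * quotient + "0" + remainder_bits


def rice_decode(bits: str, k: int) -> int:
    """Decode a single Rice codeword produced by rice_encode()."""
    quotient = bits.index("0")  # number of leading ones
    remainder = int(bits[quotient + 1 : quotient + 1 + k], 2) if k > 0 else 0
    return (quotient << k) | remainder


if __name__ == "__main__":
    residuals = [0, -1, 3, -4]  # illustrative values, not real codec output
    k = 2
    codewords = [rice_encode(zigzag(r), k) for r in residuals]
    decoded = [unzigzag(rice_decode(c, k)) for c in codewords]
    print(codewords, decoded)
```

Small residuals get short codewords, which is why a good predictor (one that leaves small residuals) compresses well under this scheme.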

International Organization For Standardization

The International Organization for Standardization (ISO) is an independent, non-governmental, international standard development organization composed of representatives from the national standards organizations of member countries. Membership requirements are given in Article 3 of the ISO Statutes. ISO was founded on 23 February 1947, and it has published over 25,000 international standards covering almost all aspects of technology and manufacturing. It has over 800 technical committees (TCs) and subcommittees (SCs) to take care of standards development. The organization develops and publishes international standards in technical and nontechnical fields, including everything from manufactured products and technology to food safety, transport, IT, agriculture, and healthcare. More specialized topics like electrical and electronic engineering are instead handled by the International Electrotechnical Commission. ...

MIDI

Musical Instrument Digital Interface (MIDI) is an American-Japanese technical standard that describes a communication protocol, digital interface, and electrical connectors that connect a wide variety of electronic musical instruments, computers, and related audio devices for playing, editing, and recording music. A single MIDI cable can carry up to sixteen channels of MIDI data, each of which can be routed to a separate device. Each interaction with a key, button, knob or slider is converted into a MIDI event, which specifies musical instructions, such as a note's pitch, timing and velocity. One common MIDI application is to play a MIDI keyboard or other controller and use it to trigger a digital sound module (which contains synthesized musical sounds) to generate sounds, which the audience hears produced by a keyboard amplifier. MIDI data can be transferred via MIDI or USB cable, or recorded to a sequencer or digital audio workstation to be edited or played back. ...
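
As an illustration of how a key press becomes a MIDI event, the sketch below builds the three-byte Note On and Note Off channel messages (status byte `0x90` or `0x80` combined with the channel, then note number and velocity). The helper names are hypothetical and channels are numbered 0 to 15 here; this only constructs the bytes and does not talk to any device.

```python
def _check(channel: int, note: int, velocity: int) -> None:
    """Validate the ranges allowed by the channel-message layout."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15 (sixteen channels)")
    if not 0 <= note <= 127:
        raise ValueError("note must be 0..127")
    if not 0 <= velocity <= 127:
        raise ValueError("velocity must be 0..127")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a Note On message: status 0x9n, note number, velocity."""
    _check(channel, note, velocity)
    return bytes([0x90 | channel, note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Build a Note Off message: status 0x8n, note number, release velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | channel, note, velocity])


if __name__ == "__main__":
    # Middle C (note 60) on the first channel, moderately loud.
    print(note_on(0, 60, 100).hex(" "))
    print(note_off(0, 60).hex(" "))
```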

Table-lookup Synthesis

Wavetable synthesis is a sound synthesis technique used to create quasi-periodic waveforms often used in the production of musical tones or notes. Wavetable synthesis was invented by Max Mathews in 1958 as part of MUSIC II. MUSIC II "had four-voice polyphony and was capable of generating sixteen wave shapes via the introduction of a wavetable oscillator." Hal Chamberlin discussed wavetable synthesis in Byte's September 1977 issue. Wolfgang Palm of Palm Products GmbH (PPG) developed his version in the late 1970s and published it in 1979. The technique has since been used as the primary synthesis method in synthesizers built by PPG and Waldorf Music and as an auxiliary synthesis method by Ensoniq and Access. It is currently used in hardware synthesizers from Waldorf Music and in software synthesizers for PCs and tablets, including apps offered by PPG and Waldorf, among others. It was also independently developed by Michael McNabb, who used it in his 1 ...
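
The idea of a wavetable oscillator can be sketched as a stored single-cycle waveform read by a phase accumulator. The code below is a generic illustration, not the MUSIC II or PPG design: the table size, the sine wave shape, the linear interpolation, and all function names are assumptions.

```python
import math


def make_sine_table(size: int = 64) -> list[float]:
    """Store one cycle of a sine wave; any single-cycle shape could be used instead."""
    return [math.sin(2 * math.pi * i / size) for i in range(size)]


def wavetable_oscillator(
    table: list[float], freq_hz: float, sample_rate: float, num_samples: int
) -> list[float]:
    """Read the table at a rate proportional to the desired frequency."""
    size = len(table)
    step = freq_hz * size / sample_rate  # table positions advanced per output sample
    phase = 0.0
    out = []
    for _ in range(num_samples):
        index = int(phase) % size
        frac = phase - int(phase)
        current = table[index]
        following = table[(index + 1) % size]  # wrap around at the end of the cycle
        out.append(current + frac * (following - current))  # linear interpolation
        phase = (phase + step) % size
    return out


if __name__ == "__main__":
    samples = wavetable_oscillator(make_sine_table(), 440.0, 44100.0, 8)
    print([round(s, 4) for s in samples])
```

Changing `freq_hz` changes only the read step, so the same stored cycle yields any pitch.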

HE-AAC

High-Efficiency Advanced Audio Coding (HE-AAC) is an audio coding format for lossy data compression of digital audio as part of the MPEG-4 standards. It is an extension of Low Complexity AAC (AAC-LC) optimized for low-bitrate applications such as streaming audio. The usage profile HE-AAC v1 uses spectral band replication (SBR) to enhance the modified discrete cosine transform (MDCT) compression efficiency in the frequency domain. The usage profile HE-AAC v2 couples SBR with Parametric Stereo (PS) to further enhance the compression efficiency of stereo signals. HE-AAC is defined as an MPEG-4 Audio profile in ISO/IEC 14496-3. HE-AAC is used in digital radio standards like HD Radio, DAB+ and Digital Radio Mondiale. The progenitor of HE-AAC was developed by Coding Technologies by combining MPEG-2 AAC-LC with a proprietary mechanism for spectral band replication (SBR), to be used by XM Radio for their satellite radio service. Subsequently, Coding Te ...

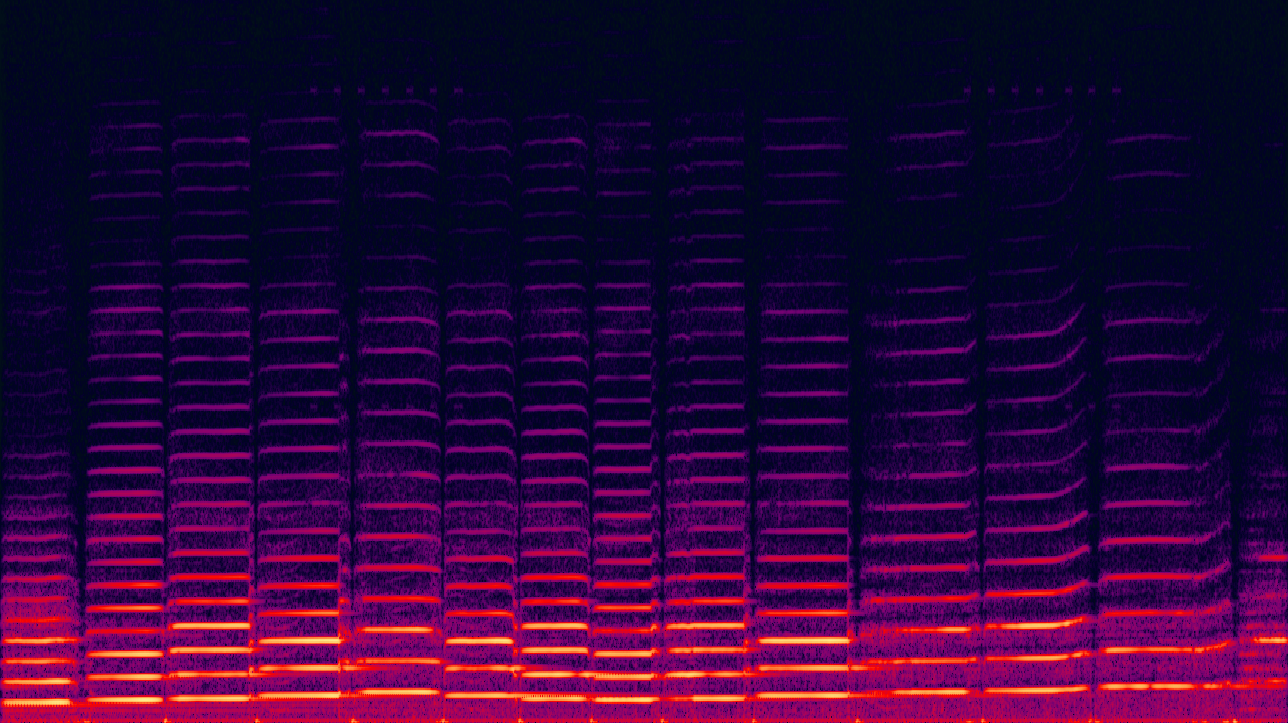

Spectral Band Replication

Spectral band replication (SBR) is a technology to enhance audio or speech codecs, especially at low bit rates, and is based on harmonic redundancy in the frequency domain. It can be combined with any audio compression codec: the codec itself transmits the lower and mid frequencies of the spectrum, while SBR replicates higher-frequency content by transposing up harmonics from the lower and mid frequencies at the decoder. Some guidance information for reconstruction of the high-frequency spectral envelope is transmitted as side information. When needed, it also reconstructs or adaptively mixes in noise-like information in selected frequency bands in order to faithfully replicate signals that originally contained no or fewer tonal components. The SBR idea is based on the principle that the psychoacoustic part of the human brain tends to analyse higher frequencies with less accuracy; thus harmonic phenomena associated with the spectral band replication process ...

Long Term Prediction

In GSM, a Regular Pulse Excitation-Long Term Prediction (RPE-LTP) scheme is employed in order to reduce the amount of data sent between the mobile station (MS) and base transceiver station (BTS). In essence, when the voltage level of a particular speech sample is quantized, the mobile station's internal logic predicts the voltage level for the next sample. When the next sample is quantized, the packet sent by the MS to the BTS contains only the error (the signed difference between the actual and predicted level of the sample). See also: GSM; Quantization (signal processing).
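
The "send only the error" idea can be sketched with a deliberately simplified predictor that assumes the next sample equals the previous one. This is not the GSM RPE-LTP algorithm, which is far more elaborate; the function names and the previous-sample predictor are assumptions used only to show that the receiver can rebuild the signal from the errors.

```python
def encode_prediction_errors(samples: list[int]) -> list[int]:
    """Sender side: transmit the signed difference between actual and predicted level."""
    errors = []
    predicted = 0  # both sides agree to start from zero
    for sample in samples:
        errors.append(sample - predicted)
        predicted = sample  # simplistic prediction: next sample equals this one
    return errors


def decode_prediction_errors(errors: list[int]) -> list[int]:
    """Receiver side: run the same predictor and add each received error."""
    samples = []
    predicted = 0
    for error in errors:
        sample = predicted + error
        samples.append(sample)
        predicted = sample
    return samples


if __name__ == "__main__":
    levels = [100, 102, 101, 105]  # illustrative quantized levels
    errors = encode_prediction_errors(levels)
    print(errors)
    print(decode_prediction_errors(errors))
```

When consecutive samples are similar, the errors are much smaller than the samples themselves and need fewer bits to transmit.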

Scalable Lossless Coding

MPEG-4 SLS, or MPEG-4 Scalable to Lossless as per ISO/IEC 14496-3:2005/Amd 3:2006 (Scalable Lossless Coding), is an extension to the MPEG-4 Part 3 (MPEG-4 Audio) standard to allow lossless audio compression scalable to lossy MPEG-4 General Audio coding methods (e.g., variations of AAC). It was developed jointly by the Institute for Infocomm Research (I2R) and Fraunhofer, which commercializes its implementation of a limited subset of the standard under the name HD-AAC. Standardization of the HD-AAC profile for MPEG-4 Audio is under development (as of September 2009). MPEG-4 SLS allows having both a lossy layer and a lossless correction layer, similar to WavPack Hybrid, OptimFROG DualStream and DTS-HD Master Audio, providing backwards compatibility to MPEG AAC-compliant bitstreams. MPEG-4 SLS can also work without a lossy layer (a.k.a. "SLS Non-Core"), in which case it will not be backwards compatible. Lossy compression of files is necessary for files that need to be stre ...

MPEG-2

MPEG-2 (also known as H.222/H.262, as defined by the ITU) is a standard for "the generic coding of moving pictures and associated audio information". It describes a combination of lossy video compression and lossy audio data compression methods, which permit storage and transmission of movies using currently available storage media and transmission bandwidth. While MPEG-2 is not as efficient as newer standards such as H.264/AVC and H.265/HEVC, backwards compatibility with existing hardware and software means it is still widely used, for example in over-the-air digital television broadcasting and in the DVD-Video standard. MPEG-2 is widely used as the format of digital television signals that are broadcast by terrestrial (over-the-air), cable, and direct broadcast satellite TV systems. It also specifies the format of movies and other programs that are distributed on DVD and similar discs. TV stations, TV receivers, DVD players, and other equipment are ...

MPEG-1

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s (26:1 and 6:1 compression ratios respectively) without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) practical. Today, MPEG-1 has become the most widely compatible lossy audio/video format in the world, and is used in a large number of products and technologies. Perhaps the best-known part of the MPEG-1 standard is the first version of the MP3 audio format it introduced. The MPEG-1 standard is published as ISO/IEC 11172, titled "Information technology - Coding of moving pictures and associated audio for digital storage media at up to about 1.5 Mbit/s". The standard consists of the follow ...