
Lê Viết Quốc

Lê Viết Quốc (born 1982), or in romanized form Quoc Viet Le, is a Vietnamese-American computer scientist and a machine learning pioneer at Google Brain, which he helped establish with others at Google. He co-invented the doc2vec and seq2seq models in natural language processing. Le also initiated and led the AutoML initiative at Google Brain, including the proposal of neural architecture search.

Le was born in Hương Thủy in the Thừa Thiên Huế province of Vietnam. He studied at Quốc Học – Huế High School for the Gifted. In 2004, Le moved to Australia and attended the Australian National University for his bachelor's degree, during which he worked under Alex Smola on kernel methods in machine learning. In 2007, Le moved to Stanford University for graduate studies in computer science, where his PhD advisor was Andrew Ng. In 2011, Le became a founding member of Google Brain along with his then PhD advisor An ...

AutoML

Automated machine learning (AutoML) is the process of automating the tasks of applying machine learning to real-world problems. AutoML can cover every stage, from a raw dataset to a machine learning model ready for deployment. AutoML was proposed as an artificial-intelligence-based solution to the growing challenge of applying machine learning. Its high degree of automation aims to let non-experts use machine learning models and techniques without first having to become machine learning experts. Automating the process end to end can also produce simpler solutions, produce them faster, and yield models that often outperform hand-designed ones. Common techniques used in AutoML include hyperparameter optimization, meta-learning and neural architecture search.

In a typical machine learning application, practitio ...
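Hyperparameter optimization, one of the techniques listed above, can be sketched as a plain random search. This is a minimal illustration under stated assumptions, not how any particular AutoML system works: the search space, the hyperparameter names `learning_rate` and `num_layers`, and `toy_validation_loss` are hypothetical, and the loss is a synthetic stand-in for actually training and validating a model.

```python
import math
import random

# Hypothetical search space: hyperparameter name -> candidate values.
SEARCH_SPACE = {
    "learning_rate": [0.001, 0.01, 0.1, 1.0],
    "num_layers": [1, 2, 3],
}


def toy_validation_loss(config):
    """Synthetic stand-in for "train with config, return validation loss".

    Assumption for illustration only: the loss is lowest (zero) at
    learning_rate=0.1 and num_layers=2.
    """
    lr_penalty = abs(math.log10(config["learning_rate"]) + 1)
    depth_penalty = abs(config["num_layers"] - 2)
    return lr_penalty + depth_penalty


def random_search(objective, space, trials, seed=0):
    """Sample configurations uniformly at random and keep the best one."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_config, best_loss = None, float("inf")
    for _ in range(trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        loss = objective(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


if __name__ == "__main__":
    config, loss = random_search(toy_validation_loss, SEARCH_SPACE, trials=200)
    print(config, loss)
```

With only 12 combinations, an exhaustive grid search would be cheaper here; random search tends to pay off when there are many hyperparameters or continuous ranges, because its cost is fixed by the trial budget rather than by the size of the grid.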

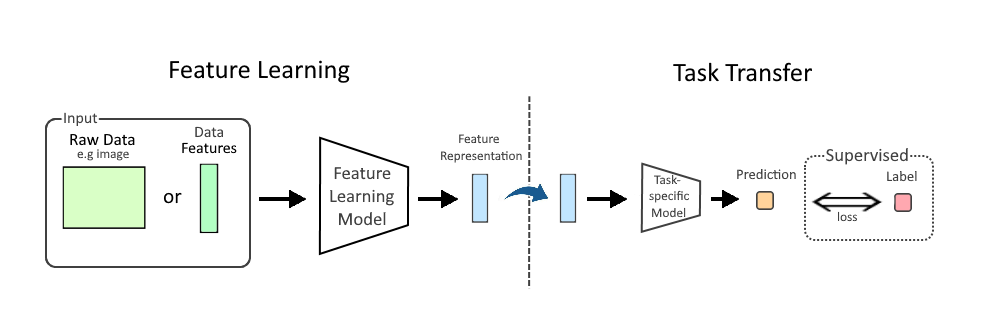

Representation Learning

In machine learning, feature learning or representation learning is a set of techniques that allows a system to automatically discover the representations needed for feature detection or classification from raw data. This replaces manual feature engineering and allows a machine both to learn the features and to use them to perform a specific task. Feature learning is motivated by the fact that machine learning tasks such as classification often require input that is mathematically and computationally convenient to process. However, real-world data such as images, video, and sensor data has not yielded to attempts to algorithmically define specific features. An alternative is to discover such features or representations through examination, without relying on explicit algorithms. Feature learning can be supervised, unsupervised or self-supervised.

* In supervised feature learning, features are learned using labeled input data. Labeled data includes input-label pairs where the ...
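One classic unsupervised way to learn features is to cluster the raw inputs with k-means and then describe each input by its distances to the learned centroids. The sketch below assumes 2-D points, uses a deterministic farthest-point initialization (chosen here for reproducibility in place of the usual random or k-means++ start), and the function names `kmeans` and `encode` are hypothetical.

```python
import math


def kmeans(points, k, iters=20):
    """Lloyd's k-means with a deterministic farthest-point initialization."""
    # Initialization (assumption): start at the first point, then repeatedly
    # add the point farthest from all centroids chosen so far.
    centroids = [list(points[0])]
    while len(centroids) < k:
        farthest = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(list(farthest))

    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for j, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = [sum(axis) / len(members) for axis in zip(*members)]
    return centroids


def encode(point, centroids):
    """Learned representation: the point's distance to every centroid."""
    return [math.dist(point, c) for c in centroids]


if __name__ == "__main__":
    data = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
    centroids = kmeans(data, k=2)
    print(centroids)
    print(encode((0, 0), centroids))
```

Each raw input becomes a k-dimensional feature vector, and inputs from the same cluster receive similar vectors, which is the kind of representation a downstream classifier could consume.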

Tomáš Mikolov

Tomáš Mikolov is a Czech computer scientist working in the field of machine learning. In March 2020, Mikolov became a senior research scientist at the Czech Institute of Informatics, Robotics and Cybernetics.

Mikolov obtained his PhD in computer science from Brno University of Technology for his work on recurrent neural network-based language models. He is the lead author of the 2013 paper that introduced the Word2vec technique in natural language processing and is an author of the FastText architecture. Mikolov came up with the idea of generating text from neural language models in 2007, and his RNNLM toolkit was the first to demonstrate the capability to train language models on large corpora, resulting in large ...

Machine Translation

Machine translation, sometimes referred to by the abbreviation MT (not to be confused with computer-aided translation, machine-aided human translation or interactive translation), is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another. On a basic level, MT performs mechanical substitution of words in one language for words in another, but that alone rarely produces a good translation, because whole phrases and their closest counterparts in the target language must be recognized. Not all words in one language have equivalent words in another language, and many words have more than one meaning. Solving this problem with corpus-based statistical and neural techniques is a rapidly growing field that is leading to better translations, better handling of differences in linguistic typology, translation of idioms, and isolation of anomalies. Current machine translation software often allows for customizat ...
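The limits of purely mechanical word-for-word substitution are easy to reproduce. The sketch below uses a tiny, hypothetical English-to-French lexicon (not drawn from any real MT system), always picks the first listed sense of each word, and flags ambiguous and unknown words.

```python
# Hypothetical toy lexicon: English word -> candidate French translations.
LEXICON = {
    "the": ["le", "la"],       # gender depends on the noun that follows
    "river": ["rivière"],
    "bank": ["banque", "rive"],  # financial institution vs. riverbank
}


def substitute(sentence):
    """Naive MT: replace each word independently, ignoring context."""
    output, notes = [], []
    for word in sentence.lower().split():
        senses = LEXICON.get(word)
        if senses is None:
            output.append(word)  # unknown words pass through unchanged
            notes.append(f"unknown word: {word}")
            continue
        output.append(senses[0])  # always take the first listed sense
        if len(senses) > 1:
            notes.append(f"ambiguous: {word} -> {senses}")
    return " ".join(output), notes


if __name__ == "__main__":
    text, notes = substitute("the river bank")
    print(text)   # le rivière banque
    print(notes)
```

For "the river bank" the result is "le rivière banque": the article has the wrong gender, "bank" gets its financial sense, and the phrase is translated piece by piece instead of being recognized as a unit (French would say "la rive"). These are the phrase-level and word-sense problems the paragraph attributes to mechanical substitution alone.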

Oriol Vinyals

Oriol Vinyals (born 1983) is a Spanish machine learning researcher at DeepMind, where he is the principal research scientist. His research at DeepMind has regularly been featured in the mainstream media, especially since DeepMind was acquired by Google.

Vinyals was born in Barcelona, Spain. He studied mathematics and telecommunication engineering at the Universitat Politècnica de Catalunya. He then moved to the US and studied for a Master's degree in computer science at the University of California, San Diego, and at the University of California, Berkeley, where he received his PhD in 2013 under Nelson Morgan in the Department of Electrical Engineering and Computer Science. Vinyals co-invented the seq2seq model for machine translation along with Ilya Sutskever and Quoc Viet Le. He led the AlphaStar research group at DeepMind, which applies artificial intelligence to computer games such as StarCraft II. In 2016, he was chosen by the magazine MIT Technology Review as one of the 3 ...

Ilya Sutskever

Ilya Sutskever is a computer scientist working in machine learning who co-founded and serves as Chief Scientist of OpenAI. He has made several major contributions to the field of deep learning. He is the co-inventor, with Alex Krizhevsky and Geoffrey Hinton, of AlexNet, a convolutional neural network. Sutskever is also one of the many authors of the AlphaGo paper.

Sutskever attended the Open University of Israel between 2000 and 2002. In 2002, he moved with his family to Canada and transferred to the University of Toronto, where he obtained his B.Sc. in mathematics (2005), and his M.Sc. (2007) and Ph.D. (2012) in computer science under the supervision of Geoffrey Hinton. After graduating in 2012, Sutskever spent two months as a postdoc with Andrew Ng at Stanford University. He then returned to the University of Toronto and joined Hinton's new research company DNNResearch, a spinoff of Hinton's ...

YouTube

YouTube is a global online video sharing and social media platform headquartered in San Bruno, California. It was launched on February 14, 2005, by Steve Chen, Chad Hurley, and Jawed Karim. It is owned by Google and is the second most visited website, after Google Search. YouTube has more than 2.5 billion monthly users who collectively watch more than one billion hours of videos each day. Videos were being uploaded at a rate of more than 500 hours of content per minute. In October 2006, YouTube was bought by Google for $1.65 billion. Google's ownership of YouTube expanded the site's business model from generating revenue from advertisements alone to offering paid content such as movies and exclusive content produced by YouTube. It also offers YouTube Premium, a paid subscription option for watching content without ads. YouTube also approved creators to participate in Google's AdSens ...

CPU Core

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry that executes the instructions that make up a computer program. The CPU performs the basic arithmetic, logic, control, and input/output (I/O) operations specified by the program's instructions. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs). The form, design, and implementation of CPUs have changed over time, but their fundamental operation remains almost unchanged. Principal components of a CPU include the arithmetic–logic unit (ALU), which performs arithmetic and logic operations; processor registers, which supply operands to the ALU and store the results of ALU operations; and a control unit, which orchestrates the fetching of instructions from memory, their decoding, and their execution by directing the coordinated operations of the ALU, registers and other compo ...
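The fetch, decode and execute cycle coordinated by the control unit can be sketched as a toy interpreter. Everything about the instruction set here (the opcodes `LOADI`, `ADD`, `SUB`, `JNZ` and `HALT`, four registers, and a Python list standing in for instruction memory) is invented for illustration and does not correspond to any real CPU.

```python
def alu(op, a, b):
    """Arithmetic-logic unit: combine two operands according to op."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    raise ValueError(f"ALU does not support {op}")


def run(program, num_registers=4):
    """Control unit: repeatedly fetch, decode and execute instructions."""
    registers = [0] * num_registers
    pc = 0  # program counter: index of the next instruction in memory
    while pc < len(program):
        instruction = program[pc]        # fetch
        pc += 1
        opcode, *operands = instruction  # decode
        if opcode == "LOADI":            # execute: load an immediate value
            dest, value = operands
            registers[dest] = value
        elif opcode in ("ADD", "SUB"):   # execute: route register operands through the ALU
            dest, src1, src2 = operands
            registers[dest] = alu(opcode, registers[src1], registers[src2])
        elif opcode == "JNZ":            # execute: jump if the register is non-zero
            reg, target = operands
            if registers[reg] != 0:
                pc = target
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode {opcode}")
    return registers


# Multiply 6 by 7 through repeated addition.
PROGRAM = [
    ("LOADI", 0, 0),   # r0 = 0 (accumulator)
    ("LOADI", 1, 7),   # r1 = 7 (loop counter)
    ("LOADI", 2, 6),   # r2 = 6 (value to add)
    ("LOADI", 3, 1),   # r3 = 1 (decrement step)
    ("ADD", 0, 0, 2),  # r0 = r0 + r2
    ("SUB", 1, 1, 3),  # r1 = r1 - r3
    ("JNZ", 1, 4),     # loop back to the ADD while r1 != 0
    ("HALT",),
]

if __name__ == "__main__":
    print(run(PROGRAM))  # [42, 0, 6, 1]
```

The registers supply operands to `alu` and receive its results, while `run` plays the control unit by moving the program counter and deciding what each decoded instruction does.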

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Deep-learning architectures such as deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and Transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection and board game programs, where they have produced results comparable to, and in some cases surpassing, human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distributed communication nodes in biological systems. ANNs have various differences from biological brains. Specifically, artificial ...
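The layered structure of a deep network can be illustrated with a forward pass through a small two-layer network. The weights below are hand-picked for illustration rather than learned, and the names `dense`, `relu`, `identity` and `forward` are hypothetical; the point is that the hidden layer computes intermediate features that let the network represent XOR, which no single linear layer can.

```python
def relu(z):
    """Rectified linear activation."""
    return max(0.0, z)


def identity(z):
    """Linear (no-op) activation for the output layer."""
    return z


def dense(inputs, weights, biases, activation):
    """Fully connected layer: activation(W x + b), one output per row of W."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hand-picked (not trained) weights: hidden unit 1 computes x1 + x2,
# hidden unit 2 is positive only when both inputs are 1; the output
# subtracts twice the second hidden unit from the first.
LAYERS = [
    ([[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu),  # hidden layer
    ([[1.0, -2.0]], [0.0], identity),               # output layer
]


def forward(x):
    """Pass the input through each layer in turn."""
    for weights, biases, activation in LAYERS:
        x = dense(x, weights, biases, activation)
    return x


if __name__ == "__main__":
    for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
        print(x, forward(list(x)))
```

In a real deep-learning setting these weights would be found by gradient-based training rather than set by hand; the sketch only shows how stacking layers builds new features from the outputs of earlier ones.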

Google

Google LLC is an American multinational technology company focusing on search engine technology, online advertising, cloud computing, computer software, quantum computing, e-commerce, artificial intelligence, and consumer electronics. It has been referred to as "the most powerful company in the world" and one of the world's most valuable brands due to its market dominance, data collection, and technological advantages in the area of artificial intelligence. Its parent company Alphabet is considered one of the Big Five American information technology companies, alongside Amazon, Apple, Meta, and Microsoft. Google was founded on September 4, 1998, by Larry Page and Sergey Brin while they were PhD students at Stanford University in California. Together they own about 14% of its publicly listed shares and control 56% of its stockholder voting power through super-voting stock. The company went public via an initial public offering (IPO) in 2004. In 2015, Google was reor ...

Jeff Dean

Jeffrey Adgate "Jeff" Dean (born July 23, 1968) is an American computer scientist and software engineer. Since 2018, he has been the lead of Google AI, Google's AI division.

Dean received a B.S. in computer science and economics, summa cum laude, from the University of Minnesota in 1990. He received a Ph.D. in computer science from the University of Washington in 1996, working under Craig Chambers on compilers and whole-program optimization techniques for object-oriented programming languages. He was elected to the National Academy of Engineering in 2009, in recognition of his work on "the science and engineering of large-scale distributed computer systems".

Before joining Google, Dean worked at DEC/Compaq's Western Research Laboratory on profiling tools, microprocessor architecture and information retrieval. Much of his work was completed in close collaboration with Sanjay Ghemawat. Before graduate school, he worked at the World Health Org ...