
Limits to Computation

The limits of computation are governed by a number of different factors. In particular, there are several physical and practical limits to the amount of computation or data storage that can be performed with a given amount of mass, volume, or energy.

Hardware limits or physical limits

Processing and memory density

* The Bekenstein bound limits the amount of information that can be stored within a spherical volume to the entropy of a black hole with the same surface area.
* Thermodynamics limits the data storage of a system based on its energy, number of particles and particle modes. In practice, it is a stronger bound than the Bekenstein bound.

Processing speed

* Bremermann's limit is the maximum computational speed of a self-contained system in the material universe, and is based on mass–energy versus quantum uncertainty constraints.

Communication delays

* The Margolus–Levitin theorem sets a bound on the maximum computational speed per unit of energy: 6 × 10³³ ...
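The bounds listed above can be turned into rough numbers with a few lines of code. The sketch below assumes the textbook forms of each bound: Margolus–Levitin as 2E/(πħ) operations per second for a system of energy E above its ground state, Bremermann's limit as c²/h bits per second per kilogram, and the Bekenstein bound in its common 2πRE/(ħc ln 2) form (rather than the surface-area form quoted above). The function names are hypothetical and chosen only for illustration; the thermodynamic bound is not modelled.

```python
import math

# SI constants (CODATA 2018 exact values for h and c)
H = 6.62607015e-34          # Planck constant, J*s
HBAR = H / (2 * math.pi)    # reduced Planck constant, J*s
C = 299_792_458.0           # speed of light, m/s


def margolus_levitin_rate(energy_j):
    """Assumed form: at most 2E / (pi * hbar) operations per second."""
    return 2.0 * energy_j / (math.pi * HBAR)


def bremermann_limit(mass_kg):
    """Assumed form: c^2 / h bits per second per kilogram of mass."""
    return mass_kg * C**2 / H


def bekenstein_bits(radius_m, energy_j):
    """Assumed form: 2*pi*R*E / (hbar * c * ln 2) bits in a sphere of radius R."""
    return 2.0 * math.pi * radius_m * energy_j / (HBAR * C * math.log(2))


if __name__ == "__main__":
    one_kg_energy = 1.0 * C**2  # rest energy E = mc^2 of 1 kg
    print(f"Margolus-Levitin per joule: {margolus_levitin_rate(1.0):.3e} ops/s")
    print(f"Margolus-Levitin for 1 kg:  {margolus_levitin_rate(one_kg_energy):.3e} ops/s")
    print(f"Bremermann for 1 kg:        {bremermann_limit(1.0):.3e} bit/s")
    print(f"Bekenstein, 1 kg in 1 m:    {bekenstein_bits(1.0, one_kg_energy):.3e} bits")
```

The per-joule Margolus–Levitin figure comes out at roughly 6 × 10³³ operations per second, consistent with the value quoted in the list. Because all three bounds are linear in energy (or mass), doubling the energy budget simply doubles the bound.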

Computation

Computation is any type of arithmetic or non-arithmetic calculation that follows a well-defined model (e.g., an algorithm). Mechanical or electronic devices (or, historically, people) that perform computations are known as computers. The best-known discipline devoted to the study of computation is computer science.

Physical process of computation

Computation can be seen as a purely physical process occurring inside a closed physical system called a computer. Examples of such physical systems are digital computers, mechanical computers, quantum computers, DNA computers, molecular computers, microfluidics-based computers, analog computers, and wetware computers. This point of view has been adopted by the physics of computation, a branch of theoretical physics, as well as by the field of natural computing. An even more radical point of view, pancomputationalism, is the postulate of digital physics that argues that the evolution of the universe is itself ...

Reversible Computing

Reversible computing is any model of computation in which the computational process is, to some extent, time-reversible. In a model of computation that uses deterministic transitions from one state of the abstract machine to another, a necessary condition for reversibility is that the mapping from states to their successors be one-to-one. Reversible computing is a form of unconventional computing.

Due to the unitarity of quantum mechanics, quantum circuits are reversible, as long as they do not "collapse" the quantum states they operate on.

Reversibility

There are two major, closely related types of reversibility that are of particular interest for this purpose: physical reversibility and logical reversibility. A process is said to be physically reversible if it results in no increase in physical entropy; that is, it is isentropic. There is a style of circuit design ideally exhibiting this property that is referred to as charge recovery logic, adiabatic circui ...
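The one-to-one condition can be checked mechanically on small Boolean gates by enumerating every input. The sketch below compares an ordinary AND gate with the Toffoli (controlled-controlled-NOT) gate, a standard reversible gate chosen here purely for illustration; the helper `is_one_to_one` is a hypothetical name.

```python
from itertools import product


def toffoli(a, b, c):
    """Controlled-controlled-NOT: flips c only when a and b are both 1."""
    return a, b, c ^ (a & b)


def and_gate(a, b):
    """Ordinary AND: two input bits in, one output bit out."""
    return a & b


def is_one_to_one(gate, n_inputs):
    """True if distinct input bit-tuples always produce distinct outputs."""
    outputs = [gate(*bits) for bits in product((0, 1), repeat=n_inputs)]
    return len(set(outputs)) == len(outputs)


if __name__ == "__main__":
    print("Toffoli one-to-one:", is_one_to_one(toffoli, 3))   # True
    print("AND one-to-one:    ", is_one_to_one(and_gate, 2))  # False
    # Applying the Toffoli gate twice restores every input: it is its own inverse.
    assert all(toffoli(*toffoli(*bits)) == bits for bits in product((0, 1), repeat=3))
```

AND maps four inputs onto two outputs, so the input cannot be recovered from the output; the Toffoli gate keeps its control bits, which is what makes the mapping invertible.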

Hawking Radiation

Hawking radiation is black-body radiation that is theorized to be released outside a black hole's event horizon because of relativistic quantum effects. It is named after the physicist Stephen Hawking, who developed a theoretical argument for its existence in 1974. Hawking radiation is a purely kinematic effect that is generic to Lorentzian geometries containing event horizons or local apparent horizons. Hawking radiation reduces the mass and rotational energy of black holes and is therefore also theorized to cause black hole evaporation. Because of this, black holes that do not gain mass through other means are expected to shrink and ultimately vanish. For all except the smallest black holes, this would happen extremely slowly. The radiation temperature is inversely proportional to the black hole's mass, so micro black holes are predicted to be stronger emitters of radiation than larger black holes and should dissipate faster.

Overview

Black holes are astrophysica ...
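The inverse relation between temperature and mass, and the very slow evaporation of large black holes, can be made concrete with the standard idealized formulas for a non-rotating, uncharged (Schwarzschild) black hole: T = ħc³/(8πGMk_B) and a lifetime of about 5120πG²M³/(ħc⁴). The lifetime formula is an assumption of this sketch that counts photon emission only; the function names are hypothetical.

```python
import math

HBAR = 1.054571817e-34   # reduced Planck constant, J*s
C = 299_792_458.0        # speed of light, m/s
G = 6.67430e-11          # gravitational constant, m^3 kg^-1 s^-2
K_B = 1.380649e-23       # Boltzmann constant, J/K
M_SUN = 1.98847e30       # solar mass, kg (approximate)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def hawking_temperature(mass_kg):
    """Schwarzschild Hawking temperature in kelvin; scales as 1/M."""
    return HBAR * C**3 / (8 * math.pi * G * mass_kg * K_B)


def evaporation_time_years(mass_kg):
    """Idealized evaporation time (photons only), 5120 pi G^2 M^3 / (hbar c^4)."""
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


if __name__ == "__main__":
    print(f"T(1 solar mass)        = {hawking_temperature(M_SUN):.2e} K")
    print(f"lifetime(1 solar mass) = {evaporation_time_years(M_SUN):.2e} years")
```

For one solar mass this gives a temperature of roughly 6 × 10⁻⁸ K and a lifetime of roughly 2 × 10⁶⁷ years, which is why evaporation is negligible for all but the smallest black holes. Halving the mass doubles the temperature and shortens the lifetime by a factor of eight.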

Seth Lloyd

Seth Lloyd (born August 2, 1960) is a professor of mechanical engineering and physics at the Massachusetts Institute of Technology. His research area is the interplay of information with complex systems, especially quantum systems. He has performed seminal work in the fields of quantum computation, quantum communication and quantum biology, including proposing the first technologically feasible design for a quantum computer, demonstrating the viability of quantum analog computation, proving quantum analogs of Shannon's noisy channel theorem, and designing novel methods for quantum error correction and noise reduction.

Biography

Lloyd graduated from Phillips Academy in 1978 and received a bachelor of arts degree from Harvard College in 1982. He earned a certificate of advanced study in mathematics and a master of philosophy degree from Cambridge University in 1983 and 1984, while on a Marshall Scholarship. Lloyd was awarded a doctorate by Rockefeller ...

Black Hole Information Paradox

The black hole information paradox is a puzzle that appears when the predictions of quantum mechanics and general relativity are combined. The theory of general relativity predicts the existence of black holes, which are regions of spacetime from which nothing, not even light, can escape. In the 1970s, Stephen Hawking applied the rules of quantum mechanics to such systems and found that an isolated black hole would emit a form of radiation called Hawking radiation. Hawking also argued that the detailed form of the radiation would be independent of the initial state of the black hole and would depend only on its mass, electric charge and angular momentum. The information paradox appears when one considers a black hole that forms through a physical process and then evaporates entirely through Hawking radiation. Hawking's calculation suggests that the final state of radiation would retain information only about the total mass, electric charge and angular m ...

Nanotechnology

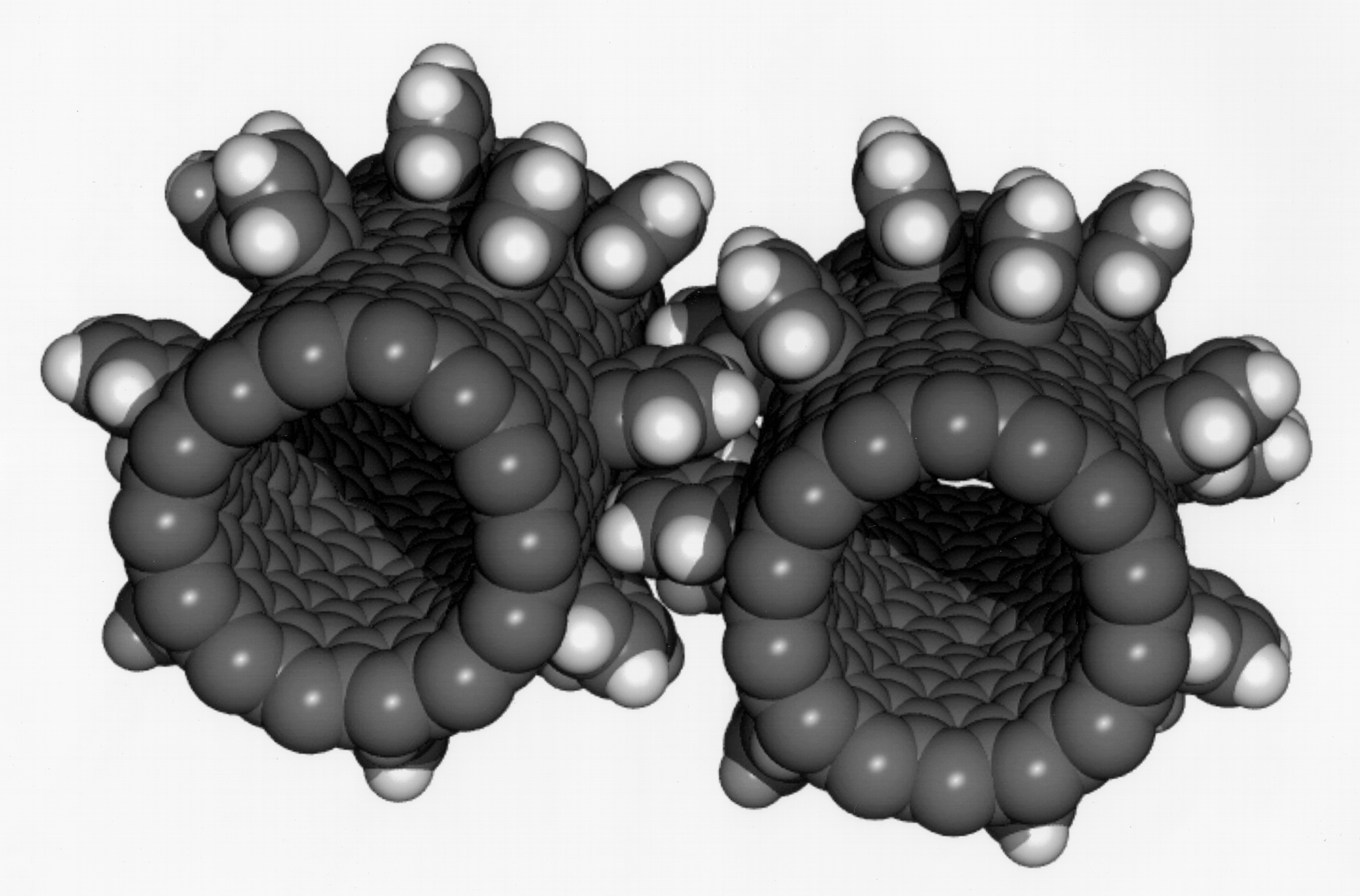

Nanotechnology, also shortened to nanotech, is the use of matter on an atomic, molecular, and supramolecular scale for industrial purposes. The earliest, widespread description of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabrication of macroscale products, also now referred to as molecular nanotechnology. A more generalized description of nanotechnology was subsequently established by the National Nanotechnology Initiative, which defined nanotechnology as the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). This definition reflects the fact that quantum mechanical effects are important at this quantum-realm scale, and so the definition shifted from a particular technological goal to a research category inclusive of all types of research and technologies that deal with the special properties of matter which occur below the given size threshold. It is therefore common to ...
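The National Nanotechnology Initiative definition above is a simple size test: an object qualifies if at least one of its dimensions lies between 1 and 100 nm. A minimal sketch, assuming inclusive bounds (the boundary handling is an assumption) and using hypothetical example objects:

```python
def meets_nni_definition(dimensions_nm, lower_nm=1.0, upper_nm=100.0):
    """Return True if at least one dimension (in nm) lies within
    [lower_nm, upper_nm]; inclusive bounds are an assumption."""
    return any(lower_nm <= d <= upper_nm for d in dimensions_nm)


# Hypothetical objects, dimensions in nanometres.
thin_film = (50.0, 1e7, 1e7)             # 50 nm thick film on a 1 cm square
micro_bead = (1000.0, 1000.0, 1000.0)    # 1 micrometre bead

if __name__ == "__main__":
    print("thin film: ", meets_nni_definition(thin_film))    # True
    print("micro bead:", meets_nni_definition(micro_bead))   # False
```

Only one dimension needs to fall in range, so a film that is centimetres wide still qualifies because of its nanometre-scale thickness.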

Femtotechnology

Femtotechnology is a hypothetical term used in reference to structuring matter on the scale of a femtometer, which is 10⁻¹⁵ m. This is a smaller scale than those of nanotechnology and picotechnology, which refer to 10⁻⁹ m and 10⁻¹² m respectively.

Theory

Work in the femtometer range involves manipulation of excited energy states within atomic nuclei, specifically nuclear isomers, to produce metastable (or otherwise stabilized) states with unusual properties. In the extreme case, excited states of the individual nucleons that make up the atomic nucleus (protons and neutrons) are considered, ostensibly to tailor the behavioral properties of these particles. The most advanced form of molecular nanotechnology is often imagined to involve self-replicating molecular machines, and there have been some speculations suggesting something similar might be possible with analogues of molecules composed of nucleons rather than atoms. For example, the astrophysici ...

Computronium

Computronium is a material hypothesized by Norman Margolus and Tommaso Toffoli of MIT in 1991 to be used as "programmable matter", a substrate for computer modeling of virtually any real object. It also refers to an arrangement of matter that is the best possible form of computing device for that amount of matter. In this context, the term can refer both to a theoretically perfect arrangement of hypothetical materials that would have been developed using nanotechnology at the molecular, atomic, or subatomic level (in which case this interpretation of computronium may amount to unobtainium), and to the best possible achievable form using currently available and used computational materials. According to the Barrow scale (a modified variant of the Kardashev scale, created by British physicist John D. Barrow and intended to categorize the development stage of extraterrestrial civilizations), it would be conceivable that advanced civilizations do not claim more and more space and reso ...

Neutron Star

A neutron star is the collapsed core of a massive supergiant star, which had a total mass of between 10 and 25 solar masses, possibly more if the star was especially metal-rich. Except for black holes and some hypothetical objects (e.g. white holes, quark stars, and strange stars), neutron stars are the smallest and densest currently known class of stellar objects. Neutron stars have a radius on the order of and a mass of about 1.4 solar masses. They result from the supernova explosion of a massive star, combined with gravitational collapse, that compresses the core past white dwarf star density to that of atomic nuclei. Once formed, they no longer actively generate heat, and cool over time; however, they may still evolve further through collision or accretion. Most of the basic models for these objects imply that neutron stars are composed almost entirely of neutrons (subatomic particles with no net electrical charge and with slightly larger mass than protons); the electro ...

Nucleon

In physics and chemistry, a nucleon is either a proton or a neutron, considered in its role as a component of an atomic nucleus. The number of nucleons in a nucleus defines the atom's mass number (nucleon number). Until the 1960s, nucleons were thought to be elementary particles, not made up of smaller parts. Now they are known to be composite particles, made of three quarks bound together by the strong interaction. The interaction between two or more nucleons is called internucleon interaction or nuclear force, which is also ultimately caused by the strong interaction. (Before the discovery of quarks, the term "strong interaction" referred to just internucleon interactions.) Nucleons sit at the boundary where particle physics and nuclear physics overlap. Particle physics, particularly quantum chromodynamics, provides the fundamental equations that describe the properties of quarks and of the strong interaction. These equations describe quantitatively how quarks can bind toget ...
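Two of the statements above lend themselves to a small worked example: the mass number is simply the count of nucleons, and each nucleon's charge follows from its three quarks. The sketch assumes the standard valence-quark content (proton = uud, neutron = udd) and quark charges (+2/3 for up, −1/3 for down), which the paragraph does not spell out; the function names are hypothetical. Exact fractions avoid floating-point rounding.

```python
from fractions import Fraction

# Electric charges of the up and down quarks, in units of the elementary charge.
QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}

# Valence-quark content of the two nucleons.
NUCLEONS = {"proton": "uud", "neutron": "udd"}


def charge(quarks):
    """Total electric charge of a bound group of quarks."""
    return sum(QUARK_CHARGE[q] for q in quarks)


def mass_number(protons, neutrons):
    """Mass (nucleon) number A = Z + N."""
    return protons + neutrons


if __name__ == "__main__":
    for name, quarks in NUCLEONS.items():
        print(f"{name}: {quarks} -> charge {charge(quarks)}")
    print("carbon-12 mass number:", mass_number(6, 6))  # 12
```

The proton's charge comes out as +1 and the neutron's as 0, matching the neutron's description elsewhere on this page as having no net electrical charge.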

Quantum Well

A quantum well is a potential well with only discrete energy values. The classic model used to demonstrate a quantum well confines particles, which were initially free to move in three dimensions, to two dimensions by forcing them to occupy a planar region. The effects of quantum confinement take place when the quantum well thickness becomes comparable to the de Broglie wavelength of the carriers (generally electrons and holes), leading to energy levels called "energy subbands"; that is, the carriers can only have discrete energy values. A wide variety of electronic quantum well devices have been developed based on the theory of quantum well systems. These devices have found applications in, for example, lasers, photodetectors, modulators, and switches. Compared with conventional devices, quantum well devices can be faster and more energy-efficient, which makes them important to the technology and telecommunications industries. These quantum well devices a ...
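The discrete levels described above can be estimated with the simplest textbook model, a particle in an infinitely deep one-dimensional well, where E_n = n²π²ħ²/(2m*L²) for well width L and carrier effective mass m*. Real wells have finite barriers, so this sketch (with the hypothetical helper `infinite_well_level_ev`) only gives an upper-bound-style estimate. The 10 nm width and 0.067 mₑ effective mass (an approximate value often quoted for electrons in GaAs) are illustrative assumptions.

```python
import math

HBAR = 1.054571817e-34       # reduced Planck constant, J*s
M_E = 9.1093837015e-31       # electron rest mass, kg
EV = 1.602176634e-19         # joules per electronvolt


def infinite_well_level_ev(n, width_m, effective_mass_ratio):
    """Energy (eV) of level n = 1, 2, ... in an infinitely deep 1D well:
    E_n = n^2 pi^2 hbar^2 / (2 m* L^2)."""
    if n < 1:
        raise ValueError("quantum number n must be >= 1")
    m_eff = effective_mass_ratio * M_E
    energy_j = (n * math.pi * HBAR) ** 2 / (2 * m_eff * width_m ** 2)
    return energy_j / EV


if __name__ == "__main__":
    # Illustrative: 10 nm well, effective mass 0.067 m_e (approximate GaAs value).
    for n in (1, 2, 3):
        print(n, f"{infinite_well_level_ev(n, 10e-9, 0.067) * 1000:.1f} meV")
```

The levels grow as n², and widening the well lowers them as 1/L², which is why confinement effects become significant only once the thickness approaches the carriers' de Broglie wavelength.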