Filter Bank

In signal processing, a filter bank (or filterbank) is an array of bandpass filters that separates the input signal into multiple components, each one carrying a single frequency sub-band of the original signal. One application of a filter bank is a graphic equalizer, which can attenuate the components differently and recombine them into a modified version of the original signal. The decomposition performed by the filter bank is called analysis (meaning analysis of the signal in terms of its components in each sub-band); the output of analysis is referred to as a subband signal, with as many subbands as there are filters in the filter bank. The reconstruction process is called synthesis, meaning reconstitution of a complete signal from the outputs of the filtering process. In digital signal processing, the term filter bank is also commonly applied to a bank of receivers. The difference is that receivers also down-convert the subbands to a low center frequency th ...
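The analysis and synthesis steps described above can be sketched with the simplest two-channel filter bank, built on the Haar filter pair. This is a minimal illustration under our own assumptions:

- it uses two bands instead of many;
- it uses Haar filters rather than the sharper bandpass filters a real equalizer would use;
- the function names `haar_analysis` and `haar_synthesis` are hypothetical.

Each subband is kept at half the input rate. This particular pair reconstructs the input exactly when the subbands are left unmodified.

```python
import math

# Haar filter pair: an assumption chosen for brevity, not the only possible bank.
SCALE = 1 / math.sqrt(2)


def haar_analysis(x):
    """Split an even-length signal into low-band and high-band subband signals,
    each at half the input sample rate (the analysis step)."""
    if len(x) % 2:
        raise ValueError("input length must be even")
    pairs = [(x[i], x[i + 1]) for i in range(0, len(x), 2)]
    low = [SCALE * (a + b) for a, b in pairs]   # neighbour sums: low-pass, keep every 2nd
    high = [SCALE * (a - b) for a, b in pairs]  # neighbour differences: high-pass, keep every 2nd
    return low, high


def haar_synthesis(low, high):
    """Recombine the two subband signals into a full-rate signal (the synthesis step)."""
    y = []
    for a, d in zip(low, high):
        y.append(SCALE * (a + d))
        y.append(SCALE * (a - d))
    return y


# Equalizer-style use: attenuate the high band completely, then recombine.
low, high = haar_analysis([1.0, 2.0, 3.0, 4.0])
muted = haar_synthesis(low, [0.0 * h for h in high])
```

Scaling a subband before synthesis is the equalizer idea in miniature. Zeroing the high band leaves `muted` approximately equal to [1.5, 1.5, 3.5, 3.5], the pairwise averages of the input.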

Undersampling

In signal processing, undersampling or bandpass sampling is a technique where one samples a bandpass-filtered signal at a sample rate below its Nyquist rate (twice the upper cutoff frequency) but is still able to reconstruct the signal. When one undersamples a bandpass signal, the samples are indistinguishable from the samples of a low-frequency alias of the high-frequency signal. Such sampling is also known as harmonic sampling, IF sampling, and direct IF-to-digital conversion.

The Fourier transforms of real-valued functions are symmetrical around the 0 Hz axis. After sampling, only a periodic summation of the Fourier transform (called the discrete-time Fourier transform) is still available. The individual frequency-shifted copies of the original transform are called aliases. The frequency offset between adjacent aliases is the sampling rate, denoted by fs. When the aliases are mutually exclusive (spectrally), the original transform ...
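Whether a given rate keeps the aliases mutually exclusive can be checked with the standard bandpass-sampling condition. For a real signal occupying the band [f_L, f_H], valid rates satisfy 2 f_H / n ≤ fs ≤ 2 f_L / (n − 1) for some integer n with 1 ≤ n ≤ ⌊f_H / (f_H − f_L)⌋.

This condition is general background rather than something stated in the excerpt above. The function name `valid_bandpass_rates` and the 20 to 25 example band are illustrative assumptions. The sketch also assumes ideal band-limiting with no guard bands.

```python
import math


def valid_bandpass_rates(f_low, f_high):
    """Return (min_fs, max_fs) ranges in which a real signal confined to
    [f_low, f_high] can be sampled without its aliases overlapping.

    Uses 2*f_high/n <= fs <= 2*f_low/(n - 1) for integer
    1 <= n <= floor(f_high / (f_high - f_low)); n = 1 is ordinary
    Nyquist-rate sampling, with no upper limit.
    """
    if not 0 <= f_low < f_high:
        raise ValueError("need 0 <= f_low < f_high")
    bandwidth = f_high - f_low
    ranges = []
    for n in range(1, math.floor(f_high / bandwidth) + 1):
        lowest = 2 * f_high / n
        highest = math.inf if n == 1 else 2 * f_low / (n - 1)
        if lowest <= highest:  # skip an interval emptied by rounding at the edge case
            ranges.append((lowest, highest))
    return ranges


# A band from 20 to 25 (any unit, e.g. MHz), bandwidth 5:
for lo, hi in valid_bandpass_rates(20.0, 25.0):
    print(f"{lo:.3f} .. {hi:.3f}")
```

For this band the lowest range collapses to the single point 10, which is twice the bandwidth. In practice one would pick a rate inside a wider range to leave margin for imperfect filters.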

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing signals, such as sound, images, and scientific measurements. Signal processing techniques are used to optimize transmissions, digital storage efficiency, and subjective video quality, to correct distorted signals, and to detect or pinpoint components of interest in a measured signal.

According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital re ...

Nyquist Rate

In signal processing, the Nyquist rate, named after Harry Nyquist, is a value (in units of samples per second or hertz, Hz) equal to twice the highest frequency (bandwidth) of a given function or signal. When the function is digitized at a higher sample rate, the resulting discrete-time sequence is said to be free of the distortion known as aliasing. Conversely, for a given sample rate, the corresponding Nyquist frequency in Hz is one-half the sample rate. Note that the Nyquist rate is a property of a continuous-time signal, whereas the Nyquist frequency is a property of a discrete-time system.

The term Nyquist rate is also used in a different context, with units of symbols per second, which is actually the field in which Harry Nyquist was working. In that context it is an upper bound for the symbol rate across a bandwidth-limited baseband channel, such as a telegraph line, or a passband channel, such as a limited radio frequency band or a frequency division m ...
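The arithmetic behind the two terms fits in a few lines. So does the folding that produces an alias when a tone lies above the Nyquist frequency. This is a sketch that assumes real sinusoidal inputs; the function names and example frequencies are illustrative, not from the source.

```python
def nyquist_rate(highest_freq_hz):
    """Twice the highest frequency; sampling above this rate avoids aliasing."""
    return 2.0 * highest_freq_hz


def nyquist_frequency(sample_rate_hz):
    """Half the sample rate: the highest frequency the sampled system represents unambiguously."""
    return sample_rate_hz / 2.0


def apparent_frequency(f_hz, sample_rate_hz):
    """Frequency in [0, fs/2] at which a real sinusoid at f_hz appears after
    sampling: its distance to the nearest multiple of the sample rate."""
    return abs(f_hz - sample_rate_hz * round(f_hz / sample_rate_hz))


print(nyquist_rate(20_000))                # 40000.0
print(nyquist_frequency(44_100))           # 22050.0
print(apparent_frequency(30_000, 44_100))  # 14100
```

The last call shows aliasing. A 30,000 Hz tone is above the 22,050 Hz Nyquist frequency of a 44,100 samples/second system, so its samples match those of a 14,100 Hz tone.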

Downsampling

In digital signal processing, downsampling, compression, and decimation are terms associated with the process of resampling in a multi-rate digital signal processing system. Both downsampling and decimation can be synonymous with compression, or they can describe an entire process of bandwidth reduction (filtering) and sample-rate reduction. When the process is performed on a sequence of samples of a signal or a continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a lower rate (or density, as in the case of a photograph).

Decimation is a term that historically means the "removal of every tenth one". But in signal processing, decimation by a factor of 10 actually means keeping only every tenth sample. This factor multiplies the sampling interval or, equivalently, divides the sampling rate. For example, if compact disc audio at 44,100 samples/second is decimated by a factor of ...
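Decimation can be read as two stages: bandwidth reduction, then sample-rate reduction. The sketch below follows that reading. The moving-average filter is an assumption made for brevity; a practical decimator would use a designed low-pass filter with its cutoff near the new Nyquist frequency, fs/(2M) for a factor M. The function name `decimate` is hypothetical here and is not meant to mirror any particular library's API.

```python
def decimate(x, factor):
    """Reduce the sample rate of x by an integer factor.

    Step 1: low-pass filter with a moving average over `factor` samples
    (a crude filter chosen for brevity; it only partly suppresses aliasing).
    Step 2: keep every `factor`-th filtered sample.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    filtered = [
        sum(x[max(0, n - factor + 1): n + 1]) / factor  # causal average, zeros before x[0]
        for n in range(len(x))
    ]
    # Start at index factor - 1 so every kept sample averages a full window.
    return filtered[factor - 1::factor]


print(decimate([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], 2))  # [1.5, 3.5, 5.5]
```

Skipping the filter and returning `x[factor - 1::factor]` would be plain downsampling in the narrow sense. That version is cheaper, but any content above the new Nyquist frequency then aliases.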

Multidimensional Filter Design

In signal processing, multidimensional signal processing covers all signal processing done using multidimensional signals and systems. While multidimensional signal processing is a subset of signal processing, it is unique in that it deals specifically with data that can only be adequately described using more than one dimension. In multidimensional (m-D) digital signal processing, useful data is sampled in more than one dimension. Examples of this are image processing and multi-sensor radar detection. Both of these examples use multiple sensors to sample signals and form images based on the manipulation of these multiple signals. Processing in m-D requires more complex algorithms than the 1-D case to handle calculations such as the fast Fourier transform, due to the additional degrees of freedom (D. Dudgeon and R. Mersereau, Multidimensional Digital Signal Processing, Prentice-Hall, 1st ed., 1983, p. 2). In some cases, m-D signals and systems can be simplified into single ...
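One concrete reason m-D transforms cost more than 1-D ones is that a 2-D discrete Fourier transform applies a 1-D transform along every row and then along every column. The sketch below shows this row-column (separable) computation for a small image. It uses a direct O(N²) 1-D DFT for clarity; real implementations would use an FFT for each pass. The function names are illustrative.

```python
import cmath


def dft(seq):
    """Direct O(N^2) one-dimensional discrete Fourier transform."""
    n = len(seq)
    return [
        sum(seq[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def dft2(image):
    """2-D DFT of a list of equal-length rows, computed separably:
    a 1-D DFT along every row, then along every column."""
    rows = [dft(row) for row in image]
    cols = [dft(list(col)) for col in zip(*rows)]
    # cols[v][u] holds X[u, v]; transpose back so result[u][v] == X[u, v].
    return [list(r) for r in zip(*cols)]


spectrum = dft2([[1, 2], [3, 4]])
# spectrum is approximately [[10, -2], [-4, 0]] (as complex numbers)
```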

Screenshot (80)

A screenshot (also known as a screen capture or screen grab) is a digital image that shows the contents of a computer display. A screenshot is created by the operating system or software running on the device powering the display. Additionally, screenshots can be captured by an external camera, using photography to capture the contents of the screen.

The first screenshots were created with the first interactive computers around 1960. Through the 1980s, computer operating systems did not universally have built-in functionality for capturing screenshots. Sometimes text-only screens could be dumped to a text file, but the result would only capture the content of the screen, not its appearance, and graphics screens could not be preserved this way. Some systems had a BSAVE command that could be used to capture the area of memory where screen data was stored, but this required access to a BASIC prompt. Systems with composite video output could be connec ...

Lowpass Filter

A low-pass filter is a filter that passes signals with a frequency lower than a selected cutoff frequency and attenuates signals with frequencies higher than the cutoff frequency. The exact frequency response of the filter depends on the filter design. The filter is sometimes called a high-cut filter, or a treble-cut filter in audio applications. A low-pass filter is the complement of a high-pass filter.

In optics, high-pass and low-pass may have different meanings, depending on whether they refer to the frequency or the wavelength of light, since these variables are inversely related. High-pass frequency filters act as low-pass wavelength filters, and vice versa. For this reason, it is good practice to refer to wavelength filters as short-pass and long-pass to avoid confusion; these correspond to high-pass and low-pass frequency filters, respectively. Low-pass filters exist in many different forms, including electronic circuits such as a hiss filter used in audio, anti-aliasing f ...
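A common discrete-time low-pass filter is the first-order recursive filter obtained by discretizing an RC circuit:

y[n] = y[n−1] + α(x[n] − y[n−1])

The sketch below assumes that design, with α = Δt / (RC + Δt) and RC = 1/(2π f_c). This is one of several possible discretizations, and the resulting cutoff is only approximately f_c. The name `rc_lowpass` is hypothetical.

```python
import math


def rc_lowpass(x, cutoff_hz, sample_rate_hz):
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1]).

    alpha is derived from an RC time constant matching cutoff_hz
    (an assumed discretization; the realized cutoff is approximate).
    """
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    y = []
    prev = 0.0  # filter state starts at rest
    for sample in x:
        prev = prev + alpha * (sample - prev)  # move a fraction alpha toward the input
        y.append(prev)
    return y


# A constant (0 Hz) input passes; a sample-to-sample alternation (fs/2) is strongly attenuated.
steady = rc_lowpass([1.0] * 2000, 100.0, 8000.0)
wiggle = rc_lowpass([(-1.0) ** n for n in range(2000)], 100.0, 8000.0)
```

Because the filter is first order, attenuation above the cutoff is gentle, about 6 dB per octave. Sharper responses need higher-order designs.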

Upsampling

In digital signal processing, upsampling, expansion, and interpolation are terms associated with the process of resampling in a multi-rate digital signal processing system. Upsampling can be synonymous with expansion, or it can describe an entire process of expansion and filtering (interpolation). When upsampling is performed on a sequence of samples of a signal or other continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a higher rate (or density, as in the case of a photograph). For example, if compact disc audio at 44,100 samples/second is upsampled by a factor of 5/4, the resulting sample rate is 55,125 samples/second.

Rate increase by an integer factor L can be explained as a two-step process, with an equivalent implementation that is more efficient:

1. Expansion: Create a sequence x_L comprising the original samples x, separated by L − 1 zeros. ...
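The two-step view (expansion, then filtering) can be sketched with a triangular interpolation kernel. Filtering the zero-stuffed sequence with this kernel produces straight-line interpolation between the original samples. The triangular kernel is an assumption chosen because its effect is easy to check by hand; better-quality interpolators use longer low-pass filters. This is the direct form, not the more efficient equivalent implementation mentioned above, and the function names are hypothetical.

```python
def expand(x, factor):
    """Expansion: follow each original sample with factor - 1 zeros."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    out = []
    for sample in x:
        out.append(sample)
        out.extend([0.0] * (factor - 1))
    return out


def interpolate_linear(x, factor):
    """Upsample by an integer factor: expand, then filter with a triangular kernel."""
    stuffed = expand(x, factor)
    # Triangular kernel h[k] = 1 - |k| / factor for |k| < factor (2 * factor - 1 taps).
    kernel = [1 - abs(k) / factor for k in range(-(factor - 1), factor)]
    half = factor - 1
    y = []
    for n in range(len(stuffed)):
        acc = 0.0
        for j, h in enumerate(kernel):
            idx = n + half - j  # centered (non-causal) convolution
            if 0 <= idx < len(stuffed):
                acc += h * stuffed[idx]
        y.append(acc)
    return y


print(interpolate_linear([0.0, 1.0, 2.0], 2))  # [0.0, 0.5, 1.0, 1.5, 2.0, 1.0]
```

The last output value is an edge effect, because the signal is treated as zero past its end. A rational factor such as 5/4 is commonly realized by upsampling by 5 and then keeping every fourth sample, with the filtering designed to serve both steps.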

Bilinear Time–frequency Distribution

Bilinear time–frequency distributions, or quadratic time–frequency distributions, arise in a sub-field of signal analysis and signal processing called time–frequency signal processing, and in the statistical analysis of time series data. Such methods are used where the frequency composition of a signal may be changing over time. This sub-field used to be called time–frequency signal analysis, and is now more often called time–frequency signal processing due to the progress in applying these methods to a wide range of signal-processing problems.

Methods for analysing time series, in both signal analysis and time series analysis, have been developed as essentially separate methodologies applicable to, and based in, either the time or the frequency domain. A mixed approach is required in time–frequency analysis techniques, which are especially effective in analyzing non-stationary signals, whose frequency distribution and ...
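The spectrogram, the squared magnitude of a short-time Fourier transform, is one of the simplest quadratic time–frequency distributions. Its value is quadratic in the signal, and it tracks frequency content that changes over time. It is used here only as an illustrative stand-in; the field studies many other members of this class. The Hann window, the frame layout and the function name are assumptions.

```python
import cmath
import math


def spectrogram(x, window_len, hop):
    """Squared-magnitude short-time Fourier transform with a Hann window.

    Returns one row per frame; row[k] is the energy in frequency bin k,
    for k = 0 .. window_len // 2 (bin k is k / window_len cycles/sample).
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / window_len) for n in range(window_len)]
    frames = []
    for start in range(0, len(x) - window_len + 1, hop):
        segment = [x[start + n] * window[n] for n in range(window_len)]
        row = []
        for k in range(window_len // 2 + 1):
            coeff = sum(segment[n] * cmath.exp(-2j * math.pi * k * n / window_len)
                        for n in range(window_len))
            row.append(abs(coeff) ** 2)  # squaring makes the result quadratic in x
        frames.append(row)
    return frames


# A signal whose frequency jumps halfway through: bin 4, then bin 12 (window of 32).
x = ([math.cos(2 * math.pi * 4 * n / 32) for n in range(64)]
     + [math.cos(2 * math.pi * 12 * n / 32) for n in range(64)])
S = spectrogram(x, 32, 32)
```

Each row of `S` peaks at the bin active during that frame. This is the time-varying frequency composition that a single Fourier transform of the whole signal would blur together.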

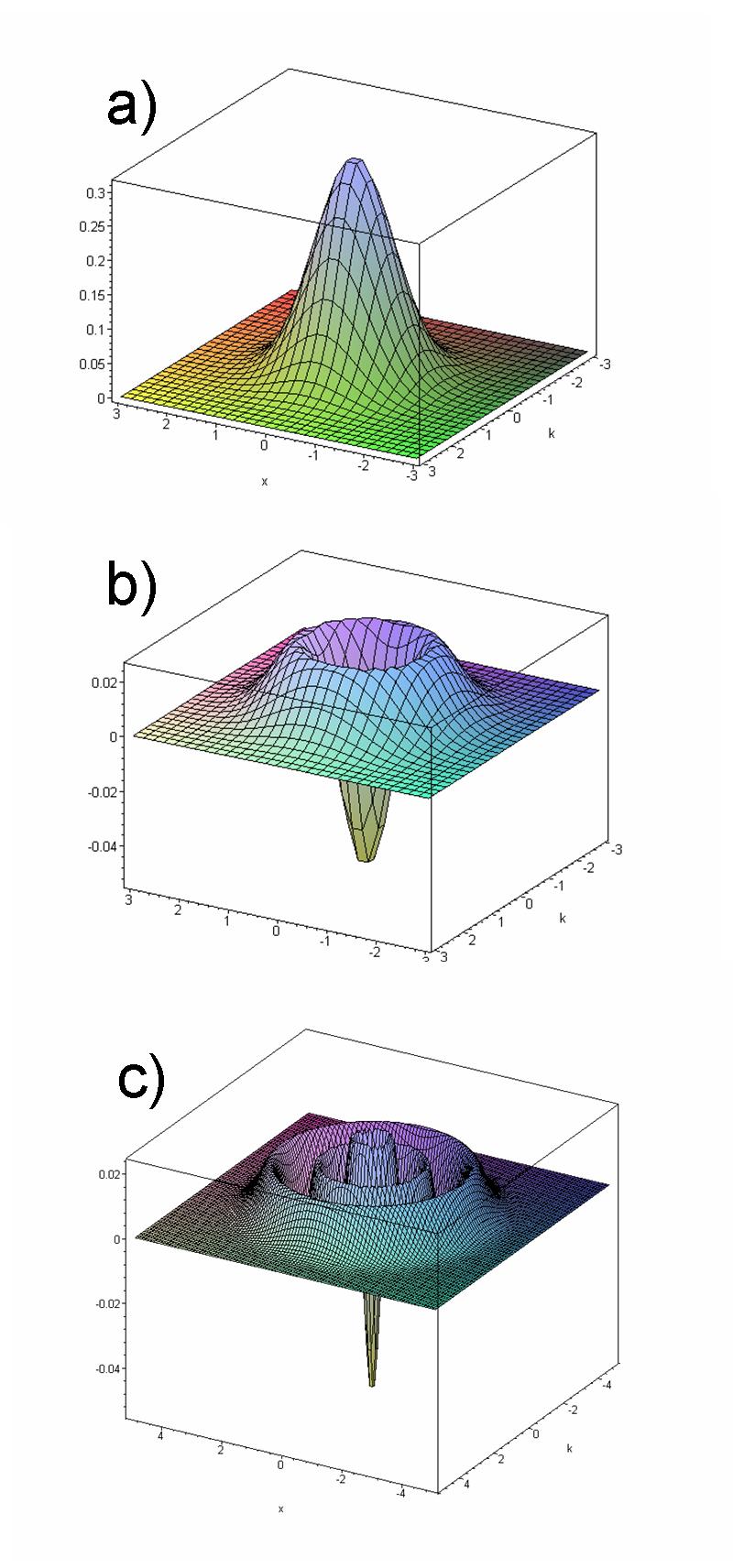

Wigner–Ville Distribution

The Wigner quasiprobability distribution (also called the Wigner function or the Wigner–Ville distribution, after Eugene Wigner and Jean-André Ville) is a quasiprobability distribution. It was introduced by Eugene Wigner in 1932 to study quantum corrections to classical statistical mechanics. The goal was to link the wavefunction that appears in Schrödinger's equation to a probability distribution in phase space. It is a generating function for all spatial autocorrelation functions of a given quantum-mechanical wavefunction. Thus, it maps onto the quantum density matrix under the map between real phase-space functions and Hermitian operators introduced by Hermann Weyl in 1927, in a context related to representation theory in mathematics (see Weyl quantization). In effect, it is the Wigner–Weyl transform of the density matrix, that is, the realization of that operator in phase space. It was later rederived by Jean Ville in 1948 as a quadratic (in signal) representation of the loc ...
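Ville's signal-processing form of the distribution correlates a signal with itself at symmetric lags around each instant:

W[n, k] = Σ_m x[n+m] x*[n−m] e^{−j2πkm/N}

This is why it is quadratic in the signal. The sketch below is a minimal discrete version under stated assumptions:

- the input is complex (analytic);
- a rectangular lag window makes it a pseudo-WVD;
- the function name is hypothetical.

Discrete-WVD conventions differ in how they scale frequency; here bin k maps to k/(2N) cycles per sample.

```python
import cmath


def pseudo_wvd(x, n_bins):
    """Discrete pseudo Wigner-Ville distribution of complex (analytic) samples x.

    Row n is time sample n; column k corresponds to frequency
    k / (2 * n_bins) cycles/sample, because x[n+m] * conj(x[n-m])
    oscillates at twice the signal frequency as the lag m varies.
    """
    half = n_bins // 2
    tfd = []
    for n in range(len(x)):
        # Lags are limited by the signal edges and by a rectangular lag window
        # of 2 * half - 1 taps (the "pseudo" part), so no two lags share a slot.
        max_lag = min(n, len(x) - 1 - n, half - 1)
        kernel = [0j] * n_bins
        for m in range(-max_lag, max_lag + 1):
            kernel[m % n_bins] = x[n + m] * x[n - m].conjugate()  # bilinear in x
        row = []
        for k in range(n_bins):
            value = sum(kernel[i] * cmath.exp(-2j * cmath.pi * k * i / n_bins)
                        for i in range(n_bins))
            row.append(value.real)  # kernel is conjugate-symmetric in m, so value is real
        tfd.append(row)
    return tfd


# A complex tone at 0.125 cycles/sample should peak at bin 2 * 0.125 * 32 = 8.
tone = [cmath.exp(2j * cmath.pi * 0.125 * n) for n in range(64)]
W = pseudo_wvd(tone, 32)
```

For signals with more than one component, the WVD also produces cross-terms between components. This is one motivation for the smoothed distributions in the quadratic class.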
