Factorial Code

Most real-world data sets consist of data vectors whose individual components are not statistically independent. In other words, knowing the value of one element provides information about the values of other elements in the data vector. When this occurs, it can be desirable to create a factorial code of the data, i.e., a new vector-valued representation of each data vector such that it gets uniquely encoded by the resulting code vector (loss-free coding), but the code components are statistically independent. Later supervised learning usually works much better when the raw input data is first translated into such a factorial code. For example, suppose the final goal is to classify images with highly redundant pixels. A naive Bayes classifier will assume the pixels are statistically independent random variables and therefore fail to produce good results. If the data are first encoded in a factoria ...
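The idea of a loss-free code with independent components can be illustrated on a toy case. The sketch below is an assumption-laden example, not a method taken from the text: the data are two-bit vectors whose second bit usually copies the first, the probabilities 1/2 and 1/10 are made up, and `encode`, `decode`, `code_distribution` and `is_factorial` are hypothetical helper names.

```python
from fractions import Fraction
from itertools import product


def joint_of_data():
    """Joint distribution of toy data x = (u, u XOR v).

    u is a fair bit; v is a rare 'flip' bit (assumed P(v=1) = 1/10),
    so the second component of x usually equals the first.
    """
    p_u = {0: Fraction(1, 2), 1: Fraction(1, 2)}
    p_v = {0: Fraction(9, 10), 1: Fraction(1, 10)}
    joint = {}
    for u, v in product((0, 1), repeat=2):
        x = (u, u ^ v)
        joint[x] = joint.get(x, 0) + p_u[u] * p_v[v]
    return joint


def encode(x):
    """Invertible re-coding: keep the first bit, store whether the bits differ."""
    return (x[0], x[0] ^ x[1])


def decode(y):
    """Inverse of encode, so no information is lost."""
    return (y[0], y[0] ^ y[1])


def code_distribution(joint):
    """Distribution of the code vectors induced by the data distribution."""
    out = {}
    for x, p in joint.items():
        y = encode(x)
        out[y] = out.get(y, 0) + p
    return out


def is_factorial(joint):
    """True if the joint distribution equals the product of its marginals."""
    n = len(next(iter(joint)))
    marginals = [{} for _ in range(n)]
    for vec, p in joint.items():
        for i, val in enumerate(vec):
            marginals[i][val] = marginals[i].get(val, 0) + p
    # Check every combination of values, including zero-probability ones.
    for vec in product(*(m.keys() for m in marginals)):
        expected = Fraction(1)
        for i, val in enumerate(vec):
            expected *= marginals[i][val]
        if joint.get(vec, 0) != expected:
            return False
    return True


data = joint_of_data()
code = code_distribution(data)
print("raw data factorial:", is_factorial(data))
print("code factorial:   ", is_factorial(code))
```

In this toy case the raw components are dependent, while the re-coded components are independent and every data vector can still be recovered with `decode`. Real data rarely admit such an exact factorial code.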

Statistically Independent

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. M ...
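The distinction between pairwise and mutual independence can be checked on a small example. The sketch below assumes two fair coin flips and three events `A`, `B` and `C` chosen purely for illustration; the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations, product

# Sample space: two fair coin flips, each of the four outcomes equally likely.
OUTCOMES = list(product((0, 1), repeat=2))


def prob(events):
    """Probability that all events in the given collection occur together."""
    favourable = sum(1 for o in OUTCOMES if all(e(o) for e in events))
    return Fraction(favourable, len(OUTCOMES))


def factorises(events):
    """True if P(intersection) equals the product of the individual probabilities."""
    expected = Fraction(1)
    for e in events:
        expected *= prob([e])
    return prob(events) == expected


def pairwise_independent(events):
    """Every pair of events must factorise."""
    return all(factorises(pair) for pair in combinations(events, 2))


def mutually_independent(events):
    """Every sub-collection of two or more events must factorise."""
    return all(
        factorises(subset)
        for size in range(2, len(events) + 1)
        for subset in combinations(events, size)
    )


A = lambda o: o[0] == 1      # first flip is heads
B = lambda o: o[1] == 1      # second flip is heads
C = lambda o: o[0] != o[1]   # exactly one flip is heads

print("pairwise independent:", pairwise_independent([A, B, C]))
print("mutually independent:", mutually_independent([A, B, C]))
```

Here each pair of events factorises, but all three cannot occur together, so the collection is pairwise independent without being mutually independent.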

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Signal Processing

Signal processing is an electrical engineering subfield that focuses on analyzing, modifying and synthesizing *signals*, such as sound, images, potential fields, seismic signals, altimetry processing, and scientific measurements. Signal processing techniques are used to optimize transmissions and digital storage efficiency, correct distorted signals, improve subjective video quality, and detect or pinpoint components of interest in a measured signal. According to Alan V. Oppenheim and Ronald W. Schafer, the principles of signal processing can be found in the classical numerical analysis techniques of the 17th century. They further state that the digital refinement of these techniques can be found in the digital control systems of the 1940s and 1950s. In 1948, Claude Shannon wrote the influential paper "A Mathematical Theory of Communication" which was publis ...

Image Processing

An image or picture is a visual representation. An image can be two-dimensional, such as a drawing, painting, or photograph, or three-dimensional, such as a carving or sculpture. Images may be displayed through other media, including a projection on a surface, activation of electronic signals, or digital displays; they can also be reproduced through mechanical means, such as photography, printmaking, or photocopying. Images can also be animated through digital or physical processes. In the context of signal processing, an image is a distributed amplitude of color(s). In optics, the term *image* (or *optical image*) refers specifically to the reproduction of an object formed by light waves coming from the object. A *volatile image* exists or is perceived only for a short period. This may be a reflection of an object by a mirror, a projection of a camera obscura, or a scene displayed on a cathode-ray tube. A *fixed image*, also called a hard copy, is one that ...

Unsupervised Learning

Unsupervised learning is a framework in machine learning where, in contrast to supervised learning, algorithms learn patterns exclusively from unlabeled data. Other frameworks in the spectrum of supervisions include weak- or semi-supervision, where a small portion of the data is tagged, and self-supervision. Some researchers consider self-supervised learning a form of unsupervised learning. Conceptually, unsupervised learning divides into the aspects of data, training, algorithm, and downstream applications. Typically, the dataset is harvested cheaply "in the wild", such as a massive text corpus obtained by web crawling, with only minor filtering (such as Common Crawl). This compares favorably to supervised learning, where the dataset (such as the ImageNet1000) is typically constructed manually, which is much more expensive. There were algorithms designed specifically for unsupervised learning, such as clustering algorithms like k-means, dimensionality reduction techniques l ...

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ...

Principal Component Analysis

Principal component analysis (PCA) is a linear dimensionality reduction technique with applications in exploratory data analysis, visualization and data preprocessing. The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified. The principal components of a collection of points in a real coordinate space are a sequence of p unit vectors, where the i-th vector is the direction of a line that best fits the data while being orthogonal to the first i-1 vectors. Here, a best-fitting line is defined as one that minimizes the average squared perpendicular distance from the points to the line. These directions (i.e., principal components) constitute an orthonormal basis in which different individual dimensions of the data are linearly uncorrelated. Many studies use the first two principal components in order to plot the data in two dimensions and to visually identi ...
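For two-dimensional data the principal components can be written in closed form, which makes the claims above easy to check by hand. The following is a minimal sketch, assuming the sample covariance matrix is used and that the first principal axis is found from the rotation angle of a symmetric 2x2 matrix; the function names and the sample points are hypothetical, and practical work would normally rely on a numerical library.

```python
import math


def covariance_2d(points):
    """Return the mean and the sample covariance entries (sxx, sxy, syy)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in points) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def principal_axes_2d(points):
    """Return the mean and two orthonormal principal directions.

    The variance along a direction at angle t is maximised when
    2t = atan2(2*sxy, sxx - syy), which gives the first principal axis.
    """
    mean, (sxx, sxy, syy) = covariance_2d(points)
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    pc1 = (math.cos(theta), math.sin(theta))
    pc2 = (-math.sin(theta), math.cos(theta))  # perpendicular to pc1
    return mean, pc1, pc2


def project(points, mean, axes):
    """Coordinates of the centred points in the basis given by axes."""
    mx, my = mean
    return [
        tuple((x - mx) * ax + (y - my) * ay for ax, ay in axes)
        for x, y in points
    ]


# Made-up points lying roughly along the line y = x.
pts = [(0.0, 0.0), (1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.9)]
mean, pc1, pc2 = principal_axes_2d(pts)
scores = project(pts, mean, (pc1, pc2))
_, (var1, cov12, var2) = covariance_2d(scores)
print("first principal direction:", pc1)
print("variances along the axes:", var1, var2)
print("covariance between the new coordinates:", cov12)
```

The covariance between the projected coordinates should vanish up to rounding error, which is the sense in which the new dimensions are linearly uncorrelated.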

Blind Signal Separation

Source separation, blind signal separation (BSS) or blind source separation, is the separation of a set of source signals from a set of mixed signals, without the aid of information (or with very little information) about the source signals or the mixing process. It is most commonly applied in digital signal processing and involves the analysis of mixtures of signals; the objective is to recover the original component signals from a mixture signal. The classical example of a source separation problem is the cocktail party problem, where a number of people are talking simultaneously in a room (for example, at a cocktail party), and a listener is trying to follow one of the discussions. The human brain can handle this sort of auditory source separation problem, but it is a difficult problem in digital signal processing. This problem is in general highly underdetermined, but useful solutions can be derived under ...

Independent Component Analysis

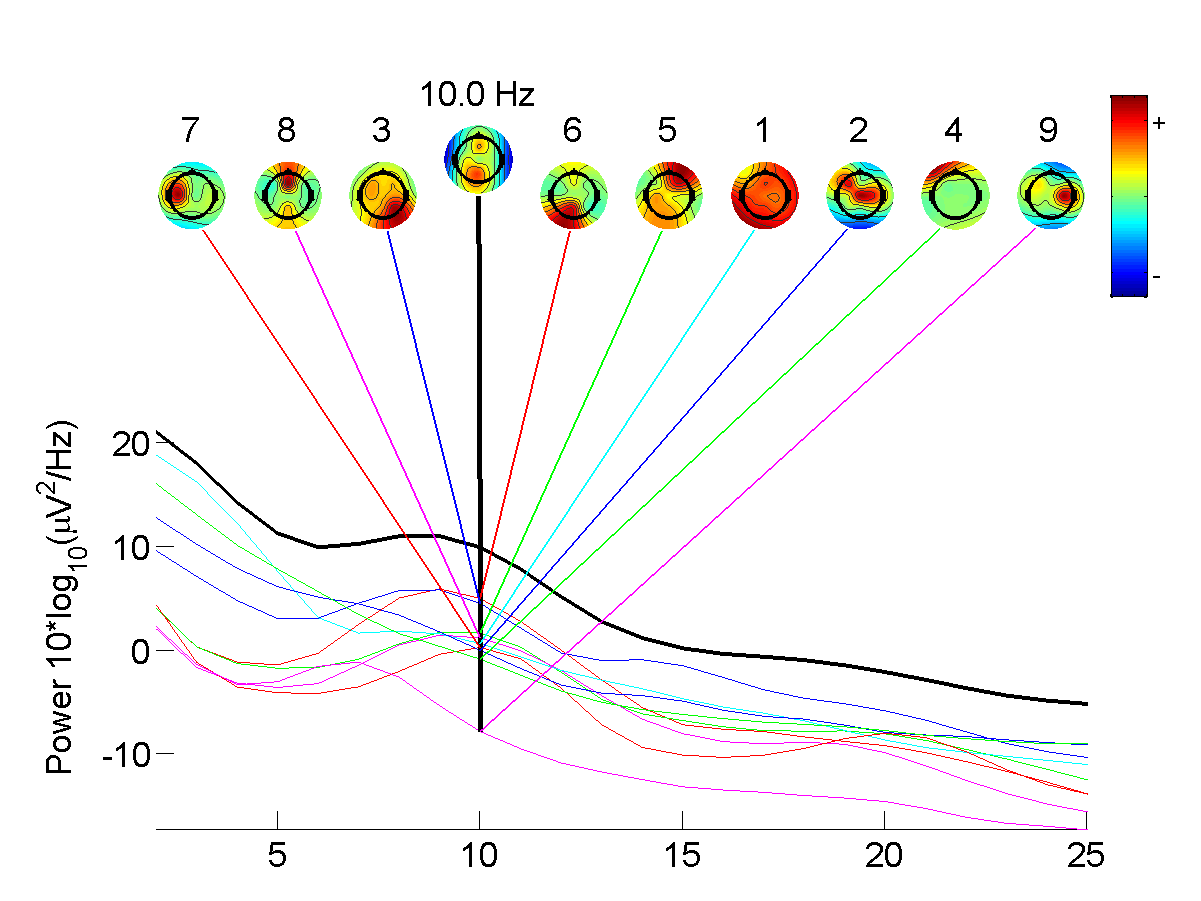

In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room. Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time t, of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence ...

Objective Function

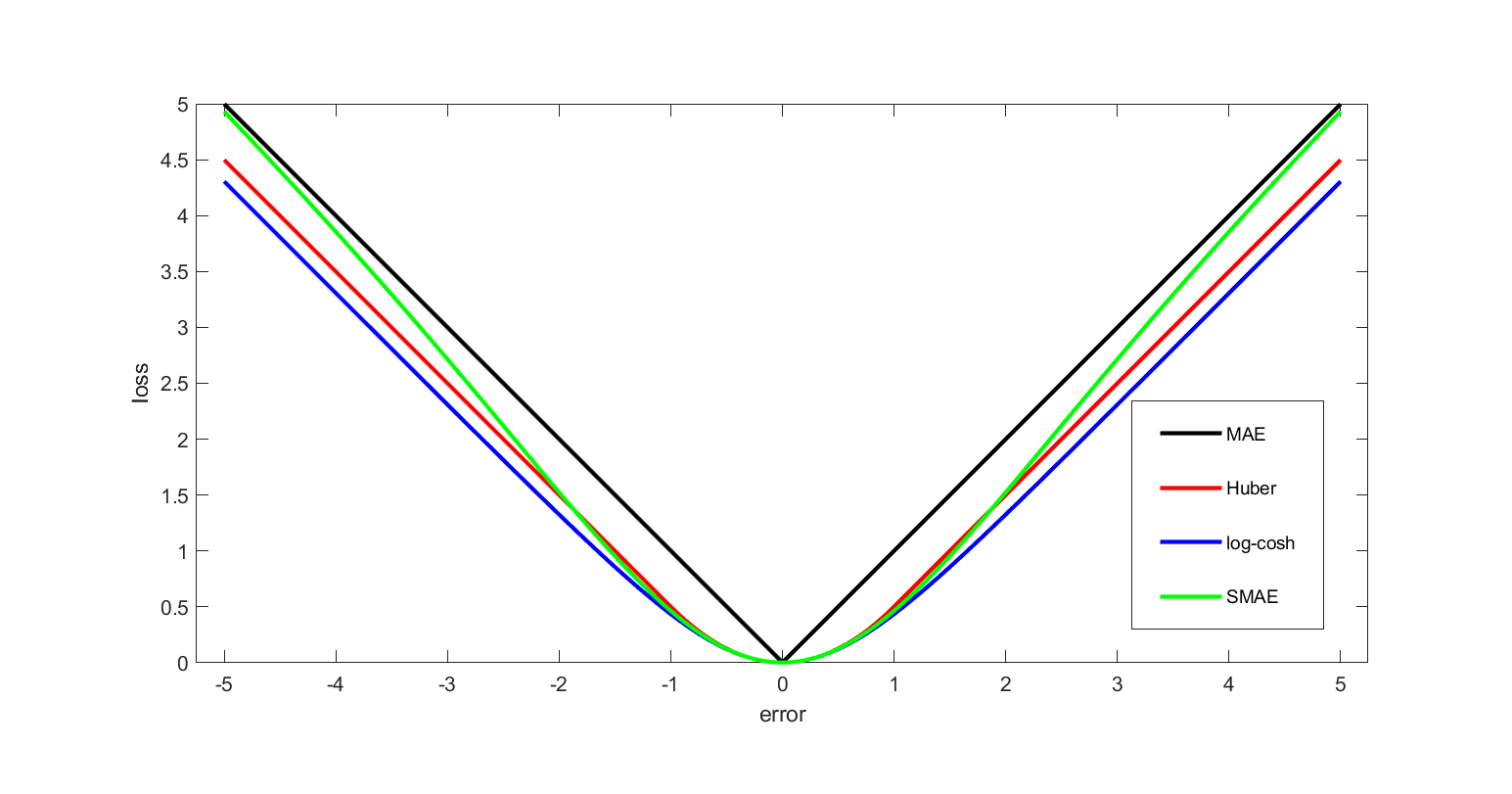

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, ...

Detectors

A sensor is often defined as a device that receives and responds to a signal or stimulus. The stimulus is the quantity, property, or condition that is sensed and converted into an electrical signal. In the broadest definition, a sensor is a device, module, machine, or subsystem that detects events or changes in its environment and sends the information to other electronics, frequently a computer processor. Sensors are used in everyday objects such as touch-sensitive elevator buttons (tactile sensor) and lamps which dim or brighten by touching the base, and in innumerable applications of which most people are never aware. With advances in micromachinery and easy-to-use microcontroller platforms, the uses of sensors have expanded beyond the traditional fields of temperature, pressure and flow measurement, for example into MARG sensors. Analog sensors such as potentiometers and force-sensing resistors are still widely used. Their applications include manufacturing and machinery ...

Representation (mathematics)

In mathematics, a representation is a very general relationship that expresses similarities (or equivalences) between mathematical objects or structures. Roughly speaking, a collection *Y* of mathematical objects may be said to *represent* another collection *X* of objects, provided that the properties and relationships existing among the representing objects *yᵢ* conform, in some consistent way, to those existing among the corresponding represented objects *xᵢ*. More specifically, given a set *Π* of properties and relations, a *Π*-representation of some structure *X* is a structure *Y* that is the image of *X* under a homomorphism that preserves *Π*. The label *representation* is sometimes also applied to the homomorphism itself (such as group homomorphism in group theory). Perhaps the most well-developed example of this general notion is the subfield of abstract algebra called representation theory, which studies the representin ...