Independent component analysis


ICA was popularized by Pierre Comon in his paper of 1994 (Pierre Comon (1994), "Independent component analysis, a new concept?", http://www.ece.ucsb.edu/wcsl/courses/ECE594/594C_F10Madhow/comon94.pdf). In 1995, Tony Bell and Terry Sejnowski introduced a fast and efficient ICA algorithm based on infomax, a principle introduced by Ralph Linsker in 1987. A link exists between maximum-likelihood estimation and infomax approaches (J.-F. Cardoso, "Infomax and Maximum Likelihood for source separation", IEEE Sig. Proc. Letters, 1997, 4(4):112-114). A comprehensive tutorial on the maximum-likelihood approach to ICA was published by J.-F. Cardoso in 1998 (J.-F. Cardoso, "Blind signal separation: statistical principles", Proc. of the IEEE, 1998, 86(10):2009-2025).

Many ICA algorithms are available in the literature. A widely used one, including in industrial applications, is the FastICA algorithm, developed by Hyvärinen and Oja, which uses negentropy as its cost function, a choice already proposed seven years earlier by Pierre Comon in this context. Other examples are more closely related to blind source separation, where a more general approach is used. For example, one can drop the independence assumption and separate mutually correlated, and thus statistically "dependent", signals. Sepp Hochreiter and Jürgen Schmidhuber showed how to obtain non-linear ICA or source separation as a by-product of regularization (1999). Their method does not require a priori knowledge about the number of independent sources.

* image steganography

* optical imaging of neurons

* neuronal spike sorting

* face recognition

* modelling receptive fields of primary visual neurons

* predicting stock market prices

* mobile phone communications

* colour-based detection of the ripeness of tomatoes

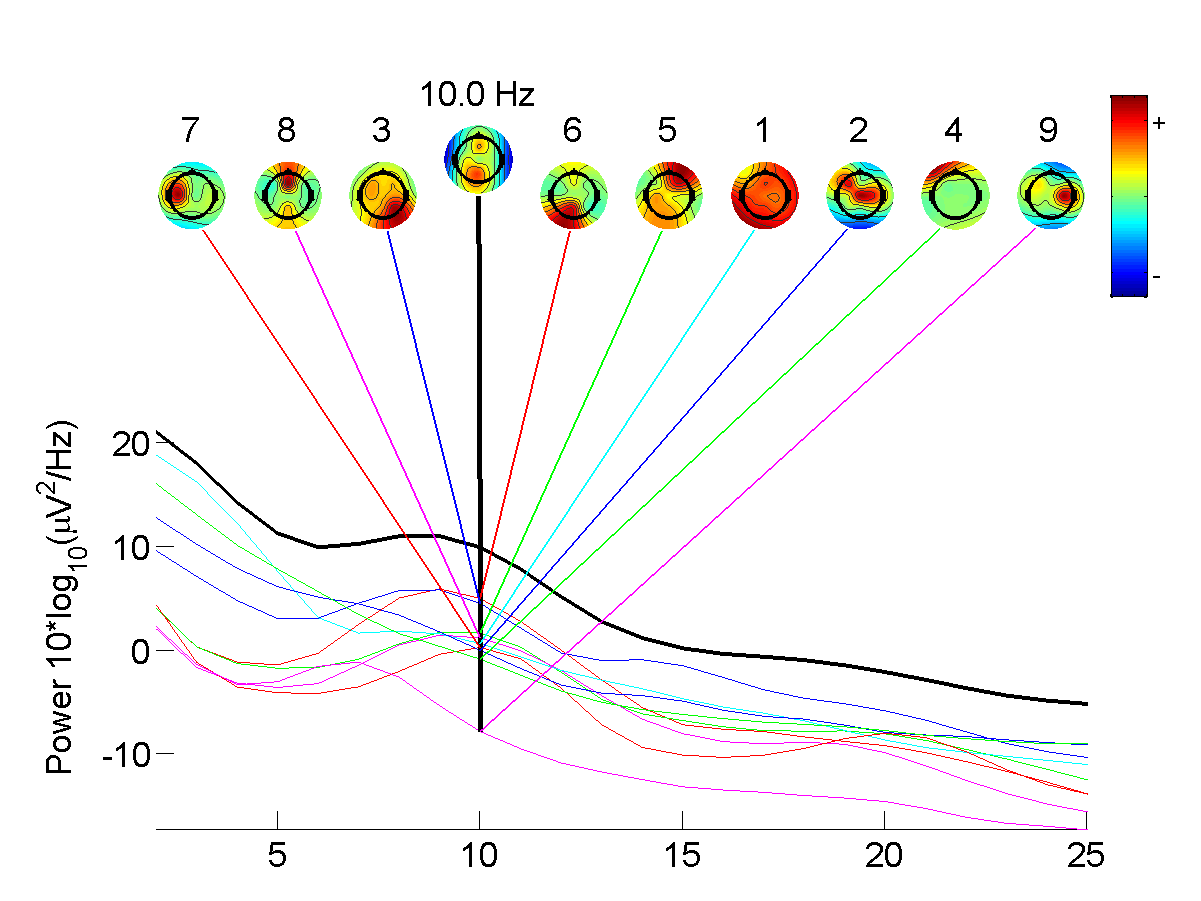

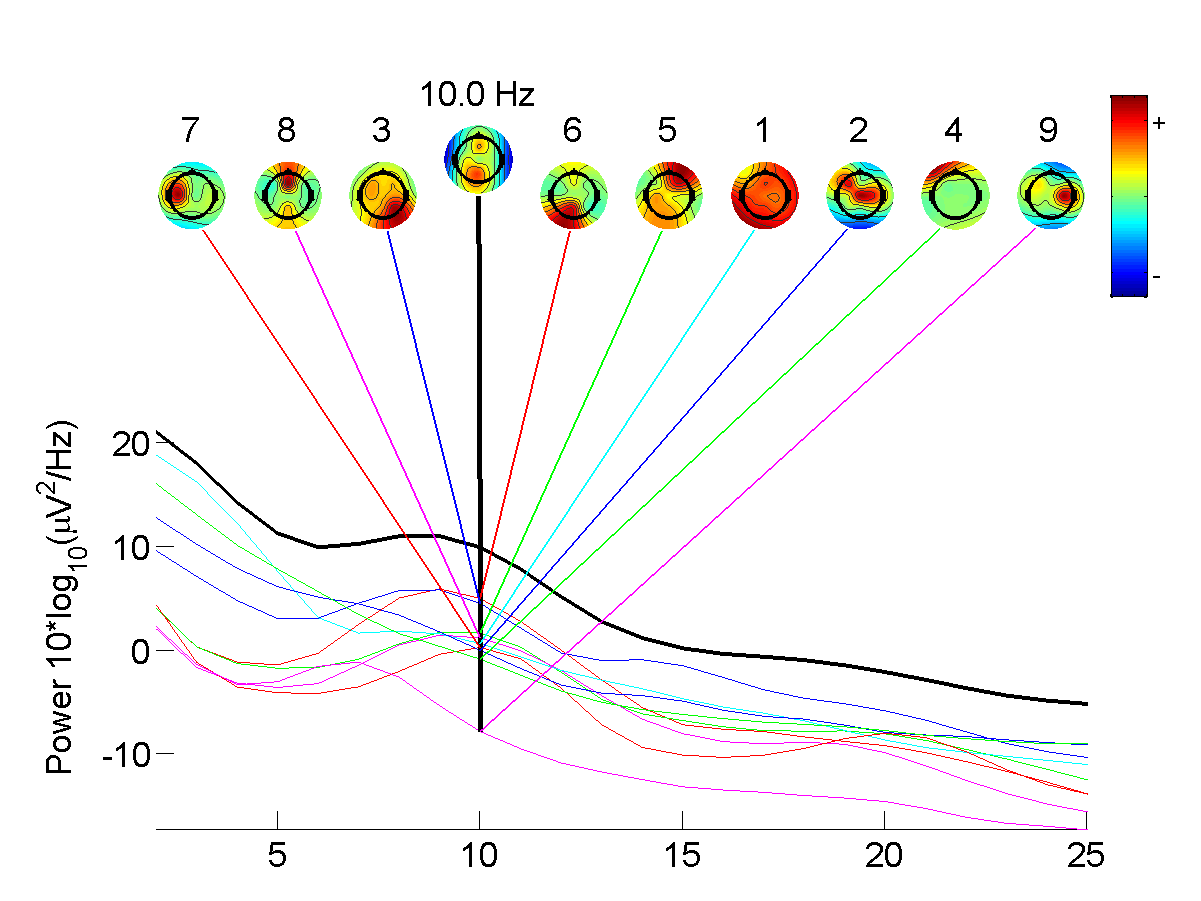

* removing artifacts, such as eye blinks, from EEG data.

* predicting decision-making using EEG

* analysis of changes in gene expression over time in single cell RNA-sequencing experiments.

* studies of the resting state network of the brain.

* astronomy and cosmology

* finance

sklearn.decomposition.FastICA

* Comon, Pierre (1994): "Independent Component Analysis: a new concept?", ''Signal Processing'', 36(3):287–314. (The original paper describing the concept of ICA)
* Hyvärinen, A.; Karhunen, J.; Oja, E. (2001): ''Independent Component Analysis'', New York: Wiley. (Introductory chapter)
* Hyvärinen, A.; Oja, E. (2000): "Independent Component Analysis: Algorithms and Application", ''Neural Networks'', 13(4-5):411-430. (Technical but pedagogical introduction)
* Comon, P.; Jutten C. (2010): ''Handbook of Blind Source Separation, Independent Component Analysis and Applications''. Academic Press, Oxford UK.
* Lee, T.-W. (1998): ''Independent component analysis: Theory and applications'', Boston, Mass: Kluwer Academic Publishers.
* Acharyya, Ranjan (2008): ''A New Approach for Blind Source Separation of Convolutive Sources - Wavelet Based Separation Using Shrinkage Function''. (This book focuses on unsupervised learning with Blind Source Separation)

* What is independent component analysis? by Aapo Hyvärinen
* A Tutorial on Independent Component Analysis
* FastICA as a package for Matlab, in R language, C++
* ICALAB Toolboxes for Matlab, developed at RIKEN
* High Performance Signal Analysis Toolkit: provides C++ implementations of FastICA and Infomax
* ICA toolbox: Matlab tools for ICA with Bell-Sejnowski, Molgedey-Schuster and mean field ICA. Developed at DTU.
* Demonstration of the cocktail party problem (archived 2010-03-13: https://web.archive.org/web/20100313152128/http://www.cis.hut.fi/projects/ica/cocktail/cocktail_en.cgi)
* EEGLAB Toolbox: ICA of EEG for Matlab, developed at UCSD
* FMRLAB Toolbox: ICA of fMRI for Matlab, developed at UCSD
* MELODIC, part of the FMRIB Software Library
* Discussion of ICA used in a biomedical shape-representation context
* FastICA, CuBICA, JADE and TDSEP algorithm for Python and more...
* Group ICA Toolbox and Fusion ICA Toolbox
* Tutorial: Using ICA for cleaning EEG signals


In signal processing, independent component analysis (ICA) is a computational method for separating a multivariate signal into additive subcomponents. This is done by assuming that at most one subcomponent is Gaussian and that the subcomponents are statistically independent from each other. ICA was invented by Jeanny Hérault and Christian Jutten in 1985. ICA is a special case of blind source separation. A common example application of ICA is the "cocktail party problem" of listening in on one person's speech in a noisy room.

Introduction

Independent component analysis attempts to decompose a multivariate signal into independent non-Gaussian signals. As an example, sound is usually a signal that is composed of the numerical addition, at each time ''t'', of signals from several sources. The question then is whether it is possible to separate these contributing sources from the observed total signal. When the statistical independence assumption is correct, blind ICA separation of a mixed signal gives very good results (Comon, P.; Jutten C. (2010): Handbook of Blind Source Separation, Independent Component Analysis and Applications. Academic Press, Oxford UK). ICA is also used, for analysis purposes, on signals that are not supposed to be generated by mixing.

A simple application of ICA is the "cocktail party problem", where the underlying speech signals are separated from sample data consisting of people talking simultaneously in a room. Usually the problem is simplified by assuming no time delays or echoes. Note that a filtered and delayed signal is a copy of a dependent component, and thus the statistical independence assumption is not violated.

Mixing weights for constructing the ''M'' observed signals from the ''N'' components can be placed in an ''M'' × ''N'' matrix. An important thing to consider is that if ''N'' sources are present, at least ''N'' observations (e.g. microphones if the observed signal is audio) are needed to recover the original signals. When there are an equal number of observations and source signals, the mixing matrix is square (''M'' = ''N''). The underdetermined (''M'' < ''N'') and overdetermined (''M'' > ''N'') cases have also been investigated.

The success of ICA separation of mixed signals relies on two assumptions and three effects of mixing source signals.

Two assumptions:

# The source signals are independent of each other.
# The values in each source signal have non-Gaussian distributions.

Three effects of mixing source signals:

# Independence: As per assumption 1, the source signals are independent; however, their signal mixtures are not. This is because the signal mixtures share the same source signals.
# Normality: According to the central limit theorem, the distribution of a sum of independent random variables with finite variance tends towards a Gaussian distribution. Loosely speaking, a sum of two independent random variables usually has a distribution that is closer to Gaussian than either of the two original variables. Here we consider the value of each signal as the random variable.
# Complexity: The temporal complexity of any signal mixture is greater than that of its simplest constituent source signal.

Those principles contribute to the basic establishment of ICA. If the signals extracted from a set of mixtures are independent and have non-Gaussian distributions or have low complexity, then they must be source signals.

Another common example is image steganography, where one image is embedded within another by linear mixing and ICA is used to separate them again. For instance, two grayscale images can be linearly combined to create mixed images in which the hidden content is visually imperceptible. ICA can then be used to recover the original source images from the mixtures. This technique underlies digital watermarking, which allows the embedding of ownership information into images, as well as more covert applications such as undetected information transmission. In such applications, ICA serves to unmix the data based on statistical independence, making it possible to extract hidden components that are not apparent in the observed data.

Steganographic techniques, including those potentially involving ICA-based analysis, have been used in real-world cyberespionage cases. In 2010, the FBI uncovered a Russian spy network known as the "Illegals Program" (Operation Ghost Stories), where agents used custom-built steganography tools to conceal encrypted text messages within image files shared online. In another case, a former General Electric engineer, Xiaoqing Zheng, was convicted in 2022 for economic espionage. Zheng used steganography to exfiltrate sensitive turbine technology by embedding proprietary data within image files for transfer to entities in China.
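The "normality" effect of mixing listed above can be checked numerically. The following is a minimal sketch, assuming NumPy is available and using two synthetic Laplace-distributed sources (an illustrative choice, not taken from the article); the helper name `excess_kurtosis` is hypothetical. It compares the excess kurtosis (zero for a Gaussian) of the sources with that of an equal-weight mixture.

```python
import numpy as np

def excess_kurtosis(y):
    """Sample excess kurtosis; close to zero for a Gaussian signal."""
    y = y - y.mean()
    return np.mean(y**4) / np.mean(y**2) ** 2 - 3.0

rng = np.random.default_rng(0)
n = 200_000
# Two independent, unit-variance, non-Gaussian (Laplace) sources.
s1 = rng.laplace(scale=1 / np.sqrt(2), size=n)
s2 = rng.laplace(scale=1 / np.sqrt(2), size=n)
# Equal-weight mixture, rescaled so that it also has unit variance.
mix = (s1 + s2) / np.sqrt(2)

k_s1, k_s2, k_mix = (excess_kurtosis(v) for v in (s1, s2, mix))
print(f"sources: {k_s1:.2f}, {k_s2:.2f}   mixture: {k_mix:.2f}")
```

Under these assumptions the mixture's excess kurtosis is noticeably closer to zero than that of either source, which is the property that non-Gaussianity-based ICA methods exploit.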

Defining component independence

ICA finds the independent components (also called factors, latent variables or sources) by maximizing the statistical independence of the estimated components. We may choose one of many ways to define a proxy for independence, and this choice governs the form of the ICA algorithm. The two broadest definitions of independence for ICA are

# Minimization of mutual information
# Maximization of non-Gaussianity

The minimization-of-mutual-information (MMI) family of ICA algorithms uses measures like Kullback-Leibler divergence and maximum entropy. The non-Gaussianity family of ICA algorithms, motivated by the central limit theorem, uses kurtosis and negentropy.

Typical algorithms for ICA use centering (subtract the mean to create a zero mean signal), whitening (usually with the eigenvalue decomposition), and dimensionality reduction as preprocessing steps in order to simplify and reduce the complexity of the problem for the actual iterative algorithm.
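The centering and whitening steps can be sketched as follows. This is an illustrative NumPy sketch, not the preprocessing of any particular ICA package; the function name `center_and_whiten`, the synthetic sources and the mixing matrix are all assumptions made for the example, and dimensionality reduction is omitted.

```python
import numpy as np

def center_and_whiten(X):
    """Center and whiten X, given with shape (n_signals, n_samples).

    Returns the whitened data and the whitening matrix.
    """
    Xc = X - X.mean(axis=1, keepdims=True)       # centering: zero-mean rows
    cov = Xc @ Xc.T / Xc.shape[1]                # sample covariance
    eigvals, eigvecs = np.linalg.eigh(cov)       # cov = Q D Q^T
    K = np.diag(eigvals ** -0.5) @ eigvecs.T     # whitening matrix D^{-1/2} Q^T
    return K @ Xc, K

rng = np.random.default_rng(0)
S = rng.uniform(-1, 1, size=(2, 10_000))         # hypothetical sources
A = np.array([[1.0, 0.5], [0.3, 2.0]])           # hypothetical mixing matrix
X = A @ S + np.array([[5.0], [-2.0]])            # mixtures with a non-zero mean

Z, K = center_and_whiten(X)
cov_Z = Z @ Z.T / Z.shape[1]
print(np.round(cov_Z, 6))                        # approximately the identity
```

After this step the signals are uncorrelated with unit variance, so the iterative part of an ICA algorithm only has to search over rotations.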

Mathematical definitions

Linear independent component analysis can be divided into noiseless and noisy cases, where noiseless ICA is a special case of noisy ICA. Nonlinear ICA should be considered as a separate case.

General Derivation

In the classical ICA model, it is assumed that the observed data $\mathbf{x}_i \in \mathbb{R}^m$ at time $t_i$ is generated from source signals $\mathbf{s}_i \in \mathbb{R}^m$ via a linear transformation $\mathbf{x}_i = A\mathbf{s}_i$, where $A$ is an unknown, invertible mixing matrix. To recover the source signals, the data is first centered (zero mean), and then whitened so that the transformed data has unit covariance. This whitening reduces the problem from estimating a general matrix $A$ to estimating an orthogonal matrix $V$, significantly simplifying the search for independent components. If the covariance matrix of the centered data is $\Sigma_x = A A^T$ (taking the sources to have unit variance), then using the eigen-decomposition $\Sigma_x = Q D Q^T$, the whitening transformation can be taken as $D^{-1/2} Q^T$. This step ensures that the recovered sources are uncorrelated and of unit variance, leaving only the task of rotating the whitened data to maximize statistical independence. This general derivation underlies many ICA algorithms and is foundational in understanding the ICA model.

Reduced Mixing Problem

Independent component analysis (ICA) addresses the problem of recovering a set of unobserved source signals $\mathbf{s}_k$ from observed mixed signals $\mathbf{x}_k$, based on the linear mixing model:

: $$\mathbf{x}_k = A\,\mathbf{s}_k,$$

where $A$ is an invertible $m \times m$ matrix called the mixing matrix, $\mathbf{s}_k$ represents the $m$-dimensional vector containing the values of the sources at time $t_k$, and $\mathbf{x}_k$ is the corresponding vector of observed values at time $t_k$. The goal is to estimate both $A$ and the source signals $\{\mathbf{s}_k\}$ solely from the observed data $\{\mathbf{x}_k\}$.

After centering, the Gram matrix of the data matrix $X^*$ (whose rows are the centered observations $\mathbf{x}_k^*$) is computed as:

: $$(X^*)^T X^* = Q D Q^T,$$

where $D$ is a diagonal matrix with positive entries (assuming $X^*$ has maximum rank), and $Q$ is an orthogonal matrix. Writing the SVD of the mixing matrix $A = U \Sigma V^T$ and comparing with $A A^T = U \Sigma^2 U^T$ (which equals the Gram matrix when the sources are normalized), the mixing matrix $A$ has the form

: $$A = Q D^{1/2} V^T.$$

So, the normalized source values satisfy $\mathbf{s}_k^* = V \mathbf{y}_k^*$, where $\mathbf{y}_k^* = D^{-1/2} Q^T \mathbf{x}_k^*$. Thus, ICA reduces to finding the orthogonal matrix $V$. This matrix can be computed using optimization techniques via projection pursuit methods (see Projection Pursuit).
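The reduction to an orthogonal matrix can be illustrated numerically. The sketch below is a hedged NumPy example with a hypothetical 3 × 3 mixing matrix and synthetic unit-variance sources (both assumptions): it whitens the mixtures and checks that what remains of the mixing, $D^{-1/2} Q^T A$, is close to orthogonal.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100_000
# Independent sources with unit variance (uniform on [-sqrt(3), sqrt(3)]).
S = rng.uniform(-np.sqrt(3), np.sqrt(3), size=(3, n))
A = np.array([[1.0, 0.5, 0.2],
              [0.3, 2.0, -0.4],
              [-0.6, 0.1, 1.5]])                 # hypothetical mixing matrix
X = A @ S
Xc = X - X.mean(axis=1, keepdims=True)           # centering

# Eigen-decomposition of the sample covariance: Q D Q^T.
D, Q = np.linalg.eigh(Xc @ Xc.T / n)
whitener = np.diag(D ** -0.5) @ Q.T              # D^{-1/2} Q^T

# Residual mixing after whitening; it should be nearly orthogonal.
residual = whitener @ A
print(np.round(residual @ residual.T, 2))        # close to the identity
```

The small deviation from the identity comes from estimating the covariance from a finite sample.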

Well-known algorithms for ICA include infomax, FastICA, JADE, and kernel-independent component analysis, among others. In general, ICA cannot identify the actual number of source signals, a uniquely correct ordering of the source signals, nor the proper scaling (including sign) of the source signals.
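The ordering and scaling ambiguities follow directly from the linear model: reordering and rescaling the sources can always be compensated in the mixing matrix. A minimal NumPy sketch, using hypothetical sources and a hypothetical mixing matrix, shows two different (sources, mixing matrix) pairs that produce exactly the same observations.

```python
import numpy as np

rng = np.random.default_rng(0)
S = rng.laplace(size=(2, 1000))              # hypothetical sources
A = np.array([[1.0, 0.5], [0.3, 2.0]])       # hypothetical mixing matrix
X = A @ S                                    # observed mixtures

P = np.array([[0.0, 1.0], [1.0, 0.0]])       # permutation: swap the two sources
L = np.diag([-2.0, 0.5])                     # rescale, and flip one sign

S_alt = L @ P @ S                            # reordered, rescaled sources
A_alt = A @ np.linalg.inv(L @ P)             # compensating mixing matrix
X_alt = A_alt @ S_alt

same = np.allclose(X, X_alt)
print(same)
```

Since the observations alone cannot distinguish the two pairs, an ICA estimate is only defined up to such a permutation and scaling.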

ICA is important to blind signal separation and has many practical applications. It is closely related to (or even a special case of) the search for a factorial code of the data, i.e., a new vector-valued representation of each data vector such that it gets uniquely encoded by the resulting code vector (loss-free coding), but the code components are statistically independent.

Linear noiseless ICA

The components $x_i$ of the observed random vector $\mathbf{x} = (x_1, \ldots, x_m)^T$ are generated as a sum of the independent components $s_k$, $k = 1, \ldots, n$:

: $$x_i = a_{i,1} s_1 + \cdots + a_{i,k} s_k + \cdots + a_{i,n} s_n$$

weighted by the mixing weights $a_{i,k}$.

The same generative model can be written in vector form as $\mathbf{x} = \sum_{k=1}^{n} s_k \mathbf{a}_k$, where the observed random vector $\mathbf{x}$ is represented by the basis vectors $\mathbf{a}_k = (a_{1,k}, \ldots, a_{m,k})^T$. The basis vectors $\mathbf{a}_k$ form the columns of the mixing matrix $A = (\mathbf{a}_1, \ldots, \mathbf{a}_n)$ and the generative formula can be written as $\mathbf{x} = A\mathbf{s}$, where $\mathbf{s} = (s_1, \ldots, s_n)^T$.

Given the model and realizations (samples) $\mathbf{x}_1, \ldots, \mathbf{x}_N$ of the random vector $\mathbf{x}$, the task is to estimate both the mixing matrix $A$ and the sources $\mathbf{s}$. This is done by adaptively calculating the vectors $\mathbf{w}$ and setting up a cost function which either maximizes the non-Gaussianity of the calculated $s_k = \mathbf{w}^T \mathbf{x}$ or minimizes the mutual information. In some cases, a priori knowledge of the probability distributions of the sources can be used in the cost function.

The original sources $\mathbf{s}$ can be recovered by multiplying the observed signals $\mathbf{x}$ with the inverse of the mixing matrix, $W = A^{-1}$, also known as the unmixing matrix. Here it is assumed that the mixing matrix is square ($n = m$). If the number of basis vectors is greater than the dimensionality of the observed vectors, $n > m$, the task is overcomplete but is still solvable with the pseudo inverse.

Linear noisy ICA

With the added assumption of zero-mean and uncorrelated Gaussian noise $\mathbf{n} \sim N(0, \operatorname{diag}(\Sigma))$, the ICA model takes the form $\mathbf{x} = A\mathbf{s} + \mathbf{n}$.

Nonlinear ICA

The mixing of the sources does not need to be linear. Using a nonlinear mixing function $f(\cdot \mid \theta)$ with parameters $\theta$, the nonlinear ICA model is $\mathbf{x} = f(\mathbf{s} \mid \theta) + \mathbf{n}$.

Identifiability

The independent components are identifiable up to a permutation and scaling of the sources. This identifiability requires that:

* At most one of the sources $s_k$ is Gaussian,
* The number of observed mixtures, $m$, must be at least as large as the number of estimated components $n$: $m \ge n$.

The second condition is equivalent to saying that the mixing matrix $A$ must be of full rank for its inverse to exist.

Binary ICA

A special variant of ICA is binary ICA in which both signal sources and monitors are in binary form and observations from monitors are disjunctive mixtures of binary independent sources. The problem was shown to have applications in many domains including medical diagnosis, multi-cluster assignment, network tomography and internet resource management.

Let $x_1, x_2, \ldots, x_m$ be the set of binary variables from $m$ monitors and $y_1, y_2, \ldots, y_n$ be the set of binary variables from $n$ sources. Source-monitor connections are represented by the (unknown) mixing matrix $G$, where $g_{ij} = 1$ indicates that the signal from the ''i''-th source can be observed by the ''j''-th monitor. The system works as follows: at any time, if a source $i$ is active ($y_i = 1$) and it is connected to the monitor $j$ ($g_{ij} = 1$) then the monitor $j$ will observe some activity ($x_j = 1$). Formally we have:

: $$x_j = \bigvee_{i=1}^{n} (g_{ij} \wedge y_i), \quad j = 1, 2, \ldots, m,$$

where $\wedge$ is Boolean AND and $\vee$ is Boolean OR. Noise is not explicitly modelled; rather, it can be treated as independent sources.
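The disjunctive mixing model above can be simulated directly. The sketch below is illustrative only: the function name `binary_or_mixture`, the array layout (sources along rows of the connection matrix, time steps along columns of the source matrix) and the toy values are assumptions, and it shows only the forward model, not the inference of the mixing matrix.

```python
import numpy as np

def binary_or_mixture(G, Y):
    """Forward model of binary ICA.

    G[i, j] = 1 if source i can be observed by monitor j.
    Y has shape (n_sources, n_samples).
    Returns X with shape (n_monitors, n_samples), where
    X[j, t] = OR over i of (G[i, j] AND Y[i, t]).
    """
    G = np.asarray(G, dtype=bool)
    Y = np.asarray(Y, dtype=bool)
    # AND every source with its connections, then OR over the sources.
    return np.any(G[:, :, None] & Y[:, None, :], axis=0).astype(int)

G = [[1, 0],
     [1, 1],
     [0, 1]]            # 3 sources, 2 monitors
Y = [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [0, 0, 1, 0]]      # columns are time steps
X = binary_or_mixture(G, Y)
print(X)
```

In this toy example the second source is connected to both monitors, so both report activity at the second time step, and neither monitor fires when no source is active.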

The above problem can be heuristically solved (Johan Himberg and Aapo Hyvärinen, ''Independent Component Analysis For Binary Data: An Experimental Study'', Proc. Int. Workshop on Independent Component Analysis and Blind Signal Separation (ICA2001), San Diego, California, 2001) by assuming the variables are continuous and running FastICA on the binary observation data to get the mixing matrix $G$ (real values), then applying rounding techniques to $G$ to obtain the binary values. This approach has been shown to produce a highly inaccurate result.

Another method is to use dynamic programming: recursively breaking the observation matrix $X$ into its sub-matrices and running the inference algorithm on these sub-matrices. The key observation which leads to this algorithm is that the sub-matrix $X^0$ of $X$ where $x_{ij} = 0, \forall j$ corresponds to the unbiased observation matrix of hidden components that do not have a connection to the $i$-th monitor. Experimental results from Huy Nguyen and Rong Zheng (''Binary Independent Component Analysis With or Mixtures'', IEEE Transactions on Signal Processing, Vol. 59, Issue 7, July 2011, pp. 3168–3181) show that this approach is accurate under moderate noise levels.

The Generalized Binary ICA framework introduces a broader problem formulation which does not necessitate any knowledge of the generative model. In other words, this method attempts to decompose a source into its independent components (as much as possible, and without losing any information) with no prior assumption on the way it was generated. Although this problem appears quite complex, it can be accurately solved with a branch and bound search tree algorithm or tightly upper bounded with a single multiplication of a matrix with a vector.

Methods for blind source separation

Projection pursuit

Signal mixtures tend to have Gaussian probability density functions, and source signals tend to have non-Gaussian probability density functions. Each source signal can be extracted from a set of signal mixtures by taking the inner product of a weight vector and those signal mixtures where this inner product provides an orthogonal projection of the signal mixtures. The remaining challenge is finding such a weight vector. One type of method for doing so is projection pursuit (James V. Stone (2004), "Independent Component Analysis: A Tutorial Introduction", The MIT Press, Cambridge, Massachusetts, London, England).

Projection pursuit seeks one projection at a time such that the extracted signal is as non-Gaussian as possible. This contrasts with ICA, which typically extracts ''M'' signals simultaneously from ''M'' signal mixtures, which requires estimating a ''M'' × ''M'' unmixing matrix. One practical advantage of projection pursuit over ICA is that fewer than ''M'' signals can be extracted if required, where each source signal is extracted from ''M'' signal mixtures using an ''M''-element weight vector.

We can use kurtosis to recover multiple source signals by finding the correct weight vectors with the use of projection pursuit.

The kurtosis of the probability density function of a signal, for a finite sample, is computed as

: $$K = \frac{\operatorname{E}[(\mathbf{y} - \overline{\mathbf{y}})^4]}{\left(\operatorname{E}[(\mathbf{y} - \overline{\mathbf{y}})^2]\right)^2} - 3$$

where $\overline{\mathbf{y}}$ is the sample mean of $\mathbf{y}$, the extracted signals. The constant 3 ensures that Gaussian signals have zero kurtosis, super-Gaussian signals have positive kurtosis, and sub-Gaussian signals have negative kurtosis. The denominator is the squared variance of $\mathbf{y}$, and ensures that the measured kurtosis takes account of signal variance. The goal of projection pursuit is to maximize the kurtosis, and make the extracted signal as non-normal as possible.

Using kurtosis as a measure of non-normality, we can now examine how the kurtosis of a signal $\mathbf{y} = \mathbf{w}^T \mathbf{x}$ extracted from a set of ''M'' mixtures $\mathbf{x} = (x_1, x_2, \ldots, x_M)^T$ varies as the weight vector $\mathbf{w}$ is rotated around the origin. Given our assumption that each source signal $\mathbf{s}$ is super-Gaussian we would expect:

# the kurtosis of the extracted signal $\mathbf{y}$ to be maximal precisely when $\mathbf{y} = \mathbf{s}$.
# the kurtosis of the extracted signal $\mathbf{y}$ to be maximal when $\mathbf{w}$ is orthogonal to the projected axes $S_1$ or $S_2$, because we know the optimal weight vector should be orthogonal to a transformed axis $S_1$ or $S_2$.

For multiple source mixture signals, we can use kurtosis and Gram-Schmidt orthogonalization (GSO) to recover the signals. Given ''M'' signal mixtures in an ''M''-dimensional space, GSO projects these data points onto an (''M-1'')-dimensional space by using the weight vector. We can guarantee the independence of the extracted signals with the use of GSO.

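In order to find the correct value of $\mathbf{w}$, we can use a gradient method (gradient ascent, since the kurtosis is to be maximized). We first whiten the data, transforming $\mathbf{x}$ into a new mixture $\mathbf{z} = (z_1, z_2, \ldots, z_M)^T$ which has unit variance. This process can be achieved by applying singular value decomposition to $\mathbf{x}$,

: $$\mathbf{x} = U D V^T.$$

Rescaling each vector $U_i$ to unit variance, $U_i \leftarrow U_i / \sqrt{\operatorname{E}(U_i^2)}$, we let $\mathbf{z} = U$. The signal extracted by a weight vector $\mathbf{w}$ is $\mathbf{y} = \mathbf{w}^T \mathbf{z}$. If the weight vector $\mathbf{w}$ has unit length, then the variance of $\mathbf{y}$ is also 1, that is $\operatorname{E}[(\mathbf{w}^T \mathbf{z})^2] = 1$. The kurtosis can thus be written as:

: $$K = \frac{\operatorname{E}[\mathbf{y}^4]}{\left(\operatorname{E}[\mathbf{y}^2]\right)^2} - 3 = \operatorname{E}[(\mathbf{w}^T \mathbf{z})^4] - 3.$$

The updating process for $\mathbf{w}$ is:

: $$\mathbf{w}_{new} = \mathbf{w}_{old} + \eta \operatorname{E}[\mathbf{z} (\mathbf{w}_{old}^T \mathbf{z})^3],$$

where $\eta$ is a small constant to guarantee that $\mathbf{w}$ converges to the optimal solution. After each update, we normalize $\mathbf{w}_{new} = \mathbf{w}_{new} / |\mathbf{w}_{new}|$, set $\mathbf{w}_{old} = \mathbf{w}_{new}$, and repeat the updating process until convergence. We can also use another algorithm to update the weight vector $\mathbf{w}$.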
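The kurtosis-based update can be sketched in a few lines of NumPy. This is a minimal illustration rather than a reference implementation: the Laplace sources, the mixing matrix, the step size `eta` and the iteration count are all assumed values, whitening is done with an eigen-decomposition of the covariance instead of an SVD of the data, and gradient ascent is used because the sources are assumed super-Gaussian.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50_000
# Two independent super-Gaussian (Laplace) sources with unit variance.
S = rng.laplace(scale=1 / np.sqrt(2), size=(2, n))
A = np.array([[1.0, 0.6], [0.5, 1.0]])     # hypothetical mixing matrix
X = A @ S

# Whitening: center, then rotate and rescale to unit covariance.
Xc = X - X.mean(axis=1, keepdims=True)
d, Q = np.linalg.eigh(Xc @ Xc.T / n)
Z = np.diag(d ** -0.5) @ Q.T @ Xc

eta = 0.1                                  # step size (assumed value)
w = rng.normal(size=2)
w /= np.linalg.norm(w)
for _ in range(200):
    y = w @ Z
    # Gradient of E[(w^T z)^4] - 3 with respect to w, up to a factor of 4.
    grad = (Z * y**3).mean(axis=1)
    w = w + eta * grad                     # ascent step
    w /= np.linalg.norm(w)                 # unit length keeps var(y) = 1

y = w @ Z
kurt = np.mean(y**4) - 3.0
corr = [abs(np.corrcoef(y, s)[0, 1]) for s in S]
print(f"kurtosis: {kurt:.2f}, |corr| with each source: {np.round(corr, 3)}")
```

With these settings the extracted signal should line up with one of the two sources (up to sign); which one is found depends on the random starting vector.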

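Another approach is using negentropy instead of kurtosis. Using negentropy is a more robust method than kurtosis, as kurtosis is very sensitive to outliers. The negentropy methods are based on an important property of the Gaussian distribution: a Gaussian variable has the largest entropy among all continuous random variables of equal variance. This is also the reason why we want to find the most non-Gaussian variables. A simple proof can be found in Differential entropy.

: $$J(x) = S(y) - S(x)$$

where $y$ is a Gaussian random variable with the same covariance matrix as $x$, and

: $$S(x) = -\int p_x(u) \log p_x(u) \, du.$$

An approximation for negentropy is

: $$J(x) = \frac{1}{12} \left(\operatorname{E}(x^3)\right)^2 + \frac{1}{48} \left(\operatorname{kurt}(x)\right)^2.$$

A proof can be found in the original papers of Comon; it has been reproduced in the book ''Independent Component Analysis'' by Aapo Hyvärinen, Juha Karhunen, and Erkki Oja. This approximation also suffers from the same problem as kurtosis (sensitivity to outliers). Other approaches have been developed.

: $$J(y) = k_1 \left(\operatorname{E}(G_1(y))\right)^2 + k_2 \left(\operatorname{E}(G_2(y)) - \operatorname{E}(G_2(v))\right)^2,$$

where $v$ is a standard Gaussian variable and $k_1$, $k_2$ are positive constants. A choice of $G_1$ and $G_2$ is

: $$G_1 = \frac{1}{a_1} \log(\cosh(a_1 u)) \quad \text{and} \quad G_2 = -\exp\left(-\frac{u^2}{2}\right).$$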
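Both kinds of approximation can be compared on synthetic data. The following NumPy sketch is illustrative: the function names are hypothetical, only the single contrast term with the function -exp(-u²/2) is evaluated, and the scale constant in front of that term is omitted (an assumption of this example). The expected value of that function for a standard Gaussian is -1/√2.

```python
import numpy as np

def negentropy_cumulant(y):
    """Cumulant-based approximation: E[y^3]^2 / 12 + kurt(y)^2 / 48."""
    y = (y - y.mean()) / y.std()            # standardize: zero mean, unit variance
    kurt = np.mean(y**4) - 3.0
    return np.mean(y**3) ** 2 / 12 + kurt**2 / 48

def negentropy_gauss_contrast(y):
    """Single contrast term (E[G(y)] - E[G(v)])^2 with G(u) = -exp(-u^2 / 2).

    The scale constant is omitted (assumption of this sketch).
    """
    y = (y - y.mean()) / y.std()
    expected_gauss = -1 / np.sqrt(2)        # E[G(v)] for a standard normal v
    return (np.mean(-np.exp(-y**2 / 2)) - expected_gauss) ** 2

rng = np.random.default_rng(0)
gauss = rng.normal(size=200_000)
laplace = rng.laplace(size=200_000)

results = {
    name: (negentropy_cumulant(sig), negentropy_gauss_contrast(sig))
    for name, sig in (("gaussian", gauss), ("laplace", laplace))
}
for name, (j_cum, j_g) in results.items():
    print(f"{name:9s} cumulant: {j_cum:.4f}  contrast: {j_g:.6f}")
```

Both measures should be near zero for the Gaussian sample and clearly positive for the Laplace sample. The contrast version uses a bounded function, which is why it is less affected by outliers than the fourth-moment estimate.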

Based on infomax

Infomax ICA (Bell, A. J.; Sejnowski, T. J. (1995). "An Information-Maximization Approach to Blind Separation and Blind Deconvolution", Neural Computation, 7, 1129-1159) is essentially a multivariate, parallel version of projection pursuit. Whereas projection pursuit extracts a series of signals one at a time from a set of ''M'' signal mixtures, ICA extracts ''M'' signals in parallel. This tends to make ICA more robust than projection pursuit (James V. Stone (2004). "Independent Component Analysis: A Tutorial Introduction", The MIT Press, Cambridge, Massachusetts, London, England).

The projection pursuit method uses Gram-Schmidt orthogonalization to ensure the independence of the extracted signal, while ICA uses infomax and maximum likelihood estimation to ensure the independence of the extracted signal. The non-normality of the extracted signal is achieved by assigning an appropriate model, or prior, for the signal.

The process of ICA based on infomax in short is: given a set of signal mixtures $\mathbf{x}$ and a set of identical independent model cumulative distribution functions (cdfs) $g$, we seek the unmixing matrix $\mathbf{W}$ which maximizes the joint entropy of the signals $\mathbf{Y} = g(\mathbf{y})$, where $\mathbf{y} = \mathbf{W}\mathbf{x}$ are the signals extracted by $\mathbf{W}$. Given the optimal $\mathbf{W}$, the signals $\mathbf{Y}$ have maximum entropy and are therefore independent, which ensures that the extracted signals $\mathbf{y} = g^{-1}(\mathbf{Y})$ are also independent. $g$ is an invertible function, and is the signal model. Note that if the source signal model probability density function $p_s$ matches the probability density function $p_{\mathbf{y}}$ of the extracted signal, then maximizing the joint entropy of $\mathbf{Y}$ also maximizes the amount of mutual information between $\mathbf{x}$ and $\mathbf{Y}$. For this reason, using entropy to extract independent signals is known as infomax.

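Consider the entropy of the vector variable $\mathbf{Y} = g(\mathbf{y})$, where $\mathbf{y} = \mathbf{W}\mathbf{x}$ is the set of signals extracted by the unmixing matrix $\mathbf{W}$. For a finite set of $N$ values $\mathbf{Y}^1, \dots, \mathbf{Y}^N$ sampled from a distribution with pdf $p_{\mathbf{Y}}$, the entropy of $\mathbf{Y}$ can be estimated as:

: $H(\mathbf{Y}) = -\frac{1}{N}\sum_{t=1}^{N} \ln p_{\mathbf{Y}}(\mathbf{Y}^t)$

The joint pdf $p_{\mathbf{Y}}$ can be shown to be related to the joint pdf $p_{\mathbf{y}}$ of the extracted signals by the multivariate form:

: $p_{\mathbf{Y}}(\mathbf{Y}) = \frac{p_{\mathbf{y}}(\mathbf{y})}{\left|\frac{\partial \mathbf{Y}}{\partial \mathbf{y}}\right|}$

where $\mathbf{J} = \frac{\partial \mathbf{Y}}{\partial \mathbf{y}}$ is the Jacobian matrix. We have $|\mathbf{J}| = g'(\mathbf{y})$, and $g'$ is the pdf assumed for source signals, $g' = p_{\mathbf{s}}$, therefore,

: $p_{\mathbf{Y}}(\mathbf{Y}) = \frac{p_{\mathbf{y}}(\mathbf{y})}{\left|\frac{\partial \mathbf{Y}}{\partial \mathbf{y}}\right|} = \frac{p_{\mathbf{y}}(\mathbf{y})}{p_{\mathbf{s}}(\mathbf{y})}$

therefore,

: $H(\mathbf{Y}) = -\frac{1}{N}\sum_{t=1}^{N} \ln \frac{p_{\mathbf{y}}(\mathbf{y}^t)}{p_{\mathbf{s}}(\mathbf{y}^t)}$

We know that when $p_{\mathbf{y}} = p_{\mathbf{s}}$, $p_{\mathbf{Y}}$ is a uniform distribution, and $H(\mathbf{Y})$ is maximized. Since

: $p_{\mathbf{y}}(\mathbf{y}) = \frac{p_{\mathbf{x}}(\mathbf{x})}{\left|\frac{\partial \mathbf{y}}{\partial \mathbf{x}}\right|} = \frac{p_{\mathbf{x}}(\mathbf{x})}{|\mathbf{W}|}$

where $|\mathbf{W}|$ is the absolute value of the determinant of the unmixing matrix $\mathbf{W}$. Therefore,

: $H(\mathbf{Y}) = -\frac{1}{N}\sum_{t=1}^{N} \ln \frac{p_{\mathbf{x}}(\mathbf{x}^t)}{|\mathbf{W}|\, p_{\mathbf{s}}(\mathbf{y}^t)}$

so,

: $H(\mathbf{Y}) = \frac{1}{N}\sum_{t=1}^{N} \ln p_{\mathbf{s}}(\mathbf{y}^t) + \ln|\mathbf{W}| + H(\mathbf{x})$

since $H(\mathbf{x}) = -\frac{1}{N}\sum_{t=1}^{N} \ln p_{\mathbf{x}}(\mathbf{x}^t)$. Because $H(\mathbf{x})$ does not depend on $\mathbf{W}$, we can instead maximize the function

: $h(\mathbf{Y}) = \frac{1}{N}\sum_{t=1}^{N} \ln p_{\mathbf{s}}(\mathbf{y}^t) + \ln|\mathbf{W}|$

to achieve the independence of the extracted signal.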
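
The role of the determinant in the derivation above can be checked numerically. Under a linear map from the mixtures to the extracted signals, densities scale by one over the absolute determinant of the unmixing matrix, so the differential entropy shifts by the logarithm of that absolute determinant. The sketch below verifies this for a Gaussian, which is used only because its entropy has a closed form (a Gaussian is not a useful source model for ICA). The covariance `cov_x`, the matrix `W`, and the helper `gaussian_entropy` are illustrative assumptions, not part of the original derivation.

```python
import numpy as np

def gaussian_entropy(cov):
    """Differential entropy (nats) of a zero-mean Gaussian with covariance `cov`."""
    n = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (n * np.log(2.0 * np.pi * np.e) + logdet)

# Hypothetical covariance of the mixtures x and hypothetical unmixing matrix W.
cov_x = np.array([[2.0, 0.6],
                  [0.6, 1.0]])
W = np.array([[1.5, -0.5],
              [0.3, 2.0]])

# y = W x is Gaussian with covariance W cov_x W^T.
cov_y = W @ cov_x @ W.T

H_x = gaussian_entropy(cov_x)
H_y = gaussian_entropy(cov_y)
log_abs_det_W = np.log(abs(np.linalg.det(W)))

# Change of variables p_y(y) = p_x(x) / |det W| implies H(y) = H(x) + ln|det W|.
print(H_y - H_x, log_abs_det_W)
```

The two printed numbers agree up to floating-point error, which is the entropy shift that appears as the log-determinant term in the objective.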

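If the ''M'' marginal pdfs of the model joint pdf $p_{\mathbf{s}}$ are independent, and we use the common super-Gaussian model pdf for the source signals, $p_{\mathbf{s}} = (1 - \tanh(\mathbf{s})^2)$ (up to a normalizing constant), then we have

: $h(\mathbf{Y}) = \frac{1}{N}\sum_{i=1}^{M}\sum_{t=1}^{N} \ln\left(1 - \tanh(\mathbf{w}_i^{\mathsf{T}}\mathbf{x}^t)^2\right) + \ln|\mathbf{W}|$

where $\mathbf{w}_i^{\mathsf{T}}$ is the $i$-th row of $\mathbf{W}$. In summary, given an observed signal mixture $\mathbf{x}$, the corresponding set of extracted signals $\mathbf{y}$ and source signal model $p_{\mathbf{s}} = g'$, we can find the optimal unmixing matrix $\mathbf{W}$ and make the extracted signals independent and non-Gaussian. As in the projection pursuit case, a gradient method (gradient ascent on $h$, or equivalently gradient descent on $-h$) can be used to find the optimal unmixing matrix.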
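
The gradient-based search described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not a reference implementation. It assumes the tanh-based super-Gaussian model, so the objective is the sample mean of the sum over components of ln(1 - tanh(y)^2), plus ln|det W|. The Laplace-distributed sources, the mixing matrix `A`, the step size, and the iteration count are made up for the demonstration, and the names `infomax_objective` and `infomax_ica` are hypothetical. The update uses the natural-gradient form of the ascent step (the plain gradient multiplied by W^T W), a common variant chosen here because it avoids a matrix inverse.

```python
import numpy as np

def infomax_objective(W, X):
    """h(W) = (1/N) sum_i sum_t ln(1 - tanh(w_i^T x^t)^2) + ln|det W|."""
    Y = np.abs(W @ X)
    # ln(1 - tanh(y)^2) = ln sech^2(y), written in an overflow-safe form.
    log_ps = 2.0 * (np.log(2.0) - Y - np.log1p(np.exp(-2.0 * Y)))
    return log_ps.sum() / X.shape[1] + np.log(abs(np.linalg.det(W)))

def infomax_ica(X, n_iter=1000, lr=0.1):
    """Natural-gradient ascent on h(W); X has shape (M, N), one row per mixture."""
    M, N = X.shape
    W = np.eye(M)
    for _ in range(n_iter):
        Y = W @ X
        # Plain gradient: W^{-T} - (2/N) tanh(Y) X^T.
        # Right-multiplying by W^T W gives (I - (2/N) tanh(Y) Y^T) W.
        G = np.eye(M) - (2.0 / N) * np.tanh(Y) @ Y.T
        W = W + lr * G @ W
    return W

rng = np.random.default_rng(0)
N = 5000
S = rng.laplace(size=(2, N))             # hypothetical super-Gaussian sources
A = np.array([[1.0, 0.5],
              [0.3, 1.0]])               # hypothetical mixing matrix
X = A @ S
X = X - X.mean(axis=1, keepdims=True)    # centre the mixtures

W_est = infomax_ica(X)

# If separation worked, W_est @ A is close to a scaled permutation matrix:
# each extracted signal is dominated by exactly one source.
print(np.round(W_est @ A, 2))
```

ICA recovers sources only up to order and scale, so the check is on the structure of `W_est @ A` rather than on `W_est` matching the inverse of `A` exactly.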

Based on maximum likelihood estimation

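Maximum likelihood estimation (MLE) is a standard statistical tool for finding parameter values (e.g. the unmixing matrix $\mathbf{W}$) that provide the best fit of some data (e.g., the extracted signals $\mathbf{y}$) to a given model (e.g., the assumed joint probability density function (pdf) $p_{\mathbf{s}}$ of source signals).

The ML "model" includes a specification of a pdf, which in this case is the pdf $p_{\mathbf{s}}$ of the unknown source signals $\mathbf{s}$. Using ML ICA, the objective is to find an unmixing matrix that yields extracted signals $\mathbf{y} = \mathbf{W}\mathbf{x}$ with a joint pdf as similar as possible to the joint pdf $p_{\mathbf{s}}$ of the unknown source signals $\mathbf{s}$.

MLE is thus based on the assumption that if the model pdf $p_{\mathbf{s}}$ and the model parameters $\mathbf{W}$ are correct then a high probability should be obtained for the data $\mathbf{x}$ that were actually observed. Conversely, if $\mathbf{W}$ is far from the correct parameter values then a low probability of the observed data would be expected.

Using MLE, we call the probability of the observed data for a given set of model parameter values (e.g., a pdf $p_{\mathbf{s}}$ and a matrix $\mathbf{W}$) the ''likelihood'' of the model parameter values given the observed data. We define a ''likelihood'' function $L(\mathbf{W})$ of $\mathbf{W}$:

: $L(\mathbf{W}) = p_{\mathbf{s}}(\mathbf{W}\mathbf{x})\,|\det \mathbf{W}|$

This equals the probability density at $\mathbf{x}$, since $\mathbf{s} = \mathbf{W}\mathbf{x}$.

Thus, if we wish to find a $\mathbf{W}$ that is most likely to have generated the observed mixtures $\mathbf{x}$ from the unknown source signals $\mathbf{s}$ with pdf $p_{\mathbf{s}}$, then we need only find the $\mathbf{W}$ which maximizes the ''likelihood'' $L(\mathbf{W})$. The unmixing matrix that maximizes this equation is known as the MLE of the optimal unmixing matrix.

It is common practice to use the log ''likelihood'', because this is easier to evaluate. As the logarithm is a monotonic function, the $\mathbf{W}$ that maximizes the function $L(\mathbf{W})$ also maximizes its logarithm $\ln L(\mathbf{W})$. Taking the logarithm of the equation above over $N$ observed mixture vectors $\mathbf{x}^t$ and ''M'' independent source marginals, with $\mathbf{w}_i^{\mathsf{T}}$ the $i$-th row of $\mathbf{W}$, yields the log ''likelihood'' function

: $\ln L(\mathbf{W}) = \sum_{i=1}^{M}\sum_{t=1}^{N} \ln p_{\mathbf{s}}(\mathbf{w}_i^{\mathsf{T}}\mathbf{x}^t) + N\ln|\det \mathbf{W}|$

If we substitute a commonly used high-kurtosis model pdf for the source signals, $p_{\mathbf{s}} = (1 - \tanh(\mathbf{s})^2)$, and divide by $N$ (which does not change the maximizer), then we have

: $\frac{1}{N}\ln L(\mathbf{W}) = \frac{1}{N}\sum_{i=1}^{M}\sum_{t=1}^{N} \ln\left(1 - \tanh(\mathbf{w}_i^{\mathsf{T}}\mathbf{x}^t)^2\right) + \ln|\det \mathbf{W}|$

The matrix $\mathbf{W}$ that maximizes this function is the ''maximum likelihood estimate''.

History and background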

The early general framework for independent component analysis was introduced by Jeanny Hérault and Bernard Ans from 1984, further developed by Christian Jutten in 1985 and 1986 (Ans, B., Hérault, J., & Jutten, C. (1985). Architectures neuromimétiques adaptatives : Détection de primitives. ''Cognitiva 85'' (Vol. 2, pp. 593-597). Paris: CESTA), refined by Pierre Comon in 1991 (P. Comon, Independent Component Analysis, Workshop on Higher-Order Statistics, July 1991, republished in J-L. Lacoume, editor, Higher Order Statistics, pp. 29-38. Elsevier, Amsterdam, London, 1992), and popularized in his paper of 1994 (Pierre Comon (1994) Independent component analysis, a new concept? http://www.ece.ucsb.edu/wcsl/courses/ECE594/594C_F10Madhow/comon94.pdf).

In 1995, Tony Bell and Terry Sejnowski introduced a fast and efficient ICA algorithm based on infomax, a principle introduced by Ralph Linsker in 1987. A link exists between maximum-likelihood estimation and infomax approaches (J-F. Cardoso, "Infomax and Maximum Likelihood for source separation", IEEE Sig. Proc. Letters, 1997, 4(4):112-114). A fairly comprehensive tutorial on the maximum-likelihood approach to ICA was published by J-F. Cardoso in 1998 (J-F. Cardoso, "Blind signal separation: statistical principles", Proc. of the IEEE, 1998, 86(10):2009-2025).

Many algorithms for ICA are available in the literature. A widely used one, including in industrial applications, is the FastICA algorithm, developed by Hyvärinen and Oja, which uses negentropy as its cost function, an approach already proposed seven years earlier by Pierre Comon in this context. Other examples relate to blind source separation, where a more general approach is used. For example, one can drop the independence assumption and separate mutually correlated, and thus statistically "dependent", signals. Sepp Hochreiter and Jürgen Schmidhuber showed how to obtain non-linear ICA or source separation as a by-product of regularization (1999). Their method does not require a priori knowledge about the number of independent sources.

Applications

ICA can be extended to analyze non-physical signals. For instance, ICA has been applied to discover discussion topics on a bag of news list archives. Some ICA applications are listed below:

* image steganography

* optical imaging of neurons

* neuronal spike sorting

* face recognition

* modelling receptive fields of primary visual neurons

* predicting stock market prices

* mobile phone communications

* colour-based detection of the ripeness of tomatoes

* removing artifacts, such as eye blinks, from EEG data.

* predicting decision-making using EEG

* analysis of changes in gene expression over time in single cell RNA-sequencing experiments.

* studies of the resting state network of the brain.

* astronomy and cosmology

* finance
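
One of the applications listed above, removing artifacts such as eye blinks from EEG data, can be sketched on synthetic signals. The following is a minimal illustration that assumes scikit-learn's `FastICA` is available. The two "channels", the sine wave standing in for brain activity, the Gaussian pulses standing in for blinks, and the mixing matrix `A` are all made up. Picking the artifact component by its excess kurtosis is also an assumption that suits this toy data; in practice components are usually inspected before removal.

```python
import numpy as np
from sklearn.decomposition import FastICA

n = 4000
t = np.linspace(0.0, 8.0, n)

# Hypothetical sources: an oscillation standing in for brain activity and
# sparse, large pulses standing in for eye blinks.
brain = np.sin(2.0 * np.pi * 5.0 * t)
blink = np.zeros(n)
for centre in (1.0, 3.5, 6.0):
    blink += 5.0 * np.exp(-((t - centre) ** 2) / (2.0 * 0.05 ** 2))
S = np.column_stack([brain, blink])

# Hypothetical mixing: row c holds channel c's weights on (brain, blink).
A = np.array([[1.0, 0.8],
              [0.6, 0.3]])
X = S @ A.T                              # shape (n_samples, n_channels)

ica = FastICA(n_components=2, random_state=0)
S_est = ica.fit_transform(X)             # estimated components, one per column

def excess_kurtosis(v):
    z = (v - v.mean()) / v.std()
    return np.mean(z ** 4) - 3.0

# The blink-like component is the most heavy-tailed one (largest kurtosis).
artifact = int(np.argmax([excess_kurtosis(S_est[:, k]) for k in range(2)]))

# Zero the artifact component and map back to channel space.
S_clean = S_est.copy()
S_clean[:, artifact] = 0.0
X_clean = ica.inverse_transform(S_clean)

print("corr(raw channel 0, brain):    ", np.corrcoef(X[:, 0], brain)[0, 1])
print("corr(cleaned channel 0, brain):", np.corrcoef(X_clean[:, 0], brain)[0, 1])
```

The cleaned channel should track the simulated brain signal much more closely than the raw channel does, because the blink component has been projected out before reconstruction.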

Availability

ICA can be applied through the following software:

* SAS PROC ICA

* R ICA package

* scikit-learn: Python implementation, sklearn.decomposition.FastICA

* mlpack: C++ implementation of RADICAL (the Robust, Accurate, Direct ICA aLgorithm)

See also

* Blind deconvolution

* Factor analysis

* Hilbert spectrum

* Image processing

* Non-negative matrix factorization (NMF)

* Nonlinear dimensionality reduction

* Projection pursuit

* Varimax rotation

References

* Comon, Pierre (1994): "Independent Component Analysis: a new concept?", ''Signal Processing'', 36(3):287–314 (The original paper describing the concept of ICA)

* Hyvärinen, A.; Karhunen, J.; Oja, E. (2001): ''Independent Component Analysis'', New York: Wiley

* Hyvärinen, A.; Oja, E. (2000): "Independent Component Analysis: Algorithms and Applications", ''Neural Networks'', 13(4-5):411-430. (Technical but pedagogical introduction)

* Comon, P.; Jutten C., (2010): Handbook of Blind Source Separation, Independent Component Analysis and Applications. Academic Press, Oxford UK.

* Lee, T.-W. (1998): ''Independent component analysis: Theory and applications'', Boston, Mass: Kluwer Academic Publishers

* Acharyya, Ranjan (2008): ''A New Approach for Blind Source Separation of Convolutive Sources - Wavelet Based Separation Using Shrinkage Function'' (this book focuses on unsupervised learning with Blind Source Separation)

External links

* What is independent component analysis? by Aapo Hyvärinen

* A Tutorial on Independent Component Analysis

* FastICA as a package for Matlab, in R language, C++

* ICALAB Toolboxes for Matlab, developed at RIKEN

* High Performance Signal Analysis Toolkit: provides C++ implementations of FastICA and Infomax

* ICA toolbox: Matlab tools for ICA with Bell-Sejnowski, Molgedey-Schuster and mean field ICA. Developed at DTU.

* Demonstration of the cocktail party problem (archived 2010-03-13: https://web.archive.org/web/20100313152128/http://www.cis.hut.fi/projects/ica/cocktail/cocktail_en.cgi)

* EEGLAB Toolbox: ICA of EEG for Matlab, developed at UCSD.

* FMRLAB Toolbox: ICA of fMRI for Matlab, developed at UCSD

* MELODIC, part of the FMRIB Software Library.

* Discussion of ICA used in a biomedical shape-representation context

* FastICA, CuBICA, JADE and TDSEP algorithm for Python and more...

* Group ICA Toolbox and Fusion ICA Toolbox

* Tutorial: Using ICA for cleaning EEG signals
