
Double Compare-and-swap

Double compare-and-swap (DCAS or CAS2) is an atomic primitive proposed to support certain concurrent programming techniques. DCAS takes two memory locations, which need not be contiguous, and writes new values into them only if both match pre-supplied "expected" values; as such, it is an extension of the much more popular compare-and-swap (CAS) operation. DCAS is sometimes confused with the double-width compare-and-swap (DWCAS) implemented by instructions such as x86 CMPXCHG16B. DCAS, as discussed here, handles two discontiguous memory locations, typically of pointer size, whereas DWCAS handles two adjacent pointer-sized memory locations. In his doctoral thesis, Michael Greenwald recommended adding DCAS to modern hardware, showing it could be used to create easy-to-apply yet efficient software transactional memory (STM). Greenwald points out that an advantage of DCAS over CAS is that higher-order (multiple item) CASn can be implemented in O(n) with DCAS, but requires O(n log ...
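
The DCAS semantics described above can be sketched in a few lines. This is only an illustration of the contract, not a hardware implementation: the `Cell` class and the `dcas` function are hypothetical names, and a global lock stands in for the atomicity that real hardware would have to provide.

```python
import threading


class Cell:
    """A single shared memory word (hypothetical helper for this sketch)."""

    def __init__(self, value):
        self.value = value


# Assumption: one global lock emulates the atomicity of the primitive.
_dcas_lock = threading.Lock()


def dcas(a, expected_a, new_a, b, expected_b, new_b):
    """Write both cells only if both currently hold their expected values."""
    with _dcas_lock:
        if a.value == expected_a and b.value == expected_b:
            a.value = new_a
            b.value = new_b
            return True
        return False


head = Cell(1)
tail = Cell(2)

# Both cells match their expected values, so both are written.
first = dcas(head, 1, 10, tail, 2, 20)

# tail no longer holds 2, so neither cell is written.
second = dcas(head, 10, 11, tail, 2, 21)
```

Note that the two cells are independent objects, which mirrors the point that DCAS, unlike DWCAS, does not require the locations to be adjacent.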

Atomic Primitive

In concurrent programming, an operation (or set of operations) is linearizable if it consists of an ordered list of invocation and response events that may be extended by adding response events such that: (1) the extended list can be re-expressed as a sequential history (is serializable), and (2) that sequential history is a subset of the original unextended list. Informally, this means that the unmodified list of events is linearizable if and only if its invocations were serializable, but some of the responses of the serial schedule have yet to return. In a concurrent system, processes can access a shared object at the same time. Because multiple processes are accessing a single object, one process may change the object's contents while another process is accessing it. Making a system linearizable is one solution to this problem. In a linearizable system, although operations overlap on a shared object, each operation appears to take place i ...

Binary Search Tree

In computer science, a binary search tree (BST), also called an ordered or sorted binary tree, is a rooted binary tree data structure with the key of each internal node being greater than all the keys in the respective node's left subtree and less than the ones in its right subtree. The time complexity of operations on the binary search tree is directly proportional to the height of the tree. Binary search trees allow binary search for fast lookup, addition, and removal of data items. Since the nodes in a BST are laid out so that each comparison skips about half of the remaining tree, the lookup performance is proportional to the binary logarithm of the number of nodes. BSTs were devised in the 1960s for the problem of efficient storage of labeled data and are attributed to Conway Berners-Lee and David Wheeler. The performance of a binary search tree depends on the order in which nodes are inserted into the tree, since arbitrary insertions may lead to degeneracy; several variations of the bi ...
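
As a minimal sketch of the ordering invariant and of the dependence on insertion order, the following unbalanced BST compares the height produced by sorted insertions with the height produced by a more favourable order. The names `Node`, `insert`, `contains`, and `height` are illustrative choices, not taken from any particular library.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key and return the root; duplicate keys are ignored."""
    if root is None:
        return Node(key)
    node = root
    while True:
        if key < node.key:
            if node.left is None:
                node.left = Node(key)
                return root
            node = node.left
        elif key > node.key:
            if node.right is None:
                node.right = Node(key)
                return root
            node = node.right
        else:
            return root


def contains(root, key):
    """Each comparison discards one subtree, so cost is bounded by the height."""
    node = root
    while node is not None:
        if key == node.key:
            return True
        node = node.left if key < node.key else node.right
    return False


def height(root):
    """Number of nodes on the longest root-to-leaf path."""
    if root is None:
        return 0
    return 1 + max(height(root.left), height(root.right))


def build(keys):
    root = None
    for key in keys:
        root = insert(root, key)
    return root


degenerate = build([1, 2, 3, 4, 5, 6, 7])  # sorted input: a linked list in disguise
balanced = build([4, 2, 6, 1, 3, 5, 7])    # same keys, favourable order
```

With the same seven keys, the sorted order yields a tree of height 7 while the second order yields height 3, which is the degeneracy the text refers to.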

Sun Microsystems

Sun Microsystems, Inc. (Sun for short) was an American technology company that sold computers, computer components, software, and information technology services and created the Java programming language, the Solaris operating system, ZFS, the Network File System (NFS), and SPARC microprocessors. Sun contributed significantly to the evolution of several key computing technologies, among them Unix, RISC processors, thin client computing, and virtualized computing. Notable Sun acquisitions include Cray Business Systems Division, StorageTek, and Innotek GmbH, creators of VirtualBox. Sun was founded on February 24, 1982. At its height, the Sun headquarters were in Santa Clara, California (part of Silicon Valley), on the former west campus of the Agnews Developmental Center. Sun products included computer servers and workstations built on its own RISC-based SPARC processor architecture, as well as on x86-based AMD Opteron and Intel Xeon processors. Sun also developed its own ...

Intel TSX

Transactional Synchronization Extensions (TSX), also called Transactional Synchronization Extensions New Instructions (TSX-NI), is an extension to the x86 instruction set architecture (ISA) that adds hardware transactional memory support, speeding up execution of multi-threaded software through lock elision. According to different benchmarks, TSX/TSX-NI can provide around 40% faster application execution in specific workloads, and 4–5 times more database transactions per second (TPS). TSX/TSX-NI was documented by Intel in February 2012, and debuted in June 2013 on selected Intel microprocessors based on the Haswell microarchitecture. Haswell processors below 45xx as well as R-series and K-series (with unlocked multiplier) SKUs do not support TSX/TSX-NI. In August 2014, Intel announced a bug in the TSX/TSX-NI implementation on current steppings of Haswell, Haswell-E, Haswell-EP and early Broadwell CPUs, which resulted in disabling the TSX/TSX-NI feature on affected CPUs ...

POWER8

POWER8 is a family of superscalar multi-core microprocessors based on the Power ISA, announced in August 2013 at the Hot Chips conference. The designs are available for licensing under the OpenPOWER Foundation, the first time IBM's highest-end processors have been made available this way. Systems based on POWER8 became available from IBM in June 2014. Systems and POWER8 processor designs made by other OpenPOWER members were available in early 2015. Design. POWER8 is designed to be a massively multithreaded chip, with each of its cores capable of handling eight hardware threads simultaneously, for a total of 96 threads executed simultaneously on a 12-core chip. The processor makes use of very large amounts of on- and off-chip eDRAM caches, and on-chip memory controllers enable very high bandwidth to memory and system I/O. For most workloads, the chip is said to perform two to three times as fast as its predecessor, the POWER7. POWER8 chips come in 6- or 12-core variants; e ...

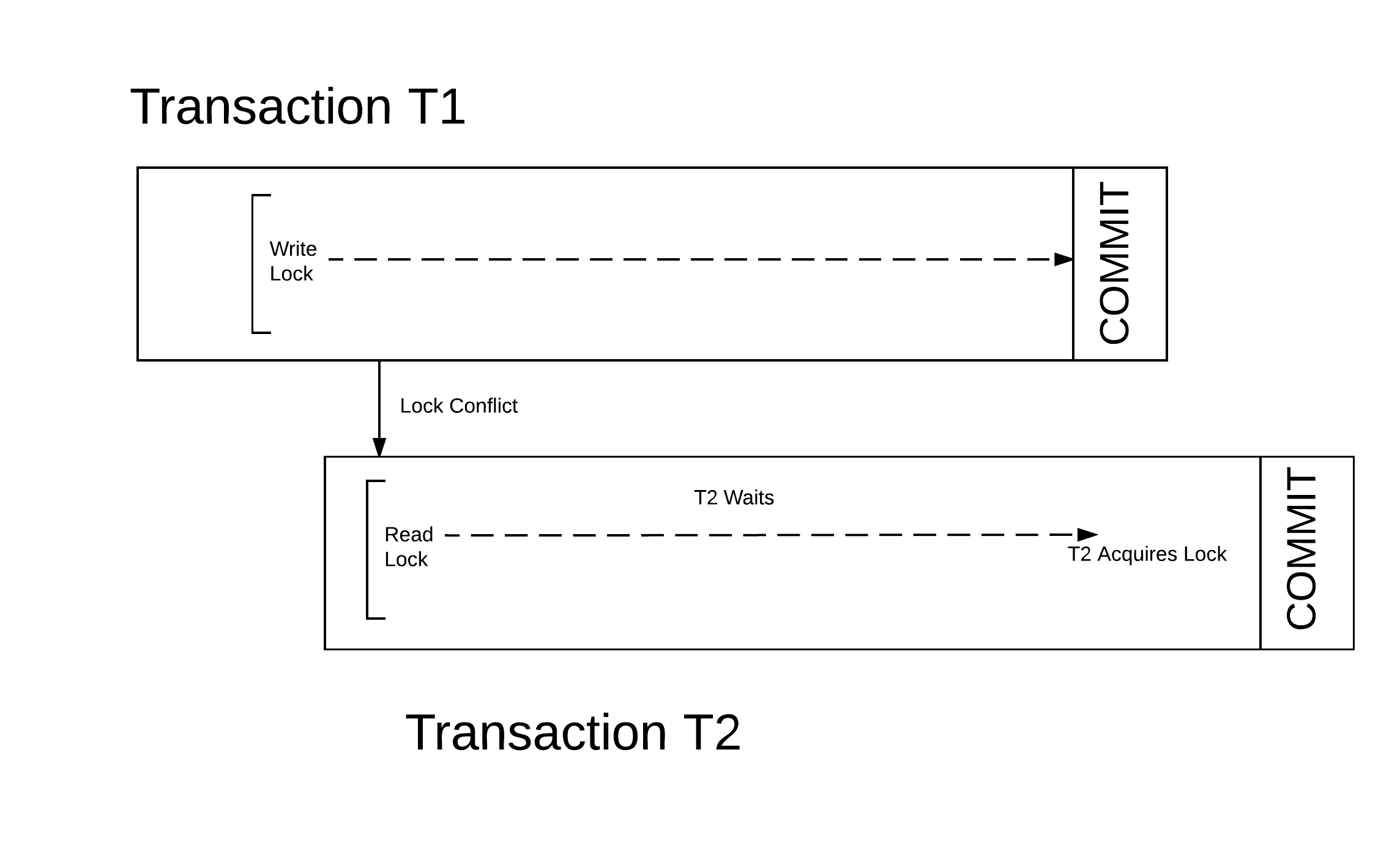

Transactional Memory

In computer science and engineering, transactional memory attempts to simplify concurrent programming by allowing a group of load and store instructions to execute in an atomic way. It is a concurrency control mechanism analogous to database transactions for controlling access to shared memory in concurrent computing. Transactional memory systems provide a high-level abstraction as an alternative to low-level thread synchronization. This abstraction allows for coordination between concurrent reads and writes of shared data in parallel systems. Motivation. In concurrent programming, synchronization is required when parallel threads attempt to access a shared resource. Low-level thread synchronization constructs such as locks are pessimistic and prohibit threads that are outside a critical section from making any changes. Acquiring and releasing locks often adds overhead in workloads with little conflict among threads. Transactional memory provides o ...

Skip List

In computer science, a skip list (or skiplist) is a probabilistic data structure that allows O(log n) average complexity for search as well as O(log n) average complexity for insertion within an ordered sequence of n elements. Thus it can get the best features of a sorted array (for searching) while maintaining a linked list-like structure that allows insertion, which is not possible with a static array. Fast search is made possible by maintaining a linked hierarchy of subsequences, with each successive subsequence skipping over fewer elements than the previous one (see the picture below on the right). Searching starts in the sparsest subsequence until two consecutive elements have been found, one smaller and one larger than or equal to the element searched for. Via the linked hierarchy, these two elements link to elements of the next sparsest subsequence, where searching is continued until finally searching in the full sequence. The elements that are skipped ov ...
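
The search procedure described above (start in the sparsest subsequence, then drop down level by level) can be sketched as follows. This is a minimal illustration under stated assumptions: the class names, the cap of 16 levels, and the promotion probability of 1/2 are choices made for this sketch rather than details from the text.

```python
import random


class SkipNode:
    def __init__(self, key, level):
        self.key = key
        self.forward = [None] * level  # forward[i] is the next node on level i


class SkipList:
    MAX_LEVEL = 16  # assumption: fixed cap on the number of levels

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.head = SkipNode(None, self.MAX_LEVEL)  # sentinel, holds no key
        self.level = 1  # number of levels currently in use

    def _random_level(self):
        # Each extra level is granted with probability 1/2 (assumed value).
        level = 1
        while level < self.MAX_LEVEL and self.rng.random() < 0.5:
            level += 1
        return level

    def _find_predecessors(self, key):
        """For every level, find the last node whose key is smaller than key."""
        update = [self.head] * self.MAX_LEVEL
        node = self.head
        for i in range(self.level - 1, -1, -1):  # sparsest level first
            while node.forward[i] is not None and node.forward[i].key < key:
                node = node.forward[i]
            update[i] = node
        return update

    def contains(self, key):
        candidate = self._find_predecessors(key)[0].forward[0]
        return candidate is not None and candidate.key == key

    def insert(self, key):
        update = self._find_predecessors(key)
        candidate = update[0].forward[0]
        if candidate is not None and candidate.key == key:
            return  # duplicates are ignored in this sketch
        level = self._random_level()
        self.level = max(self.level, level)
        node = SkipNode(key, level)
        for i in range(level):  # splice the node into each of its levels
            node.forward[i] = update[i].forward[i]
            update[i].forward[i] = node

    def to_list(self):
        """Walk the bottom level, which contains every element in order."""
        out, node = [], self.head.forward[0]
        while node is not None:
            out.append(node.key)
            node = node.forward[0]
        return out


skip = SkipList(seed=1)
for k in [5, 1, 9, 3, 7, 3]:
    skip.insert(k)
```

The random level assignment affects only the shape of the upper levels, not the contents: the bottom level always holds every key in sorted order.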

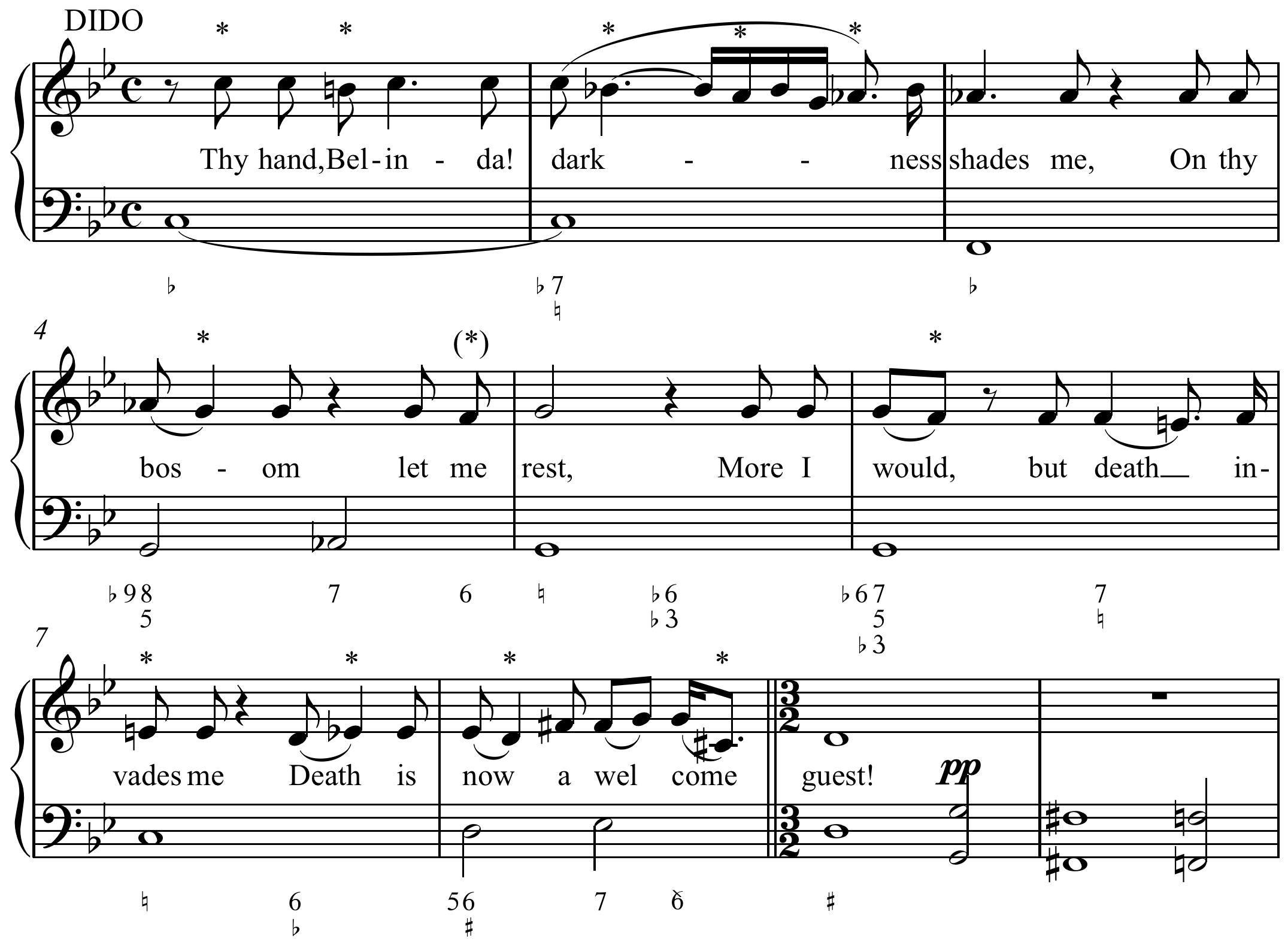

Chromatic Tree

Diatonic and chromatic are terms in music theory that are most often used to characterize scales, and are also applied to musical instruments, intervals, chords, notes, musical styles, and kinds of harmony. They are very often used as a pair, especially when applied to contrasting features of the common practice music of the period 1600–1900. These terms may mean different things in different contexts. Very often, diatonic refers to musical elements derived from the modes and transpositions of the "white note scale" C–D–E–F–G–A–B. In some usages it includes all forms of heptatonic scale that are in common use in Western music (the major, and all forms of the minor). Chromatic most often refers to structures derived from the twelve-note chromatic scale, which consists of all semitones. Historically, however, it had other senses, referring in Ancient Greek music theory to a particular tuning of the tetrachord, and to a rhythmic notational convention ...

Faith Ellen

Faith Ellen (formerly known as Faith E. Fich) is a professor of computer science at the University of Toronto who studies distributed data structures and the theory of distributed computing. She earned her bachelor's and master's degrees from the University of Waterloo in 1977 and 1978, respectively, and her doctorate in 1982 from the University of California, Berkeley under the supervision of Richard Karp; her dissertation concerned lower bounds for cycle detection and parallel prefix sums. She joined the faculty of the University of Washington in 1983, and moved to Toronto in 1986. From 1997 to 2001, she was the vice chair of SIGACT, the leading international society for theory of computation. From 2006 to 2009, she was chair of the steering committee for PODC, a top international conference for theory of distributed computing. In 2014, she also co-authored the book Impossibility Results for Distributed Computing. She became a Fellow of the Association for Computing Machinery ...

Concurrent Programming

Concurrent means happening at the same time. Concurrency, concurrent, or concurrence may refer to:

Law
* Concurrence, in jurisprudence, the need to prove both actus reus and mens rea
* Concurring opinion (also called a "concurrence"), a legal opinion which supports the conclusion, though not always the reasoning, of the majority
* Concurrent estate, a concept in property law
* Concurrent resolution, a legislative measure passed by both chambers of the United States Congress
* Concurrent sentences, in criminal law, periods of imprisonment that are served simultaneously

Computing
* Concurrency (computer science), the property of program, algorithm, or problem decomposition into order-independent or partially-ordered units
* Concurrent computing, the overlapping execution of multiple interacting computational tasks
* Concurrence (quantum computing), a measure used in quantum information theory
* Concurrent Computer Corporation, an American computer systems manufacturer ...

LL/SC

In computer science, load-linked/store-conditional (LL/SC), sometimes known as load-reserved/store-conditional (LR/SC), are a pair of instructions used in multithreading to achieve synchronization. Load-link returns the current value of a memory location, while a subsequent store-conditional to the same memory location will store a new value only if no updates have occurred to that location since the load-link. Together, this implements a lock-free atomic read-modify-write operation. "Load-linked" is also known as load-link, load-reserved, and load-locked. LL/SC was originally proposed by Jensen, Hagensen, and Broughton for the S-1 AAP multiprocessor at Lawrence Livermore National Laboratory. Comparison of LL/SC and compare-and-swap. If any updates have occurred, the store-conditional is guaranteed to fail, even if the value read by the load-link has since been restored. As such, an LL/SC pair is stronger than a read followed by a compare-and-swap (CAS), which will not detect u ...
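
The difference between the two primitives when a value is changed and then restored can be illustrated with a small single-threaded simulation. Everything here is an assumption made for the sketch: the `Word` class is hypothetical, and a write counter stands in for the hardware reservation that real LL/SC implementations track.

```python
class Word:
    """Simulated memory word; `writes` models the LL/SC reservation (assumption)."""

    def __init__(self, value):
        self.value = value
        self.writes = 0  # incremented on every store, even if the value repeats

    def store(self, value):
        self.value = value
        self.writes += 1

    def compare_and_swap(self, expected, new):
        # CAS looks only at the current value.
        if self.value == expected:
            self.store(new)
            return True
        return False

    def load_linked(self):
        # Return the value together with a token identifying this moment.
        return self.value, self.writes

    def store_conditional(self, token, new):
        # Succeeds only if no store has happened since the matching load_linked.
        if self.writes == token:
            self.store(new)
            return True
        return False


# Read followed by CAS: the intervening A -> B -> A change goes unnoticed.
w_cas = Word("A")
seen = w_cas.value
w_cas.store("B")
w_cas.store("A")
cas_ok = w_cas.compare_and_swap(seen, "C")

# LL/SC: the same interleaving makes the store-conditional fail.
w_llsc = Word("A")
value, token = w_llsc.load_linked()
w_llsc.store("B")
w_llsc.store("A")
sc_ok = w_llsc.store_conditional(token, "C")
```

In the simulation the CAS succeeds and overwrites the restored value, while the store-conditional fails and leaves the word unchanged.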

Lock-free And Wait-free Algorithms

In computer science, an algorithm is called non-blocking if failure or suspension of any thread cannot cause failure or suspension of another thread; for some operations, these algorithms provide a useful alternative to traditional blocking implementations. A non-blocking algorithm is lock-free if there is guaranteed system-wide progress, and wait-free if there is also guaranteed per-thread progress. "Non-blocking" was used as a synonym for "lock-free" in the literature until the introduction of obstruction-freedom in 2003. The word "non-blocking" was traditionally used to describe telecommunications networks that could route a connection through a set of relays "without having to re-arrange existing calls" (see Clos network). Also, if the telephone exchange "is not defective, it can always make the connection" (see nonblocking minimal spanning switch). Motivation. The traditional approach to multi-threaded programming is to use locks to synchronize access to shared resourc ...
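
The retry-loop pattern that many lock-free algorithms are built on can be sketched as below. One caveat must be stated loudly: Python exposes no hardware compare-and-swap, so the hypothetical `AtomicInt` class emulates it with an internal lock, which means this sketch shows the shape of the pattern and is not itself lock-free.

```python
import threading


class AtomicInt:
    """Emulated atomic integer; the internal lock stands in for hardware CAS."""

    def __init__(self, value=0):
        self._value = value
        self._lock = threading.Lock()  # emulation only, see the caveat above

    def load(self):
        with self._lock:
            return self._value

    def compare_and_swap(self, expected, new):
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False


def increment(counter):
    """Read, compute, attempt to publish; retry if another thread got in first."""
    while True:
        seen = counter.load()
        if counter.compare_and_swap(seen, seen + 1):
            return


def worker(counter, times):
    for _ in range(times):
        increment(counter)


counter = AtomicInt()
threads = [threading.Thread(target=worker, args=(counter, 1000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
total = counter.load()
```

A failed `compare_and_swap` implies that some other thread's update succeeded in the meantime, which is the system-wide progress property that distinguishes lock-free from wait-free: an individual thread may retry repeatedly, but the system as a whole always advances.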