
Distributed Shared Memory

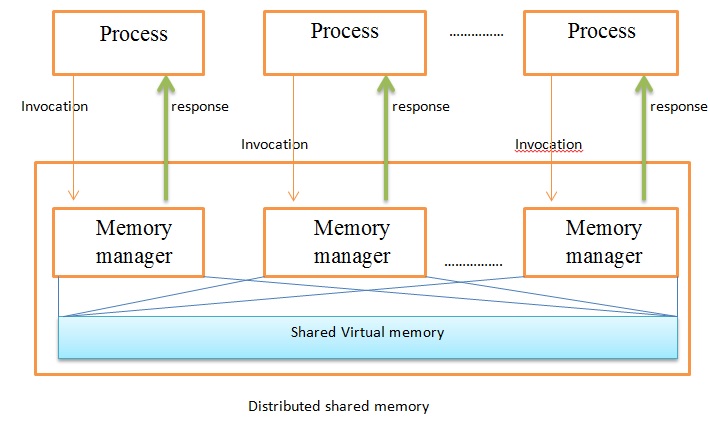

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared, i.e., the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS) is a similar term for a wide class of software and hardware implementations in which each node of a cluster has access to shared memory in addition to its own private (i.e., not shared) memory.

Overview: A distributed-memory system, often called a multicomputer, consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network. Software DSM systems can be implemented in an operating system or as a programming library, and can be thought of as extensions of the underlying virtual memory arch ...

Computer Science

Computer science is the study of computation, automation, and information. Computer science spans theoretical disciplines (such as algorithms, theory of computation, information theory, and automation) to practical disciplines (including the design and implementation of hardware and software). Computer science is generally considered an area of academic research and distinct from computer programming. Algorithms and data structures are central to computer science. The theory of computation concerns abstract models of computation and general classes of problems that can be solved using them. The fields of cryptography and computer security involve studying the means for secure communication and for preventing security vulnerabilities. Computer graphics and computational geometry address the generation of images. Programming language theory considers different ways to describe computational processes, and database theory concerns the management of repositories ...

Tuple Space

A tuple space is an implementation of the associative memory paradigm for parallel/distributed computing. It provides a repository of tuples that can be accessed concurrently. As an illustrative example, consider a group of processors that produce pieces of data and a group of processors that use the data. Producers post their data as tuples in the space, and the consumers then retrieve data from the space that matches a certain pattern. This is also known as the blackboard metaphor. A tuple space may be thought of as a form of distributed shared memory. Tuple spaces were the theoretical underpinning of the Linda language developed by David Gelernter and Nicholas Carriero at Yale University in 1986. Implementations of tuple spaces have also been developed for Java (JavaSpaces), Lisp, Lua, Prolog, Python, Ruby, Smalltalk, Tcl, and the .NET Framework.

Object Spaces: Object Spaces is a paradigm for development of distributed computing applications. It is characteri ...
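The producer/consumer pattern described above can be sketched in a few lines of Python. This is a minimal in-process illustration, not the Linda API or any real tuple-space library: the class name `TupleSpace`, the methods `out`, `read`, and `take`, and the use of `None` as a wildcard in patterns are all assumptions made for the example.

```python
import threading


class TupleSpace:
    """Toy in-process tuple space (hypothetical API); None in a pattern is a wildcard."""

    def __init__(self):
        self._tuples = []
        self._cond = threading.Condition()

    def out(self, tup):
        """Post a tuple into the space and wake any waiting consumers."""
        with self._cond:
            self._tuples.append(tuple(tup))
            self._cond.notify_all()

    @staticmethod
    def _matches(pattern, tup):
        # Same length, and every non-wildcard field must be equal.
        return len(pattern) == len(tup) and all(
            p is None or p == t for p, t in zip(pattern, tup)
        )

    def _find(self, pattern):
        for tup in self._tuples:
            if self._matches(pattern, tup):
                return tup
        return None

    def _wait_for_match(self, pattern, timeout):
        # Caller must hold self._cond.
        if not self._cond.wait_for(lambda: self._find(pattern) is not None, timeout):
            raise TimeoutError("no matching tuple")
        return self._find(pattern)

    def read(self, pattern, timeout=None):
        """Return a matching tuple without removing it, blocking until one exists."""
        with self._cond:
            return self._wait_for_match(pattern, timeout)

    def take(self, pattern, timeout=None):
        """Remove and return a matching tuple, blocking until one exists."""
        with self._cond:
            tup = self._wait_for_match(pattern, timeout)
            self._tuples.remove(tup)
            return tup


# Usage: one producer posts three tuples; the consumer retrieves them by pattern.
space = TupleSpace()
producer = threading.Thread(
    target=lambda: [space.out(("reading", i, i * i)) for i in range(3)]
)
producer.start()
results = sorted(space.take(("reading", None, None), timeout=5) for _ in range(3))
producer.join()
```

Consumers never address a producer directly; they only describe the shape of the data they want, which is what makes the space behave like an associative shared memory.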

OpenSSI

OpenSSI is an open-source single-system image clustering system. It allows a collection of computers to be treated as one large system, giving applications running on any one machine access to the resources of all the machines in the cluster. OpenSSI is based on the Linux operating system and was released as an open source project by Compaq in 2001. It is the final stage of a long process of development, stretching back to LOCUS, developed in the early 1980s.

Description: OpenSSI allows a cluster of individual computers (nodes) to be treated as one large system. Processes running on any node have full access to the resources of all nodes. Processes can be migrated from node to node automatically to balance system utilization. Inbound network connections can be directed to the least loaded node available. OpenSSI is designed to be used for both high performance and high availability clusters. It is possible to create an OpenSSI cluster with no single point of failure, for ...

Kerrighed

Kerrighed is an open source single-system image (SSI) cluster software project. The project started in October 1998 at the Paris research group of the French National Institute for Research in Computer Science and Control. From 2006 to 2011, the project was mainly developed by Kerlabs. In January 2012, the Linux clustering mission of Kerlabs was adopted by a new company, We Cluster, Inc., headquartered in Pacific Grove, California. January 18, 2012: Kerrighed 3.0 has been ported to Ubuntu 12.04 with Linux Kernel v3.2.

Background: Kerrighed is implemented as an extension to the Linux operating system. It helps scientific applications such as numerical simulations to use more computing power. Such applications may be using OpenMP, Message Passing Interface, and/or a POSIX multithreaded programming model. Kerrighed implements a set of global resource management services that aim at making resource distribution transparent to the applications, at managing resource sharing in and between applica ...

Critical Section

In concurrent programming, concurrent accesses to shared resources can lead to unexpected or erroneous behavior, so parts of the program where the shared resource is accessed need to be protected in ways that avoid the concurrent access. One way to do so is known as a critical section or critical region. This protected section cannot be entered by more than one process or thread at a time; others are suspended until the first leaves the critical section. Typically, the critical section accesses a shared resource, such as a data structure, a peripheral device, or a network connection, that would not operate correctly in the context of multiple concurrent accesses.

Need for critical sections: Different pieces of code or processes may use the same variable or other resources that need to be read or written, but whose results depend on the order in which the actions occur. For example, if a variable is to be read by process A, and process B has to write to the same variabl ...
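As a minimal sketch of the idea, the Python snippet below protects a shared counter with a lock so that only one thread at a time executes the read-modify-write step. The names `counter`, `lock`, and `deposit` are invented for this illustration, and a mutex is only one of several ways to implement a critical section.

```python
import threading

counter = 0                 # shared resource
lock = threading.Lock()     # guards every access to `counter`


def deposit(times):
    """Increment the shared counter `times` times."""
    global counter
    for _ in range(times):
        with lock:          # enter the critical section; other threads wait here
            counter += 1    # read-modify-write on the shared variable
        # the lock is released on leaving the `with` block


threads = [threading.Thread(target=deposit, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)
```

Because every increment happens inside the locked region, the final value does not depend on how the threads are scheduled.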

Sequential Invocations And Responses In DSM

In mathematics, a sequence is an enumerated collection of objects in which repetitions are allowed and order matters. Like a set, it contains members (also called elements, or terms). The number of elements (possibly infinite) is called the length of the sequence. Unlike a set, the same elements can appear multiple times at different positions in a sequence, and unlike a set, the order does matter. Formally, a sequence can be defined as a function from natural numbers (the positions of elements in the sequence) to the elements at each position. The notion of a sequence can be generalized to an indexed family, defined as a function from an arbitrary index set. For example, (M, A, R, Y) is a sequence of letters with the letter 'M' first and 'Y' last. This sequence differs from (A, R, M, Y). Also, the sequence (1, 1, 2, 3, 5, 8), which contains the number 1 at two different positions, is a valid sequence. Sequences can be finite, as in these examples, or infini ...
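The difference between sequences and sets in the examples above can be checked directly. The snippet is only an illustration using Python tuples for finite sequences; the helper `as_function` is hypothetical, and numbering positions from 1 is an assumption (some authors start from 0).

```python
word = ("M", "A", "R", "Y")
anagram = ("A", "R", "M", "Y")
fib = (1, 1, 2, 3, 5, 8)

# Order matters for sequences but not for sets.
same_as_sequences = word == anagram
same_as_sets = set(word) == set(anagram)

# Repetitions are kept in a sequence and collapsed in a set.
sequence_length = len(fib)
distinct_elements = len(set(fib))


def as_function(seq):
    """View a finite sequence as a function from positions 1..n to elements."""
    return lambda n: seq[n - 1]


first_letter = as_function(word)(1)
last_letter = as_function(word)(len(word))
```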

Intel QuickPath Interconnect

The Intel QuickPath Interconnect (QPI) is a point-to-point processor interconnect developed by Intel which replaced the front-side bus (FSB) in Xeon, Itanium, and certain desktop platforms starting in 2008. It increased the scalability and available bandwidth. Prior to the name's announcement, Intel referred to it as Common System Interface (CSI). Earlier incarnations were known as Yet Another Protocol (YAP) and YAP+. QPI 1.1 is a significantly revamped version introduced with Sandy Bridge-EP (Romley platform). QPI was replaced by Intel Ultra Path Interconnect (UPI) in Skylake-SP Xeon processors based on the LGA 3647 socket.

Background: Although sometimes called a "bus", QPI is a point-to-point interconnect. It was designed to compete with HyperTransport, which had been used by Advanced Micro Devices (AMD) since around 2003. QPI was developed at Intel's Massachusetts Microprocessor Design Center (MMDC) by members of what had been the Alpha Development Group, which Intel had acquired from ...

DSM States

DSM or dsm may refer to:

Science and technology
* Deep space maneuver
* Design structure matrix or dependency structure matrix, a representation of a system or project
* Diagnostic and Statistical Manual of Mental Disorders
** DSM-5, the fifth edition of the DSM
* Digital Standard MUMPS, a version of the MUMPS programming language created by Digital Equipment Corporation
* Digital surface model, another term for digital elevation model, used to digitally map topography
* DiskStation Manager, a Linux-based operating system used by Synology
* Distributed shared memory, software and hardware implementations in which each cluster node accesses a large shared memory
* Door status monitor, a device that reads whether a door is open or closed
* Dynamic scattering mode, a principle used by the first operational liquid-crystal display
* Dynamic spectrum management, a technique to increase bandwidth over DSL
* Doctor of Medicine, awarded as specialized or surgical medicine (D.SM)

Orga ...

Memory Coherence

Memory coherence is an issue that affects the design of computer systems in which two or more processors or cores share a common area of memory. In a uniprocessor system (that is, in today's terms, a system with only one core), there is only one processing element doing all the work and therefore only one processing element that can read from or write to a given memory location. As a result, when a value is changed, all subsequent read operations of the corresponding memory location will see the updated value, even if it is cached. Conversely, in multiprocessor (or multicore) systems, there are two or more processing elements working at the same time, and so it is possible that they simultaneously access the same memory location. Provided none of them changes the data in this location, they can share it indefinitely and cache it as they please. But as soon as one updates the location, the others might work on an out-of-date copy that, e.g., resides in their local cache. Consequently ...
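The stale-copy problem can be reproduced with a toy model. The sketch below is not how real hardware works: `Memory`, `Cache`, and `stale_read_demo` are hypothetical names, writes go straight through to memory, and "coherence" is modeled as a simple write-invalidate step chosen here purely for illustration.

```python
class Memory:
    """Shared memory plus a list of the caches attached to it."""

    def __init__(self, coherent):
        self.data = {}
        self.caches = []
        self.coherent = coherent   # whether writes invalidate other caches


class Cache:
    """Private cache of one processing element (toy write-through model)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        memory.caches.append(self)

    def read(self, addr):
        # On a miss, fetch from memory; on a hit, use the local copy as-is.
        if addr not in self.lines:
            self.lines[addr] = self.memory.data.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.memory.data[addr] = value
        if self.memory.coherent:
            # Write-invalidate: drop every other cached copy of this address.
            for other in self.memory.caches:
                if other is not self:
                    other.lines.pop(addr, None)


def stale_read_demo(coherent):
    """Both caches load address 0x10, cache A writes 42, cache B reads again."""
    memory = Memory(coherent)
    a, b = Cache(memory), Cache(memory)
    a.read(0x10)
    b.read(0x10)
    a.write(0x10, 42)
    return b.read(0x10)


without_coherence = stale_read_demo(coherent=False)  # B still holds its old copy
with_coherence = stale_read_demo(coherent=True)      # B's copy was invalidated
```

With invalidation switched off, the second cache keeps serving its out-of-date copy; with it switched on, the next read misses and fetches the updated value.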

Consistency Model

In computer science, a consistency model specifies a contract between the programmer and a system, wherein the system guarantees that if the programmer follows the rules for operations on memory, memory will be consistent and the results of reading, writing, or updating memory will be predictable. Consistency models are used in distributed systems like distributed shared memory systems or distributed data stores (such as filesystems, databases, optimistic replication systems or web caching). Consistency is different from coherence, which occurs in systems that are cached or cache-less and is the consistency of data with respect to all processors. Coherence deals with maintaining a global order in which writes to a single location or single variable are seen by all processors. Consistency deals with the ordering of operations to multiple locations with respect to all processors. High-level languages, such as C++ and Java, maintain the consistency contract by translating mem ...
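To make the "ordering of operations to multiple locations" concrete, the sketch below enumerates every interleaving of two tiny threads under one particular model, sequential consistency, which is chosen here as an assumption since the excerpt does not name a specific model. The thread programs `T1` and `T2` and the helpers `interleavings` and `run` are invented for the example.

```python
from itertools import combinations

# Each thread writes one location, then reads the other into a register.
T1 = [("write", "x", 1), ("read", "y", "r1")]
T2 = [("write", "y", 1), ("read", "x", "r2")]


def interleavings(a, b):
    """Yield every merge of a and b that preserves each thread's program order."""
    n = len(a) + len(b)
    for positions in combinations(range(n), len(a)):
        ia, ib = iter(a), iter(b)
        yield [next(ia) if i in positions else next(ib) for i in range(n)]


def run(schedule):
    """Execute one global order against a single shared memory."""
    memory = {"x": 0, "y": 0}
    regs = {}
    for op, loc, arg in schedule:
        if op == "write":
            memory[loc] = arg
        else:
            regs[arg] = memory[loc]
    return regs["r1"], regs["r2"]


outcomes = {run(s) for s in interleavings(T1, T2)}
print(sorted(outcomes))
```

Under this model the result `(r1, r2) = (0, 0)` never appears, because whichever write comes first in the global order is visible to the other thread's later read. Weaker models may allow that outcome, which is the kind of difference a consistency contract spells out.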