Cramér's V

In statistics, Cramér's V (sometimes referred to as Cramér's phi and denoted $\varphi_c$) is a measure of association between two nominal variables, giving a value between 0 and +1 (inclusive). It is based on Pearson's chi-squared statistic and was published by Harald Cramér in 1946.

Usage and interpretation. $\varphi_c$ is the intercorrelation of two discrete variables (Sheskin, David J. (1997). *Handbook of Parametric and Nonparametric Statistical Procedures*. Boca Raton, FL: CRC Press) and may be used with variables having two or more levels. $\varphi_c$ is a symmetrical measure: it does not matter which variable is placed in the columns and which in the rows. The order of the rows and columns does not matter either, so $\varphi_c$ may be used with nominal data types or higher (notably, ordered or numerical). Cramér's V may also be applied to goodness-of-fit chi-squared models when there is a 1 × $k$ table (in this case $r$ = 1). In this case $k$ is taken as the number of optional ...
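The excerpt above is cut off before it states a formula. As a minimal sketch, assuming the commonly used definition $V = \sqrt{\chi^2 / (n \cdot \min(r-1, c-1))}$ for an $r \times c$ table of observed counts with total $n$, the statistic could be computed as follows. The function name `cramers_v` and the list-of-rows input format are illustrative choices, and the sketch does not cover the 1 × $k$ goodness-of-fit case.

```python
import math


def cramers_v(table):
    """Cramér's V for an r x c table of observed counts (a list of rows).

    Assumes at least two rows and two columns and no empty row or column,
    so that every expected count is positive.
    """
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]

    # Pearson's chi-squared statistic against the independence model.
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    k = min(len(row_totals), len(col_totals)) - 1
    return math.sqrt(chi2 / (n * k))


perfect = [[10, 0], [0, 10]]        # each row determines the column
independent = [[10, 20], [20, 40]]  # rows are proportional to each other
print(cramers_v(perfect), cramers_v(independent))
```

Transposing the table leaves the result unchanged, which is the symmetry property described in the text.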

Statistics

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) *The Oxford Dictionary of Statistical Terms*, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ...

Tschuprow's T

In statistics, Tschuprow's $T$ is a measure of association between two nominal variables, giving a value between 0 and 1 (inclusive). It is closely related to Cramér's V, coinciding with it for square contingency tables. It was published by Alexander Tschuprow (alternative spelling: Chuprov) in 1939 (Tschuprow, A. A. (1939) *Principles of the Mathematical Theory of Correlation*; translated by M. Kantorowitsch. W. Hodge & Co.).

Definition. For an $r \times c$ contingency table with $r$ rows and $c$ columns, let $\pi_{ij}$ be the proportion of the population in cell $(i,j)$ and let

$$\pi_{i+} = \sum_{j=1}^{c} \pi_{ij} \quad \text{and} \quad \pi_{+j} = \sum_{i=1}^{r} \pi_{ij}.$$

Then the mean square contingency is given as

$$\phi^2 = \sum_{i=1}^{r} \sum_{j=1}^{c} \frac{(\pi_{ij} - \pi_{i+}\pi_{+j})^2}{\pi_{i+}\pi_{+j}},$$

and Tschuprow's $T$ as

$$T = \sqrt{\frac{\phi^2}{\sqrt{(r-1)(c-1)}}}.$$

Properties. $T$ equals zero if and only if independence holds in the table, i.e., if and only if $\pi_{ij} = \pi_{i+}\pi_{+j}$. $T$ equals one if and only if there is perfect dependence in the table, i.e., if and only if for each $i$ there is onl ...
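The definition above can be sketched in a few lines of Python. This is an illustrative sample-based version: it assumes the input is a table of observed counts (a list of rows) whose cell proportions stand in for the population proportions $\pi_{ij}$, and the function name `tschuprow_t` is a hypothetical choice.

```python
import math


def tschuprow_t(table):
    """Tschuprow's T for an r x c table of counts (a list of rows).

    Cell proportions estimated from the counts play the role of pi_ij.
    Assumes r >= 2, c >= 2 and no empty row or column.
    """
    n = sum(sum(row) for row in table)
    pi = [[count / n for count in row] for row in table]
    r, c = len(pi), len(pi[0])

    row_marg = [sum(row) for row in pi]        # pi_{i+}
    col_marg = [sum(col) for col in zip(*pi)]  # pi_{+j}

    # Mean square contingency phi^2.
    phi2 = sum(
        (pi[i][j] - row_marg[i] * col_marg[j]) ** 2
        / (row_marg[i] * col_marg[j])
        for i in range(r)
        for j in range(c)
    )
    return math.sqrt(phi2 / math.sqrt((r - 1) * (c - 1)))


print(tschuprow_t([[10, 0], [0, 10]]))    # perfect dependence
print(tschuprow_t([[10, 20], [20, 40]]))  # independence (up to rounding)
```

The two calls exercise the boundary cases named under Properties: a table in which each row determines the column, and a table whose cell proportions factor into the product of the marginals.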

Statistical Ratios

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) *The Oxford Dictionary of Statistical Terms*, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ex ...

Effect Size

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of a parameter for a hypothetical population, or to the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes complement statistical hypothesis testing, and play an important role in power analyses, sample size planning, and in meta-analyses. The cluster of data-analysis methods concerning effect sizes is referred to as estimation statistics. Effect size is an essential component when evaluating the strength of a statistical claim, and it is the first item (magnitude) in the MA ...

Contingency Table

In statistics, a contingency table (also known as a cross tabulation or crosstab) is a type of table in a matrix format that displays the (multivariate) frequency distribution of the variables. Contingency tables are heavily used in survey research, business intelligence, engineering, and scientific research. They provide a basic picture of the interrelation between two variables and can help find interactions between them. The term *contingency table* was first used by Karl Pearson in "On the Theory of Contingency and Its Relation to Association and Normal Correlation", part of the *Drapers' Company Research Memoirs Biometric Series I* published in 1904. A crucial problem of multivariate statistics is finding the (direct-)dependence structure underlying the variables contained in high-dimensional contingency tables. If some of the conditional independences are revealed, then even the storage of the data can be done in a smarter way (see Lauritzen (2002)). In order to do this one can u ...
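To make the idea of a cross tabulation concrete, here is a small sketch that builds a two-way table of counts from paired observations using only the standard library. The helper name `contingency_table` and the toy data are hypothetical and serve only as illustration.

```python
from collections import Counter


def contingency_table(xs, ys):
    """Cross-tabulate two equally long sequences of category labels.

    Returns (row_labels, col_labels, table), where table[i][j] counts the
    observations with xs == row_labels[i] and ys == col_labels[j].
    """
    counts = Counter(zip(xs, ys))
    row_labels = sorted(set(xs))
    col_labels = sorted(set(ys))
    table = [[counts[(r, c)] for c in col_labels] for r in row_labels]
    return row_labels, col_labels, table


# Toy data: one entry per respondent (made up for illustration).
sex = ["F", "M", "M", "F", "F", "M"]
handedness = ["right", "left", "right", "right", "left", "right"]

rows, cols, table = contingency_table(sex, handedness)
print(rows, cols)
for label, row in zip(rows, table):
    print(label, row)
```

Each cell holds the joint frequency of one row category and one column category, so row and column sums recover the marginal frequency distributions.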

Fowlkes–Mallows Index

The Fowlkes–Mallows index is an external evaluation method that is used to determine the similarity between two clusterings (clusters obtained after a clustering algorithm), and also a metric to measure confusion matrices. This measure of similarity could be either between two hierarchical clusterings or a clustering and a benchmark classification. A higher value for the Fowlkes–Mallows index indicates a greater similarity between the clusters and the benchmark classifications. It was invented by Bell Labs statisticians Edward Fowlkes and Colin Mallows in 1983.

Preliminaries. The Fowlkes–Mallows index, when results of two clustering algorithms are used to evaluate the results, is defined as

$$FM = \sqrt{\frac{TP}{TP+FP} \cdot \frac{TP}{TP+FN}} = \sqrt{PPV \cdot TPR}$$

where $TP$ is the number of true positives, $FP$ is the number of false positives, and $FN$ is the number of false negatives. $TPR$ is the *true positive rate*, also called *sensitivity* or *recall*, and $PPV$ is the *positive predictive rate*, also known as p ...
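The formula can be sketched directly in Python. The pair-counting helper below is an assumption about how TP, FP and FN are obtained when comparing two label assignments (a pair of points counts as "positive" when both points share a cluster); the excerpt does not spell this out, and the function names are illustrative.

```python
import math
from itertools import combinations


def fowlkes_mallows(tp, fp, fn):
    """FM = sqrt(PPV * TPR), computed from the three counts."""
    ppv = tp / (tp + fp)  # positive predictive rate
    tpr = tp / (tp + fn)  # true positive rate
    return math.sqrt(ppv * tpr)


def pair_counts(labels_a, labels_b):
    """Count point pairs by whether each labeling puts them together.

    Assumed convention: labels_a plays the role of the prediction and
    labels_b the role of the benchmark.
    """
    tp = fp = fn = 0
    for i, j in combinations(range(len(labels_a)), 2):
        same_a = labels_a[i] == labels_a[j]
        same_b = labels_b[i] == labels_b[j]
        if same_a and same_b:
            tp += 1
        elif same_a:
            fp += 1
        elif same_b:
            fn += 1
    return tp, fp, fn


tp, fp, fn = pair_counts([0, 0, 0, 0], [0, 0, 1, 1])
print(tp, fp, fn, fowlkes_mallows(tp, fp, fn))
```

Swapping the two labelings exchanges FP and FN, which leaves the index unchanged because the two factors under the square root simply trade places.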

Jaccard Index

The Jaccard index, also known as the Jaccard similarity coefficient, is a statistic used for gauging the similarity and diversity of sample sets. It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and now is frequently referred to as the Critical Success Index in meteorology. It was later developed independently by Paul Jaccard, originally giving the French name *coefficient de communauté*, and independently formulated again by T. Tanimoto. Thus, the names Tanimoto index and Tanimoto coefficient are also used in some fields. However, all of these generally take the ratio of intersection over union. The Jaccard coefficient measures similarity between finite sample sets, and is defined as the size of the intersection divided by the size of the union of the sample sets:

$$J(A,B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}.$$

Note that by design, $0 \le J(A,B) \le 1$. If $A \cap B$ is empty, then $J(A,B) = 0$. The Jaccard coefficient is widely used in co ...
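As a brief illustration, the definition maps directly onto Python's built-in set operations. The function name `jaccard` is illustrative, and raising an error when both sets are empty is an assumption of this sketch, since the excerpt does not say how that case should be handled.

```python
def jaccard(a, b):
    """Jaccard index |A ∩ B| / |A ∪ B| of two finite collections."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        # Assumption: treat the 0/0 case as undefined rather than pick a value.
        raise ValueError("Jaccard index is undefined for two empty sets")
    return len(a & b) / len(union)


A = {1, 2, 3}
B = {2, 3, 4}
print(jaccard(A, B))         # two shared elements out of four distinct ones
print(jaccard({1, 2}, {3}))  # disjoint sets
```

The second form of the formula gives the same value without building the union, because $|A \cup B| = |A| + |B| - |A \cap B|$.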

Dunn Index

The Dunn index (DI) (introduced by J. C. Dunn in 1974) is a metric for evaluating clustering algorithms. It belongs to a group of validity indices, including the Davies–Bouldin index and the Silhouette index, that are internal evaluation schemes, where the result is based on the clustered data itself. As with all other such indices, the aim is to identify sets of clusters that are compact, with a small variance between members of the cluster, and well separated, where the means of different clusters are sufficiently far apart, as compared to the within-cluster variance. For a given assignment of clusters, a higher Dunn index indicates better clustering. One drawback of the index is its computational cost as the number of clusters and the dimensionality of the data increase.

Preliminaries. There are many ways to define the size or diameter of a cluster. It could be the distance between the farthest two points inside a cluster, it could be the mean of a ...

Davies–Bouldin Index

The Davies–Bouldin index (DBI), introduced by David L. Davies and Donald W. Bouldin in 1979, is a metric for evaluating clustering algorithms. This is an internal evaluation scheme, where the validation of how well the clustering has been done is made using quantities and features inherent to the dataset. This has a drawback that a good value reported by this method does not imply the best information retrieval.

Preliminaries. Given $n$-dimensional points, let $C_i$ be a cluster of data points. Let $X_j$ be an $n$-dimensional feature vector assigned to cluster $C_i$.

$$S_i = \left( \frac{1}{T_i} \sum_{j=1}^{T_i} \left\| X_j - A_i \right\|_p^q \right)^{1/q}$$

Here $A_i$ is the centroid of $C_i$ and $T_i$ is the size of the cluster $i$. $S_i$ is the $q$th root of the $q$th moment of the points in cluster $i$ about the mean. If $q = 1$ then $S_i$ is the average distance between the feature vectors in cluster $i$ and the centroid of the cluster. Usually the value of $p$ is 2, which makes the dista ...
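The scatter term $S_i$ can be sketched as below. This covers only the within-cluster quantity defined in the excerpt, not the full Davies–Bouldin index; the function name `cluster_scatter`, the tuple-based point format, and the defaults `p=2`, `q=1` are illustrative assumptions.

```python
def cluster_scatter(points, p=2, q=1):
    """S_i: the q-th root of the mean q-th power of the p-norm distance
    between each point of a cluster and the cluster centroid.

    `points` is a non-empty list of equal-length coordinate tuples.
    """
    size = len(points)  # T_i
    dim = len(points[0])

    # Centroid A_i: coordinate-wise mean of the cluster's points.
    centroid = [sum(pt[k] for pt in points) / size for k in range(dim)]

    def p_norm_distance(pt):
        total = sum(abs(pt[k] - centroid[k]) ** p for k in range(dim))
        return total ** (1 / p)

    moment = sum(p_norm_distance(pt) ** q for pt in points) / size
    return moment ** (1 / q)


square = [(0.0, 0.0), (0.0, 2.0), (2.0, 0.0), (2.0, 2.0)]
print(cluster_scatter(square))            # Euclidean distance, q = 1
print(cluster_scatter(square, p=1, q=1))  # Manhattan distance, q = 1
```

With `q=1` the result is the average distance to the centroid, as stated in the text; other values of `p` and `q` change the distance norm and the moment respectively.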

Rand Index

The RAND Corporation (from the phrase "research and development") is an American nonprofit global policy think tank created in 1948 by Douglas Aircraft Company to offer research and analysis to the United States Armed Forces. It is financed by the U.S. government and private endowment, corporations, universities and private individuals. The company assists other governments, international organizations, private companies and foundations with a host of defense and non-defense issues, including healthcare. RAND aims for interdisciplinary and quantitative problem solving by translating theoretical concepts from formal economics and the physical sciences into novel applications in other areas, using applied science and operations research. Overview RAND has approximately 1,850 employees. Its American locations include: Santa Monica, California (headquarters); Arlington, Virginia; Pittsburgh, Pennsylvania; and Boston, Massachusetts. The RAND Gulf States Policy Institute has an ...

Goodman And Kruskal's Lambda

In probability theory and statistics, Goodman & Kruskal's lambda ($\lambda$) is a measure of proportional reduction in error in cross tabulation analysis. For any sample with a nominal independent variable and dependent variable (or ones that can be treated nominally), it indicates the extent to which the modal categories and frequencies for each value of the independent variable differ from the overall modal category and frequency, i.e., for all values of the independent variable together. $\lambda$ is defined by the equation

$$\lambda = \frac{\varepsilon_1 - \varepsilon_2}{\varepsilon_1},$$

where $\varepsilon_1$ is the overall non-modal frequency, and $\varepsilon_2$ is the sum of the non-modal frequencies for each value of the independent variable. Values for lambda range from zero (no association between independent and dependent variables) to one (perfect association).

Weaknesses. Although Goodman and Kruskal's lambda is a simple way to assess the association between variables, it yields a value of 0 (no association) whenever ...
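A minimal sketch of the computation follows, assuming a table of counts in which each row corresponds to one value of the independent variable and each column to one category of the dependent variable. That layout and the function name `goodman_kruskal_lambda` are illustrative assumptions.

```python
def goodman_kruskal_lambda(table):
    """Goodman and Kruskal's lambda for a table of counts.

    Rows: values of the independent variable.
    Columns: categories of the dependent variable.
    """
    n = sum(sum(row) for row in table)
    col_totals = [sum(col) for col in zip(*table)]

    # eps1: overall non-modal frequency of the dependent variable.
    eps1 = n - max(col_totals)
    # eps2: sum of the non-modal frequencies within each row.
    eps2 = sum(sum(row) - max(row) for row in table)

    if eps1 == 0:
        raise ValueError("lambda is undefined: all cases fall in one category")
    return (eps1 - eps2) / eps1


table = [[30, 10],
         [10, 30]]
print(goodman_kruskal_lambda(table))
```

For this table $\varepsilon_1 = 80 - 40 = 40$ and $\varepsilon_2 = 10 + 10 = 20$, so knowing the row halves the number of prediction errors made by always guessing the modal category.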

Uncertainty Coefficient

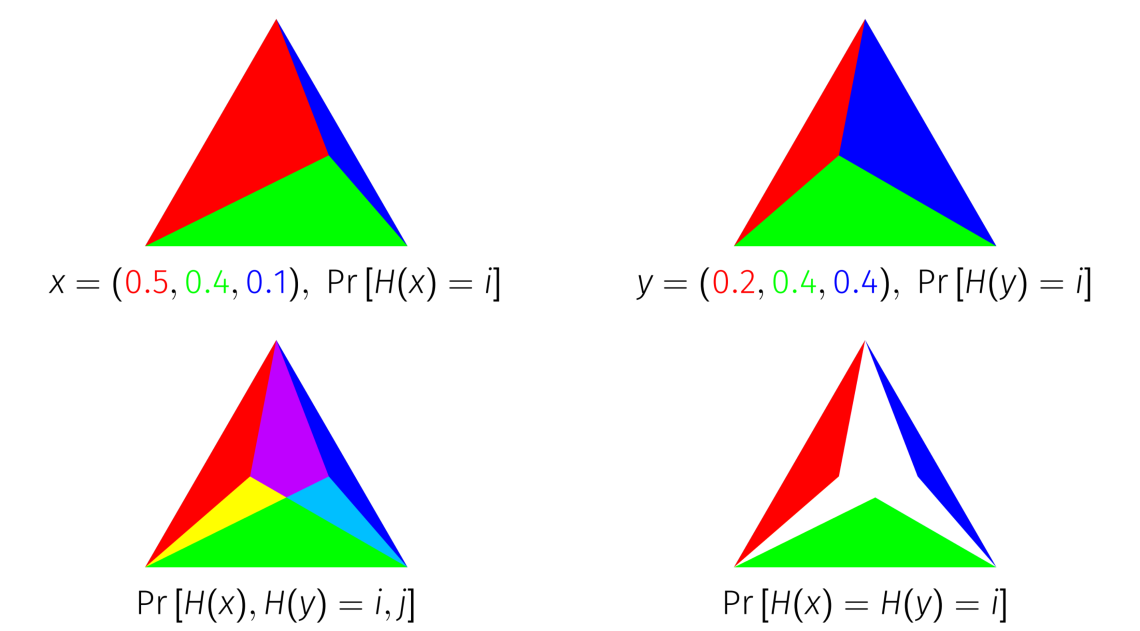

In statistics, the uncertainty coefficient, also called proficiency, entropy coefficient or Theil's U, is a measure of nominal association. It was first introduced by Henri Theil and is based on the concept of information entropy.

Definition. Suppose we have samples of two discrete random variables, $X$ and $Y$. By constructing the joint distribution, $P_{X,Y}(x, y)$, from which we can calculate the conditional distributions, $P_{X|Y}(x|y) = P_{X,Y}(x, y)/P_Y(y)$ and $P_{Y|X}(y|x) = P_{X,Y}(x, y)/P_X(x)$, and calculating the various entropies, we can determine the degree of association between the two variables. The entropy of a single distribution is given as

$$H(X) = -\sum_x P_X(x) \log P_X(x),$$

while the conditional entropy is given as

$$H(X|Y) = -\sum_{x,y} P_{X,Y}(x, y) \log P_{X|Y}(x|y).$$

The uncertainty coefficient or proficiency is defined as

$$U(X|Y) = \frac{H(X) - H(X|Y)}{H(X)} = \frac{I(X;Y)}{H(X)},$$

and tells us: given $Y$, what fraction of the bits of $X$ can we predict? In this case we can think of $X$ as containing the total information, and of $Y$ as allowing one to pr ...
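The definition can be sketched with empirical frequencies in place of the true distributions. This is an illustrative plug-in estimate, not a reference implementation; the function name `uncertainty_coefficient` and the paired-sequence input are assumptions of the sketch.

```python
import math
from collections import Counter


def uncertainty_coefficient(xs, ys):
    """U(X|Y) = (H(X) - H(X|Y)) / H(X), estimated from paired samples."""
    n = len(xs)
    x_counts = Counter(xs)
    y_counts = Counter(ys)
    joint_counts = Counter(zip(xs, ys))

    # H(X) from the empirical marginal distribution of X.
    h_x = -sum((c / n) * math.log(c / n) for c in x_counts.values())
    if h_x == 0:
        raise ValueError("U(X|Y) is undefined when X takes a single value")

    # H(X|Y) = -sum_{x,y} P(x, y) * log P(x | y), with P(x | y) = count(x, y) / count(y).
    h_x_given_y = -sum(
        (c / n) * math.log(c / y_counts[y])
        for (_, y), c in joint_counts.items()
    )
    return (h_x - h_x_given_y) / h_x


xs = [0, 1, 2, 3]
ys = [0, 0, 1, 1]
print(uncertainty_coefficient(xs, ys))  # Y narrows X down to two of four values
print(uncertainty_coefficient(ys, xs))  # X determines Y completely
```

The two calls give different values, which shows that the coefficient is not symmetric in its arguments: it measures how much of the first variable's entropy is explained by the second.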