|

Large Language Models

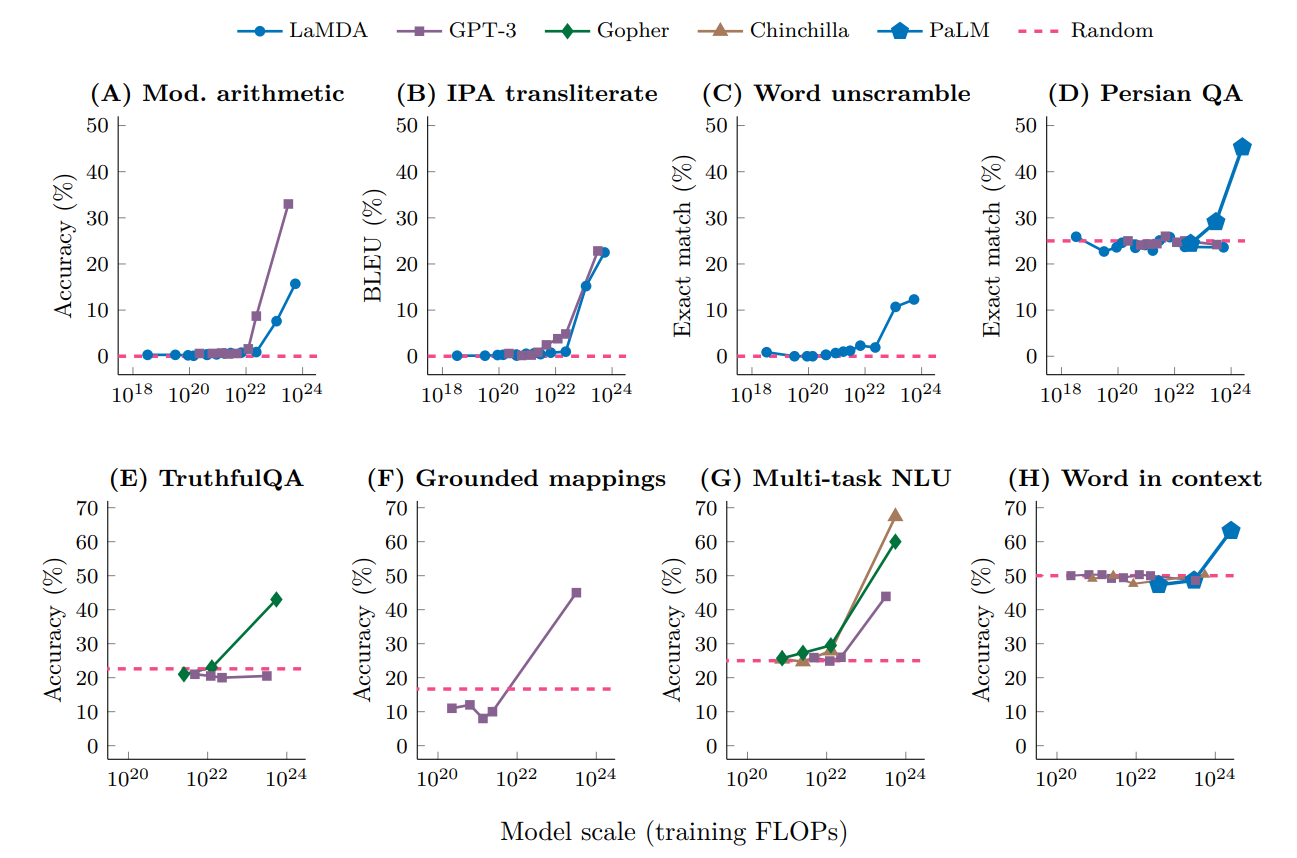

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks. Properties Though the term ''large language model'' has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-siz ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Language Model

A language model is a probability distribution over sequences of words. Given any sequence of words of length , a language model assigns a probability P(w_1,\ldots,w_m) to the whole sequence. Language models generate probabilities by training on text corpora In linguistics, a corpus (plural ''corpora'') or text corpus is a language resource consisting of a large and structured set of texts (nowadays usually electronically stored and processed). In corpus linguistics, they are used to do statistical ... in one or many languages. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity), language modeling faces the problem of assigning non-zero probabilities to linguistically valid sequences that may never be encountered in the training data. Several modelling approaches have been designed to surmount this problem, such as applying the Markov assumption or using neural architectures such as recurrent neural networks or ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unsupervised Learning

Unsupervised learning is a type of algorithm that learns patterns from untagged data. The hope is that through mimicry, which is an important mode of learning in people, the machine is forced to build a concise representation of its world and then generate imaginative content from it. In contrast to supervised learning where data is tagged by an expert, e.g. tagged as a "ball" or "fish", unsupervised methods exhibit self-organization that captures patterns as probability densities or a combination of neural feature preferences encoded in the machine's weights and activations. The other levels in the supervision spectrum are reinforcement learning where the machine is given only a numerical performance score as guidance, and semi-supervised learning where a small portion of the data is tagged. Neural networks Tasks vs. methods Neural network tasks are often categorized as discriminative (recognition) or generative (imagination). Often but not always, discriminative tas ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Part-of-speech Tagging

In corpus linguistics, part-of-speech tagging (POS tagging or PoS tagging or POST), also called grammatical tagging is the process of marking up a word in a text (corpus) as corresponding to a particular part of speech, based on both its definition and its context. A simplified form of this is commonly taught to school-age children, in the identification of words as nouns, verbs, adjectives, adverbs, etc. Once performed by hand, POS tagging is now done in the context of computational linguistics, using algorithms which associate discrete terms, as well as hidden parts of speech, by a set of descriptive tags. POS-tagging algorithms fall into two distinctive groups: rule-based and stochastic. E. Brill's tagger, one of the first and most widely used English POS-taggers, employs rule-based algorithms. Principle Part-of-speech tagging is harder than just having a list of words and their parts of speech, because some words can represent more than one part of speech at different times, ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Named-entity Recognition

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc. Most research on NER/NEE systems has been structured as taking an unannotated block of text, such as this one: And producing an annotated block of text that highlights the names of entities: In this example, a person name consisting of one token, a two-token company name and a temporal expression have been detected and classified. State-of-the-art NER systems for English produce near-human performance. For example, the best system entering MUC-7 scored 93.39% of F-measure while human annotators scored 97.60% and 96.95%. Named-entity recognition platforms Notable NER platforms include ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sentiment Analysis

Sentiment analysis (also known as opinion mining or emotion AI) is the use of natural language processing, text analysis, computational linguistics, and biometrics to systematically identify, extract, quantify, and study affective states and subjective information. Sentiment analysis is widely applied to voice of the customer materials such as reviews and survey responses, online and social media, and healthcare materials for applications that range from marketing to customer service to clinical medicine. With the rise of deep language models, such as RoBERTa, also more difficult data domains can be analyzed, e.g., news texts where authors typically express their opinion/sentiment less explicitly.Hamborg, Felix; Donnay, Karsten (2021)"NewsMTSC: A Dataset for (Multi-)Target-dependent Sentiment Classification in Political News Articles" "Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume" Examples The objective an ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GPT-3

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model that uses deep learning to produce human-like text. Given an initial text as prompt, it will produce text that continues the prompt. The architecture is a standard transformer network (with a few engineering tweaks) with the unprecedented size of 2048- token-long context and 175 billion parameters (requiring 800 GB of storage). The training method is "generative pretraining", meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks. It is the third-generation language prediction model in the GPT-n series (and the successor to GPT-2) created by OpenAI, a San Francisco-based artificial intelligence research laboratory. GPT-3, which was introduced in May 2020, and was in beta testing as of July 2020, is part of a trend in natural language processing (NLP) systems of pre-trained language representations. The quality of the t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Fine-tuning (machine Learning)

In deep learning, fine-tuning is an approach to transfer learning in which the weights of a pre-trained model are trained on new data. Fine-tuning can be done on the entire neural network, or on only a subset of its layers, in which case the layers that are not being fine-tuned are "frozen" (not updated during the backpropagation step). A model may also be augmented with "adapters" that consist of far fewer parameters than the original model, and fine-tuned in a parameter-efficient way by tuning the weights of the adapters and leaving the rest of the model's weights frozen. For some architectures, such as convolutional neural networks, it is common to keep the earlier layers (those closest to the input layer) frozen because they capture lower-level features, while later layers often discern high-level features that can be more related to the task that the model is trained on. Models that are pre-trained on large and general corpora are usually fine-tuned by reusing the model's ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Power Law

In statistics, a power law is a Function (mathematics), functional relationship between two quantities, where a Relative change and difference, relative change in one quantity results in a proportional relative change in the other quantity, independent of the initial size of those quantities: one quantity varies as a Exponentiation, power of another. For instance, considering the area of a square in terms of the length of its side, if the length is doubled, the area is multiplied by a factor of four. Empirical examples The distributions of a wide variety of physical, biological, and man-made phenomena approximately follow a power law over a wide range of magnitudes: these include the sizes of craters on the moon and of solar flares, the foraging pattern of various species, the sizes of activity patterns of neuronal populations, the frequencies of words in most languages, frequencies of family names, the species richness in clades of organisms, the sizes of power outages, volcanic ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Loss Function

In mathematical optimization and decision theory, a loss function or cost function (sometimes also called an error function) is a function that maps an event or values of one or more variables onto a real number intuitively representing some "cost" associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its opposite (in specific domains, variously called a reward function, a profit function, a utility function, a fitness function, etc.), in which case it is to be maximized. The loss function could include terms from several levels of the hierarchy. In statistics, typically a loss function is used for parameter estimation, and the event in question is some function of the difference between estimated and true values for an instance of data. The concept, as old as Laplace, was reintroduced in statistics by Abraham Wald in the middle of the 20th century. In the context of economics, for example, this i ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

BookCorpus

BookCorpus (also sometimes referred to as the Toronto Book Corpus) is a dataset consisting of the text of around 7,000 self-published books scraped from the indie ebook distribution website Smashwords. It was the main corpus used to train the initial GPT model by OpenAI, and has been used as training data for other early large language model A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 an ...s including Google's BERT. The dataset consists of around 985 million words, and the books that comprise it span a range of genres, including romance, science fiction, and fantasy. The corpus was introduced in a 2015 paper by researchers from the University of Toronto and MIT titled "Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books". T ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenAI

OpenAI is an artificial intelligence (AI) research laboratory consisting of the for-profit corporation OpenAI LP and its parent company, the non-profit OpenAI Inc. The company conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole. The organization was founded in San Francisco in late 2015 by Sam Altman, Elon Musk, and others, who collectively pledged US$1 billion. Musk resigned from the board in February 2018 but remained a donor. In 2019, OpenAI LP received a 1 billion investment from Microsoft. OpenAI is headquartered at the Pioneer Building in Mission District, San Francisco. History In December 2015, Sam Altman, Elon Musk, Greg Brockman, Reid Hoffman, Jessica Livingston, Peter Thiel, Amazon Web Services (AWS), Infosys, and YC Research announced the formation of OpenAI and pledged over 1 billion to the venture. The organization stated it would "freely collaborate" wi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

_-1.jpg)