
BookCorpus

BookCorpus (also sometimes referred to as the Toronto Book Corpus) is a dataset consisting of the text of around 7,000 self-published books scraped from the indie ebook distribution website Smashwords. It was the main corpus used to train the initial GPT model by OpenAI, and has been used as training data for other early large language models, including Google's BERT. The dataset consists of around 985 million words, and the books that comprise it span a range of genres, including romance, science fiction, and fantasy. The corpus was introduced in a 2015 paper by researchers from the University of Toronto and MIT titled "Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books". ...

Large Language Model

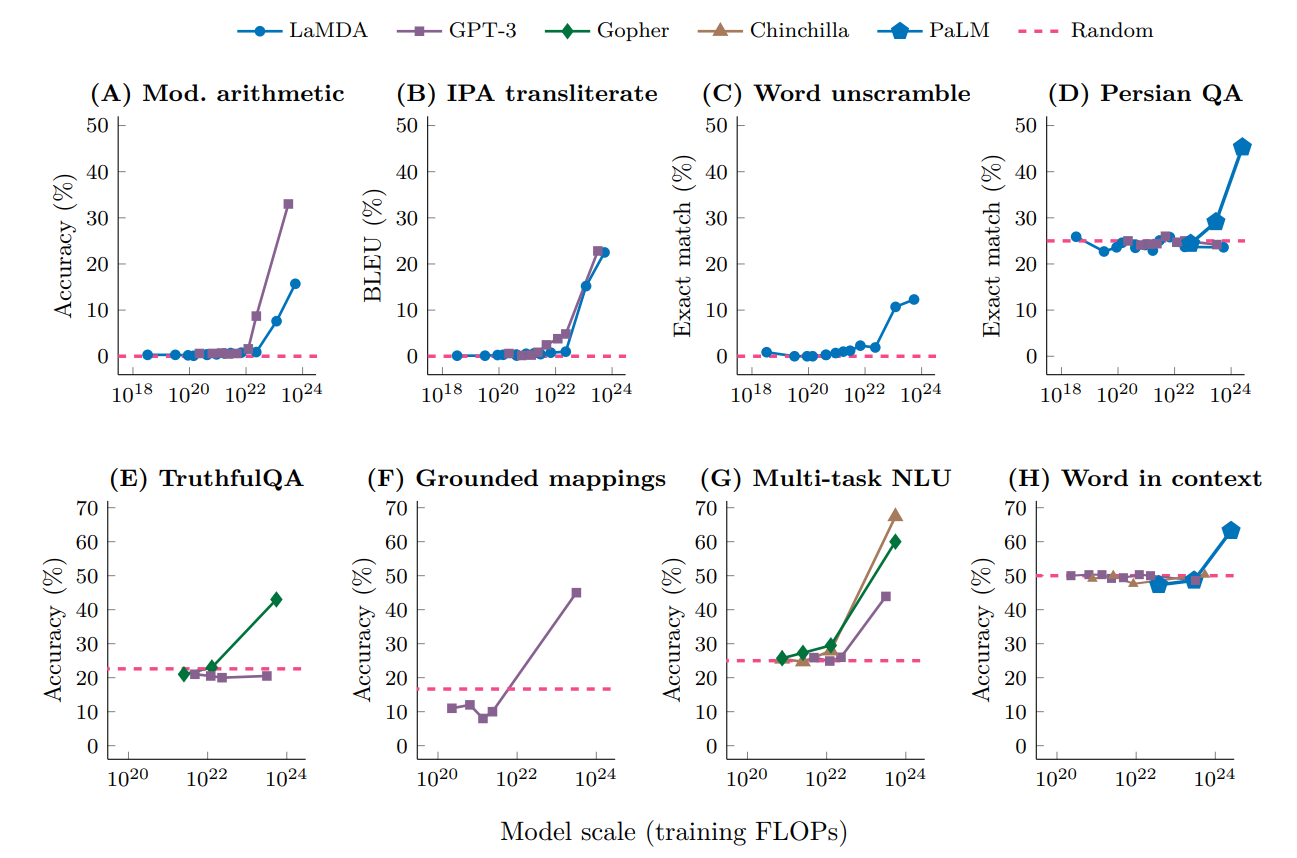

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties: Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks of which they are capable, seems to be a function of the amount of resources (data, parameter-si ...

Data Set

A data set (or dataset) is a collection of data. In the case of tabular data, a data set corresponds to one or more database tables, where every column of a table represents a particular variable, and each row corresponds to a given record of the data set in question. The data set lists values for each of the variables, such as the height and weight of an object, for each member of the data set. Data sets can also consist of a collection of documents or files. In the open data discipline, the data set is the unit used to measure the information released in a public open data repository. The European data.europa.eu portal aggregates more than a million data sets. Some other issues (real-time data sources, non-relational data sets, etc.) increase the difficulty of reaching a consensus about it.

Properties: Several characteristics define a data set's structure and properties. These include the number and types of the attributes or variables, and various statistical measures applica ...
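The row-and-column view of a tabular data set can be illustrated with a minimal Python sketch. The records, the variable names (`height_cm`, `weight_kg`), and the helper functions `column` and `describe` are all hypothetical and chosen only for illustration; each dictionary stands for one row (one member of the data set), and each key stands for one variable (one column).

```python
from statistics import mean

# Hypothetical records: each dict is one row, i.e. one member of the data set.
records = [
    {"name": "A", "height_cm": 150.0, "weight_kg": 50.0},
    {"name": "B", "height_cm": 160.0, "weight_kg": 60.0},
    {"name": "C", "height_cm": 170.0, "weight_kg": 70.0},
]


def column(rows, variable):
    """Return every value of one variable, i.e. one column of the table."""
    return [row[variable] for row in rows]


def describe(rows, variable):
    """Compute a few simple statistical measures for a numeric variable."""
    values = column(rows, variable)
    return {
        "count": len(values),
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
    }


summary = describe(records, "height_cm")
print(summary)
```

The number of keys per record and their types correspond to the number and types of variables mentioned above, while `describe` stands in for the statistical measures that can be applied to a column.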

Web Scraping

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. Web scraping software may directly access the World Wide Web using the Hypertext Transfer Protocol or a web browser. While web scraping can be done manually by a software user, the term typically refers to automated processes implemented using a bot or web crawler. It is a form of copying in which specific data is gathered and copied from the web, typically into a central local database or spreadsheet, for later retrieval or analysis. Scraping a web page involves fetching it and extracting data from it. Fetching is the downloading of a page (which a browser does when a user views a page). Therefore, web crawling is a main component of web scraping, to fetch pages for later processing. Once fetched, extraction can take place. The content of a page may be parsed, searched and reformatted, and its data copied into a spreadsheet or loaded into a database. Web scrapers typically ...
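The two steps described above, fetching and extracting, can be sketched with Python's standard library alone. This is an illustrative sketch under stated assumptions, not how any particular scraper works: the `LinkExtractor` class, the `fetch` and `extract` helpers, and the sample HTML are hypothetical, and the example runs the extraction step on an inline string so that no network access is needed.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag and the text of the <title> tag."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch(url, timeout=10):
    """Fetching step: download a page over HTTP and decode it as text."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract(html):
    """Extraction step: parse the page and return the data of interest."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}


# Hypothetical page content, used instead of a live fetch.
sample_html = """<html><head><title>Example page</title></head>
<body><a href="/books/1">Book one</a> <a href="/books/2">Book two</a></body></html>"""

result = extract(sample_html)
print(result)
```

In a real run, `extract(fetch(url))` would chain the two steps, and the resulting dictionary could then be written to a spreadsheet or database. Any such use should respect the target site's terms and crawl policies.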

Smashwords

Smashwords, Inc., based in Los Gatos, California, is a platform for self-publishing e-books. The company, founded by Mark Coker, began public operation in 2008. Authors and independent publishers upload their manuscripts as electronic files to the service, which converts them into multiple e-book formats for various devices. Once published, the books are made available for sale online at a price set by the author or independent publisher.

History: Coker began work on Smashwords in 2005 and officially launched the website in May 2008. Within the first seven months of launching, the website published 140 books ("Book Value", Forbes). Due to initially low profits, the firm switched to a distribution model that offered retailers a "30% commission in exchange for digital shelf space".

Text Corpus

In linguistics, a corpus (plural: corpora) or text corpus is a language resource consisting of a large and structured set of texts (nowadays usually electronically stored and processed). In corpus linguistics, they are used to do statistical analysis and hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory. In search technology, a corpus is the collection of documents which is being searched.

Overview: A corpus may contain texts in a single language (monolingual corpus) or text data in multiple languages (multilingual corpus). In order to make the corpora more useful for doing linguistic research, they are often subjected to a process known as annotation. An example of annotating a corpus is part-of-speech tagging, or POS-tagging, in which information about each word's part of speech (verb, noun, adjective, etc.) is added to the corpus in the form of tags. Another example is indicating the lemma (b ...
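As a rough illustration of part-of-speech annotation and of checking occurrences in a corpus, the following Python sketch stores a tiny hand-annotated corpus as lists of (word, tag) pairs. The sentences, the tag labels (`DET`, `NOUN`, `VERB`, `ADV`), and the helper functions are hypothetical; real corpora use established tagsets and are far larger.

```python
from collections import Counter

# Hypothetical hand-annotated corpus: one list of (word, tag) pairs per sentence.
tagged_corpus = [
    [("The", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("A", "DET"), ("dog", "NOUN"), ("barks", "VERB"), ("loudly", "ADV")],
]


def tag_frequencies(corpus):
    """Count how often each part-of-speech tag occurs in the corpus."""
    return Counter(tag for sentence in corpus for _, tag in sentence)


def words_with_tag(corpus, tag):
    """List the lowercased words annotated with the given tag, in corpus order."""
    return [
        word.lower()
        for sentence in corpus
        for word, word_tag in sentence
        if word_tag == tag
    ]


freqs = tag_frequencies(tagged_corpus)
nouns = words_with_tag(tagged_corpus, "NOUN")
print(freqs)
print(nouns)
```

Storing the tag next to each token keeps the annotation attached to the text, so frequency counts and simple rule checks become one-line queries over the corpus.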

OpenAI

OpenAI is an artificial intelligence (AI) research laboratory consisting of the for-profit corporation OpenAI LP and its parent company, the non-profit OpenAI Inc. The company conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole. The organization was founded in San Francisco in late 2015 by Sam Altman, Elon Musk, and others, who collectively pledged US$1 billion. Musk resigned from the board in February 2018 but remained a donor. In 2019, OpenAI LP received a US$1 billion investment from Microsoft. OpenAI is headquartered at the Pioneer Building in the Mission District, San Francisco.

History: In December 2015, Sam Altman, Elon Musk, Greg Brockman, Reid Hoffman, Jessica Livingston, Peter Thiel, Amazon Web Services (AWS), Infosys, and YC Research announced the formation of OpenAI and pledged over US$1 billion to the venture. The organization stated it would "freely collabora ...

BERT (Language Model)

Bidirectional Encoder Representations from Transformers (BERT) is a transformer-based machine learning technique for natural language processing (NLP) pre-training developed by Google. BERT was created and published in 2018 by Jacob Devlin and his colleagues from Google. In 2019, Google announced that it had begun leveraging BERT in its search engine, and by late 2020 it was using BERT in almost every English-language query. A 2020 literature survey concluded that "in a little over a year, BERT has become a ubiquitous baseline in NLP experiments", counting over 150 research publications analyzing and improving the model. The original English-language BERT has two models: (1) BERT-Base, with 12 encoders and 12 bidirectional self-attention heads, and (2) BERT-Large, with 24 encoders and 16 bidirectional self-attention heads. Both models are pre-trained from unlabeled data extracted from the BooksCorpus with 800M words and English Wikipedia with 2,500M words.

Architecture: BERT is ...

University of Toronto

The University of Toronto (UToronto or U of T) is a public research university in Toronto, Ontario, Canada, located on the grounds that surround Queen's Park. It was founded by royal charter in 1827 as King's College, the first institution of higher learning in Upper Canada. Originally controlled by the Church of England, the university assumed its present name in 1850 upon becoming a secular institution. As a collegiate university, it comprises eleven colleges each with substantial autonomy on financial and institutional affairs and significant differences in character and history. The university maintains three campuses, the oldest of which, St. George, is located in downtown Toronto. The other two satellite campuses are located in Scarborough and Mississauga. The University of Toronto offers over 700 undergraduate and 200 graduate programs. In all major rankings, the university consistently ranks in the top ten public universities in the world and as the top ...
