Large Language Models

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties

Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks of which they are capable, seem to be a function of the amount of resources (data, parameter count, computing power) devoted to them, in a way that does not depend on additional breakthroughs in design.

Though trained on simple tasks along the lines of predicting the next word in a sentence, neural language models with sufficient training and parameter counts are found to capture much of the syntax and semantics of human language. In addition, large language models demonstrate considerable general knowledge about the world, and are able to "memorize" a great quantity of facts during training. LLMs have been observed to confidently assert claims of fact which do not seem to be justified by their training data, a phenomenon which has been termed "hallucination".

Emergent abilities

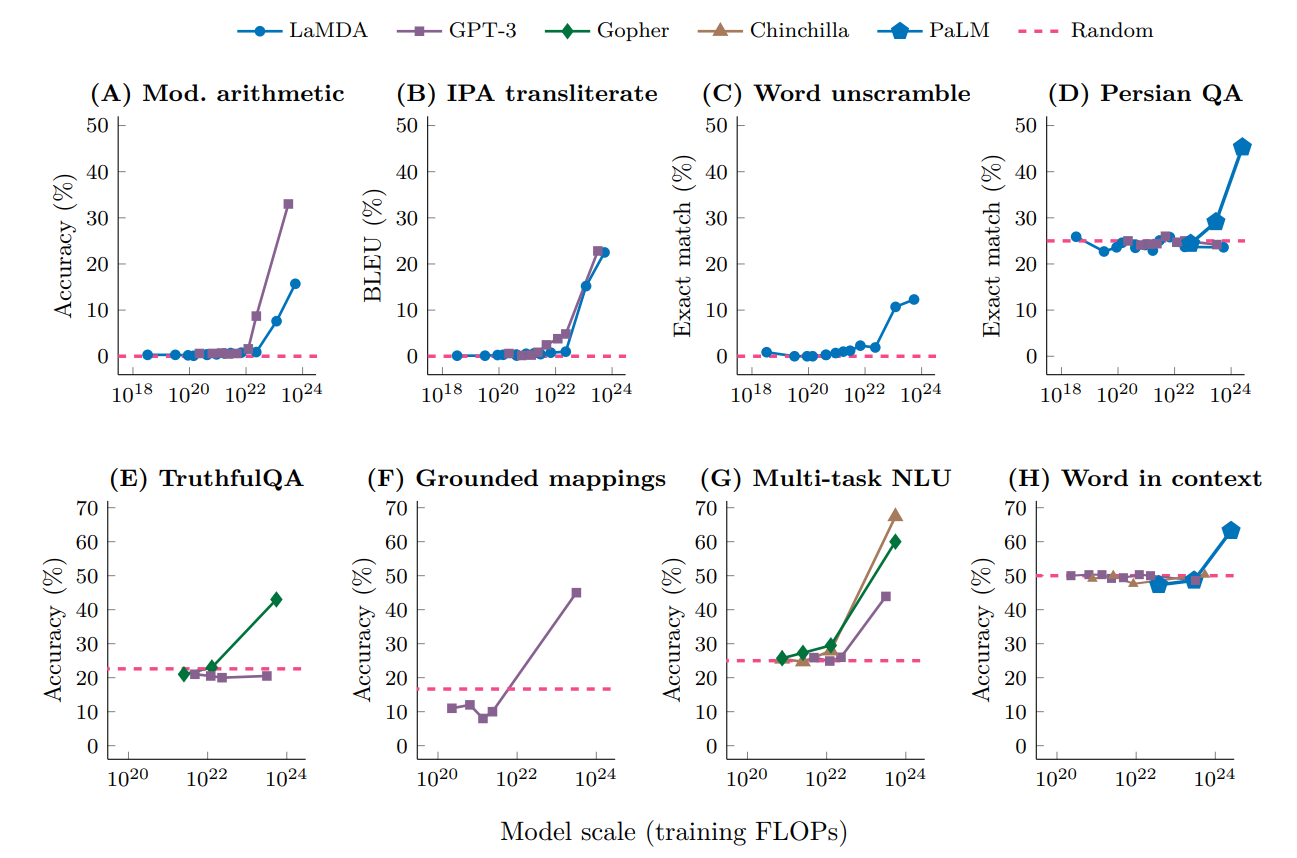

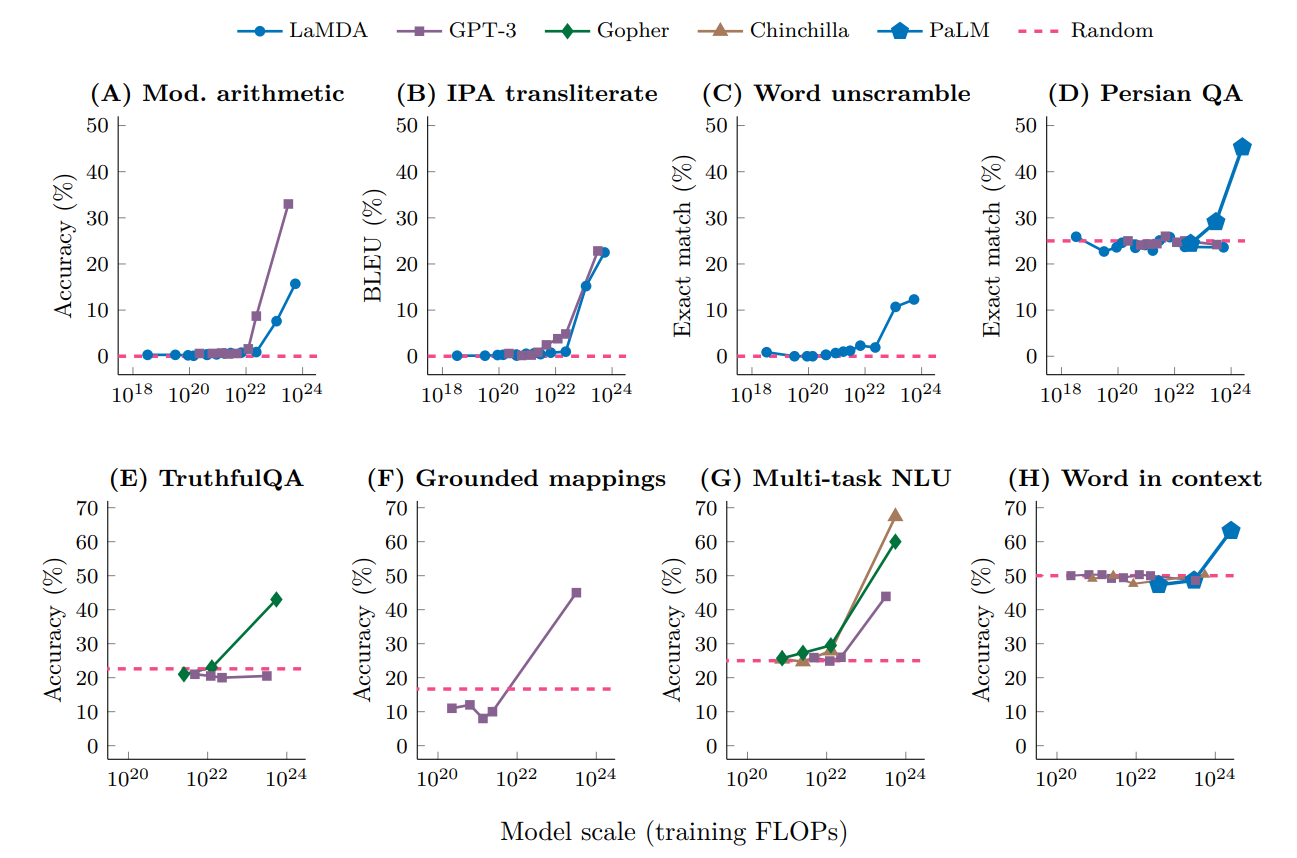

While the performance of large models on various tasks can generally be extrapolated from the performance of similar smaller models, sometimes large models undergo a "discontinuous phase shift" where the model suddenly acquires substantial abilities not seen in smaller models. These are known as "emergent abilities", and have been the subject of substantial study. Researchers note that such abilities "cannot be predicted simply by extrapolating the performance of smaller models". These abilities are discovered rather than programmed-in or designed, in some cases only after the LLM has been publicly deployed. Hundreds of emergent abilities have been described. Examples include multi-step arithmetic, taking college-level exams, identifying the intended meaning of a word, chain-of-thought prompting, decoding the International Phonetic Alphabet, unscrambling a word's letters, identifying offensive content in paragraphs of Hinglish (a combination of Hindi and English), and generating a similar English equivalent of Kiswahili proverbs.

Architecture and training

Large language models have most commonly used the transformer architecture, which, since 2018, has become the standard deep learning technique for sequential data (previously, recurrent architectures such as the LSTM were most common). LLMs are trained in an unsupervised manner on unannotated text. A left-to-right transformer is trained to maximize the probability assigned to the next word in the training data, given the previous context. Alternatively, an LLM may use a bidirectional transformer (as in the example of BERT), which assigns a probability distribution over words given access to both preceding and following context. In addition to the task of predicting the next word or "filling in the blanks", LLMs may be trained on auxiliary tasks which test their understanding of the data distribution, such as Next Sentence Prediction (NSP), in which pairs of sentences are presented and the model must predict whether they appear side-by-side in the training corpus.
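The left-to-right objective described above can be illustrated with a very small stand-in model. The sketch below is not a transformer: it assumes a count-based bigram model (each word conditioned only on the single previous word) with add-one smoothing, and the toy corpus and all function names are hypothetical. It only shows what "the probability assigned to the next word" means and how the average negative log-likelihood is computed from it.

```python
import math
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_prob(counts, vocab, prev, nxt):
    """P(next | prev) with add-one smoothing, so unseen pairs keep non-zero mass."""
    total = sum(counts[prev].values())
    return (counts[prev][nxt] + 1) / (total + len(vocab))


def average_nll(counts, vocab, tokens):
    """Average negative log-likelihood of each word given the word before it."""
    pairs = list(zip(tokens, tokens[1:]))
    log_probs = [math.log(next_word_prob(counts, vocab, p, n)) for p, n in pairs]
    return -sum(log_probs) / len(pairs)


# Toy corpus (an assumption for illustration, not real training data).
corpus = "the cat sat on the mat and the cat slept".split()
vocab = sorted(set(corpus))
counts = train_bigram(corpus)

uniform_nll = math.log(len(vocab))          # loss of a model that guesses uniformly
model_nll = average_nll(counts, vocab, corpus)
print(f"uniform: {uniform_nll:.3f}  bigram: {model_nll:.3f}")
```

Maximizing the probability of the next word is the same as minimizing this average negative log-likelihood. A neural model replaces the count table with a learned function of the whole preceding context.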

The earliest LLMs were trained on corpora having on the order of billions of words. The first model in OpenAI's GPT series was trained in 2018 on BookCorpus, consisting of 985 million words. In the same year, BERT was trained on a combination of BookCorpus and English Wikipedia, totalling 3.3 billion words. Since then, training corpora for LLMs have increased by orders of magnitude, reaching up to hundreds of billions or trillions of tokens.

LLMs are computationally expensive to train. A 2020 study estimated the cost of training a 1.5 billion parameter model (2 orders of magnitude smaller than the state of the art at the time) at $1.6 million. Advances in software and hardware have brought the cost substantially down, with a 2023 paper reporting a cost in the hundreds of thousands of dollars to train a 12 billion parameter model.

A 2020 analysis found that neural language models' capability (as measured by training loss) increased smoothly in a power law relationship with number of parameters, quantity of training data, and computation used for training. These relationships were tested over a wide range of values (up to seven orders of magnitude), and no attenuation of the relationship was observed at the highest end of the range (including for network sizes up to trillions of parameters).
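A power law appears as a straight line on log-log axes, which is how such relationships are usually fitted. The sketch below assumes a loss of the form a * N^b and recovers a and b by least squares in log space. The data points are synthetic, generated from arbitrary constants (a = 10, b = -0.07) chosen only for illustration. They are not the values reported by the 2020 analysis.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of log(y) = log(a) + b * log(x); returns (a, b)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((x - mean_x) ** 2 for x in log_x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(log_x, log_y))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b


# Synthetic (NOT measured) losses: loss = 10 * N^(-0.07) for five model sizes.
param_counts = [1e6, 1e7, 1e8, 1e9, 1e10]
losses = [10.0 * n ** -0.07 for n in param_counts]

a, b = fit_power_law(param_counts, losses)
predicted = a * 1e11 ** b   # extrapolate one order of magnitude beyond the data
print(f"a={a:.3f}  b={b:.3f}  predicted loss at 1e11 params: {predicted:.3f}")
```

Extrapolating the fitted line to a larger model size is what "no attenuation of the relationship" makes possible: the prediction stays useful only as long as the straight-line trend continues.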

Application to downstream tasks

Between 2018 and 2020, the standard method for harnessing an LLM for a specific natural language processing (NLP) task was to fine-tune the model with additional task-specific training. It has subsequently been found that more powerful LLMs such as GPT-3 can solve tasks without additional training via "prompting" techniques, in which the problem to be solved is presented to the model as a text prompt, possibly with some textual examples of similar problems and their solutions.

Fine-tuning

Fine-tuning is the practice of modifying an existing pretrained language model by training it (in a supervised fashion) on a specific task (e.g. sentiment analysis, named-entity recognition, or part-of-speech tagging). It is a form of transfer learning. It generally involves the introduction of a new set of weights connecting the final layer of the language model to the output of the downstream task. The original weights of the language model may be "frozen", such that only the new layer of weights connecting them to the output are learned during training. Alternatively, the original weights may receive small updates (possibly with earlier layers frozen).
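The "frozen" variant can be sketched in a few lines. The example below is a toy under stated assumptions, not a real language model: a fixed random projection (`frozen_weights`, hypothetical) stands in for the pretrained network, and a logistic-regression head is the "new set of weights" trained on a made-up binary task. Only the head and its bias receive gradient updates.

```python
import math
import random

random.seed(0)

# Hypothetical "pretrained" layer: a fixed 2 -> 3 projection standing in for the LM.
frozen_weights = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)]
snapshot = [row[:] for row in frozen_weights]   # copy, to confirm nothing changes


def features(x):
    """Frozen forward pass: tanh of a fixed linear map (never updated)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in frozen_weights]


def predict(head, bias, x):
    """New task head: logistic regression on top of the frozen features."""
    z = sum(h * f for h, f in zip(head, features(x))) + bias
    return 1.0 / (1.0 + math.exp(-z))


def loss(head, bias, data):
    """Mean binary cross-entropy of the head on (input, label) pairs."""
    total = 0.0
    for x, y in data:
        p = predict(head, bias, x)
        total -= math.log(p) if y == 1 else math.log(1.0 - p)
    return total / len(data)


def train_head(data, steps=200, lr=0.5):
    """Gradient descent on the head weights and bias only."""
    head, bias = [0.0, 0.0, 0.0], 0.0
    for _ in range(steps):
        grad_h, grad_b = [0.0, 0.0, 0.0], 0.0
        for x, y in data:
            err = predict(head, bias, x) - y      # d(loss)/d(logit)
            grad_h = [g + err * f for g, f in zip(grad_h, features(x))]
            grad_b += err
        head = [h - lr * g / len(data) for h, g in zip(head, grad_h)]
        bias -= lr * grad_b / len(data)
    return head, bias


# Made-up downstream task: label 1 for "positive" inputs, 0 for "negative" ones.
data = [([1.0, 0.5], 1), ([0.8, 1.0], 1), ([-1.0, -0.5], 0), ([-0.7, -1.0], 0)]
initial_loss = loss([0.0, 0.0, 0.0], 0.0, data)
head, bias = train_head(data)
final_loss = loss(head, bias, data)
print(f"loss before: {initial_loss:.3f}  after: {final_loss:.3f}")
```

Letting the original weights "receive small updates" would correspond to also applying gradient steps to `frozen_weights`, typically with a much smaller learning rate than the one used for the head.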

Prompting

In the prompting paradigm, popularized by GPT-3, the problem to be solved is formulated via a text prompt, which the model must solve by providing a completion (via inference). In "few-shot prompting", the prompt includes a small number of examples of similar (problem, solution) pairs. For example, a sentiment analysis task of labelling the sentiment of a movie review could be prompted as follows:

    Review: This movie stinks.
    Sentiment: negative

    Review: This movie is fantastic!
    Sentiment:

If the model outputs "positive", then it has correctly solved the task. In zero-shot prompting, no solved examples are provided. An example of a zero-shot prompt for the same sentiment analysis task would be "The sentiment associated with the movie review 'This movie is fantastic!' is". Few-shot performance of LLMs has been shown to achieve competitive results on NLP tasks, sometimes surpassing prior state-of-the-art fine-tuning approaches. Examples of such NLP tasks are translation, question answering, cloze tasks, unscrambling words, and using a novel word in a sentence. The creation and optimisation of such prompts is called prompt engineering.
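Both prompt styles are plain string construction, as the following minimal sketch shows. The function names `few_shot_prompt` and `zero_shot_prompt` are hypothetical helpers, and the line-per-field layout is one reasonable formatting choice rather than a required one. The resulting string would then be passed to whatever model is in use, which is outside the scope of this sketch.

```python
def few_shot_prompt(examples, query):
    """Join solved (review, sentiment) pairs, then append the unsolved query."""
    lines = []
    for review, sentiment in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {sentiment}")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")   # left open for the model to complete
    return "\n".join(lines)


def zero_shot_prompt(query):
    """Describe the task in words, with no solved examples."""
    return f"The sentiment associated with the movie review '{query}' is"


solved = [("This movie stinks.", "negative")]
prompt = few_shot_prompt(solved, "This movie is fantastic!")
zero_shot = zero_shot_prompt("This movie is fantastic!")
print(prompt)
print(zero_shot)
```

Adding more pairs to `solved` turns a one-shot prompt into a few-shot prompt without changing anything else.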

Instruction tuning

Instruction tuning is a form of fine-tuning designed to facilitate more natural and accurate zero-shot prompting interactions. Given a text input, a pretrained language model will generate a completion which matches the distribution of text on which it was trained. A naive language model given the prompt "Write an essay about the main themes of Hamlet." might provide a completion such as "A late penalty of 10% per day will be applied to submissions received after March 17." In instruction tuning, the language model is trained on many examples of tasks formulated as natural language instructions, along with appropriate responses. Various techniques for instruction tuning have been applied in practice. OpenAI's InstructGPT protocol involves supervised fine-tuning on a dataset of human-generated (prompt, response) pairs, followed by reinforcement learning from human feedback (RLHF), in which a reward function is learned from a dataset of human preferences. Another technique, "self-instruct", fine-tunes the language model on a training set of examples which are themselves generated by an LLM (bootstrapped from a small initial set of human-generated examples).
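Learning a reward function from human preferences is commonly framed as a pairwise comparison: the reward model should score the response a human preferred above the one they rejected. The sketch below assumes the widely used pairwise logistic loss, -log sigmoid(r_chosen - r_rejected). The function name and the scalar reward inputs are hypothetical, and the text above does not specify which loss any particular protocol uses, so treat this as one plausible formulation.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise logistic loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Written in softplus form so large reward gaps do not overflow math.exp.
    """
    diff = reward_chosen - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


# Hypothetical scalar rewards that a reward model might assign to two responses.
agrees_with_human = preference_loss(2.0, 0.0)      # preferred response scored higher
disagrees_with_human = preference_loss(0.0, 2.0)   # preferred response scored lower
print(f"agree: {agrees_with_human:.3f}  disagree: {disagrees_with_human:.3f}")
```

The loss is small when the reward model ranks the pair the same way the human did and grows roughly linearly with the gap when it ranks them the other way, which pushes the learned reward toward the human ordering.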

See also

* Foundation models
* Reinforcement learning from human feedback
