Fine-tuning (Machine Learning)

In deep learning, fine-tuning is an approach to transfer learning in which the weights of a pre-trained model are trained on new data. Fine-tuning can be done on the entire neural network, or on only a subset of its layers, in which case the layers that are not being fine-tuned are "frozen" (not updated during the backpropagation step). A model may also be augmented with "adapters" that consist of far fewer parameters than the original model, and fine-tuned in a parameter-efficient way by tuning the weights of the adapters and leaving the rest of the model's weights frozen. For some architectures, such as convolutional neural networks, it is common to keep the earlier layers (those closest to the input layer) frozen because they capture lower-level features, while later layers often discern high-level features that can be more related to the task that the model is trained on. Models that are pre-trained on large and general corpora are usually fine-tuned by reusing the model's ...
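The idea of freezing some weights while updating others can be illustrated with a minimal sketch. Everything here is hypothetical and chosen only for illustration: a toy two-parameter model `y_hat = w2 * (w1 * x)`, the names `fine_tune`, `pretrained` and `new_data`, and plain gradient descent on mean squared error. It is not how any particular library implements fine-tuning.

```python
def fine_tune(params, frozen, data, lr=0.01, epochs=200):
    """Gradient descent on y_hat = w2 * (w1 * x), skipping frozen parameters."""
    w = dict(params)  # copy so the pre-trained weights are left untouched
    for _ in range(epochs):
        grad = {"w1": 0.0, "w2": 0.0}
        for x, y in data:
            h = w["w1"] * x              # "earlier layer" output
            err = w["w2"] * h - y        # prediction error
            # Gradients of the mean squared error with respect to each weight
            grad["w1"] += 2 * err * w["w2"] * x / len(data)
            grad["w2"] += 2 * err * h / len(data)
        for name in w:
            if name not in frozen:       # frozen weights receive no update
                w[name] -= lr * grad[name]
    return w


# Hypothetical "pre-trained" weights and a small new dataset following y = 6x.
pretrained = {"w1": 2.0, "w2": 0.5}
new_data = [(1.0, 6.0), (2.0, 12.0), (3.0, 18.0)]

# Freeze the earlier layer (w1) and adapt only the later layer (w2).
tuned = fine_tune(pretrained, frozen={"w1"}, data=new_data)
```

With `w1` frozen, only `w2` moves toward the value that fits the new data, which mirrors the description above of keeping earlier layers fixed and training later ones.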

Deep Learning

Deep learning (also known as deep structured learning) is part of a broader family of machine learning methods based on artificial neural networks with representation learning. Learning can be supervised, semi-supervised or unsupervised. Deep-learning architectures such as deep neural networks, deep belief networks, deep reinforcement learning, recurrent neural networks, convolutional neural networks and Transformers have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, climate science, material inspection and board game programs, where they have produced results comparable to and in some cases surpassing human expert performance. Artificial neural networks (ANNs) were inspired by information processing and distr ...

Stable Diffusion

Stable Diffusion is a deep learning, text-to-image model released in 2022. It is primarily used to generate detailed images conditioned on text descriptions, though it can also be applied to other tasks such as inpainting, outpainting, and generating image-to-image translations guided by a text prompt. Stable Diffusion is a latent diffusion model, a kind of deep generative neural network developed by the CompVis group at LMU Munich. The model has been released by a collaboration of Stability AI, CompVis LMU, and Runway with support from EleutherAI and LAION. In October 2022, Stability AI raised US$101 million in a round led by Lightspeed Venture Partners and Coatue Management. Stable Diffusion's code and model weights have been released publicly, and it can run on most consumer hardware equipped with a modest GPU with at least 8 GB VRAM. This marked a departure from previous proprietary text-to-image models such as DALL-E and Midjourney which were accessible only via clo ...

Transfer Learning

Transfer learning (TL) is a research problem in machine learning (ML) that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem. For example, knowledge gained while learning to recognize cars could apply when trying to recognize trucks. This area of research bears some relation to the long history of psychological literature on transfer of learning, although practical ties between the two fields are limited. From the practical standpoint, reusing or transferring information from previously learned tasks for the learning of new tasks has the potential to significantly improve the sample efficiency of a reinforcement learning agent. History: In 1976, Stevo Bozinovski and Ante Fulgosi published a paper explicitly addressing transfer learning in neural network training. The paper gives a mathematical and geometrical model of transfer learning. In 1981, a report was given on the application of transfer learning in training ...

PaLM

Palm most commonly refers to:

* Palm of the hand, the central region of the front of the hand
* Palm plants, of family Arecaceae
  * List of Arecaceae genera
* Several other plants known as "palm"

Palm or Palms may also refer to:

Music

* Palm (band), an American rock band
* Palms (band), an American rock band featuring members of Deftones and Isis
  * Palms (Palms album), their 2013 album
* Palms (Thrice album), a 2018 album by American rock band Thrice

Businesses and organizations

* Palm, Inc., defunct American electronics manufacturer
* Palm Breweries, a Belgian company
* Palm Pictures, an American entertainment company
* Palm Records, a French jazz record label
* Palms Casino Resort, a hotel and casino in Las Vegas, U.S.
* The Palm (restaurant), New York City, U.S.
* Palm Cabaret and Bar, Puerto Vallarta, Jalisco, Mexico

Places, United States

* Midway, Lafayette County, Arkansas, also known as Palm
* Palm, Pennsylvania
* Palms, Los Angeles
  * Palms station
* Palms, ...

Google Cloud Platform

Google Cloud Platform (GCP), offered by Google, is a suite of cloud computing services that runs on the same infrastructure that Google uses internally for its end-user products, such as Google Search, Gmail, Google Drive, and YouTube. Alongside a set of management tools, it provides a series of modular cloud services including computing, data storage, data analytics and machine learning. Registration requires a credit card or bank account details. Google Cloud Platform provides infrastructure as a service, platform as a service, and serverless computing environments. In April 2008, Google announced App Engine, a platform for developing and hosting web applications in Google-managed data centers, which was the first cloud computing service from the company. The service became generally available in November 2011. Since the announcement of App Engine, Google added multiple cloud services to the platform. Google Cloud Platform is a part of Google Cloud, which includes ...

Azure OpenAI Service

Azure may refer to:

Colour

* Azure (color), a hue of blue
  * Azure (heraldry)
  * Shades of azure, shades and variations

Arts and media

* Azure (Art Farmer and Fritz Pauer album), 1987
* Azure (Gary Peacock and Marilyn Crispell album), 2013
* Azure (design magazine), Toronto, Ontario
* Azure (heraldry), a blue tincture on flags or coats of arms
* Azure (magazine), a periodical on Jewish thought and identity
* Azure (painting), by Gustave Van de Woestijne
* "Azure" (song), by Duke Ellington
* "Azure", a song by the 3rd and the Mortal from the album Painting on Glass

Computing

* Microsoft Azure, a cloud computing platform
* Mozilla Azure, a graphics abstraction API

Places

* Azure, Alberta, a locality in Canada
* Azure, Montana, a census-designated place in the United States
* Azure Window, a former natural arch in Malta

Other uses

* Azure Ryder (born 1996), an Australian singer, songwriter, and musician
* Azure (barley), a malting barley variety
* B ...

Microsoft Azure

Microsoft Azure, often referred to as Azure, is a cloud computing platform operated by Microsoft for application management via data centers distributed around the world. Microsoft Azure has multiple capabilities such as software as a service (SaaS), platform as a service (PaaS) and infrastructure as a service (IaaS) and supports many different programming languages, tools, and frameworks, including both Microsoft-specific and third-party software and systems. Azure, announced at Microsoft's Professional Developers Conference (PDC) in October 2008, went by the internal project codename "Project Red Dog", and was formally released in February 2010 as Windows Azure, before being renamed Microsoft Azure on March 25, 2014. Services: Microsoft Azure uses large-scale virtualization at Microsoft data centers worldwide and offers more than 600 services. Compute services: virtual machines, infrastructure as a service (IaaS) allowing users to launch general-purpos ...

Large Language Model

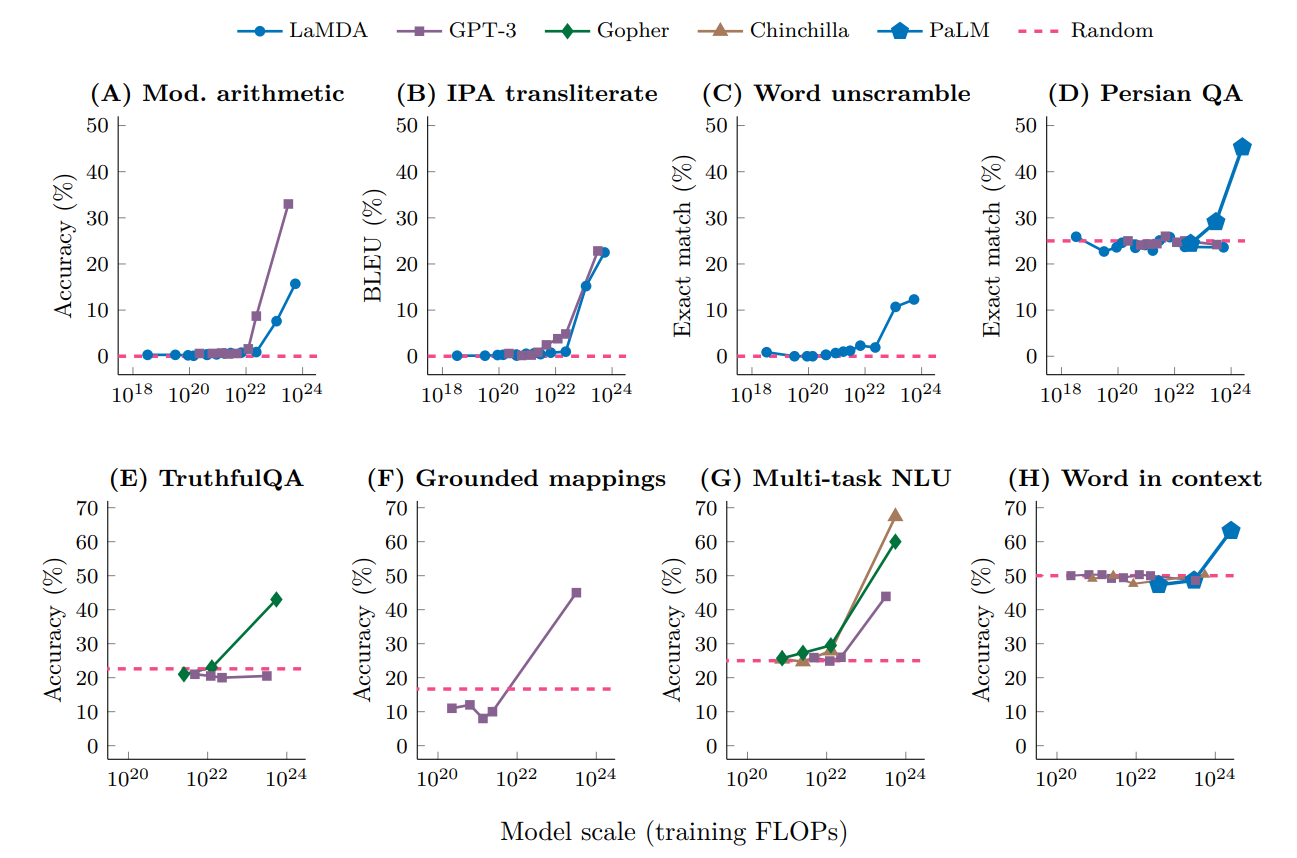

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks. Properties: Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks at which they are capable, seems to be a function of the amount of resources (data, parameter-si ...

OpenAI

OpenAI is an artificial intelligence (AI) research laboratory consisting of the for-profit corporation OpenAI LP and its parent company, the non-profit OpenAI Inc. The company conducts research in the field of AI with the stated goal of promoting and developing friendly AI in a way that benefits humanity as a whole. The organization was founded in San Francisco in late 2015 by Sam Altman, Elon Musk, and others, who collectively pledged US$1 billion. Musk resigned from the board in February 2018 but remained a donor. In 2019, OpenAI LP received a US$1 billion investment from Microsoft. OpenAI is headquartered at the Pioneer Building in Mission District, San Francisco. History: In December 2015, Sam Altman, Elon Musk, Greg Brockman, Reid Hoffman, Jessica Livingston, Peter Thiel, Amazon Web Services (AWS), Infosys, and YC Research announced the formation of OpenAI and pledged over US$1 billion to the venture. The organization stated it would "freely collabora ...

Language Modeling

A language model is a probability distribution over sequences of words. Given any sequence of words of length $m$, a language model assigns a probability $P(w_1,\ldots,w_m)$ to the whole sequence. Language models generate probabilities by training on text corpora in one or many languages. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity), language modeling faces the problem of assigning non-zero probabilities to linguistically valid sequences that may never be encountered in the training data. Several modelling approaches have been designed to surmount this problem, such as applying the Markov assumption or using neural architectures such as recurrent neural networks or transformers. Language models are useful for a variety of problems in computational linguistics, from initial applications in speech recognition to ensure nonsensical (i.e. low-probability) word sequences are not predicted, to wider use in machine tr ...
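As a rough illustration of assigning a probability to a whole word sequence, the sketch below trains a bigram model, which applies a first-order Markov assumption (each word depends only on the previous one). The function names `train_bigram` and `sequence_probability`, the `<s>` and `</s>` boundary tokens, the toy corpus, and the use of add-one smoothing to keep unseen bigrams at non-zero probability are all assumptions made for this example and are not taken from the text above.

```python
from collections import Counter


def train_bigram(sentences):
    """Count bigrams and their left-hand contexts from whitespace-tokenized text."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens)
        contexts.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    return contexts, bigrams, vocab


def sequence_probability(sentence, model):
    """Product of add-one-smoothed bigram probabilities over the sequence."""
    contexts, bigrams, vocab = model
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, cur in zip(tokens, tokens[1:]):
        # Add-one smoothing: unseen pairs still get a small non-zero probability
        prob *= (bigrams[(prev, cur)] + 1) / (contexts[prev] + len(vocab))
    return prob


model = train_bigram(["the cat sat", "the dog sat"])
seen = sequence_probability("the cat sat", model)
unseen = sequence_probability("dog the cat", model)
```

Here `seen` scores a sentence from the toy corpus and `unseen` scores a word order that never occurred in it. Smoothing keeps `unseen` above zero while still ranking it below `seen`.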