|

Coscheduling

Coscheduling is the principle for concurrent systems of scheduling related processes to run on different processors at the same time (in parallel). There are various specific implementations to realize this. If an application consists of a collection of processes working closely together, and if some but not all of the processes are scheduled for execution, the executing processes may attempt to communicate with those that are not executing, which will cause them to block. Eventually the other processes will be scheduled for execution, but by this time the situation may be reversed so that these processes also block waiting for interactions with others. As a result, the application makes progress at the rate of at most one interprocess interaction per time slice, and will have low throughput Network throughput (or just throughput, when in context) refers to the rate of message delivery over a communication channel in a communication network, such as Ethernet or packet r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Gang Scheduling

In computer science, gang scheduling is a scheduling algorithm for parallel systems that schedules related threads or processes to run simultaneously on different processors. Usually these will be threads all belonging to the same process, but they may also be from different processes, where the processes could have a producer-consumer relationship or come from the same MPI program. Gang scheduling is used to ensure that if two or more threads or processes communicate with each other, they will all be ready to communicate at the same time. If they were not gang-scheduled, then one could wait to send or receive a message to another while it is sleeping, and vice versa. When processors are over-subscribed and gang scheduling is not used within a group of processes or threads which communicate with each other, each communication event could suffer the overhead of a context switch. Gang scheduling is based on a data structure called the Ousterhout matrix. In this matrix each row repr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrent System

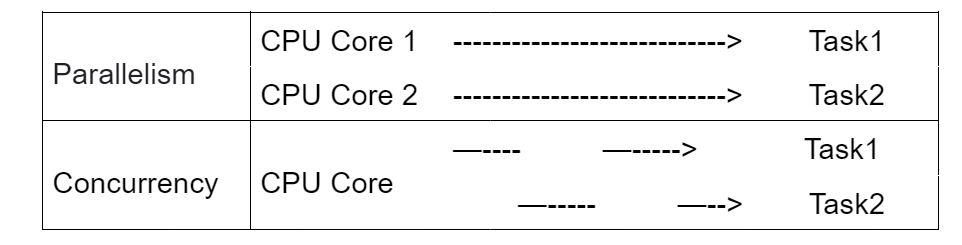

Concurrency refers to the ability of a system to execute multiple tasks through simultaneous execution or time-sharing (context switching), sharing resources and managing interactions. Concurrency improves responsiveness, throughput, and scalability in modern computing, including: * Operating systems and embedded systems * Distributed systems, parallel computing, and high-performance computing * Database systems, web applications, and cloud computing Related concepts Concurrency is a broader concept that encompasses several related ideas, including: * Parallelism (simultaneous execution on multiple processing units). Parallelism executes tasks independently on multiple CPU cores. Concurrency allows for multiple ''threads of control'' at the program level, which can use parallelism or time-slicing to perform these tasks. Programs may exhibit parallelism only, concurrency only, both parallelism and concurrency, neither. * Multi-threading and multi-processing (shared syste ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Scheduling (computing)

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows. The scheduling activity is carried out by a mechanism called a scheduler. Schedulers are often designed so as to keep all computer resources busy (as in load balancing), allow multiple users to share system resources effectively, or to achieve a target quality-of-service. Scheduling is fundamental to computation itself, and an intrinsic part of the execution model of a computer system; the concept of scheduling makes it possible to have computer multitasking with a single central processing unit (CPU). Goals A scheduler may aim at one or more goals, for example: * maximizing '' throughput'' (the total amount of work completed per time unit); * minimizing '' wait time'' (time from work becoming ready until the first point it begins execution); * minimizing '' latency'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Process (computing)

In computing, a process is the Instance (computer science), instance of a computer program that is being executed by one or many thread (computing), threads. There are many different process models, some of which are light weight, but almost all processes (even entire virtual machines) are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions Concurrency (computer science), concurrently. While a computer program is a passive collection of Instruction set, instructions typically stored in a file on disk, a process is the execution of those instructions after being loaded from the disk into memory. Several processes may be associated with the same program; for example, opening up several instances of the same progra ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Interprocess Communication

In computer science, interprocess communication (IPC) is the sharing of data between running processes in a computer system. Mechanisms for IPC may be provided by an operating system. Applications which use IPC are often categorized as clients and servers, where the client requests data and the server responds to client requests. Many applications are both clients and servers, as commonly seen in distributed computing. IPC is very important to the design process for microkernels and nanokernels, which reduce the number of functionalities provided by the kernel. Those functionalities are then obtained by communicating with servers via IPC, leading to a large increase in communication when compared to a regular monolithic kernel. IPC interfaces generally encompass variable analytic framework structures. These processes ensure compatibility between the multi-vector protocols upon which IPC models rely. An IPC mechanism is either synchronous or asynchronous. Synchronization pri ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Time Slice

In computing, preemption is the act performed by an external scheduler — without assistance or cooperation from the task — of temporarily interrupting an executing task, with the intention of resuming it at a later time. This preemptive scheduler usually runs in the most privileged protection ring, meaning that interruption and then resumption are considered highly secure actions. Such changes to the currently executing task of a processor are known as context switching. User mode and kernel mode In any given system design, some operations performed by the system may not be preemptable. This usually applies to kernel functions and service interrupts which, if not permitted to run to completion, would tend to produce race conditions resulting in deadlock. Barring the scheduler from preempting tasks while they are processing kernel functions simplifies the kernel design at the expense of system responsiveness. The distinction between user mode and kernel mode, wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Throughput

Network throughput (or just throughput, when in context) refers to the rate of message delivery over a communication channel in a communication network, such as Ethernet or packet radio. The data that these messages contain may be delivered over physical or logical links, or through network nodes. Throughput is usually measured in bits per second (, sometimes abbreviated bps), and sometimes in packets per second ( or pps) or data packets per time slot. The system throughput or aggregate throughput is the sum of the data rates that are delivered over all channels in a network. Throughput represents digital bandwidth consumption. The throughput of a communication system may be affected by various factors, including the limitations of the underlying physical medium, available processing power of the system components, end-user behavior, etc. When taking various protocol overheads into account, the useful rate of the data transfer can be significantly lower than the maximum a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Latency (engineering)

Latency, from a general point of view, is a time delay between the Causality, cause and the effect of some physical change in the system being observed. Lag (video games), Lag, as it is known in Gaming culture, gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games. The original meaning of “latency”, as used widely in psychology, medicine and most other disciplines, derives from “latent”, a word of Latin origin meaning “hidden”. Its different and relatively recent meaning (this topic) of “lateness” or “delay” appears to derive from its superficial similarity to the word “late”, from the old English “laet”. Latency is physically a consequence of the limited velocity at which any Event (relativity), physical interaction can propagate. The magnitude of this velocity is always less than or equal to the speed of light. Therefore, every physical s ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |