Model selection is the task of selecting a model from among various candidates on the basis of a performance criterion to choose the best one.

In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor).

It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, it has been said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis".

Model selection may also refer to the problem of selecting a few representative models from a large set of computational models for the purpose of decision making or optimization under uncertainty.

In machine learning, algorithmic approaches to model selection include feature selection, hyperparameter optimization, and statistical learning theory.

Introduction

In its most basic forms, model selection is one of the fundamental tasks of scientific inquiry. Determining the principle that explains a series of observations is often linked directly to a mathematical model predicting those observations. For example, when Galileo performed his inclined plane experiments, he demonstrated that the motion of the balls fitted the parabola predicted by his model.

Of the countless number of possible mechanisms and processes that could have produced the data, how can one even begin to choose the best model? The mathematical approach commonly taken decides among a set of candidate models; this set must be chosen by the researcher. Often simple models such as polynomials are used, at least initially. The literature emphasizes the importance of choosing models based on sound scientific principles, such as understanding of the phenomenological processes or mechanisms (e.g., chemical reactions) underlying the data.

Once the set of candidate models has been chosen, the statistical analysis allows us to select the best of these models. What is meant by ''best'' is controversial. A good model selection technique will balance goodness of fit with simplicity. More complex models will be better able to adapt their shape to fit the data (for example, a fifth-order polynomial can exactly fit six points), but the additional parameters may not represent anything useful. (Perhaps those six points are really just randomly distributed about a straight line.) Goodness of fit is generally determined using a likelihood ratio approach, or an approximation of this, leading to a chi-squared test. The complexity is generally measured by counting the number of parameters in the model.
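The six-point remark above can be checked numerically. The following is a minimal sketch, assuming NumPy is available; the data values and the helper name `rss` are hypothetical and chosen only for illustration. It fits a straight line and a fifth-order polynomial to six points scattered about a line and compares the residual sums of squares.

```python
import numpy as np

# Six hypothetical points scattered about the straight line y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
noise = np.array([0.3, -0.2, 0.1, -0.4, 0.2, -0.1])
y = 2.0 * x + 1.0 + noise


def rss(degree):
    """Residual sum of squares of a least-squares polynomial fit."""
    coeffs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coeffs, x)
    return float(np.sum(residuals ** 2))


rss_line = rss(1)     # 2 parameters: some residual error remains
rss_quintic = rss(5)  # 6 parameters: the curve passes through all six points

print(f"degree 1: RSS = {rss_line:.4f}")
print(f"degree 5: RSS = {rss_quintic:.2e}")
```

The quintic reaches a residual of essentially zero, yet its four extra coefficients only reproduce the noise, which is why goodness of fit alone cannot serve as the selection criterion.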

Model selection techniques can be considered as estimators of some physical quantity, such as the probability of the model producing the given data. The bias and variance are both important measures of the quality of this estimator; efficiency is also often considered.

A standard example of model selection is that of curve fitting, where, given a set of points and other background knowledge (e.g. points are a result of i.i.d. samples), we must select a curve that describes the function that generated the points.
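As an illustration of curve fitting as a model selection problem, the sketch below chooses a polynomial degree using two information criteria. It rests on several assumptions that are not from the text: the data are synthetic (a quadratic plus Gaussian noise), the errors are treated as i.i.d. Gaussian so that, up to an additive constant, AIC is n ln(RSS/n) + 2k and BIC is n ln(RSS/n) + k ln(n), and k counts only the polynomial coefficients. The names `criteria`, `best_aic`, and `best_bic` are hypothetical.

```python
import numpy as np

# Synthetic data: a quadratic trend plus Gaussian noise (assumed for illustration).
rng = np.random.default_rng(0)
n = 50
x = np.linspace(-3.0, 3.0, n)
y = 1.0 + 2.0 * x - 0.5 * x**2 + rng.normal(0.0, 0.5, size=n)


def criteria(degree):
    """Return (AIC, BIC) of a least-squares polynomial fit, up to a constant."""
    coeffs = np.polyfit(x, y, degree)
    rss = float(np.sum((y - np.polyval(coeffs, x)) ** 2))
    k = degree + 1                   # number of fitted coefficients
    fit_term = n * np.log(rss / n)   # -2 * max log-likelihood, up to a constant
    return fit_term + 2 * k, fit_term + k * np.log(n)


degrees = list(range(0, 7))
scores = [criteria(d) for d in degrees]
best_aic = degrees[int(np.argmin([s[0] for s in scores]))]
best_bic = degrees[int(np.argmin([s[1] for s in scores]))]

print("degree chosen by AIC:", best_aic)
print("degree chosen by BIC:", best_bic)
```

Because ln(n) exceeds 2 for this sample size, BIC penalizes each extra coefficient more heavily than AIC and therefore never selects a higher degree than AIC does on the same data.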

Two directions of model selection

There are two main objectives in inference and learning from data. One is scientific discovery, also called statistical inference: understanding the underlying data-generating mechanism and interpreting the nature of the data. The other is predicting future or unseen observations, also called statistical prediction. For the second objective, the data scientist is not necessarily concerned with an accurate probabilistic description of the data. Of course, one may also be interested in both directions. In line with the two objectives, model selection can also have two directions: model selection for inference and model selection for prediction. The first direction is to identify the best model for the data, which will preferably provide a reliable characterization of the sources of uncertainty for scientific interpretation. For this goal, it is important that the selected model is not too sensitive to the sample size. Accordingly, an appropriate notion for evaluating model selection is selection consistency, meaning that the most robust candidate will be consistently selected given sufficiently many data samples.
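Selection consistency can be stated compactly in symbols. The notation here is an assumption introduced for illustration and does not come from the text: \hat{M}_n denotes the model selected from n samples, and M^{*} denotes the candidate the procedure is meant to identify.

```latex
\lim_{n \to \infty} \Pr\bigl( \hat{M}_n = M^{*} \bigr) = 1
```

Read this way, consistency is a statement about the selection procedure as the sample grows, not about the quality of any single fitted model.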

The second direction is to choose a model as machinery to offer excellent predictive performance. For the latter, however, the selected model may simply be the lucky winner among a few close competitors, yet the predictive performance can still be the best possible. If so, the model selection is fine for the second goal (prediction), but the use of the selected model for insight and interpretation may be severely unreliable and misleading. Moreover, for very complex models selected this way, even predictions may be unreasonable for data only slightly different from those on which the selection was made.

Methods to assist in choosing the set of candidate models

* Data transformation (statistics)

* Exploratory data analysis

* Model specification

* Scientific method

Criteria

Below is a list of criteria for model selection. The most commonly used information criteria are (i) the Akaike information criterion and (ii) the Bayes factor and/or the Bayesian information criterion (which to some extent approximates the Bayes factor).

* Akaike information criterion (AIC), a measure of the goodness of fit of an estimated statistical model

* Bayes factor

* Bayesian information criterion (BIC), also known as the Schwarz information criterion, a statistical criterion for model selection

* Bridge criterion (BC), a statistical criterion that can attain the better performance of AIC and BIC regardless of whether the model specification is appropriate

* Cross-validation

* Deviance information criterion (DIC), another Bayesian oriented model selection criterion

* False discovery rate

* Focused information criterion (FIC), a selection criterion sorting statistical models by their effectiveness for a given focus parameter

* Hannan–Quinn information criterion, an alternative to the Akaike and Bayesian criteria

* Kashyap information criterion (KIC), a powerful alternative to AIC and BIC because it uses the Fisher information matrix

* Likelihood-ratio test

* Mallows's ''Cp''

* Minimum description length (MDL)

* Minimum message length (MML)

* PRESS statistic, also known as the PRESS criterion

* Structural risk minimization

* Stepwise regression

* Watanabe–Akaike information criterion (WAIC), also called the widely applicable information criterion

* Extended Bayesian Information Criterion (EBIC), an extension of the ordinary Bayesian information criterion (BIC) for models with large parameter spaces

* Extended Fisher Information Criterion (EFIC) is a model selection criterion for linear regression models.

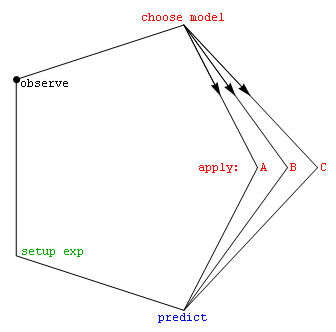

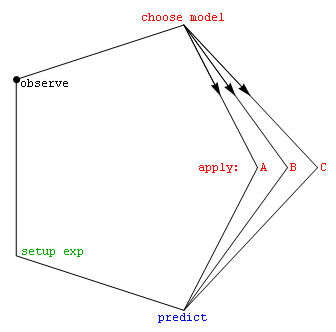

* Constrained Minimum Criterion (CMC), a frequentist method for regression model selection based on the following geometric observations. In the parameter vector space of the full model, every vector represents a model. There exists a ball centered on the true parameter vector of the full model in which the true model is the smallest model (in norm). As the sample size goes to infinity, the MLE converges to the true parameter vector and thus pulls the shrinking likelihood ratio confidence region toward it, so the confidence region will be inside the ball with probability tending to one. The CMC selects the smallest model in this region. When the region captures the true parameter vector, the CMC selection is the true model. Hence, the probability that the CMC selection is the true model is greater than or equal to the confidence level.

Among these criteria, cross-validation is typically the most accurate, and computationally the most expensive, for supervised learning problems.
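The cost of cross-validation comes from refitting every candidate once per fold. The following is a minimal sketch of k-fold cross-validation over polynomial degrees, assuming NumPy only; the synthetic data (a sine curve plus noise), the choice of five folds, and the names `cv_mse`, `cv_scores`, and `best_degree` are hypothetical and not taken from the text.

```python
import numpy as np

# Synthetic data: a sine curve plus Gaussian noise (assumed for illustration).
rng = np.random.default_rng(1)
n = 60
x = rng.uniform(-3.0, 3.0, size=n)
y = np.sin(x) + rng.normal(0.0, 0.2, size=n)

# One fixed random partition into 5 folds, shared by all candidate models.
folds = np.array_split(rng.permutation(n), 5)


def cv_mse(degree):
    """Mean held-out squared error of a polynomial fit, averaged over folds."""
    errors = []
    for held_out in folds:
        train = np.setdiff1d(np.arange(n), held_out)
        coeffs = np.polyfit(x[train], y[train], degree)  # refit without the fold
        pred = np.polyval(coeffs, x[held_out])
        errors.append(np.mean((y[held_out] - pred) ** 2))
    return float(np.mean(errors))


degrees = list(range(0, 8))
cv_scores = {d: cv_mse(d) for d in degrees}
best_degree = min(cv_scores, key=cv_scores.get)

print("cross-validated MSE by degree:", cv_scores)
print("selected degree:", best_degree)
```

With 8 candidate degrees and 5 folds this performs 40 fits, compared with 8 fits for a criterion such as AIC or BIC that is computed from a single fit per candidate.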

See also

* All models are wrong

* Analysis of competing hypotheses

* Automated machine learning (AutoML)

* Bias-variance dilemma

* Feature selection

* Freedman's paradox

* Grid search

* Identifiability analysis

* Log-linear analysis

* Model identification

* Occam's razor

* Optimal design

* Parameter identification problem

* Scientific modelling

* Statistical model validation

* Stein's paradox
