VGGNet

The VGGNets are a series of convolutional neural networks (CNNs) developed by the Visual Geometry Group (VGG) at the University of Oxford. The VGG family includes several configurations of different depths, denoted by "VGG" followed by the number of weight layers. The most common are VGG-16 (13 convolutional layers + 3 fully connected layers, 138M parameters) and VGG-19 (16 + 3, 144M parameters). The VGG family was widely applied across computer vision. An ensemble model of VGGNets achieved state-of-the-art results in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2014. It was used as a baseline comparison in the ResNet paper for image classification, as the network in the Fast Region-based CNN for object detection, and as a base network in neural style transfer. The series was historically important as an early influential model designed by co ...
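
The parameter counts quoted above can be checked with a little arithmetic. The sketch below is not taken from the snippet: it assumes the standard VGG layout (3×3 convolutions with biases, a 224×224 RGB input, five 2×2 pooling stages, and fully connected layers of width 4096, 4096, and 1000). The function name `count_vgg_params` and the channel lists are illustrative.

```python
# Assumed layout (standard VGG configuration, not stated in the text above):
# 3x3 convolutions with biases, 224x224 RGB input, five 2x2 max-pool stages,
# and fully connected layers of width 4096, 4096, 1000.
VGG16_CONV = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512]
VGG19_CONV = [64, 64, 128, 128, 256, 256, 256, 256,
              512, 512, 512, 512, 512, 512, 512, 512]


def count_vgg_params(conv_channels, in_channels=3, kernel=3,
                     final_spatial=7, fc_widths=(4096, 4096, 1000)):
    """Count weights and biases for a VGG-style stack of layers."""
    total = 0
    prev = in_channels
    for out in conv_channels:
        # kernel*kernel*prev weights per output channel, plus one bias each.
        total += kernel * kernel * prev * out + out
        prev = out
    # Five 2x2 poolings reduce 224 to 224 / 2**5 = 7, so the flattened
    # feature vector has 7 * 7 * 512 entries.
    prev = final_spatial * final_spatial * prev
    for width in fc_widths:
        total += prev * width + width
        prev = width
    return total


if __name__ == "__main__":
    print(f"VGG-16: {count_vgg_params(VGG16_CONV):,}")  # VGG-16: 138,357,544
    print(f"VGG-19: {count_vgg_params(VGG19_CONV):,}")  # VGG-19: 143,667,240
```

Under these assumptions the totals round to the 138M and 144M figures given above, and most of the parameters sit in the first fully connected layer rather than in the convolutions.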

DenseNet

A residual neural network (also referred to as a residual network or ResNet) is a deep learning architecture in which the layers learn residual functions with reference to the layer inputs. It was developed in 2015 for image recognition, and won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) of that year. As a point of terminology, "residual connection" refers to the specific architectural motif of $x \mapsto f(x) + x$, where $f$ is an arbitrary neural network module. The motif had been used previously (see §History for details). However, the publication of ResNet made it widely popular for feedforward networks, appearing in neural networks that are seemingly unrelated to ResNet. The residual connection stabilizes the training and convergence of deep neural networks with hundreds of layers, and is a common motif in deep neural networks, such as transformer models (e.g., BERT (language ...
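
The residual connection motif can be sketched in a few lines. This is a minimal illustration, not ResNet itself: vectors are plain Python lists, and `residual` and `scale_by` are hypothetical helper names, with `scale_by` standing in for an arbitrary module f.

```python
def residual(f):
    """Wrap a module f so that the wrapped module computes f(x) + x."""
    def block(x):
        fx = f(x)
        if len(fx) != len(x):
            # The skip connection adds elementwise, so shapes must match.
            raise ValueError("f must preserve the shape of x")
        return [a + b for a, b in zip(fx, x)]
    return block


def scale_by(w):
    """Toy stand-in for a neural network module: multiply every entry by w."""
    return lambda x: [w * v for v in x]


if __name__ == "__main__":
    # When f outputs zeros, the block reduces to the identity map.
    identity_like = residual(scale_by(0.0))
    print(identity_like([1.0, -2.0, 3.0]))  # [1.0, -2.0, 3.0]

    # In general the block returns the input plus a learned correction.
    print(residual(scale_by(2.0))([1.0, 2.0]))  # [3.0, 6.0]
```

The first call shows why the motif is convenient for deep stacks: a block whose module contributes nothing passes its input through unchanged.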

ImageNet Large Scale Visual Recognition Challenge

The ImageNet project is a large visual database designed for use in visual object recognition software research. More than 14 million images have been hand-annotated by the project to indicate what objects are pictured, and in at least one million of the images bounding boxes are also provided. ImageNet contains more than 20,000 categories, with a typical category, such as "balloon" or "strawberry", consisting of several hundred images. The database of annotations of third-party image URLs is freely available directly from ImageNet, though the actual images are not owned by ImageNet. Since 2010, the ImageNet project has run an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), where software programs compete to correctly classify and detect objects and scenes. The challenge uses a "trimmed" list of one thousand non-overlapping classes. History: AI researcher Fei-Fei Li began working ...

AlexNet

AlexNet is a convolutional neural network architecture developed for image classification tasks, notably achieving prominence through its performance in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). It classifies images into 1,000 distinct object categories and is regarded as the first widely recognized application of deep convolutional networks in large-scale visual recognition. Developed in 2012 by Alex Krizhevsky in collaboration with Ilya Sutskever and his Ph.D. advisor Geoffrey Hinton at the University of Toronto, the model contains 60 million parameters and 650,000 neurons. The original paper's primary result was that the depth of the model was essential for its high performance; this depth was computationally expensive, but was made feasible by the use of graphics processing units (GPUs) during training. The three formed team SuperVision and submitted AlexNet to the ImageNet Large Scale Visual Recognition Challenge on September 30, 2012. The network ...

Caffe (software)

Caffe (Convolutional Architecture for Fast Feature Embedding) is a deep learning framework, originally developed at University of California, Berkeley. It is open source, under a BSD license. It is written in C++, with a Python interface. History: Yangqing Jia created the Caffe project during his PhD at UC Berkeley, while working in the lab of Trevor Darrell. The first version, called "DeCAF", made its first appearance in spring 2013 when it was used for the ILSVRC challenge (later called ImageNet). The library was named Caffe and released to the public in December 2013. It reached end-of-support in 2018. It is hosted on GitHub. Features: Caffe supports many different types of deep learning architectures geared towards image classification and image segmentation. It supports CNN, RCNN, LSTM and fully-connected neural network designs. Caffe supports GPU- and CPU-based acceleration computational kernel libraries such as Nvidia cuDNN and Intel MKL. Applications: Caffe is b ...

Deep Learning Software

Deep or The Deep may refer to: Places. United States: Deep Creek (Appomattox River tributary), Virginia; Deep Creek (Great Salt Lake), Idaho and Utah; Deep Creek (Mahantango Creek tributary), Pennsylvania; Deep Creek (Mojave River tributary), California; Deep Creek (Pine Creek tributary), Pennsylvania; Deep Creek (Soque River tributary), Georgia; Deep Creek (Texas), a tributary of the Colorado River; Deep Creek (Washington), a tributary of the Spokane River; Deep River (Indiana), a tributary of the Little Calumet River; Deep River (Iowa), a minor tributary of the English River; Deep River (North Carolina); Deep River (Washington), a minor tributary of the Columbia River; Deep Voll Brook, New Jersey, also known as Deep Brook. Elsewhere: Deep Creek (Bahamas); Deep Creek (Melbourne, Victoria), Australia, a tributary of the Maribyrnong River; Deep River (Western Australia). People: Deep (given name); Deep (rapper), Punjabi rapper from Houston, Texas ...

Data Parallelism

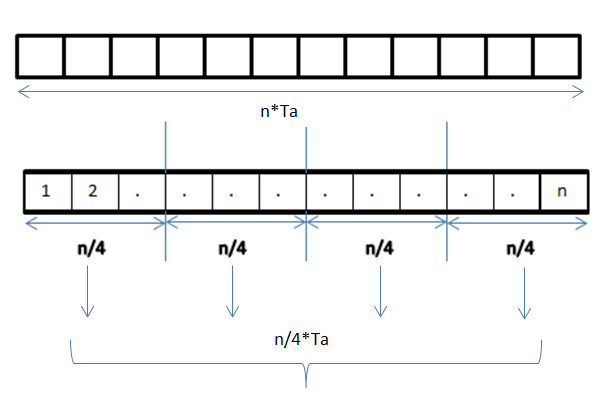

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied to regular data structures like arrays and matrices by working on each element in parallel. It contrasts with task parallelism, another form of parallelism. A data parallel job on an array of $n$ elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is $T_a$ time units. In the case of sequential execution, the time taken by the process will be $n \times T_a$ time units, as it sums up all the elements of the array. On the other hand, if we execute this job as a data parallel job on 4 processors, the time taken reduces to $(n/4) \times T_a$ + merging overhead time units. Ignoring the merging overhead, parallel execution results in a speedup of 4 over sequential execution. The Locality of reference, ...
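
The array-sum example above can be sketched as follows. This is only an illustration of the pattern, under stated assumptions: `split_evenly`, `parallel_sum`, and `estimated_time` are hypothetical names, and the workers are threads from Python's standard `concurrent.futures` module, which in CPython demonstrate the split-and-merge structure without necessarily delivering the 4x speedup of truly parallel processors.

```python
from concurrent.futures import ThreadPoolExecutor


def split_evenly(data, parts):
    """Split data into `parts` contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + base + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, workers=4):
    """Sum each chunk on its own worker, then merge the partial sums."""
    chunks = split_evenly(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)  # the merging step


def estimated_time(n, t_a, processors=1, merge_overhead=0.0):
    """Cost model from the text: (n / processors) * T_a + merging overhead."""
    return (n / processors) * t_a + merge_overhead


if __name__ == "__main__":
    print(parallel_sum(list(range(1, 101))))  # 5050
    sequential = estimated_time(1000, 1.0)
    parallel = estimated_time(1000, 1.0, processors=4)
    print(sequential / parallel)  # 4.0 when the merging overhead is zero
```

Passing a nonzero `merge_overhead` to `estimated_time` shows how the achievable speedup falls below 4 once the merging step is counted.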

Pooling Layer

In neural networks, a pooling layer is a kind of network layer that downsamples and aggregates information that is dispersed among many vectors into fewer vectors. It has several uses. It removes redundant information, reducing the amount of computation and memory required, makes the model more robust to small variations in the input, and increases the receptive field of neurons in later layers in the network. Convolutional neural network pooling: Pooling is most commonly used in convolutional neural networks (CNNs). Below is a description of pooling in 2-dimensional CNNs. The generalization to n dimensions is immediate. As notation, we consider a tensor $x \in \R^{H \times W \times C}$, where H is height, W is width, and C is the number of channels. A pooling layer outputs a tensor $y \in \R^{H' \times W' \times C'}$. We define two variables f, s called "filter size" (aka "kernel size") and "stride". Sometimes, it is necessary to use a different filter size and stride for horizontal and vertical directions. In such cases, we ...
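
To make the notation concrete, here is a minimal sketch of one common pooling variant, max pooling. The snippet above is cut off before it defines any particular pooling operation, so the details are assumptions: no padding, the same filter size f and stride s in both directions, channels pooled independently, and a nested-list tensor indexed as `x[h][w][c]`. The function name `max_pool2d` is illustrative.

```python
def max_pool2d(x, f, s):
    """Max-pool an H x W x C nested list with filter size f and stride s.

    Assumes no padding, so the output has
    H' = (H - f) // s + 1 rows and W' = (W - f) // s + 1 columns,
    with the channel count unchanged.
    """
    H, W, C = len(x), len(x[0]), len(x[0][0])
    H_out = (H - f) // s + 1
    W_out = (W - f) // s + 1
    y = []
    for i in range(H_out):
        row = []
        for j in range(W_out):
            # Take the maximum over the f x f window, separately per channel.
            row.append([
                max(x[i * s + di][j * s + dj][c]
                    for di in range(f) for dj in range(f))
                for c in range(C)
            ])
        y.append(row)
    return y


if __name__ == "__main__":
    # A 4 x 4 x 1 input holding the values 1..16 in row-major order.
    x = [[[4 * r + c + 1] for c in range(4)] for r in range(4)]
    print(max_pool2d(x, f=2, s=2))  # [[[6], [8]], [[14], [16]]]
```

With f = 2 and s = 2 the windows do not overlap, so the 4×4 input is reduced to 2×2 while each output entry keeps the largest value of its window.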

Inceptionv3

Inception is a family of convolutional neural networks (CNNs) for computer vision, introduced by researchers at Google in 2014 as GoogLeNet (later renamed Inception v1). The series was historically important as an early CNN that separates the stem (data ingest), body (data processing), and head (prediction), an architectural design that persists in all modern CNNs. Version history. Inception v1: In 2014, a team at Google developed the GoogLeNet architecture, an instance of which won the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). The name came from the LeNet of 1998, since both LeNet and GoogLeNet are CNNs. They also called it "Inception" after a "we need to go deeper" internet meme, a phrase from the film Inception (2010). Because more versions were released later, the original Inception architecture was renamed again as "Inception v1". The models and the code were released under the Apache 2.0 license on GitHub. The Inception v1 architecture is a ...